If you are a Data Science enthusiast or a professional looking to advance your career, you might have heard of Apache Spark. Apache Spark is a robust framework that enables you to process large-scale data in a fast and distributed manner. But how well do you know Apache Spark? Are you ready to face the Apache Spark Interview Questions that challenge your skills and knowledge?

This blog will help you prepare for your Apache Spark Interview by providing you with the top 40 Apache Spark Interview Questions and Answers. These questions cover Apache Spark’s fundamental and advanced aspects, such as its core, SQL, streaming, MLlib, GraphX, architecture, components, features, operations, etc. By reading these Apache Spark Interview Questions and Answers, you will learn the concepts and best practices of Apache Spark and gain confidence and clarity for your Apache Spark Interview.

Table of Contents

1) Common Apache Spark Interview Questions

a) How would you describe Apache Spark?

b) What are the main characteristics of Spark?

c) What is the function of a Spark Engine?

d) What are Actions in Spark, and what do they do?

e) In what ways does Apache Spark differ from MapReduce?

f) Why are Resilient Distributed Datasets important in Spark?

g) How does Spark handle low latency workloads such as graph processing and Machine Learning efficiently?

h) What method triggers automatic clean-ups in Spark to manage accumulated metadata?

i) How do you define a Parquet file, and what are its benefits?

j) What does shuffling mean in Spark, and when does it happen?

2) Conclusion

Common Apache Spark Interview Questions

Here are some of the most commonly asked Apache Spark Interview Questions:

1) How would you describe Apache Spark?

Apache Spark is an open-source, distributed computing framework that provides fast and scalable Data Processing and analytics. Spark supports multiple programming languages, such as Scala, Python, Java, and R, and offers various libraries for SQL, streaming, Machine Learning, graph analysis, and more. Spark runs on a cluster of machines, where it can parallelise and distribute the data and computation across multiple nodes.

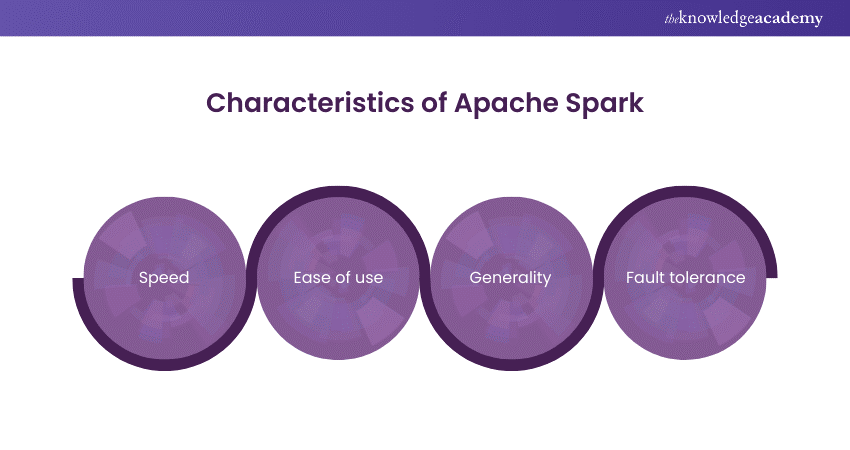

2) What are the main characteristics of Spark?

Some of the main characteristics of Spark are:

a) Speed: Spark can run some workloads up to 100 times faster than Hadoop MapReduce, thanks to its in-memory computation and optimised execution engine.

b) Ease of use: Spark provides a simple and expressive API that allows users to write concise and elegant code for Data Processing and analysis.

c) Generality: Spark supports various applications and data sources, such as batch processing, streaming, SQL, Machine Learning, graph analysis, text, images, etc.

d) Fault tolerance: Spark ensures high data availability and computation reliability by using resilient distributed datasets (RDDs) and lineage graphs.

3) What is the function of a Spark Engine?

The Spark Engine is the core component of Spark that manages the execution of tasks and jobs on the cluster. The Spark Engine consists of two main parts: the driver and the executors. The driver is the process that coordinates the overall execution of the application, while the executors are the processes that run the tasks assigned by the driver. The Spark Engine also interacts with the cluster manager, such as YARN, Mesos, or Kubernetes, to acquire and release resources for the application.

4) What are Actions in Spark, and what do they do?

Actions trigger the evaluation of an RDD or a DataFrame and return a result to the driver or write it to an external storage system. Actions are the final steps of a Spark application, where the actual computation takes place. Some examples of actions are:

a) collect(): returns all the elements of the RDD or DataFrame as an array to the driver.

b) count(): returns the number of elements in the RDD or DataFrame.

c) take(n): returns the first n elements of the RDD or DataFrame as an array to the driver.

d) saveAsTextFile(path): writes the elements of an RDD as text files under the specified path. DataFrames use the df.write API instead.

e) reduce(func): combines the elements of an RDD or Dataset using an associative and commutative binary function and returns the final result to the driver.
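As a rough illustration of the actions listed above, here is a minimal sketch. It assumes a local SparkSession created only for this example; the application name and the sample numbers are made up.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session, used only for illustration
val spark = SparkSession.builder().appName("actions-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Transformations only describe the computation; nothing runs yet
val numbers = sc.parallelize(1 to 10)
val squares = numbers.map(n => n * n)

// Each action below triggers a job and returns a value to the driver
val all: Array[Int] = squares.collect()   // every element
val total: Long = squares.count()         // number of elements
val firstThree = squares.take(3)          // first three elements
val sum: Int = squares.reduce(_ + _)      // associative, commutative combine
```

Note that collect() pulls the whole dataset onto the driver, so on large data take(n) or an aggregate such as count() is usually the safer choice.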

5) In what ways does Apache Spark differ from MapReduce?

Apache Spark differs from MapReduce in several ways, such as:

| Feature | Apache Spark | MapReduce |
| --- | --- | --- |
| Processing speed | Faster | Slower |
| Memory usage | In-memory processing | Disk-based processing |
| Fault tolerance | Built-in | Requires checkpointing and replication |
| Iterative processing | Supported | Not well-supported |
| Ease of use | Easier | More complex |
| Data processing model | Directed Acyclic Graph (DAG) | Map and Reduce |
| Supported languages | Scala, Java, Python, R, SQL | Java (other languages via wrappers) |

a) Data abstraction: Spark uses RDDs and DataFrames as the primary data abstraction, while MapReduce uses key-value pairs. RDDs and DataFrames allow users to manipulate the data more flexibly and expressively without worrying about low-level data partitioning and serialisation details.

b) Data Processing: Spark supports batch and streaming Data Processing, while MapReduce only supports batch processing. Spark can also perform iterative and interactive Data Processing, which is inefficient in MapReduce.

c) Data storage: Spark can store intermediate data in memory, which reduces the disk I/O and network overhead, while MapReduce always writes intermediate data to disk. Spark can also cache and persist data for reuse, which improves the performance of repeated queries and algorithms.

d) Programming model: Spark provides a rich and unified API that supports multiple languages, such as Scala, Python, Java, and R, and various libraries, such as SQL, streaming, Machine Learning, graph analysis, and more. MapReduce has a more limited and rigid API that only supports Java and a few other languages via wrappers.

6) Why are Resilient Distributed Datasets important in Spark?

Resilient Distributed Datasets (RDDs) are the fundamental data abstraction in Spark. RDDs are immutable, partitioned collections of data that are distributed across the cluster and can be operated on in parallel. RDDs are important in Spark because they:

a) Enable fault tolerance: RDDs can recover from failures by using lineage graphs, which track the dependencies and transformations of the data. If a partition of an RDD is lost, Spark can recompute it from its parent RDDs without replicating the data across the cluster.

b) Support lazy evaluation: RDDs are only computed when an action is performed on them, which allows Spark to optimise the execution plan and avoid unnecessary computation.

c) Allow user-defined functions: RDDs can apply user-defined functions to the data, which gives users more flexibility and control over the Data Processing logic.

d) Support multiple data sources: RDDs can be created from various data sources, such as HDFS, local files, databases, etc., or existing RDDs.
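Lazy evaluation and lineage can be observed directly. The sketch below is illustrative only: it assumes a local SparkSession, and it uses an accumulator purely as an instrument to count how often the map function runs.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("lineage-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Counts how often the map function actually runs
val calls = sc.longAccumulator("mapCalls")

val words = sc.parallelize(Seq("spark", "rdd", "lineage", "lazy"))
val longWordLengths = words.map { w => calls.add(1); w.length }.filter(_ > 3)

// Lazy evaluation: no job has run yet, so the function was never called
val callsBeforeAction: Long = calls.value

// The lineage Spark would replay to rebuild a lost partition
println(longWordLengths.toDebugString)

// The action triggers the computation
val result = longWordLengths.collect()
val callsAfterAction: Long = calls.value
```

The printed lineage lists the chain of parent RDDs, which is the information Spark uses to recompute a lost partition instead of replicating the data.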

7) How does Spark handle low latency workloads such as graph processing and Machine Learning efficiently?

Spark handles low latency workloads such as graph processing and Machine Learning efficiently by:

a) Using in-memory computation: Spark can store intermediate data in memory, which reduces the disk I/O and network overhead and improves the performance of iterative and interactive algorithms.

b) Providing specialised libraries: Spark offers various libraries for different domains, such as GraphX for graph processing, MLlib for Machine Learning, Spark SQL for structured and semi-structured data, etc. These libraries provide high-level APIs and optimised algorithms that simplify the development and execution of the applications.

c) Supporting broadcast variables and accumulators: These are shared variables that communicate information across the cluster. Broadcast variables distribute large read-only data to the executors, while accumulators aggregate results from the executors back to the driver.

8) What method triggers automatic clean-ups in Spark to manage accumulated metadata?

In Spark 1.x, automatic clean-ups were triggered by setting the spark.cleaner.ttl configuration parameter. This parameter sets a time-to-live (TTL) value in seconds: metadata and persisted RDDs older than that duration are forgotten. This frees up space and resources for the application and helps prevent memory leaks and performance degradation in long-running jobs. The parameter was removed in Spark 2.0; newer versions rely on the built-in context cleaner, which releases RDDs, shuffle data, and broadcast variables once they are no longer referenced.

9) How do you define a Parquet file, and what are its benefits?

A Parquet file is a columnar storage format designed for efficient and scalable Data Processing. A Parquet file stores the data in a compressed and encoded way, which reduces the storage space and improves the I/O performance. A Parquet file also preserves the data's schema and metadata, allowing Spark to optimise the query execution and push down the filters and projections to the file level. Some of the benefits of using Parquet files are:

a) Faster query performance: Parquet files can improve query performance by reducing the amount of data that needs to be read and processed. Parquet files can also leverage Spark's predicate pushdown and column pruning features, which can further reduce the data scan and computation.

b) Lower storage cost: Parquet files can reduce storage costs by compressing and encoding the data compactly. Parquet files can also use different compression algorithms for different columns, which can optimise the compression ratio and speed.

c) Compatibility and interoperability: Parquet files can support various data types and complex structures, such as nested and repeated fields, arrays, maps, etc. Parquet files can also be compatible and interoperable with different frameworks and tools, such as Spark, Hive, Impala, Presto, etc.
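To make the Parquet workflow concrete, here is a small sketch that writes a DataFrame to Parquet and reads back a filtered subset of columns. The temporary directory, column names, and sample rows are assumptions for illustration.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("parquet-sketch").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical output location inside a fresh temporary directory
val path = Files.createTempDirectory("parquet-sketch").resolve("people").toString

val people = Seq(("Alice", 25), ("Bob", 30), ("Charlie", 35)).toDF("name", "age")
people.write.parquet(path)

// The schema comes from the file itself; only the needed columns are read
val olderThan28 = spark.read.parquet(path).where($"age" > 28).select("name")

// The physical plan shows which filters were pushed down to the Parquet reader
olderThan28.explain()

val names = olderThan28.as[String].collect().sorted
```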

10) What does shuffling mean in Spark, and when does it happen?

Shuffling means redistributing the data across the partitions of an RDD or a DataFrame based on a certain key or condition. Shuffling happens when an operation requires data to be grouped or aggregated by a key, such as join, groupBy, reduceByKey, etc. Shuffling can be expensive and time-consuming because it involves disk I/O, network I/O, and serialisation and deserialisation of the data. Therefore, it is advisable to minimise the amount of shuffling and optimise the shuffling performance by tuning parameters such as spark.shuffle.file.buffer, spark.shuffle.compress, spark.shuffle.spill.compress, spark.default.parallelism, etc.
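A shuffle is easy to spot in practice. The following sketch, which assumes a local SparkSession and made-up sales data, aggregates by key with reduceByKey; this operation combines values inside each partition before moving data across the network.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("shuffle-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Made-up data spread over two partitions
val sales = sc.parallelize(
  Seq(("apples", 3), ("pears", 2), ("apples", 4), ("pears", 1)),
  numSlices = 2
)

// Records with the same key must meet in one partition, which requires a shuffle
val totals = sales.reduceByKey(_ + _)

// The lineage marks the stage boundary introduced by the shuffle
println(totals.toDebugString)

val result = totals.collect().toMap
```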

11) What are the steps to convert a Spark RDD into a DataFrame?

There are two main steps to convert a Spark RDD into a DataFrame:

a) Define a schema: A schema is a description of the structure and data types of the DataFrame. A schema can be defined in different ways, such as using a case class, a StructType, or an implicit conversion.

b) Apply the schema: The schema can be applied to the RDD using the toDF() method or the createDataFrame() method. An RDD of JSON strings can also be parsed with the spark.read API.

For example, suppose we have an RDD of Person objects, where Person is a case class with name and age fields. We can convert it into a DataFrame as follows:

    // Needed for toDF() and toDS()
    import spark.implicits._

    // Define a case class
    case class Person(name: String, age: Int)

    // Create an RDD of Person objects
    val rdd = sc.parallelize(Seq(Person("Alice", 25), Person("Bob", 30), Person("Charlie", 35)))

    // Convert the RDD to a DataFrame using toDF()
    val df1 = rdd.toDF()

    // Alternatively, convert the RDD to a DataFrame using createDataFrame()
    val df2 = spark.createDataFrame(rdd)

    // Alternatively, turn each Person into a JSON string and parse it with spark.read
    val json = rdd.map(p => s"""{"name":"${p.name}","age":${p.age}}""")
    val df3 = spark.read.json(json.toDS())

12) What are the different types of operations that RDDs support?

RDDs support two types of operations: transformations and actions.

a) Transformations: Transformations are operations that create a new RDD from an existing one, such as map, filter, join, etc. Transformations are lazy, which means they are only executed when an action is performed on the RDD. Transformations can also be chained together to form a lineage graph, which tracks the dependencies and transformations of the RDD.

b) Actions: Actions are operations that trigger the evaluation of an RDD and return a result to the driver or write it to an external storage system, such as collect, count, saveAsTextFile, reduce, etc. Actions are the final steps of a Spark application, where the actual computation takes place.

13) How can you specify a schema for DataFrame programmatically?

There are different ways to specify a schema for DataFrame programmatically, such as:

1) Using a case class: A case class is a Scala class that can define the fields and data types of the DataFrame. A case class can be used to convert an RDD into a DataFrame using the toDF() method or the createDataFrame() method. For example:

    // Needed for toDF()
    import spark.implicits._

    // Define a case class
    case class Person(name: String, age: Int)

    // Create an RDD of Person objects
    val rdd = sc.parallelize(Seq(Person("Alice", 25), Person("Bob", 30), Person("Charlie", 35)))

    // Convert the RDD to a DataFrame using toDF()
    val df1 = rdd.toDF()

    // Alternatively, convert the RDD to a DataFrame using createDataFrame()
    val df2 = spark.createDataFrame(rdd)

2) Using a StructType: A StructType is a Spark SQL class that can define the fields and data types of the DataFrame. A StructType can be used to create a DataFrame from an RDD of Row objects using the createDataFrame() method. For example:

    // Import the necessary classes
    import org.apache.spark.sql.Row
    import org.apache.spark.sql.types._

    // Define a schema using a StructType
    val schema = StructType(
      StructField("name", StringType, true) ::
      StructField("age", IntegerType, true) :: Nil
    )

    // Create an RDD of Row objects
    val rdd = sc.parallelize(Seq(Row("Alice", 25), Row("Bob", 30), Row("Charlie", 35)))

    // Convert the RDD to a DataFrame using createDataFrame()
    val df = spark.createDataFrame(rdd, schema)

3) Using an implicit conversion: An implicit conversion is a Scala feature that automatically converts one type to another. An implicit conversion can be used to convert an RDD into a DataFrame by importing spark.implicits._, which provides the toDF() method for RDDs. For example:

    // Import the implicit conversions
    import spark.implicits._

    // Create an RDD of tuples
    val rdd = sc.parallelize(Seq(("Alice", 25), ("Bob", 30), ("Charlie", 35)))

    // Convert the RDD to a DataFrame using toDF()
    val df = rdd.toDF("name", "age")

14) What is the name of the transformation that returns a new DStream by filtering only those records of the source DStream that satisfy a function?

The transformation is filter(). It takes a function that returns a Boolean value for each record of the source DStream and returns a new DStream that contains only those records for which the function returns true. For example:

    // Define a function that checks if a word starts with "a"
    def startsWithA(word: String): Boolean = {
      word.startsWith("a")
    }

    // Create a DStream of words from a text file
    val words = ssc.textFileStream("hdfs://...").flatMap(_.split(" "))

    // Apply the filter() transformation to get only the words that start with "a"
    val wordsWithA = words.filter(startsWithA)

15) Does Apache Spark offer checkpoints?

Yes, Apache Spark provides checkpoints, a mechanism to save the state of an RDD or a DStream to a reliable storage system, such as HDFS, S3, etc. Checkpoints can be used to:

a) Ensure fault tolerance: Checkpoints can help recover from failures by restoring the state of the RDD or the DStream from the saved checkpoint. Checkpoints can also reduce the length of the lineage graph, improving the computation's performance and reliability.

b) Support long-running applications: Checkpoints can help support long-running applications, such as streaming or Machine Learning, by periodically saving the state of the RDD or the DStream and preventing the accumulation of metadata and memory usage.
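For RDDs, checkpointing takes only a few calls. The sketch below assumes a local SparkSession and uses a temporary directory as the checkpoint location; on a real cluster this would normally be a reliable store such as HDFS.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("checkpoint-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical checkpoint location; use reliable storage on a real cluster
sc.setCheckpointDir(Files.createTempDirectory("spark-checkpoints").toString)

val multiplesOfSix = sc.parallelize(1 to 100).map(_ * 2).filter(_ % 3 == 0)

// Mark the RDD for checkpointing before running any action on it
multiplesOfSix.checkpoint()

// The first action computes the RDD and writes the checkpoint
val count = multiplesOfSix.count()

// From here on the RDD is read from the checkpoint instead of its lineage
val checkpointed = multiplesOfSix.isCheckpointed
```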

16) What is the difference between cache() and persist() methods in Spark?

The cache() and persist() methods are used to store an RDD or DataFrame in memory or on disk for reuse. The difference between them is:

a) cache() is shorthand for persist() with the default storage level, which is MEMORY_ONLY for RDDs and MEMORY_AND_DISK for DataFrames and Datasets

b) persist() allows the user to specify the storage level, such as MEMORY_AND_DISK, MEMORY_ONLY_SER, etc.

17) How do you explain sliding window operation?

A sliding window operation is a type of operation that applies a function to a subset of data in a DStream based on a specified window duration and slide duration. A window duration is the length of the window or the number of batches of data to be considered for the operation. A slide duration is the interval at which the window operation is performed or the number of batches by which the window moves. A sliding window operation can be used to compute statistics or aggregations over a sliding window of data, such as the average, the sum, the count, etc. For example:

    // Create a DStream of numbers from a socket stream
    val numbers = ssc.socketTextStream("localhost", 9999).map(_.toInt)

    // Define a window duration of 10 seconds and a slide duration of 5 seconds
    val windowDuration = Seconds(10)
    val slideDuration = Seconds(5)

    // Apply a sliding window operation to compute the sum of numbers in each window
    val windowSum = numbers.reduceByWindow(_ + _, windowDuration, slideDuration)

18) What are the various levels of persistence in Spark?

The different levels of persistence in Spark are:

a) MEMORY_ONLY: The RDD or DataFrame is stored in memory as deserialised objects. This is the default level for RDDs and the fastest level of persistence, but partitions that do not fit in memory are not cached and are recomputed each time they are needed.

b) MEMORY_AND_DISK: The RDD or DataFrame is stored in memory as deserialised objects, and partitions that do not fit in memory are spilled to disk. This level of persistence avoids recomputation, but it may increase the disk I/O and computation time.

c) MEMORY_ONLY_SER: The RDD or DataFrame is stored in memory as serialised objects. This level of persistence can reduce memory usage, but it may increase the CPU overhead and the serialisation and deserialisation time.

d) MEMORY_AND_DISK_SER: The RDD or DataFrame is stored in memory as serialised objects, and partitions that do not fit in memory are spilled to disk. This level of persistence can reduce memory usage and avoid recomputation, but it may increase the CPU overhead, the disk I/O, and the serialisation and deserialisation time.

e) DISK_ONLY: The RDD or DataFrame is stored only on disk. This level of persistence saves memory, but it may increase the disk I/O and computation time.

f) MEMORY_ONLY_2, MEMORY_AND_DISK_2, etc.: These levels of persistence are similar to the previous ones, but they replicate each partition on two cluster nodes. This can improve the fault tolerance and availability of the data, but it may increase the memory and network usage.
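Choosing a storage level in code might look like the sketch below. It assumes a local SparkSession and sample data invented for the example; which level is appropriate depends on the memory available and the cost of recomputing the data.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("persist-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

val base = sc.parallelize(1 to 1000)

// cache() on an RDD is persist(StorageLevel.MEMORY_ONLY)
val doubled = base.map(_ * 2).cache()

// persist() lets you pick the level explicitly
val shifted = base.map(_ + 1).persist(StorageLevel.MEMORY_AND_DISK)

// Nothing is stored until an action runs
val doubledCount = doubled.count()
val shiftedCount = shifted.count()

val doubledLevel = doubled.getStorageLevel
val shiftedLevel = shifted.getStorageLevel

// Release the storage once the data is no longer needed
doubled.unpersist()
shifted.unpersist()
```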

19) How do map and flatMap transformations differ in Spark Streaming?

The map and flatMap transformations differ in Spark Streaming as follows:

1) map: The map transformation applies a function to each element of the DStream and returns a new DStream with the same number of elements as the original DStream. The function can change the value or the type of the element, but it cannot change the number of elements. For example:

    // Create a DStream of words from a socket stream
    val words = ssc.socketTextStream("localhost", 9999).flatMap(_.split(" "))

    // Apply the map transformation to convert each word to uppercase
    val upperWords = words.map(_.toUpperCase)

2) flatMap: The flatMap transformation applies a function to each element of the DStream and returns a new DStream with a variable number of elements. The function can return zero or more elements for each input element, and the output elements are flattened into a single DStream. For example:

    // Create a DStream of sentences from a socket stream
    val sentences = ssc.socketTextStream("localhost", 9999)

    // Apply the flatMap transformation to split each sentence into words
    val words = sentences.flatMap(_.split(" "))

20) What is the technique to compute the total count of unique words in Spark?

To count the total number of unique words in Spark, you can use these steps:

a) Create an RDD from the text file

b) Use the flatMap() function to split each line into words and flatten the result

c) Use the countByValue() function to count the occurrences of each word

d) Use the filter() function to remove any empty or non-word strings

e) Take the size of the filtered result, which is the number of unique words

The code for this in Scala is:

    val textFile = sc.textFile("file.txt")
    val words = textFile.flatMap(line => line.split(" "))
    val wordCounts = words.countByValue()
    // "\\w+" matches strings made up only of word characters
    val filtered = wordCounts.filter(word => word._1.matches("\\w+"))
    val uniqueWordCount = filtered.size
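Because countByValue() returns one entry per distinct word to the driver, it may not scale to a very large vocabulary. A hedged alternative is to keep the work on the cluster with distinct() and count(). The sketch assumes a local SparkSession and replaces the text file with two in-memory lines; lower-casing the words is an extra assumption about what counts as the same word.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("unique-words-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Stand-in for sc.textFile(...): two made-up lines
val lines = sc.parallelize(Seq("to be or not to be", "that is the question"))

val uniqueWords = lines
  .flatMap(_.split("\\s+"))   // split each line into words
  .map(_.toLowerCase)         // assumption: compare words case-insensitively
  .filter(_.matches("\\w+"))  // drop empty or non-word strings
  .distinct()                 // keep one copy of each word
  .count()                    // only a single number reaches the driver
```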

21) If you have a large text file, how can you use Spark to check if a specific keyword exists?

You can use Spark to check if a specific keyword exists in a large text file by creating an RDD from the file, filtering the RDD by the keyword, and counting the number of elements in the filtered RDD. If the count is greater than zero, the keyword exists.
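A minimal sketch of this check follows. It assumes a local SparkSession and uses a few in-memory lines in place of the large file; the helper name containsKeyword is hypothetical. Instead of counting every match, it uses isEmpty(), which can stop once a single matching line is found.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("keyword-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Stand-in for sc.textFile(...) on a large file
val lines = sc.parallelize(Seq(
  "Spark keeps intermediate data in memory",
  "A shuffle moves data between partitions",
  "Actions trigger execution"
))

// Hypothetical helper: true if at least one line contains the keyword
def containsKeyword(keyword: String): Boolean =
  !lines.filter(_.contains(keyword)).isEmpty()

val foundShuffle = containsKeyword("shuffle")
val foundKafka = containsKeyword("Kafka")
```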

22) What is the purpose of accumulators in Spark?

Accumulators are variables that can be used to aggregate information across the cluster. They can be utilised to implement counters or sums in parallel computations. Tasks running on the executors can only add to an accumulator, and only the driver can read its value. They can only be updated by associative and commutative operations.
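As an example, an accumulator can count malformed records while a job runs. The sketch below assumes a local SparkSession and made-up input; the accumulator name is arbitrary.

```scala
import org.apache.spark.sql.SparkSession
import scala.util.Try

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("accumulator-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Created on the driver, shipped to the tasks
val badRecords = sc.longAccumulator("badRecords")

val raw = sc.parallelize(Seq("1", "2", "oops", "4", "n/a"))

// Tasks only add to the accumulator; they never read it
raw.foreach { s =>
  if (Try(s.toInt).isFailure) badRecords.add(1)
}

// The driver reads the aggregated value after the action has finished
val bad: Long = badRecords.value
```

Updating the accumulator inside an action such as foreach is the safer pattern, because updates made inside transformations may be applied more than once if a task is re-executed.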

23) What are the different tools that MLlib provides in Spark?

MLlib is a library that provides various tools for Machine Learning in Spark. Some of the tools that MLlib delivers are:

a) DataFrames and Datasets for data manipulation and processing

b) Transformers and Estimators for feature engineering and model training

c) Pipelines and CrossValidators for workflow management and model selection

d) Evaluators and Metrics for model evaluation and performance measurement

e) Algorithms for classification, regression, clustering, recommendation, and more

24) What is the PageRank algorithm in Apache Spark GraphX, and how does it work?

The PageRank algorithm assigns a score to each node in a graph based on the number and quality of links to that node. It is used to measure the importance or popularity of web pages. The PageRank algorithm in Apache Spark GraphX works by iteratively updating each node's score using its neighbours' scores until convergence.
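The iterative update can be sketched in plain Scala without GraphX. This is a simplified illustration of the idea, not GraphX's implementation: the three-page graph is invented, every page is assumed to have at least one outgoing link, and the damping factor of 0.85 is the conventional choice rather than something the text above specifies.

```scala
// Hypothetical three-page graph: each page maps to the pages it links to
val links: Map[String, Seq[String]] = Map(
  "A" -> Seq("B", "C"),
  "B" -> Seq("C"),
  "C" -> Seq("A")
)

val damping = 0.85   // assumption: the conventional damping factor
val n = links.size

// One iteration: each page shares its rank equally among its outgoing links
def step(ranks: Map[String, Double]): Map[String, Double] = {
  val contributions = for {
    (page, targets) <- links.toSeq
    target <- targets
  } yield (target, ranks(page) / targets.size)

  val received = contributions
    .groupBy(_._1)
    .map { case (page, cs) => (page, cs.map(_._2).sum) }

  links.keys.map { page =>
    (page, (1 - damping) / n + damping * received.getOrElse(page, 0.0))
  }.toMap
}

// Start from a uniform distribution and apply a fixed number of iterations
val initial: Map[String, Double] = links.keys.map(page => (page, 1.0 / n)).toMap
val ranks = Iterator.iterate(initial)(step).drop(50).next()
```

In this toy graph page C ends up with a higher score than page B, because C receives links from both A and B while B is linked only from A.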

25) What is the role of Spark Driver?

The Spark Driver is the process that runs the main() method of the Spark application and creates the SparkSession. It is responsible for:

a) Coordinating the execution of tasks across the cluster

b) Managing the SparkContext and the DAGScheduler

c) Distributing the application code and data to the workers

d) Collecting and displaying the results of the computation

26) What is the function of Spark Executor?

The Spark Executor is the process that runs on each worker node and executes the tasks the driver assigns. It is responsible for:

a) Running the user code and performing the computations

b) Storing the intermediate and final results in memory or disk

c) Communicating with the driver and other executors

d) Reporting the status and metrics of the tasks

27) What is the definition of a worker node?

A worker node is a node in the cluster that runs one or more Spark executors. A worker node can have multiple cores and memory resources that can be allocated to different executors. A worker node receives tasks from the driver and executes them in parallel.

28) What is a sparse vector, and how is it different from a dense vector?

A sparse vector is a vector that has mostly zero values and only stores the non-zero values and their indices. A dense vector is a vector that stores all the values, including the zeros. A sparse vector is different from a dense vector in terms of:

a) Storage efficiency: A sparse vector can save space by not storing the zeros

b) Computational efficiency: A sparse vector can speed up some operations by skipping the zeros

c) Representation: A sparse vector can be represented as a tuple of two arrays (indices and values) or a dictionary (index-value pairs)
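In Spark's MLlib both representations are available through the Vectors factory. The sketch below builds the same five-element vector in dense and sparse form; the values are made up for illustration.

```scala
import org.apache.spark.ml.linalg.Vectors

// Dense: every value is stored, including the zeros
val dense = Vectors.dense(1.0, 0.0, 0.0, 0.0, 3.0)

// Sparse: size, indices of the non-zero entries, and their values
val sparse = Vectors.sparse(5, Array(0, 4), Array(1.0, 3.0))

// Both describe the same logical vector
val sameValues = dense.toArray.sameElements(sparse.toArray)

// Only two entries are non-zero
val nonZeros = sparse.numNonzeros
```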

29) Is it possible to access and analyse data stored in Cassandra databases using Spark?

Yes, accessing and analysing data stored in Cassandra databases is possible using Spark. There are several ways to do this, such as:

a) Using the Spark Cassandra Connector, which allows Spark to read and write data from and to Cassandra tables as RDDs, DataFrames, or Datasets

b) Using the connector's Spark SQL data source (the org.apache.spark.sql.cassandra format), which allows Spark to query Cassandra tables using Spark SQL or DataFrames

c) Using the connector's sc.cassandraTable() method, which scans a Cassandra table and returns it as a CassandraTableScanRDD

30) What is YARN, and what does it do?

YARN is a component of Hadoop that manages the resources and scheduling of applications running on a cluster. YARN stands for Yet Another Resource Negotiator. YARN does the following:

a) Allocates and monitors the resources (CPU, memory, disk, network) for each application

b) Maintains the availability and fault tolerance of the applications

c) Supports multiple frameworks and languages, such as MapReduce, Spark, Hive, etc.

31) What are the languages that Spark can work with?

Spark can work with multiple languages, such as:

a) Scala: The language Spark itself is written in, and the first to receive new API features

b) Python: The most popular language for Data Science and Machine Learning

c) Java: The most common language for enterprise applications and Big Data systems

d) R: The preferred language for statistical analysis and visualisation

e) SQL: The standard language for querying structured and semi-structured data

32) What are broadcast variables, and how are they useful in Spark?

Broadcast variables are read-only variables that are sent to each executor once and cached there, rather than being shipped with every task. They are useful in Spark for:

a) Reducing the network traffic and data shuffling by sending large and common data to the workers

b) Improving the performance and efficiency of join operations by broadcasting the smaller table

c) Enhancing the security and consistency of the data by preventing the workers from modifying it
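A typical use is a small lookup table that every task needs. The sketch below assumes a local SparkSession; the country codes and orders are made-up sample data.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("broadcast-sketch").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// Small read-only lookup table, sent to each executor once
val countryNames = Map("AU" -> "Australia", "NZ" -> "New Zealand")
val lookup = sc.broadcast(countryNames)

val orders = sc.parallelize(Seq(("o1", "AU"), ("o2", "NZ"), ("o3", "AU")))

// Tasks read the broadcast value locally instead of shuffling a join
val named = orders.map { case (id, code) =>
  (id, lookup.value.getOrElse(code, "unknown"))
}.collect()
```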

33) What are the benefits of using Spark SQL over SQL?

Spark SQL is a module that provides a SQL-like interface for querying structured and semi-structured data in Spark. Some of the benefits of using Spark SQL over SQL are:

a) Spark SQL can query data from various sources, such as Hive, Parquet, JSON, JDBC, etc.

b) Spark SQL can integrate with the Spark core API and support various operations, such as map, filter, reduce, etc.

c) Spark SQL can leverage the Spark engine and optimise the query execution by using the Catalyst optimiser and the Tungsten execution engine.

d) Spark SQL can work with Spark's data abstractions, such as DataFrames, Datasets, and RDDs
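The integration between SQL and the programmatic API can be seen in a few lines. This sketch assumes a local SparkSession and a made-up people table; it runs the same query once as SQL text and once through the DataFrame API.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a throwaway local session for illustration
val spark = SparkSession.builder().appName("spark-sql-sketch").master("local[*]").getOrCreate()
import spark.implicits._

val people = Seq(("Alice", 25), ("Bob", 30), ("Charlie", 35)).toDF("name", "age")

// Register the DataFrame so that SQL queries can refer to it by name
people.createOrReplaceTempView("people")

// The same query, written as SQL and with the DataFrame API
val viaSql = spark.sql("SELECT name FROM people WHERE age >= 30 ORDER BY name")
val viaApi = people.filter($"age" >= 30).orderBy("name").select("name")

val sqlNames = viaSql.as[String].collect().toSeq
val apiNames = viaApi.as[String].collect().toSeq
```

Both forms go through the same optimiser, so the choice between them is mostly a matter of style and tooling.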

34) What are the challenges of implementing Machine Learning algorithms in Spark?

Some of the challenges of implementing Machine Learning algorithms in Spark are:

a) Dealing with the high dimensionality and sparsity of the data

b) Handling the scalability and parallelisation of the algorithms

c) Choosing the appropriate parameters and hyperparameters for the models

d) Evaluating the performance and accuracy of the models

e) Updating and maintaining the models over time

35) How does the job support function?

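The job support function allows the user to monitor and control the execution of a Spark job. Note that Spark's core API has no component with this name; the methods below resemble the job-handle interface offered by client libraries such as Apache Livy, where submitting a job returns a JobHandle. The job support function provides the following capabilities: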

a) Submitting a job to the cluster by using the submit() method

b) Cancelling a job by using the cancel() method

c) Getting the status of a job by using the status() method

d) Getting the result of a job by using the get() method

e) Adding a listener to a job by using the addListener() method

The job support function returns a JobHandle object that can be used to perform these operations.

36) What is Directed Acyclic Graph in Spark, and why is it important?

A Directed Acyclic Graph (DAG) is a graph that represents the sequence of operations and dependencies of a Spark computation. A DAG is important because:

a) It allows Spark to optimise the execution plan by applying transformations lazily and combining them into stages.

b) It allows Spark to recover from failures by recomputing the lost partitions based on the DAG.

c) It allows Spark to support various execution modes, such as batch, streaming, interactive, and graph.

d) It allows Spark to provide a visual representation of the computation by using the DAG visualisation tool.

37) What are the different modes of deployment in Apache Spark?

The different modes of deployment in Apache Spark are:

a) Local mode: The driver and the executor run on the same machine, using the local file system and the local threads. This mode is suitable for testing and development purposes.

b) Standalone mode: The driver and the executors run on a cluster of machines using Spark's built-in standalone cluster manager. This mode is suitable for simple and fast deployments.

c) YARN mode: The driver and the executors run on a cluster of machines using the YARN cluster manager. This mode is suitable for integrating with Hadoop ecosystems and leveraging the features of YARN.

d) Mesos mode: The driver and the executors run on a cluster of machines using the Mesos cluster manager. This mode is suitable for running Spark on a shared and dynamic cluster, although Mesos support has been deprecated since Spark 3.2.

e) Kubernetes mode: The driver and the executors run as containers scheduled by a Kubernetes cluster. This mode is suitable for containerised environments.
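The deployment mode is selected through the master URL. The sketch below lists the URL format for each cluster manager; the host names and ports are hypothetical placeholders, and the confFor helper exists only for this example. In practice the master is usually passed to spark-submit with --master rather than hard-coded.

```scala
import org.apache.spark.SparkConf

// Hypothetical hosts and ports; replace them with your cluster's addresses
val masters = Map(
  "local"      -> "local[*]",
  "standalone" -> "spark://master-host:7077",
  "yarn"       -> "yarn",
  "mesos"      -> "mesos://mesos-host:5050",
  "kubernetes" -> "k8s://https://k8s-api-host:6443"
)

// Hypothetical helper that builds a configuration for the chosen mode
def confFor(mode: String): SparkConf =
  new SparkConf().setAppName("deploy-mode-sketch").setMaster(masters(mode))

val localConf = confFor("local")
val yarnConf = confFor("yarn")
```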

38) What are the responsibilities of receivers in Apache Spark Streaming?

Receivers are the components of Apache Spark Streaming that are responsible for:

a) Receiving the data from various sources, such as Kafka, Flume, TCP sockets, etc.

b) Storing the data in the memory or disk of the executors as RDDs

c) Replicating the data to achieve fault tolerance

d) Sending the metadata of the data to the driver

Receivers can be reliable or unreliable, depending on whether they acknowledge the source once the received data has been stored and replicated.

39) What are some of the drawbacks of using Spark?

Some of the drawbacks of using Spark are:

a) Spark requires a lot of memory and resources to run efficiently, which can be expensive and challenging to manage.

b) Spark Streaming is not a true record-at-a-time engine: it processes data in micro-batches, which introduces some latency and overhead.

c) Spark evolves rapidly, so APIs and defaults change between major versions, which can cause compatibility and stability issues when upgrading.

d) Although Spark SQL supports nested types such as structs, arrays, and maps, queries over deeply nested or hierarchical data often need explicit flattening, which can make them more verbose.

40) What does Distributed mean, and how does it relate to Spark?

Distributed means that the data and the computation are spread across multiple machines or nodes in a cluster rather than being centralised on a single machine. Distributed relates to Spark in the following ways:

a) Spark is a distributed computing framework that allows the user to process large-scale data in parallel and in a distributed manner.

b) Spark uses a distributed data structure called RDD, a collection of partitions that can be stored and computed on different nodes.

c) Spark uses a distributed execution model, where the driver coordinates the tasks, and the executors perform the tasks on different nodes.

d) Spark can use a distributed file system such as HDFS to store and access the data on different nodes.

Conclusion

Mastering these Apache Spark Interview Questions is pivotal for anyone aiming to excel in Data Science roles. Whether you're a beginner or an experienced professional, the comprehensive coverage ensures you're well-prepared with the knowledge and insights needed to navigate and succeed in Apache Spark-related interviews effectively.
