
Ever wondered how technology understands and responds to our commands? This blog explores how humans and machines communicate, from voice assistants to text-based interaction, and explains in simple terms what Prompt Engineering is and how it works.

Table of Contents

1) What is Prompt Engineering?

2) Importance of Prompt Engineering

3) Prompt Engineering Examples

4) How does Prompt Engineering Work?

5) Prompt Engineering Techniques

6) Tips and Best Practices for Writing Prompts

7) The Future of Prompt Engineering

8) Conclusion

What is Prompt Engineering?

Prompt Engineering is the process of creating and refining the prompts, or input queries, given to a language model so that it produces the desired output. Although generative AI tries to imitate human communication, it still needs detailed instructions to produce high-quality, relevant output.

Prompt Engineering helps us choose the formats, phrases, words, and punctuation that lead the AI to interact with users in a more meaningful way. Prompt Engineers typically rely on creativity and trial and error to build a collection of input texts, so that an application’s generative AI works as expected.

Importance of Prompt Engineering

Prompt Engineering is the foundation of user-friendly technology. It makes sure that the interactions with devices are efficient and intuitive. By carefully crafting prompts, we enable machines to understand human language, be it spoken or written, making technology more accessible to all. This is especially important in today’s digital world, where Effective Communication between humans and machines is the key to the enhancement of user experience (UX) and innovation.

Furthermore, Prompt Engineering is important in shaping the efficiency of voice assistants, chatbots, and other interactive systems. The success of these technologies in meeting user needs depends on their ability to interpret accurately and respond to user prompts. It is a dynamic field that not only helps in seamless interaction but also contributes to the evolution of AI, making way for a more sophisticated and responsive digital experience in our everyday lives.

Let’s look at some of the benefits of Prompt Engineering:

1) Greater developer control: It gives developers more control over how users interact with AI. Prompts convey intent and provide context to the Large Language Model (LLM), which helps the AI refine its output and present it in the required format.

2) Improved user experience: Prompt Engineering makes it easier for users to get appropriate results the first time. It also helps reduce bias that the LLM may have inherited from human-written text in its training data.

3) Increased flexibility: Higher levels of abstraction improve AI models and allow companies to build more flexible tools at scale. Professionals can create prompts with domain-neutral instructions that focus on logical links and patterns. Organisations can scale AI capabilities by quickly distributing insights across the company.


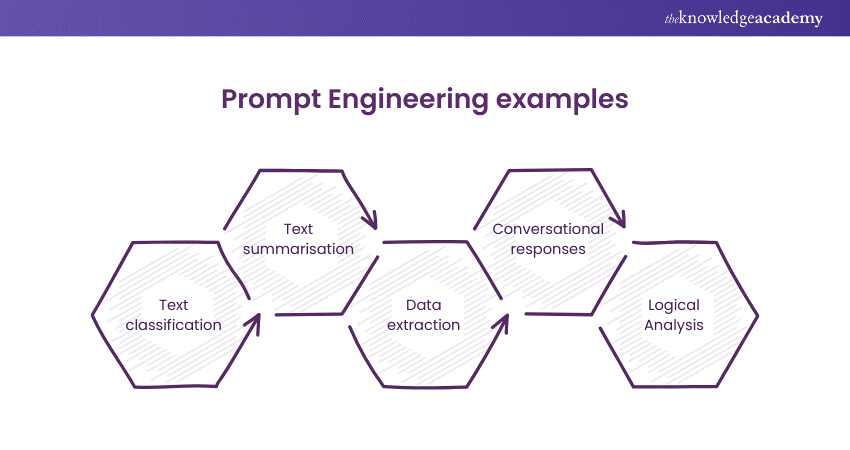

Prompt Engineering Examples

LLMs and Prompt Engineering have proven to be useful in several real-life apps, so let’s look at some of the best examples of them:

1) Text classification: Text classification is a common natural language processing (NLP) task that sorts input text into categories such as positive, negative, or neutral. Different NLP strategies can be used to perform this classification.

2) Text summarisation: A descriptive or explanatory prompt tends to produce long paragraphs and lists, but the length and focus of the response can be controlled with specific instructions, e.g., “Provide a concise explanation of Prompt Engineering.”

3) Data extraction: This kind of prompt is involved when exact facts or data need to be given out as output. They often get to the point without subjectivity.

4) Conversational responses: Conversational prompts are often used with AI systems such as chatbots, which can mimic human conversational style to generate text or images.

5) Logical Analysis: Logical prompts can range from a request for code in a specific programming language to difficult maths problems. This depends entirely on the tasks the platform is asked to do.
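To make these categories more concrete, here is a minimal sketch of how a prompt for three of them might be templated in Python. The template wording, the `TEMPLATES` dictionary, and the `render` helper are all illustrative assumptions, not part of any particular AI platform.

```python
# Hypothetical prompt templates, one per task category described above.
TEMPLATES = {
    "classification": (
        "Classify the sentiment of the text as positive, negative, or neutral.\n"
        "Text: {text}\nSentiment:"
    ),
    "summarisation": (
        "Summarise the text below in at most {max_sentences} sentences.\n"
        "Text: {text}"
    ),
    "extraction": (
        "List every {field} mentioned in the text below, one per line.\n"
        "Text: {text}"
    ),
}


def render(task: str, **values: object) -> str:
    """Fill the template for `task` with the supplied values."""
    return TEMPLATES[task].format(**values)


prompt = render("summarisation", text="Prompt engineering is ...", max_sentences=2)
print(prompt)
```

Keeping the fixed instruction separate from the variable text makes it easier to reuse the same wording across many inputs.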

Here are a few examples of prompts:

Situation 1 – Text Generation:

1) Explore the distinction between Generative AI and Conventional AI.

2) Create 10 captivating headlines for “Innovative AI Applications in Business.”

3) Sketch an article framework highlighting generative AI’s advantages in marketing.

4) Compose a 300-word elaboration for every outlined section.

5) Craft a striking headline for every section.

6) Conjure a 100-word portrayal of ProductXYZ in a quintet of unique styles.

7) In the bard’s voice, define prompt engineering with a rhythmic beat.

Situation 2 – Code Generation:

1) Transform into an ASCII artist, converting object names into ASCII art.

2) Inspect and correct errors in this code excerpt.

3) Develop a function that multiplies two figures and delivers the outcome.

4) Construct a simple REST API using Python.

5) Decode the purpose of this code segment.

6) Streamline the code presented.

7) Continue writing this code script.

Situation 3 – Image Concepts:

1) Envision a dog, donned in sunglasses and a cap, in a vehicle, through Dali’s surreal lens.

2) Picture a lizard lounging on the shore, rendered in claymation.

3) Capture a scene of a man on his phone in the subway, in 4K resolution with bokeh effect.

4) Illustrate a sticker of a lady sipping coffee, seated at a checkered table.

5) Depict a jungle expanse bathed in cinematic light, akin to a nature documentary.

6) Imagine a first-person view of amber clouds at dawn.

How does Prompt Engineering Work?

Generative AI systems are like masterful linguists, thanks to a transformer architecture that helps them capture the subtleties of language and process huge amounts of data. By building the right prompts, you can guide these AI systems to respond in ways that are both meaningful and relevant. Techniques such as breaking text into manageable pieces (tokenization), adjusting the model’s settings (model parameter tuning), and restricting each next-word choice to the k most likely candidates (top-k sampling) are all part of the toolkit that helps AI deliver useful answers.
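As a rough illustration of top-k sampling, the sketch below keeps only the k highest-scoring candidate tokens and then picks one of them at random, weighted by score. The function name `top_k_sample` and the toy scores are assumptions made for this example; real models work with token IDs and scores produced by the network itself.

```python
import math
import random


def top_k_sample(logits: dict[str, float], k: int, rng: random.Random) -> str:
    """Sample one token from the k highest-scoring candidates."""
    # Keep only the k tokens with the largest scores.
    top = sorted(logits.items(), key=lambda item: item[1], reverse=True)[:k]
    # Softmax over the surviving scores (shifted by the max for stability).
    max_score = top[0][1]
    weights = [math.exp(score - max_score) for _, score in top]
    tokens = [token for token, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Toy next-token scores; the values are made up for illustration.
scores = {"cat": 2.0, "dog": 1.5, "car": 0.1, "the": -1.0}
rng = random.Random(0)
samples = {top_k_sample(scores, k=2, rng=rng) for _ in range(200)}
print(samples)  # only tokens from the top 2 can appear
```

A smaller k makes the output more predictable, while a larger k allows more varied wording.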

The art of prompt engineering is essential to tap into the vast capabilities of foundational models, the powerhouses behind generative AI. These large language models are equipped with a wealth of information and built upon transformer architecture, ready to serve as the brain of the AI system.

At the heart of generative AI models is natural language processing (NLP), which allows them to take our words and turn them into complex creations. The combination of data science, transformer architecture, and Machine Learning Algorithms gives these models the ability to not just understand our language but also to use extensive datasets to generate text or even images.


Develop an Appropriate Prompt

The basic rule for creating prompts for AI platforms is clarity: make sure the prompt is clear and concise. Avoid jargon unless it is absolutely necessary for the context. You can also try placing the model in a specific role to get better responses.

Use constraints and set boundaries to guide the model toward the required output. For example, “Describe the Leaning Tower of Pisa in three sentences” sets a clear limit on the length of the response. Avoid leading questions that can bias the model’s output; staying impartial is important for getting an unbiased response.
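These rules can be sketched as a small helper that assembles a prompt from a role, a task, and a length limit. The `build_prompt` function and its parameters are hypothetical and shown only to illustrate the idea.

```python
def build_prompt(task: str, role: str = "", max_sentences: int = 0) -> str:
    """Assemble a prompt from an optional role, the task, and an optional length limit."""
    parts = []
    if role:
        parts.append(f"You are {role}.")
    parts.append(task.strip())
    if max_sentences > 0:
        parts.append(f"Answer in {max_sentences} sentences.")
    return " ".join(parts)


prompt = build_prompt(
    "Describe the Leaning Tower of Pisa.",
    role="a tour guide",
    max_sentences=3,
)
print(prompt)
```

Keeping the role and the boundary as separate arguments makes it easy to change one without rewriting the whole prompt.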

Iterate and Assess

The process of improving prompts is iterative. Here’s the basic workflow:

Create the starting prompt: Write it based on the task at hand and the required output.

Test: Run the prompt through the AI model to produce an output.

Assess the output: Check whether the output matches the user’s intent and meets the required criteria.

Refine the input: Make the corrections to the prompt that the assessment calls for.

Repeat: Repeat this process until you reach the desired output quality.

While running this process, it is also important to test the prompt with different inputs and scenarios to make sure it remains effective across a range of situations.
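The workflow above can be sketched as a simple loop. Everything here is a stand-in: `fake_model` imitates an AI model, and the `meets_criteria` and `revise` callables are hypothetical placeholders for whatever checks and edits suit your task.

```python
from typing import Callable


def refine_prompt(
    prompt: str,
    model: Callable[[str], str],
    meets_criteria: Callable[[str], bool],
    revise: Callable[[str, str], str],
    max_rounds: int = 5,
) -> tuple[str, str]:
    """Test, assess, and refine a prompt until the output passes or rounds run out."""
    output = model(prompt)                  # test
    for _ in range(max_rounds):
        if meets_criteria(output):          # assess
            break
        prompt = revise(prompt, output)     # refine
        output = model(prompt)              # test again
    return prompt, output


# Stand-in model: it only gives a short answer when asked to be brief.
def fake_model(prompt: str) -> str:
    return "Short answer." if "briefly" in prompt else "A very long, rambling answer " * 5


final_prompt, final_output = refine_prompt(
    "Explain prompt engineering",
    model=fake_model,
    meets_criteria=lambda out: len(out) < 40,
    revise=lambda p, out: p + " briefly",
)
print(final_prompt)
```

The `max_rounds` cap is a design choice that stops the loop if the criteria are never met.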

Adjust and Fine-tune

After refining the prompt, you may also consider adjusting or fine-tuning the AI model itself. This means calibrating the model’s parameters to better match specific inputs or datasets. It is a more advanced technique, but it can substantially improve the model’s performance for specific applications.

Prompt Engineering Techniques

Prompt engineering is a craft that blends language expertise with creative flair to refine prompts for AI tools. It’s like being a digital wordsmith, sculpting the input to guide AI’s language abilities. Here’s a peek into some of the strategies used:

1) Chain-of-thought prompting

Imagine tackling a big problem by breaking it down into bite-sized pieces. That’s what chain-of-thought prompting does. It’s like how we humans solve puzzles—step by step. This way, AI can dive deep into each segment, leading to sharper, more precise answers.

Take the question, “How does climate change affect biodiversity?” Instead of a straight shot answer, AI using this method would dissect it into parts like:

1) How climate change tweaks temperatures

2) The ripple effect on habitats

3) The domino effect on biodiversity

Then, AI acts like a detective, piecing together how each part influences the next, crafting a comprehensive response to the original query.
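One way to encourage this behaviour is to spell out the intermediate steps in the prompt itself. The sketch below does that for the climate example; the `chain_of_thought_prompt` helper and the wording of the steps are assumptions for illustration.

```python
def chain_of_thought_prompt(question: str, steps: list[str]) -> str:
    """Ask the model to work through numbered sub-questions before answering."""
    lines = [f"Question: {question}", "Work through these steps in order:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    lines.append("Then combine the steps into a final answer.")
    return "\n".join(lines)


prompt = chain_of_thought_prompt(
    "How does climate change affect biodiversity?",
    [
        "How does climate change alter temperatures?",
        "How do those temperature changes affect habitats?",
        "How do habitat changes affect biodiversity?",
    ],
)
print(prompt)
```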

2) Tree-of-thought Prompting

This technique grows from the chain-of-thought, branching out into multiple paths. It’s like mapping out a journey with various routes and exploring each path for a richer understanding.
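A branching exploration like this can be sketched as a small search that expands several partial "thoughts" at each step and keeps only the most promising ones. This is just one possible reading of the technique: the beam-style search, the `expand` and `score` callables, and the toy string "thoughts" are all assumptions made for the example.

```python
from typing import Callable


def tree_of_thought(
    root: str,
    expand: Callable[[str], list[str]],
    score: Callable[[str], float],
    depth: int,
    beam_width: int,
) -> str:
    """Explore branching thoughts, keeping the best `beam_width` at each level."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for node in frontier for child in expand(node)]
        if not candidates:
            break
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


# Toy problem: each thought is a string, and more "b" characters is better.
best = tree_of_thought(
    root="",
    expand=lambda thought: [thought + "a", thought + "b"],
    score=lambda thought: thought.count("b"),
    depth=3,
    beam_width=2,
)
print(best)  # bbb
```

In practice, both the expansion and the scoring would be done by prompting a model rather than by simple string functions.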

3) Maieutic Prompting

Here, AI turns introspective, explaining its thought process. It’s like a teacher asking a student to show their work—ensuring the reasoning is sound and the learning is deep.

For instance, ask why renewable energy matters, and AI might first say it cuts down greenhouse gases. Push further, and it’ll delve into the nuances of wind and solar power, painting a bigger picture of a cleaner future.

4) Complexity-based Prompting

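This technique runs several chain-of-thought attempts and favours the ones with the most reasoning steps, on the view that longer chains reflect a more thorough exploration. It’s like preferring a winding trail over a straight road. When those longer attempts disagree, the most common final answer among them is chosen.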
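A minimal sketch of this selection step, assuming the technique is read as "keep the attempts with the most reasoning steps, then take a majority vote". The rollout data is invented, and `complexity_based_answer` is a hypothetical helper name.

```python
from collections import Counter


def complexity_based_answer(rollouts: list[tuple[list[str], str]], keep: int) -> str:
    """Keep the `keep` rollouts with the most reasoning steps, then majority-vote."""
    longest = sorted(rollouts, key=lambda r: len(r[0]), reverse=True)[:keep]
    votes = Counter(answer for _, answer in longest)
    return votes.most_common(1)[0][0]


# Each rollout is (reasoning steps, final answer); the data is invented.
rollouts = [
    (["a", "b", "c", "d"], "42"),
    (["a", "b", "c"], "42"),
    (["a", "b", "c", "d", "e"], "41"),
    (["a"], "7"),
]
answer = complexity_based_answer(rollouts, keep=3)
print(answer)  # 42
```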

5) Generated Knowledge Prompting

This is about doing homework before writing the essay. AI gathers all the essential info first, ensuring the content is not just creative but also informed and accurate.
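In code, this can be sketched as two calls to the model: one to collect facts and one to answer using them. The `fake_model` below is a stand-in that records the prompts it receives, and the prompt wording is an assumption.

```python
from typing import Callable


def generated_knowledge_answer(question: str, model: Callable[[str], str]) -> str:
    """First ask for relevant facts, then answer with those facts in the prompt."""
    facts = model(f"List key facts relevant to: {question}")
    return model(f"Facts:\n{facts}\n\nUsing the facts above, answer: {question}")


calls: list[str] = []


# Stand-in model that records each prompt it is given.
def fake_model(prompt: str) -> str:
    calls.append(prompt)
    if prompt.startswith("List"):
        return "Deforestation reduces habitat."
    return "final answer"


answer = generated_knowledge_answer("Why is deforestation a problem?", fake_model)
print(calls[1])
```

The second prompt printed here contains the facts returned by the first call, which is the core of the technique.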

6) Least-to-most Prompting

Picture solving a puzzle from the outer edges inward. AI lists the sub-tasks and tackles them in order, building on each solution to construct the final masterpiece.
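Here is a small sketch of that ordering, in which each sub-task is sent to the model together with the answers to the earlier ones. The sub-task names and the `fake_model` stand-in are hypothetical.

```python
from typing import Callable


def least_to_most(subtasks: list[str], model: Callable[[str], str]) -> list[str]:
    """Solve sub-tasks in order, passing earlier answers along as context."""
    answers: list[str] = []
    for task in subtasks:
        # zip stops at the shorter list, so only solved sub-tasks are included.
        context = "\n".join(f"Q: {q}\nA: {a}" for q, a in zip(subtasks, answers))
        prompt = f"{context}\nQ: {task}\nA:" if context else f"Q: {task}\nA:"
        answers.append(model(prompt))
    return answers


# Stand-in model that just reports how many earlier answers it was shown.
def fake_model(prompt: str) -> str:
    return f"saw {prompt.count('A: ')} earlier answers"


results = least_to_most(["easy part", "harder part", "full problem"], fake_model)
print(results)
```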

7) Self-refine Prompting

This is self-improvement at its best. AI drafts a solution, critiques it, and then refines it, considering both the problem and the feedback. It’s like an artist sketching a drawing, then erasing and enhancing until it’s just right.
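The draft, critique, and refine cycle might look like the following sketch. The three callables stand in for separate prompts to the same model; their names and the toy critic are assumptions for illustration.

```python
from typing import Callable, Optional


def self_refine(
    problem: str,
    draft: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    revise: Callable[[str, str, str], str],
    max_rounds: int = 3,
) -> str:
    """Draft, critique, and revise until the critique finds nothing to fix."""
    solution = draft(problem)
    for _ in range(max_rounds):
        feedback = critique(problem, solution)
        if feedback is None:  # nothing left to improve
            break
        solution = revise(problem, solution, feedback)
    return solution


# Stand-ins: the critic only objects to a missing full stop.
result = self_refine(
    "Write one sentence about AI",
    draft=lambda p: "AI is useful",
    critique=lambda p, s: None if s.endswith(".") else "Add a full stop.",
    revise=lambda p, s, f: s + ".",
)
print(result)  # AI is useful.
```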

8) Directional Stimulus Prompting

This is about giving AI a nudge in the right direction. It’s like suggesting themes for a poem, guiding the creative flow toward the desired outcome.
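As a simple sketch, the nudge can be a line of hint keywords appended to the task. The `with_stimulus` helper and the hint wording are hypothetical.

```python
def with_stimulus(task: str, hints: list[str]) -> str:
    """Append hint keywords that steer the model toward the desired content."""
    if not hints:
        return task
    return f"{task}\nHint: include these themes: {', '.join(hints)}."


prompt = with_stimulus("Write a short poem about the sea.", ["storms", "lighthouses"])
print(prompt)
```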

And there you have it—a glimpse into the toolbox of prompt engineering, where every technique is a key to unlocking AI’s potential in language processing. It’s a blend of art and science, ensuring that AI doesn’t just compute, but also comprehends and creates with nuance and depth.

Tips and Best Practices for Writing Prompts

Here are some tips and best practices for writing prompts:

1) Experimentation is Key: Start by articulating a concept in various ways to discover the most effective expression. Dive into different methods of requesting variations, considering factors like modifiers, styles, viewpoints, and even the influence of certain authors or artists, as well as presentation formats. This exploration will help you uncover subtle differences that lead to the most captivating outcomes for your queries.

2) Identify Workflow-specific Best Practices: For instance, if your task involves crafting marketing content for product descriptions, investigate diverse approaches to solicit variations, styles, and detail levels. Conversely, when grappling with complex ideas, comparing and contrasting them with similar concepts can illuminate their distinct features.

3) Be Inventive With Your Prompts: They can be a blend of examples, data, directives, or inquiries. Experiment with various combinations. Although tools often have input limitations, you can set guidelines in one session that persist through subsequent interactions.

4) Familiarise Yourself with Specialised Modifiers: Once you’re comfortable with a tool, delve into its unique modifiers. Generative AI applications typically offer concise keywords to define attributes like style, abstraction level, resolution, and aspect ratio. They also provide ways to emphasise certain prompt elements. These shortcuts enable you to specify your needs more accurately, saving time in the process.

5) Explore Prompt Engineering Environments: Integrated Development Environments (IDEs) designed for prompt engineering are invaluable for organising prompts and outcomes. They assist engineers in refining AI models and aid users in achieving specific results. While some IDEs like Snorkel, PromptSource, and PromptChainer cater to engineers, others such as GPT-3 Playground, DreamStudio, and Patience are more user-centric.


The Future of Prompt Engineering

As we stand on the threshold of the AI-driven era, Prompt Engineering will play a key role in shaping the future of human interaction with artificial intelligence (AI). The field is promising and has considerable potential for growth.

Continuous Exploration and Advancements

With new research and advancements emerging at a rapid pace, AI is evolving quickly. In the field of Prompt Engineering, notable directions include:

Adaptive prompts: Researchers are exploring ways for models to generate their own prompts based on the user’s intent, reducing the need for manual guidance.

Multimodal prompting: With the rise of multimodal AI models that can process both text and images, Prompt Engineering is expanding to include visual inputs.

Ethical prompts: As Artificial Intelligence (AI) ethics gains importance, there is a growing need for prompts that promote fairness and transparency and reduce bias.


Enduring Significance and Value

As Artificial Intelligence models develop and are integrated into many applications from healthcare to entertainment, the need for effective communication becomes even more important. Prompt Engineers ensure that these models are relevant, user-friendly and accessible.

Furthermore, as AI is democratised and more people without technical knowledge start to interact with these models, the role of the Prompt Engineer will gradually change. They’ll design intuitive interfaces, create user-friendly inputs, and ensure that AI remains a tool that augments human capabilities.

Difficulties and Possibilities

Like any developing field, Prompt Engineering has its share of difficulties:

Model complexity: Crafting effective prompts becomes more challenging as models grow in size and complexity.

Honesty and fairness: Prompts must not accidentally introduce or amplify biases in model outputs.

Collaboration of disciplines: Prompt Engineering sits at the intersection of linguistics, computer science, and psychology, which makes collaboration across these disciplines a necessity.

These challenges also present opportunities. They drive innovation, encourage collaboration across disciplines, and pave the way for the next generation of AI techniques and solutions.


Conclusion

“What is Prompt Engineering” is no longer a difficult question to answer. It is a creative and resourceful process. Because the concepts involved in Prompt Engineering are broad, it is important to understand and analyse each of them thoroughly. At its core, the process consists of refining prompt inputs and, over time, identifying the most appropriate Prompt Engineering technique for a given language model. A solid understanding of all aspects of prompts is therefore essential.


Frequently Asked Questions

Becoming a Prompt Engineer requires a blend of formal education and skill development. Start with a strong educational background in fields like computer science, linguistics, or cognitive science, and focus on courses in AI, machine learning (ML), and NLP. Stay updated on AI advancements and network with professionals through communities and events.

The most common degree needed is a computer science degree.

