We may not have the course you’re looking for. If you enquire or give us a call on 01344203999 and speak to our training experts, we may still be able to help with your training requirements.

Training Outcomes Within Your Budget!

We ensure quality, budget-alignment, and timely delivery by our expert instructors.

Data has become an essential factor in decision-making, innovation, and progress in the information age. However, not all data is created equal. The juxtaposition of Big Data vs. Small Data marks a fundamental distinction in Data Analytics. While both hold immense potential, they come with their characteristics and considerations.

In this Big Data vs Small Data blog, we will discover the main differences between Big Data and Small Data in this blog. Read on to learn more!

Table of Contents

1) Defining Big Data and Small Data

2) Volume: The Size Factors

3) Velocity: The Speed of Data Generation

4) Variety: Diverse Data Sources

5) Veracity: Data Quality and Reliability

6) Value: Extracting Insights

7) Tools and Technologies

8) Challenges and Considerations

9) Conclusion

Defining Big Data and Small Data

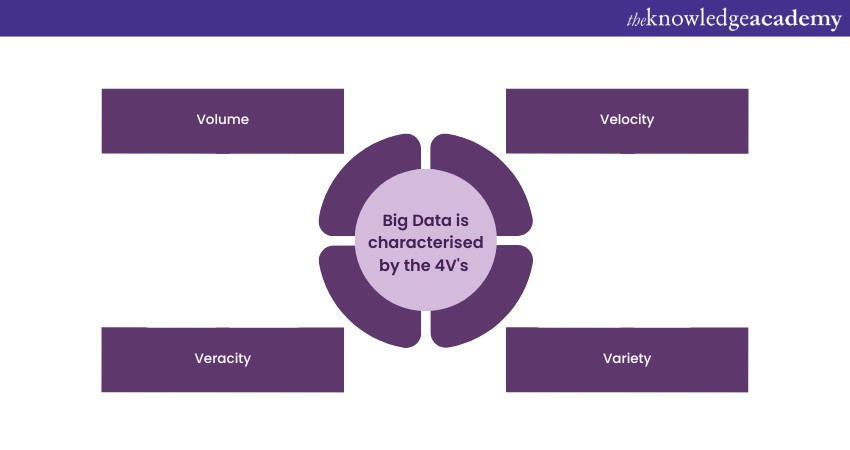

Big Data includes vast and complex datasets that exceed the capabilities of traditional data processing methods. It is characterised by the 4Vs: Volume, Velocity, Variety, and Veracity.

a) Volume: Big Data involves massive datasets, often measured in terabytes, petabytes, or exabytes. Examples include social media archives, sensor data, and genomic sequences.

b) Velocity refers to the rapid generation and collection of data, often in real-time or near real-time. Social media posts, sensor readings, and financial market transactions are prime examples.

c) Variety: Big Data is diverse, containing structured, semi-structured, and unstructured data types. It includes text, images, videos, sensor streams, and more, making it highly heterogeneous.

d) Veracity: Due to its vast and diverse sources, Big Data often needs to grapple with data quality issues, including inaccuracies, missing values, and inconsistencies. Rigorous data cleaning and validation are required.

Small Data, in contrast, pertains to manageable datasets in size and scope, often measured in gigabytes or megabytes. It typically consists of well-structured and homogenous data, such as monthly sales reports, individual patient records, or weather observations at specific intervals.

Small Data is prized for its higher data quality and immediate interpretability, making it well-suited for certain types of analysis. It may need more depth and breadth of insights derived from Big Data. Understanding these distinctions is crucial for organisations to choose the right approach and tools for their data-related endeavours.

Volume: The Size Factors

Volume is a critical dimension that distinguishes Big Data from Small Data. It refers to the sheer size of the datasets involved in Big Data scenarios. Big Data sets are massive, often scaling into terabytes, petabytes, or even exabytes of data. These datasets are far beyond what traditional Data Management Systems can handle efficiently.

Examples of high-volume data sources include:

a) Social media platforms with millions of daily posts and interactions.

b) Scientific experiments generate vast sensor data.

c) Financial markets process a continuous stream of transactions.

This massive volume poses significant challenges regarding data storage, processing power, and network bandwidth. It necessitates specialised storage solutions and distributed computing frameworks like Hadoop and Spark to effectively manage and analyse the data.

Big Data's immense volume is both a challenge and an opportunity, as it allows organisations to uncover insights and patterns that may remain hidden in smaller datasets. Still, it also demands substantial infrastructure investments and robust data management strategies to harness its full potential.

Keen to have deeper knowledge of Data analytics, refer to our blog on "data Architecture"

Velocity: The Speed of Data Generation

Velocity in the context of data refers to the remarkable speed at which data is generated, collected, and processed in Big Data scenarios. This rapid data generation is a defining characteristic that sets Big Data apart from Small Data.

In today's interconnected world, numerous sources produce data astonishingly. For example, social media platforms receive millions of new posts, comments, and interactions every minute. Sensor networks, such as those on the Internet of Things (IoT), generate constant data from various devices. Financial markets produce real-time data on stock trades, market orders, and price fluctuations.

The high velocity of data poses several challenges and opportunities. It necessitates real-time or near-real-time data processing capabilities to extract valuable insights as events unfold. Analysing this torrent of data requires advanced technologies, such as stream processing frameworks like Apache Kafka and complex event processing systems. Businesses and organisations that leverage the power of Big Data can achieve a competitive edge by making faster, data-driven decisions and responding quickly to changing conditions.

Join our Big Data Analytics & Data Science Integration Course today and immerse yourself in the world of Big Data. Do not miss out on this opportunity.

Variety: Diverse Data Sources

Variety is a fundamental aspect of Big Data that sets it apart from Small Data. It refers to the diverse data sources and types incorporated into Big Data environments.

In Big Data scenarios, data comes in various forms, including structured, semi-structured, and unstructured data. Like traditional databases, structured data follows a well-defined format, making it easily organised and analysed. Semi-structured data, such as XML or JSON files, has some structure but allows for flexibility. Unstructured data needs a predefined format and can include text, images, videos, and social media content.

This diversity presents both challenges and opportunities. Big Data technologies and analytics platforms are designed to handle this wide variety of data sources. Advanced methods such as Natural Language Processing (NLP) and image recognition can extract valuable insights from unstructured data. Integrating data from numerous sources, including social media, sensors, and customer feedback, can give organisations a comprehensive view of their operations.

Managing and extracting insights from these diverse data sources is a critical challenge in Big Data analytics. Still, it offers unparalleled opportunities for gaining comprehensive insights and making well-informed decisions.

Veracity: Data Quality and Reliability

Veracity represents a critical aspect of Big Data that deals with data quality, accuracy, and reliability within a dataset. In the context of Big Data, veracity underscores the challenges of ensuring that the immense volumes of data collected are trustworthy and suitable for meaningful analysis.

Big Data often faces veracity issues due to several factors:

a) Data Variety: Big Data incorporates diverse data sources, including user-generated content, sensor readings, and more. This diversity can introduce inconsistencies, inaccuracies, and missing values, making it easier to rely on the data with proper cleaning and validation.

b) Data Ingestion: As data is collected from various sources in real-time, errors can occur during the ingestion process, leading to discrepancies in the dataset.

c) Data Bias: Biases in data collection or sampling methods can affect the representativeness of the dataset, potentially skewing analysis results.

Addressing veracity challenges involves data cleansing, validation, and quality assurance processes. Data scientists and analysts must carefully assess data quality, identify and rectify errors, and establish trust in the data before conducting meaningful analyses.

Data provenance and transparency in data sources play an essential part in securing the integrity of Big Data, as they provide insights into how the data was collected and processed. Addressing integrity is vital to making accurate, reliable decisions based on Big Data.

Value: Extracting Insights

Value in the context of Big Data refers to the ability to extract meaningful insights and derive actionable information from vast and complex datasets. While Big Data is characterised by its volume, velocity, variety, and veracity (the 4Vs), its true significance lies in the potential value it can generate for businesses, organisations, and researchers.

a) Discovering Patterns: Big Data analytics can uncover hidden patterns and correlations within the data that might not be apparent in smaller datasets. These insights can drive strategic decisions, optimise processes, and enhance customer experiences.

b) Predictive Analytics: By analysing historical and real-time data, Big Data can enable predictive modelling, allowing organisations to anticipate trends, customer behaviours, and potential issues. This proactive approach aids in risk mitigation and resource allocation.

c) Personalisation: Big Data can power personalised recommendations in e-commerce, content delivery, and healthcare. Tailoring products, services, and content to individual preferences enhances customer satisfaction and engagement.

d) Operational Efficiency: Businesses can optimise operations, supply chains, and resource allocation based on data-driven insights, reducing costs and improving efficiency.

e) Innovation: Big Data fosters innovation by providing the foundation for developing new products, services, and business models. It encourages experimentation and the discovery of novel solutions.

f) Competitive Advantage: Organisations that effectively leverage Big Data attain a competitive edge by making data-driven decisions and anticipating market trends.

Realising the value of Big Data involves employing advanced analytics techniques, machine learning algorithms, and data visualisation tools to extract actionable insights. It also requires a data-driven culture within organisations that emphasises the importance of data in decision-making processes. Improved business outcomes, better customer experiences, and a deeper understanding of complex phenomena can result from extracting value from Big Data.

Tools and Technologies

The field of Big Data analytics is supported by a wide array of tools and technologies designed to handle the challenges posed by massive datasets. Here, we explore essential tools and technologies for Big and Small Data scenarios.

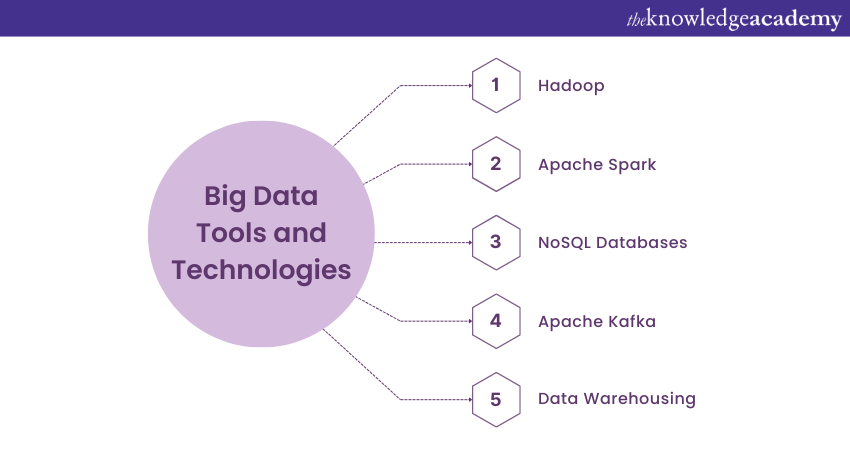

Following are the Big Data Tools and Technologies:

a) Hadoop: This framework enables distributed processing of large datasets across computer clusters. It includes a Hadoop Distributed File System (HDFS) for storage and MapReduce for data processing.

b) Apache Spark: Known for its speed and versatility, Spark is an in-memory, distributed computing framework that excels in data processing, machine learning, and graph processing.

c) NoSQL Databases: Databases like MongoDB, Cassandra, and HBase are designed for handling unstructured and semi-structured data, making them crucial for Big Data applications.

d) Apache Kafka: A distributed streaming platform, Kafka is ideal for real-time data streaming and event processing.

e) Data Warehousing: Solutions like Amazon Redshift and Google BigQuery offer scalable data warehousing for storing and querying large datasets.

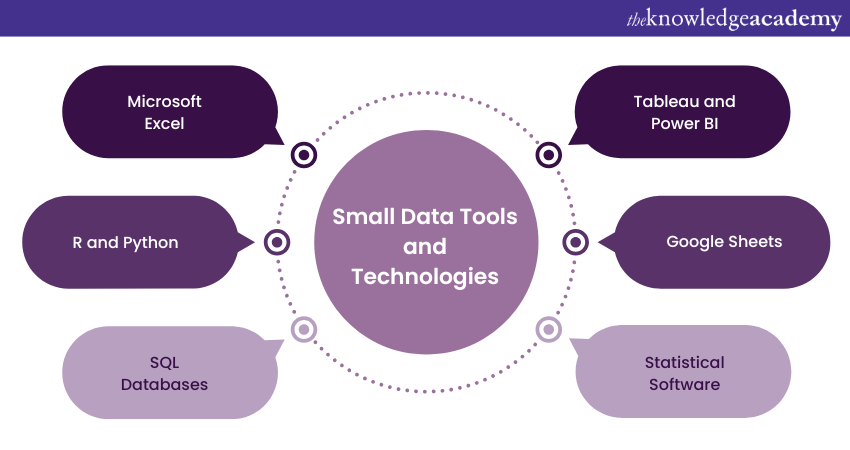

Following are the Small Data Tools and Technologies:

a) Microsoft Excel: Often the go-to tool for small-scale data analysis due to its user-friendliness and versatility.

b) R and Python: Popular programming languages for statistical analysis and data visualisation offer numerous libraries and packages for small-scale data analysis.

c) SQL Databases: Relational databases like MySQL and PostgreSQL are well-suited for structured data storage and querying.

d) Tableau and Power BI: These tools provide intuitive data visualisation capabilities for small datasets, making it easier to derive insights.

e) Google Sheets: A web-based spreadsheet application that facilitates collaboration and fundamental data analysis.

f) Statistical Software: Statistical packages like SPSS and SAS are used for advanced statistical analysis of smaller datasets.

Selecting the appropriate tools and technologies depends on factors like data volume, complexity, and organisational needs. While Big Data tools excel in processing and analysing vast datasets, Small Data tools are more straightforward and user-friendly, catering to smaller-scale analytics needs.

Challenges and Considerations

There are several challenges and considerations in Big Data vs Small Data analytics. Protecting sensitive information is paramount, which calls for robust measures to ensure data security and privacy. Additionally, both Big Data and Small Data environments must efficiently handle growing datasets, which requires scalability and efficient storage management.

Resource constraints can limit Small Data analysis, requiring careful allocation of computing resources. Ethical considerations, such as bias in algorithms and responsible data usage, are essential. Complying with regulations such as GDPR, which deal with data privacy, can be complex. Organisations are constantly trying to balance the cost of data processing with its benefits, so finding the right balance in their data strategies is essential.

Dive deeper into the world of Big Data and Analytics by exploring our Big Data Analysis Course. Sign up now to gain the skills and knowledge you need!

Conclusion

In Data Analytics, Big Data and Small Data exist together and highlight the complexity of our information ecosystem. Big Data allows us to analyse large and complex datasets to extract valuable insights, while Small Data provides quick and precise answers to specific questions. Unlocking the true potential of data requires understanding and harnessing each unique strength to drive innovation, efficiency, and informed decision-making in an interconnected world.

Attain knowledge to examine raw data with our Advanced Data Analytics Certification - Register today for a better future!

Frequently Asked Questions

Big Data involves large, complex datasets that require advanced tools for storage, analysis, and processing. Meanwhile, simple data consists of smaller, manageable datasets often processed with basic tools. Big data provides deeper insights, whereas simple data is typically used for simple tasks.

Big data offers richer insights by analysing large, diverse datasets, enabling trends, predictions, and decision-making that small data cannot achieve. Its scalability, variety, and real-time analysis capabilities make it better for tackling complex challenges and driving innovation in businesses.

The Knowledge Academy takes global learning to new heights, offering over 30,000 online courses across 490+ locations in 220 countries. This expansive reach ensures accessibility and convenience for learners worldwide.

Alongside our diverse Online Course Catalogue, encompassing 19 major categories, we go the extra mile by providing a plethora of free educational Online Resources like News updates, Blogs, videos, webinars, and interview questions. Tailoring learning experiences further, professionals can maximise value with customisable Course Bundles of TKA.

The Knowledge Academy’s Knowledge Pass, a prepaid voucher, adds another layer of flexibility, allowing course bookings over a 12-month period. Join us on a journey where education knows no bounds.

The Knowledge Academy offers various Big Data and Analytics Training, including the Advanced Data Analytics Certification, Certified Artificial Intelligence (AI) for Data Analysts Training, and Data Analytics with R. These courses cater to different skill levels, providing comprehensive insights into What is Data.

Our Data, Analytics & AI Blogs cover a range of topics related to Big Data, offering valuable resources, best practices, and industry insights. Whether you are a beginner or looking to advance your Data Analytics skills, The Knowledge Academy's diverse courses and informative blogs have got you covered.

Upcoming Data, Analytics & AI Resources Batches & Dates

Date

Big Data Architecture Training

Big Data Architecture Training

Fri 24th Jan 2025

Fri 28th Mar 2025

Fri 23rd May 2025

Fri 25th Jul 2025

Fri 26th Sep 2025

Fri 28th Nov 2025

Top Rated Course

Top Rated Course

If you wish to make any changes to your course, please

If you wish to make any changes to your course, please