When it comes to Statistical Analysis, working with complicated or limited data can be tough. Classical formulas for standard errors and confidence intervals often rely on assumptions that such data may not meet, which makes it hard to trust your results or base good decisions on them. Understanding the Bootstrap Method can help you manage these difficulties, giving you a more robust and adaptable way to measure the uncertainty in your estimates.

If you are curious to learn about this powerful statistical tool, then this blog is for you. In this blog, you will learn what the Bootstrap Method is, its different types, how it works, its benefits and limitations, and where it is applied.

Table of Contents

1) What is the Bootstrap Method?

2) Types of Bootstrap Method

3) How does the Bootstrap Method work?

4) Benefits of the Bootstrap Method

5) Limitations of the Bootstrap Method

6) Applications of the Bootstrap Method

7) Conclusion

What is the Bootstrap Method?

The Bootstrap Method is a statistical procedure that involves repeatedly resampling a dataset with replacement. It is used to estimate the sampling distribution of a statistic (such as the mean or median) by generating numerous resamples from the observed data and recomputing the statistic on each one. This allows Statisticians and researchers to gauge the variability of their estimates and to construct confidence intervals, even when the underlying distribution is unknown or complex.

This method has become a staple in modern Statistical Analysis due to its simplicity and effectiveness in dealing with various types of data.
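In symbols, the resampling idea can be summarised as below. This uses standard textbook notation rather than anything defined in this blog: $\hat F_n$ is the empirical distribution that puts probability $1/n$ on each of the observations $x_1, \dots, x_n$, $s(\cdot)$ is the statistic of interest, $B$ is the number of resamples, and $q_p$ is the empirical $p$-quantile of the $B$ replicates.

```latex
\begin{aligned}
x_1^{*b}, \dots, x_n^{*b} &\overset{\text{iid}}{\sim} \hat F_n, \qquad b = 1, \dots, B \\
\hat\theta^{*b} &= s\bigl(x_1^{*b}, \dots, x_n^{*b}\bigr) \\
\bar\theta^{*} &= \frac{1}{B} \sum_{b=1}^{B} \hat\theta^{*b} \\
\widehat{\mathrm{se}}_{\text{boot}} &= \sqrt{\frac{1}{B-1} \sum_{b=1}^{B} \bigl(\hat\theta^{*b} - \bar\theta^{*}\bigr)^2} \\
\mathrm{CI}_{1-\alpha} &\approx \bigl[\, q_{\alpha/2},\ q_{1-\alpha/2} \,\bigr]
\end{aligned}
```

The last line is the simple percentile interval; other interval constructions (such as BCa) exist but are not shown here.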

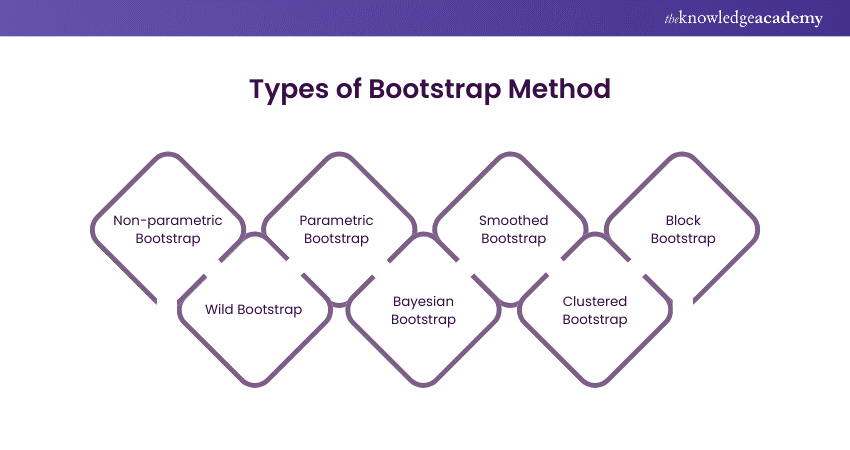

Types of Bootstrap Method

The Bootstrap Method can be categorised into several types, each tailored to different statistical needs and data characteristics. These variations allow for flexibility in approach and application. Here are some of the prominent types:

1) Non-parametric Bootstrap: The most common form. It makes no assumption about the underlying distribution and resamples directly from the observed data, so every resample is drawn from the original sample's empirical distribution.

2) Parametric Bootstrap: Assumes that the data follows a specific distribution. It involves fitting a model to the data and then drawing new samples from the fitted model. It is ideal for situations where the underlying distribution is known or can be reasonably approximated.

3) Smoothed Bootstrap: Extends the non-parametric Bootstrap by adding a small amount of random noise to each resampled value. This type is useful for data with discrete or heavily tied values, or when a smoother estimate is required, for example for the median or other quantiles.

4) Block Bootstrap: Designed for data with an inherent structure, such as time series, where observations are dependent. It resamples blocks of data instead of individual observations to preserve the internal structure.

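5) Wild Bootstrap: Used particularly in Regression Analysis and suited to models with heteroskedastic errors. Instead of shuffling residuals between observations, it keeps each residual of the fitted model attached to its own observation and multiplies it by a random weight (for example, +1 or -1 with equal probability), which preserves the pattern of unequal error variances.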

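6) Bayesian Bootstrap: Instead of resampling data points, it draws random weights for the observations (typically from a flat Dirichlet distribution) and computes weighted statistics. The resulting replicates can be read as draws from a posterior distribution, which aligns the approach with Bayesian inference.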

7) Clustered Bootstrap: Applicable when data is organised in clusters or groups. It involves resampling entire clusters instead of individual observations to account for within-group correlations.

Each type addresses specific challenges and scenarios in Statistical Analysis. This showcases the Bootstrap Method's adaptability and wide-ranging applicability.
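The single-sample schemes above differ mainly in how each resample is generated. The following is a minimal sketch in standard-library Python, assuming the statistic of interest is the sample mean. The function names, the normal model in the parametric version, the noise bandwidth in the smoothed version and the made-up "clusters" (consecutive pairs of values) are illustrative assumptions, not part of any particular library. Block and wild variants need a time series or a fitted regression model and are left out to keep the sketch short.

```python
import random
import statistics

# Illustrative (hypothetical) helpers: each returns B bootstrap replicates
# of the sample mean, generated with a different resampling scheme.


def nonparametric(data, B, rng):
    """Resample the observed values with replacement."""
    n = len(data)
    return [statistics.fmean(rng.choices(data, k=n)) for _ in range(B)]


def parametric_normal(data, B, rng):
    """Fit a normal model (an assumption) and draw fresh samples from it."""
    n = len(data)
    mu, sigma = statistics.fmean(data), statistics.stdev(data)
    return [statistics.fmean([rng.gauss(mu, sigma) for _ in range(n)])
            for _ in range(B)]


def smoothed(data, B, rng, bandwidth=0.1):
    """Non-parametric resample with small Gaussian noise added to each value."""
    n = len(data)
    return [statistics.fmean([x + rng.gauss(0.0, bandwidth)
                              for x in rng.choices(data, k=n)])
            for _ in range(B)]


def bayesian(data, B, rng):
    """Weight the observations with Dirichlet(1, ..., 1) weights."""
    reps = []
    for _ in range(B):
        # Normalised Exp(1) draws are Dirichlet(1, ..., 1) distributed.
        g = [rng.expovariate(1.0) for _ in data]
        total = sum(g)
        reps.append(sum(w / total * x for w, x in zip(g, data)))
    return reps


def clustered(clusters, B, rng):
    """Resample whole clusters with replacement, then pool their values."""
    k = len(clusters)
    reps = []
    for _ in range(B):
        chosen = rng.choices(clusters, k=k)
        reps.append(statistics.fmean([x for c in chosen for x in c]))
    return reps


rng = random.Random(42)
sample = [2.1, 3.4, 1.9, 4.2, 3.3, 2.8, 3.9, 2.5, 3.1, 2.7]
clusters = [sample[i:i + 2] for i in range(0, len(sample), 2)]  # 5 made-up pairs

results = {
    "non-parametric": nonparametric(sample, 2000, rng),
    "parametric": parametric_normal(sample, 2000, rng),
    "smoothed": smoothed(sample, 2000, rng),
    "bayesian": bayesian(sample, 2000, rng),
    "clustered": clustered(clusters, 2000, rng),
}
for name, reps in results.items():
    print(f"{name:>15}: SE of mean ~ {statistics.stdev(reps):.3f}")
```

The replicates from each scheme can then be summarised in the same way (standard error, percentile interval), which is what makes the variants easy to swap.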

How does the Bootstrap Method work?

Bootstrap Methods operate by creating multiple simulated samples from the original dataset. This process is key to estimating the variability and confidence of statistical measures. It involves several key steps that collectively contribute to its effectiveness. Let's explore these steps in detail:

1) Sample creation: Random samples are drawn from the original dataset, with each sample having the same size as the original set.

2) Replacement: Sampling is done with replacement, so each drawn observation is returned to the pool and can be chosen more than once, while some observations may not be chosen at all.

3) Calculating statistics: For each resampled dataset, the statistic of interest (e.g., mean, median) is calculated.

4) Repetition: This process is repeated a large number of times, often thousands or more, to build a distribution of the statistic.

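5) Estimation: The collected statistics form an empirical (bootstrap) distribution that approximates the sampling distribution of the statistic, showing how much the estimate would vary across samples from the population.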

6) Confidence intervals: From this distribution, confidence intervals and standard errors for the statistics can be derived.

7) Analysis: The results provide insights into the variability and reliability of the estimated statistics from the original data.
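The seven steps map directly onto a short loop. The sketch below is one possible implementation using only Python's standard library; the function name `bootstrap`, its defaults (`B=5000`, `alpha=0.05`) and the sample data are assumptions for illustration. It returns the observed statistic, the bootstrap standard error and a simple percentile confidence interval.

```python
import random
import statistics


def bootstrap(data, statistic, B=5000, alpha=0.05, seed=None):
    """Non-parametric bootstrap of `statistic` (hypothetical helper name).

    Returns (observed statistic, bootstrap standard error, percentile CI).
    """
    rng = random.Random(seed)
    n = len(data)
    replicates = []
    for _ in range(B):                          # Step 4: repeat many times
        resample = rng.choices(data, k=n)       # Steps 1-2: same size, with replacement
        replicates.append(statistic(resample))  # Step 3: recompute the statistic
    replicates.sort()                           # Step 5: empirical bootstrap distribution
    se = statistics.stdev(replicates)           # Step 6: standard error ...
    k = round(alpha / 2 * B)                    # ... and percentile interval bounds
    ci = (replicates[k], replicates[B - 1 - k])
    return statistic(data), se, ci


sample = [12, 15, 9, 20, 17, 14, 11, 30, 16, 13, 18, 10]
est, se, (lo, hi) = bootstrap(sample, statistics.median, seed=1)
print(f"median = {est}, SE = {se:.2f}, 95% CI = ({lo}, {hi})")  # Step 7: interpret
```

Passing the statistic as a function (here `statistics.median`) is a deliberate design choice: the same loop works for any statistic without changing the resampling code.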

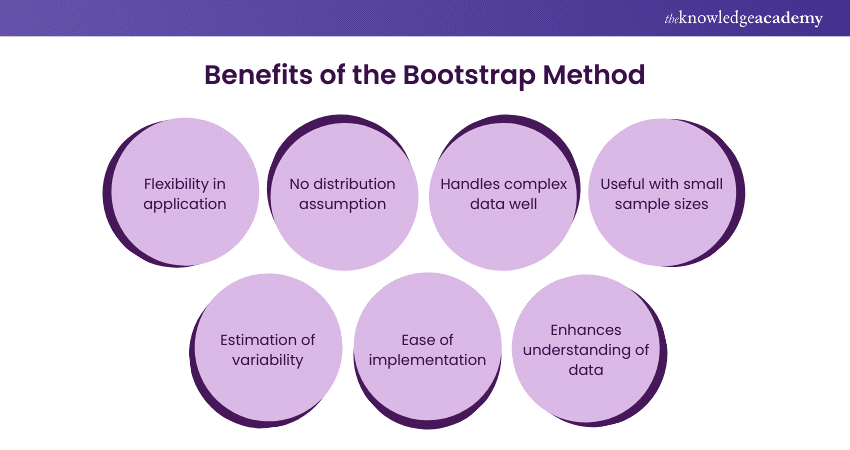

Benefits of the Bootstrap Method

The Bootstrap Method stands out in Statistical Analysis for its remarkable flexibility and robustness. It is especially beneficial in scenarios where traditional statistical methods might be limited. Here are some of its key advantages:

1) Flexibility in application: It can be applied to a wide array of statistical problems, showcasing its versatility across various research fields.

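2) No parametric distribution assumption: Unlike many traditional methods, the non-parametric version does not require the data to follow a specific distribution such as the normal, making it suitable for many types of data, provided the observations are independent and the sample represents the population.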

3) Handles complex data well: This method excels with complex datasets or statistics for which deriving the theoretical sampling distribution is challenging.

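4) Useful with modest sample sizes: It can provide reasonable estimates when samples are too small for large-sample approximations to be trusted, although with very small samples the resamples can only recombine a handful of distinct values, so results should be interpreted with caution.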

5) Estimation of variability: Directly estimates the variability and confidence intervals of a statistic, which can be cumbersome by other means.

6) Ease of implementation: With modern computing power, implementing this method is feasible even for large datasets.

7) Enhances understanding of data: Repeated sampling offers a deeper insight into the sample's properties and the reliability of estimates.

These attributes make the Bootstrap Method a highly effective tool in modern statistical practices, offering solutions and insights where conventional methods might not be effective.
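As an illustration of estimating variability where a textbook formula is inconvenient, the sketch below bootstraps the Pearson correlation coefficient. It resamples (x, y) pairs together so that the relationship between the two variables is preserved; the data, the function names and the choice of a simple percentile interval are assumptions made for the example.

```python
import math
import random
import statistics


def pearson_r(xs, ys):
    """Sample Pearson correlation; returns None if either input is constant."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    if sxx == 0 or syy == 0:
        return None
    return sxy / math.sqrt(sxx * syy)


def bootstrap_correlation(x, y, B=4000, seed=None):
    """Pairs bootstrap for Pearson's r (hypothetical helper name)."""
    rng = random.Random(seed)
    pairs = list(zip(x, y))
    reps = []
    for _ in range(B):
        xs, ys = zip(*rng.choices(pairs, k=len(pairs)))  # keep each (x, y) pair intact
        r = pearson_r(xs, ys)
        if r is not None:                                # skip degenerate resamples
            reps.append(r)
    reps.sort()
    m = len(reps)
    k = round(0.025 * m)
    return pearson_r(x, y), statistics.stdev(reps), (reps[k], reps[m - 1 - k])


x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [2.3, 2.9, 4.1, 4.8, 5.2, 6.9, 7.1, 7.8, 9.5, 9.9]
r, se, (lo, hi) = bootstrap_correlation(x, y, seed=3)
print(f"r = {r:.3f}, bootstrap SE = {se:.3f}, 95% CI = ({lo:.3f}, {hi:.3f})")
```

Resampling pairs rather than each variable separately matters here: shuffling x and y independently would destroy the very correlation being measured.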

Limitations of the Bootstrap Method

While the Bootstrap Method offers significant advantages in Statistical Analysis, it's important to be aware of its limitations. These constraints can impact its effectiveness and applicability in certain scenarios. Let's delve into some of these limitations:

1) Dependence on the original sample: The method's accuracy heavily relies on the representativeness of the initial sample. A non-representative sample can lead to biased Bootstrapping results.

2) Computationally intensive: It requires significant computational resources, particularly with large datasets and many resampling iterations.

3) Not always applicable: In cases like time series data, where dependencies exist, standard Bootstrapping may not be suitable or might need adjustments.

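4) Handling extreme values: Bootstrapping can perform poorly for statistics that depend on extreme values, such as the sample maximum, and simple percentile intervals can be inaccurate for heavily skewed data, since a few outliers can appear many times in some resamples and not at all in others.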

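5) Independence assumption: The standard method assumes the observations are independent and identically distributed, which may not hold for clustered or time-ordered data, restricting its use in such cases unless a variant such as the block or clustered Bootstrap is used.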

6) Determining resample numbers: Choosing the number of resamples is a trade-off between computation time and Monte Carlo error. A few hundred resamples are often enough for a standard error, while confidence intervals typically need thousands.

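7) Risk of overfitting in Machine Learning: If a model trained on a bootstrap sample is evaluated on data that also appears in that sample, its performance estimate will be overly optimistic, especially for complex models. Evaluating on the out-of-bag observations (those not drawn into the resample) avoids this.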

Acknowledging these limitations is crucial for Statisticians and Data Analysts to ensure they use the Bootstrap Method appropriately and effectively in their analyses.
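To illustrate why dependence matters, the sketch below simulates an autocorrelated AR(1) series and compares the standard error of the mean from an ordinary bootstrap with that from a moving block bootstrap. The simulation settings (`phi=0.8`, 500 points), the helper names and the block length of 25 are arbitrary assumptions; in practice the block length has to be chosen for the data at hand.

```python
import random
import statistics


def ar1_series(n, phi, rng):
    """Simulate x_t = phi * x_{t-1} + e_t with standard normal noise."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out


def iid_bootstrap_se(series, B, rng):
    """Ordinary bootstrap: treats every point as independent."""
    n = len(series)
    means = [statistics.fmean(rng.choices(series, k=n)) for _ in range(B)]
    return statistics.stdev(means)


def moving_block_bootstrap_se(series, block_len, B, rng):
    """Moving block bootstrap: glue together randomly chosen overlapping blocks."""
    n = len(series)
    starts = range(n - block_len + 1)       # every possible block start
    n_blocks = -(-n // block_len)           # ceil(n / block_len)
    means = []
    for _ in range(B):
        resample = []
        for s in rng.choices(starts, k=n_blocks):
            resample.extend(series[s:s + block_len])
        means.append(statistics.fmean(resample[:n]))  # trim to original length
    return statistics.stdev(means)


rng = random.Random(7)
series = ar1_series(500, phi=0.8, rng=rng)
iid_se = iid_bootstrap_se(series, 1000, rng)
block_se = moving_block_bootstrap_se(series, block_len=25, B=1000, rng=rng)
print(f"ordinary bootstrap SE = {iid_se:.3f}, block bootstrap SE = {block_se:.3f}")
```

Because the ordinary bootstrap treats the points as independent, it should report a noticeably smaller standard error here than the block version, which keeps neighbouring (correlated) observations together.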

Applications of the Bootstrap Method

The Bootstrap Method finds applications in a wide range of fields, demonstrating its versatility as a statistical tool. Its ability to work with different types of data and to estimate parameters where traditional methods may struggle has made it a popular choice in various disciplines. Here are some key areas where the Bootstrap Method is extensively applied:

1) Statistical Analysis: It is commonly used to estimate the distribution of sample statistics and to perform hypothesis testing, particularly in cases where the theoretical distribution of a statistic is unknown or difficult to derive.

2) Estimating standard errors and confidence intervals: The Bootstrap Method is particularly useful for estimating the standard error of a statistic and constructing confidence intervals, especially when traditional analytical methods are not feasible or applicable.

3) Machine Learning and model validation: In the field of Machine Learning, Bootstrapping is used for model validation, assessing model stability, and improving prediction accuracy. It also underpins ensemble methods such as bagging, where models trained on different bootstrap samples are averaged to reduce variance and overfitting.

4) Econometrics and finance: Bootstrapping methods are widely used in econometrics for Regression Analysis and in finance for risk assessment and portfolio optimisation.

5) Biostatistics and epidemiology: In medical research and epidemiology, Bootstrapping helps analyse complex datasets and draw inferences about population health.

6) Social sciences and psychology: Researchers in these fields employ Bootstrapping to analyse survey data, especially when dealing with small sample sizes or complex sampling designs.

7) Quality Control and manufacturing: It is used in industrial settings for process optimisation, quality control, and in making data-driven decisions based on empirical evidence.

The widespread use of the Bootstrap Method across these varied fields highlights its importance as a fundamental tool in modern Statistical Analysis and data-driven decision-making.
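For the hypothesis-testing use listed above, one common recipe for comparing two group means is sketched below: both groups are shifted to the pooled mean so that the null hypothesis of equal means holds, each group is resampled separately, and the p-value is the share of resampled differences at least as extreme as the observed one. The data, the helper name and the `(extreme + 1) / (B + 1)` p-value convention are assumptions for illustration.

```python
import random
import statistics


def bootstrap_mean_diff_test(x, y, B=5000, seed=None):
    """Two-sample bootstrap test of H0: equal means (hypothetical helper).

    Returns the observed difference in means and a two-sided p-value.
    """
    rng = random.Random(seed)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    observed = mx - my
    pooled = statistics.fmean(list(x) + list(y))
    # Shift both groups to the pooled mean so that H0 is true for the resamples.
    x0 = [v - mx + pooled for v in x]
    y0 = [v - my + pooled for v in y]
    extreme = 0
    for _ in range(B):
        d = (statistics.fmean(rng.choices(x0, k=len(x0)))
             - statistics.fmean(rng.choices(y0, k=len(y0))))
        if abs(d) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (B + 1)


group_a = [23.1, 25.4, 22.8, 26.0, 24.7, 25.9, 23.5, 24.2]
group_b = [21.0, 22.3, 20.8, 23.1, 21.9, 22.5, 20.4, 21.7]
diff, p = bootstrap_mean_diff_test(group_a, group_b, seed=11)
print(f"difference in means = {diff:.3f}, bootstrap p-value = {p:.4f}")
```

Resampling each group separately (rather than pooling them) keeps the two groups' own spreads, so the test does not assume equal variances.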

Conclusion

We hope this blog has given you a clear understanding of the Bootstrap Method. It is a vital statistical tool, offering remarkable flexibility and robustness across various fields. Although it has some limitations, its ability to handle complex data and provide reliable estimates of uncertainty makes it a valuable part of modern Statistical Analysis.

Frequently Asked Questions

Unlike traditional methods that often rely on normal distribution assumptions, the Bootstrap Method uses resampling, making it more flexible and applicable to various data types.

Yes, the Bootstrap Method is versatile and can be applied to different data types, but it requires a representative sample for accurate results.
