In software development and deployment, the choice between Virtual Machines (VMs) and Docker is an important decision. Both serve to replicate development environments and manage dependencies. However, they exhibit distinct characteristics that influence this decision.

Docker suits lightweight application deployment, while Virtual Machines are the better fit when you need stronger isolation or compatibility with different operating systems. Comparing Docker and VMs side by side makes these trade-offs clear.

In this blog, we look into the nuances of VMs and Docker containers and offer a detailed comparison to help you make the right choice for your specific application. Read on to learn more!

Table of Contents

1) What is virtualisation?

2) How does a Virtual Machine work?

3) What is a Hypervisor?

4) How does a Docker container work?

5) Docker vs. Virtual Machine

6) Conclusion

What is virtualisation?

Virtualisation is the creation of a simulated, software-based counterpart of computing assets such as servers, storage units, operating systems, and networks, decoupled from their underlying physical hardware.

This separation makes computing resources more flexible, more scalable, and quicker to administer and deploy. It allows numerous Virtual Machines to be created from the components of a single device, building a computer within a computer through software emulation.

How does a Virtual Machine work?

A Virtual Machine operates by leveraging a software layer called a Hypervisor, which plays a pivotal role in managing and orchestrating multiple VMs on a single physical server or host machine. The Hypervisor acts as an intermediary that abstracts and controls the physical hardware resources. It ensures that these resources are allocated efficiently among the VMs.

The following provides a more detailed breakdown of how a VM works:

1) Hypervisor: The Hypervisor is a critical component of VM technology. It comes in two main types, Type 1 and Type 2. The Hypervisor is responsible for distributing physical resources, such as CPU, memory, storage, and network bandwidth, among the VMs running on the host machine.

2) Resource allocation: The Hypervisor ensures that each VM gets its fair share of resources, preventing one VM from monopolising them at the expense of others.

3) Guest operating systems: Each VM runs its own guest operating system, which is entirely separate from the host operating system. This is a crucial feature that allows you to run different operating systems (e.g., Windows, Linux, macOS) on the same physical server. It also provides the VMs with a familiar environment to execute applications and processes.

4) Virtual hardware: The Hypervisor simulates virtual hardware components within each VM. These virtual hardware components include virtual CPUs, virtual memory, virtual disks, and virtual network adapters. These components are emulated to appear as real hardware to the guest operating systems, which interact with them as if they were physical.

5) Isolation: VMs are isolated from each other. This isolation means that what happens in one VM does not affect others. If one VM crashes or faces issues, it does not impact the stability or functionality of other VMs on the same host. This isolation is particularly important for scenarios where security and separation are critical.

6) Snapshots and migration: VMs offer the capability to create snapshots or checkpoints of their state at a specific point in time. This is immensely useful for backup and disaster recovery. VMs can also be migrated between physical hosts, making it possible to balance workloads and perform maintenance, often without service interruption.

7) Versatility: VMs are versatile and can host a wide range of applications and workloads. They are well-suited for situations where strong isolation, compatibility with various operating systems, and the ability to run legacy applications are crucial.
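The resource allocation described in the list above can be illustrated with a toy model. This is a minimal sketch, not how any real Hypervisor is implemented: the `ToyHypervisor` class, its fields, and the VM names are all hypothetical, and it assumes a strict no-overcommit policy, whereas real Hypervisors often allow CPU and memory overcommitment.

```python
from dataclasses import dataclass, field


@dataclass
class ToyHypervisor:
    """Illustrative model only: tracks how much of a host is promised to VMs."""
    total_vcpus: int
    total_memory_mb: int
    vms: dict = field(default_factory=dict)  # name -> (vcpus, memory_mb)

    def free(self):
        """Return the (vcpus, memory_mb) not yet assigned to any VM."""
        used_cpu = sum(cpu for cpu, _ in self.vms.values())
        used_mem = sum(mem for _, mem in self.vms.values())
        return self.total_vcpus - used_cpu, self.total_memory_mb - used_mem

    def create_vm(self, name, vcpus, memory_mb):
        """Reserve resources for a VM, refusing requests the host cannot fit."""
        free_cpu, free_mem = self.free()
        if vcpus > free_cpu or memory_mb > free_mem:
            raise ValueError(f"not enough free capacity for {name}")
        self.vms[name] = (vcpus, memory_mb)


# Hypothetical host with 8 vCPUs and 16 GB of memory.
host = ToyHypervisor(total_vcpus=8, total_memory_mb=16384)
host.create_vm("web", vcpus=2, memory_mb=4096)
host.create_vm("db", vcpus=4, memory_mb=8192)
print(host.free())  # (2, 4096)
```

A third VM asking for more than the remaining 2 vCPUs would be rejected, which is the "no VM monopolises the host" idea in its simplest form.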

What is a Hypervisor?

The software responsible for creating and managing virtual computing environments is known as a Hypervisor. It functions as a lightweight software or firmware layer positioned between the physical hardware and the virtualised environments. It allows multiple operating systems to operate concurrently on a single physical machine.

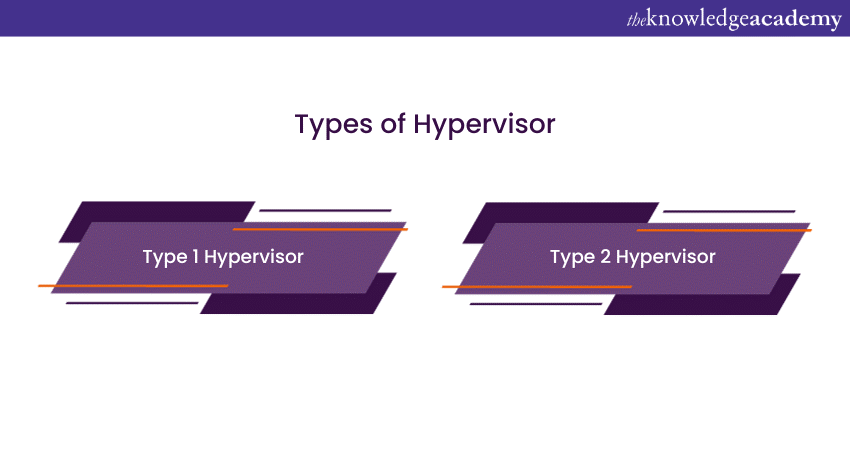

The primary role of the Hypervisor is to abstract and partition the underlying hardware resources, including central processing units (CPUs), memory, storage, and networking, and then distribute these resources to the virtual environments. There exist two distinct categories of hypervisors:

1) Type 1 Hypervisor: This type runs directly on the physical hardware, eliminating the need for a host operating system, which enhances both efficiency and security. Popular examples of Type 1 Hypervisors include VMware ESXi (the Hypervisor within VMware vSphere) and Microsoft Hyper-V.

2) Type 2 Hypervisor: Type 2 Hypervisors run on top of an existing operating system. They are user-friendly and convenient for development and testing environments. Examples include Oracle VirtualBox and VMware Workstation.


How does a Docker container work?

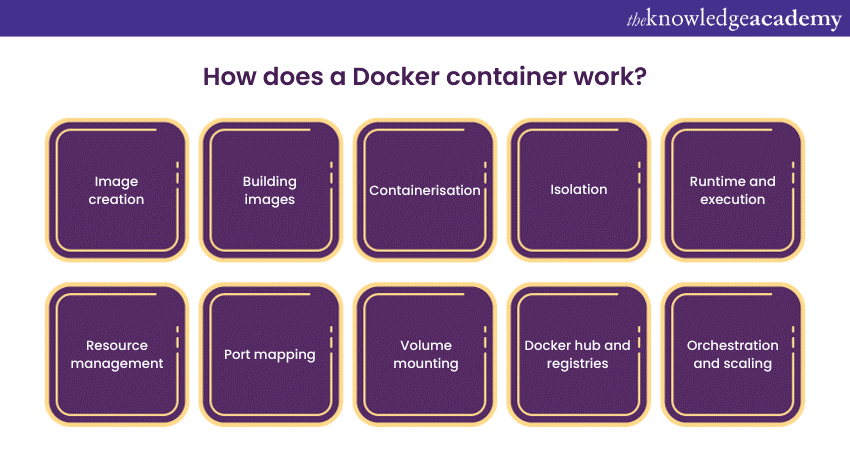

The following steps describe how a Docker container works:

Image creation

It all starts with creating a Docker image, which serves as the blueprint for a container. An image is a snapshot of a file system, along with an application and its dependencies. This snapshot is defined in a file called a "Dockerfile." The Dockerfile specifies the base image, any software to install, and the application code.
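To make the Dockerfile idea concrete, here is a minimal sketch that renders one as text. The `render_dockerfile` helper, the `/app` directory, and the `main.py` entry point are hypothetical choices for illustration; only the Dockerfile instructions themselves (`FROM`, `WORKDIR`, `RUN`, `COPY`, `CMD`) come from Docker.

```python
import json


def render_dockerfile(base_image, packages, app_dir="/app",
                      command=("python", "main.py")):
    """Return Dockerfile text: base image, software to install, application code."""
    lines = [f"FROM {base_image}", f"WORKDIR {app_dir}"]
    if packages:
        # Install dependencies in their own layer (assumes a Python base image).
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    lines.append("COPY . .")  # copy the application code into the image
    lines.append("CMD " + json.dumps(list(command)))  # exec-form start command
    return "\n".join(lines) + "\n"


# Hypothetical example: a small Flask application on an official Python image.
print(render_dockerfile("python:3.12-slim", ["flask"]))
```

The rendered text would normally be saved as a file named `Dockerfile` next to the application code.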

Building images

Once you have a Dockerfile, you use the Docker CLI to build an image. Docker will pull the necessary base image from a registry and execute the instructions in your Dockerfile to create a new image. This new image contains everything your application needs to run.
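As a rough illustration of the build step, the sketch below assembles a `docker build` command line without running it. The helper name `docker_build_command` and the tag `myapp:1.0` are hypothetical; `-t` (tag) and `-f` (Dockerfile path) are standard `docker build` options.

```python
import shlex


def docker_build_command(tag, context=".", dockerfile=None):
    """Build the argument list for `docker build`; nothing is executed here."""
    argv = ["docker", "build", "-t", tag]
    if dockerfile:
        argv += ["-f", dockerfile]  # only needed for a non-default Dockerfile path
    argv.append(context)  # the build context directory comes last
    return argv


cmd = docker_build_command("myapp:1.0")  # hypothetical image name and tag
print(shlex.join(cmd))  # docker build -t myapp:1.0 .
```

On a machine with Docker installed, passing `cmd` to `subprocess.run(cmd, check=True)` would start the actual build.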

Containerisation

To run an application in a container, you create an instance of an image. This instance is a running container. When you start a container, Docker allocates a portion of the host's resources, such as CPU, memory, and storage, to it. Containers share the host's OS kernel, making them lightweight and efficient.

Isolation

Containers are isolated from one another and the host system. Each container has its own file system, processes, and network stack. This isolation allows you to run multiple containers on the same host without them interfering with each other.

Runtime and execution

Docker uses the host OS's kernel to execute processes inside containers. Containers are designed to be ephemeral, which means that you can start, stop, and delete them without affecting the host or other containers.

Resource management

Docker provides fine-grained control over the resources allocated to containers. You can limit the amount of CPU, memory, and block I/O that a container can use. This resource control is helpful in ensuring fair allocation and preventing one container from monopolising resources.
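A short sketch of how such limits might be expressed on the command line follows. The helper `docker_run_with_limits` is hypothetical and the `nginx:alpine` image is only an example; `--cpus` and `--memory` are standard `docker run` options. The command is assembled, not executed.

```python
import shlex


def docker_run_with_limits(image, cpus=None, memory=None):
    """Assemble a `docker run` command with optional CPU and memory caps."""
    argv = ["docker", "run", "--rm"]
    if cpus is not None:
        argv += ["--cpus", str(cpus)]  # e.g. 1.5 means one and a half CPUs
    if memory is not None:
        argv += ["--memory", memory]   # e.g. "512m" or "2g"
    argv.append(image)
    return argv


print(shlex.join(docker_run_with_limits("nginx:alpine", cpus=1.5, memory="512m")))
# docker run --rm --cpus 1.5 --memory 512m nginx:alpine
```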

Port mapping

Docker allows you to map network ports on the host to ports in the container. This is particularly important for web applications, where you can expose a specific port in the container to the outside world. Port mapping enables the seamless interaction of containers with the host and external networks.
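The mapping can be sketched as a small helper that produces the `-p host:container` option of `docker run`. The function name `publish_flag` and the port numbers are illustrative assumptions; the command is only printed, not run.

```python
def publish_flag(host_port, container_port, protocol="tcp"):
    """Return the `-p` option that maps a host port to a container port."""
    for port in (host_port, container_port):
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port: {port}")
    return ["-p", f"{host_port}:{container_port}/{protocol}"]


# Hypothetical example: expose a web server's port 80 on host port 8080.
argv = ["docker", "run", "-d", *publish_flag(8080, 80), "nginx:alpine"]
print(" ".join(argv))  # docker run -d -p 8080:80/tcp nginx:alpine
```

With this mapping, a request to port 8080 on the host would reach the process listening on port 80 inside the container.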

Volume mounting

Docker provides a way to mount directories from the host system into containers. This is called volume mounting and is helpful for persisting data, configuration files, or other resources between container runs. It ensures that data can survive the lifecycle of a container.
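As an illustration, the helper below builds the `-v host_dir:container_dir` option that `docker run` accepts for mounting a host directory. The name `bind_mount_flag` and both paths are hypothetical, and the sketch assumes POSIX-style absolute paths on the host.

```python
from pathlib import PurePosixPath


def bind_mount_flag(host_dir, container_dir, read_only=False):
    """Return the `-v` option that mounts a host directory into a container."""
    host = PurePosixPath(host_dir)
    target = PurePosixPath(container_dir)
    if not host.is_absolute() or not target.is_absolute():
        raise ValueError("both paths must be absolute")
    spec = f"{host}:{target}"
    if read_only:
        spec += ":ro"  # the container can read the data but not modify it
    return ["-v", spec]


print(bind_mount_flag("/srv/app/data", "/var/lib/app"))
# ['-v', '/srv/app/data:/var/lib/app']
```

Because the data lives in the host directory, it remains available after the container is removed.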

Docker hub and registries

Docker Hub is a public registry where Docker images can be shared and accessed by the community. Docker images can also be stored in private registries. This facilitates collaboration and the distribution of containerised applications.
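Sharing an image through a registry usually means giving it a registry-qualified name and pushing it. The sketch below only assembles the `docker tag` and `docker push` commands; the helper names, the `team/myapp` repository, and the `registry.example.com` host are hypothetical placeholders.

```python
def qualify_image(repository, tag="latest", registry=None):
    """Build an image reference such as registry.example.com/team/myapp:1.0."""
    name = f"{repository}:{tag}"
    return f"{registry}/{name}" if registry else name


def push_commands(local_image, repository, tag, registry=None):
    """Return the `docker tag` and `docker push` commands; nothing is executed."""
    remote = qualify_image(repository, tag, registry)
    return [
        ["docker", "tag", local_image, remote],  # give the local image its remote name
        ["docker", "push", remote],              # upload it to the registry
    ]


# Hypothetical private registry host; omit `registry` to target Docker Hub.
for cmd in push_commands("myapp:1.0", "team/myapp", "1.0",
                         registry="registry.example.com"):
    print(" ".join(cmd))
# docker tag myapp:1.0 registry.example.com/team/myapp:1.0
# docker push registry.example.com/team/myapp:1.0
```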

Orchestration and scaling

For larger-scale deployments, Docker can be used in conjunction with container orchestration tools like Docker Swarm and Kubernetes. These tools help manage and scale containers across multiple hosts, ensuring high availability and load balancing.


Docker vs. Virtual Machine

The following table explains the differences between Docker and VMs across several aspects:

| Differences | Docker | Virtual Machine |
|---|---|---|
| Operating system | Docker uses a container-based model, where containers are software packages that run applications on any host with a compatible container runtime. Containers share the host OS kernel, allowing multiple workloads to run on a single OS. | Virtual Machines do not follow a container-based model. Each VM runs a complete guest OS, with its own kernel and user space, on top of a Hypervisor. VMs do not share the host kernel. |
| Performance | Docker containers offer high performance because they share the host operating system and need no additional software layer such as a Hypervisor. They also start quickly, with minimal boot-up time. | Virtual Machines generally perform worse because each one runs a separate OS instance, which increases resource consumption. They also take longer to start. |
| Portability | Docker containers provide excellent portability. Users can create an application, package it into a container image, and run it in any host environment with a compatible container runtime. Container images are smaller than VM images, which makes them simpler to transfer. | VMs face portability issues because they lack a central hub for sharing images. They need more storage space, and a VM image must include a full copy of the OS and its dependencies. This increases image size and complicates sharing. |
| Speed | Docker containers let applications start almost instantly because the host OS is already up and running. They were designed to speed up application deployment. | Virtual Machines take significantly longer to run applications. To deploy a single application, a VM must boot an entire OS first, which causes delays. |


Conclusion

The choice between Docker and VMs ultimately hinges on your specific application and its requirements. Site reliability engineers leverage Docker for containerisation to enhance system reliability. VMs provide strong isolation and support legacy applications but come with resource overhead and slower start times. Docker containers, by contrast, offer remarkable efficiency, portability, and faster deployment, making them ideal for modern microservices and DevOps environments. By considering performance, resource utilisation, and isolation, you can decide whether to opt for the robustness of VMs or the agility of Docker containers.

