Natural Language Processing (NLP) is a dynamic field within Artificial Intelligence (AI) that focuses on the interaction between computers and human languages. Building NLP Projects is a fantastic way for beginners and advanced learners alike to delve into the intricacies of language-based AI applications. In this blog, we'll explore 15 NLP Project ideas suitable for various skill levels, providing a roadmap for individuals keen on mastering NLP concepts and techniques.

Table of Contents

1) List of Top 15 NLP Project Ideas

a) Summarising Text

b) Identifying Toxic Comments

c) Named Entity Recognition (NER)

d) Spell and Grammar Checking

e) Analysing Sentiments

f) Filtering Spam Messages

g) Creating Titles for Research Papers

h) Identifying Languages

i) Generating Image Captions

j) Assisting with Homework

k) Topic modelling in NLP (LDA and NMF)

l) Analysing Speech Emotions

m) Image captioning using LSTM

n) Question answering with DistilBERT

o) Completing Masked Words with BERT

2) Conclusion

List of Top 15 NLP Project Ideas

Here are 15 of the top NLP Project Ideas to build on and enhance your NLP skills and knowledge:

Summarising text

Summarising text using NLP involves the application of algorithms and models to condense large volumes of text while retaining the essential information. One popular approach to text summarisation is extractive summarisation, which ranks and selects the most important sentences from the original text. The TextRank algorithm is commonly used for this: it builds a graph in which sentences are linked by their similarity, scores each sentence by its importance within that graph, and keeps the top-ranked sentences as a concise summary. Gensim included a TextRank summariser in releases before 4.0, and the algorithm is also simple enough to implement directly.
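As a rough illustration of the extractive approach, here is a minimal TextRank-style sketch using only the Python standard library. The function names, the naive sentence splitter, and the sample text are assumptions made for this example; a real project would use a proper tokeniser and a tested library implementation.

```python
import math
import re


def split_sentences(text):
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def word_set(sentence):
    return set(re.findall(r"\w+", sentence.lower()))


def similarity(a, b):
    """Word overlap normalised by sentence lengths, as in the TextRank paper."""
    words_a, words_b = word_set(a), word_set(b)
    if len(words_a) < 2 or len(words_b) < 2:
        return 0.0  # avoids dividing by log(1) = 0
    return len(words_a & words_b) / (math.log(len(words_a)) + math.log(len(words_b)))


def textrank_summary(text, n_sentences=2, damping=0.85, iterations=50):
    """Return the top-ranked sentences, kept in their original order."""
    sentences = split_sentences(text)
    n = len(sentences)
    if n <= n_sentences:
        return sentences
    # Weighted graph: edge weight = similarity between two different sentences.
    weights = [[similarity(sentences[i], sentences[j]) if i != j else 0.0
                for j in range(n)] for i in range(n)]
    out_sums = [sum(row) for row in weights]
    scores = [1.0] * n
    for _ in range(iterations):
        # Weighted PageRank update: each sentence receives a share of its neighbours' scores.
        scores = [
            (1 - damping) + damping * sum(
                weights[j][i] / out_sums[j] * scores[j]
                for j in range(n) if out_sums[j] > 0
            )
            for i in range(n)
        ]
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:n_sentences]
    return [sentences[i] for i in sorted(top)]


if __name__ == "__main__":
    sample = ("Text summarisation condenses long documents. "
              "Extractive summarisation selects important sentences from documents. "
              "Bananas are yellow. "
              "Abstractive summarisation writes new sentences.")
    print(textrank_summary(sample, n_sentences=2))
```

A sentence that shares no words with the rest of the text receives only the baseline score, so it is the first to be dropped from the summary.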

In contrast, abstractive summarisation techniques, often leveraging transformer models such as BART, T5, or GPT, aim to create new sentences that capture the original text's meaning. These models can generate human-like language, allowing for more contextually rich summaries. Summarising text with NLP is a valuable tool for information retrieval, enabling the efficient extraction of key insights from extensive content.

Identifying toxic comments

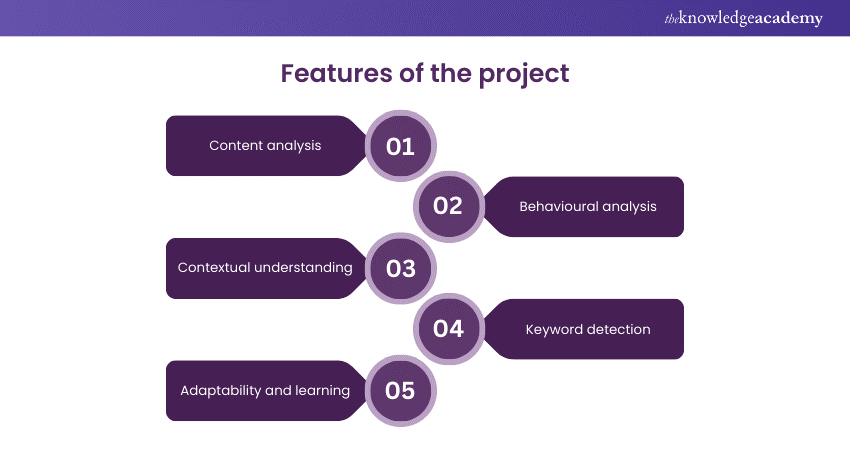

Identifying toxic comments using NLP is a crucial application to detect and mitigate harmful content in online discussions. Toxic comments can include hate speech, offensive language, or any form of content that can harm individuals or communities. NLP models automatically analyse and categorise text, distinguishing between normal and toxic language.

The process involves training machine learning models on labelled datasets containing examples of toxic and non-toxic comments. Techniques such as sentiment analysis, word embeddings, and deep learning architectures are often utilised to capture the nuanced nature of toxic language. The models learn patterns and linguistic features associated with toxicity, enabling them to classify comments in real time.

This application is particularly valuable for online platforms, social media, and forums where community guidelines aim to maintain a safe and respectful environment. By leveraging NLP, platforms can automatically filter or flag toxic comments, fostering a healthier online discourse and protecting users from harmful content.

Named Entity Recognition (NER)

Named Entity Recognition (NER) is a crucial task in NLP that involves identifying and classifying named entities in a given text. Named entities are real-world objects such as persons, organisations, locations, dates, etc. NER aims to extract structured information from unstructured text, making it valuable for various applications.

An NER model analyses the text and labels entities based on predefined categories. For instance, in the sentence "Steve Jobs founded Apple Inc. in Cupertino," NER would identify "Steve Jobs" as a person, "Apple Inc." as an organisation, and "Cupertino" as a location.

Implementing NER can involve rule-based systems, statistical models such as Conditional Random Fields (CRF), or deep learning models. Popular NLP libraries such as spaCy and NLTK provide pre-trained models for NER tasks, simplifying the integration of this technology into information extraction and analysis applications.
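To make the rule-based end of that range concrete, the sketch below tags entities by looking them up in a small dictionary of known names (a gazetteer). The gazetteer contents, the label names, and the `tag_entities` function are hypothetical and exist only for this example; trained models in libraries such as spaCy generalise to names they have never seen, which this lookup cannot do.

```python
import re

# Hypothetical toy gazetteer; a real system would use far larger lists or a trained model.
GAZETTEER = {
    "Steve Jobs": "PERSON",
    "Apple Inc.": "ORG",
    "Cupertino": "LOC",
}


def tag_entities(text, gazetteer=GAZETTEER):
    """Return (entity, label, start, end) tuples for every gazetteer match in text."""
    found = []
    taken = []  # character spans already claimed by a longer name
    # Try longer names first so "Apple Inc." wins over a shorter overlapping entry.
    for name in sorted(gazetteer, key=len, reverse=True):
        for match in re.finditer(re.escape(name), text):
            start, end = match.span()
            if all(end <= s or start >= e for s, e in taken):
                taken.append((start, end))
                found.append((name, gazetteer[name], start, end))
    return sorted(found, key=lambda item: item[2])


if __name__ == "__main__":
    for entity in tag_entities("Steve Jobs founded Apple Inc. in Cupertino."):
        print(entity)
```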

Spell and grammar checking

Spell and grammar checking using NLP is a crucial application that enhances the quality of written content by identifying and correcting spelling, grammar, and syntax errors. NLP algorithms leverage linguistic patterns and context to detect mistakes in written text, providing users with suggestions for corrections.

These systems employ rule-based approaches, statistical models, or a combination of both to analyse the input text. Rule-based systems use predefined grammatical rules and dictionaries to identify errors, whereas statistical models learn patterns from large datasets to predict the likelihood of certain words or phrases being correct.
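One simple way to combine a dictionary with word statistics is an edit-distance corrector in the style popularised by Peter Norvig: generate every string one edit away from the input and pick the known word with the highest count. The word counts below are hypothetical toy values, and the function names are assumptions for this sketch; a usable checker would derive its counts from a large corpus.

```python
from collections import Counter

# Hypothetical toy word counts; a real checker would count words in a large corpus.
WORD_COUNTS = Counter({
    "the": 50, "language": 12, "processing": 9,
    "natural": 8, "model": 7, "spelling": 5,
})

LETTERS = "abcdefghijklmnopqrstuvwxyz"


def edits1(word):
    """All strings one deletion, transposition, replacement or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [left + right[1] + right[0] + right[2:]
                  for left, right in splits if len(right) > 1]
    replaces = [left + c + right[1:] for left, right in splits if right for c in LETTERS]
    inserts = [left + c + right for left, right in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def correct(word, counts=WORD_COUNTS):
    """Return the most frequent known word within one edit, or the word unchanged."""
    word = word.lower()
    if word in counts:
        return word
    candidates = [w for w in edits1(word) if w in counts]
    if not candidates:
        return word
    return max(candidates, key=lambda w: counts[w])


if __name__ == "__main__":
    print(correct("langauge"), correct("modle"))
```

This sketch looks at single words in isolation, so it cannot catch grammar errors or real-word mistakes such as "their" for "there"; those need the contextual models described in this section.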

NLP-based spell and grammar checkers not only correct typographical errors but also offer contextual suggestions, making them more effective in understanding the intended meaning of a sentence. These tools improve communication, professional writing, and overall language accuracy in various domains, from document editing to online communication platforms. The continuous development of NLP models further refines these systems' accuracy and contextual understanding, making them indispensable for writers and content creators.

Analysing sentiments

Analysing sentiments using NLP involves the application of algorithms and techniques to determine the emotional tone expressed in a piece of text. Sentiment analysis is crucial in understanding public opinion, customer feedback, and social media sentiments. The process typically involves classifying the sentiment of text as positive, negative, or neutral.

In NLP, sentiment analysis can be implemented through various approaches, including machine learning models, rule-based systems, and deep learning methods. Machine learning models often use labelled datasets to train algorithms to recognise patterns in language indicative of specific sentiments. Rule-based systems rely on predefined rules and lexicons to assess sentiment, while deep learning methods, such as recurrent neural networks (RNNs) and transformers, can capture intricate contextual information for more nuanced sentiment analysis.
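The rule-based approach is the easiest of these to sketch. The example below sums word scores from a small lexicon and flips the score of a word that directly follows a negation. The lexicon, the negation list, and the `sentiment` function are hypothetical and deliberately tiny; published lexicons are far larger, and machine learning or transformer models handle context that this sketch ignores.

```python
import re

# Hypothetical toy lexicon: positive words score above zero, negative words below.
LEXICON = {
    "good": 1, "great": 2, "love": 2, "excellent": 2,
    "bad": -1, "poor": -1, "terrible": -2, "hate": -2,
}
NEGATIONS = {"not", "no", "never"}


def sentiment(text):
    """Classify text as 'positive', 'negative' or 'neutral' from summed word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # A negation immediately before a sentiment word flips its polarity.
        if i > 0 and tokens[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for review in ("The course was great and I love it",
                   "The service was not good",
                   "The parcel arrived on Tuesday"):
        print(review, "->", sentiment(review))
```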

Sentiment analysis has widespread applications, including brand monitoring, product reviews, social media monitoring, and market research. Businesses leverage sentiment analysis to gain insights into customer opinions, adapt marketing strategies, and enhance overall decision-making processes.

Filtering spam messages

Filtering spam messages using NLP involves employing computational linguistics techniques to distinguish between legitimate and unwanted messages. NLP algorithms can analyse text messages' content and linguistic features to identify patterns commonly associated with spam. This process often includes extracting features such as the frequency of certain words, the presence of hyperlinks, and the overall context.

By leveraging machine learning models trained on labelled datasets, NLP systems can learn to classify messages as spam or non-spam based on these features. Common techniques for spam filtering include using support vector machines, decision trees, or more advanced models like neural networks.
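The sketch below shows the train-then-classify workflow on word-frequency features. Note that it uses a multinomial naive Bayes classifier, a common baseline for spam filtering, rather than the support vector machines, decision trees, or neural networks named above, because naive Bayes fits in a few lines of standard-library Python. The class name and the six training messages are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes with add-one smoothing over word counts."""

    LABELS = ("spam", "ham")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.message_counts = Counter()

    def train(self, messages):
        """messages: iterable of (text, label); both labels must appear at least once."""
        for text, label in messages:
            self.message_counts[label] += 1
            self.word_counts[label].update(tokenize(text))

    def predict(self, text):
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_messages = sum(self.message_counts.values())
        scores = {}
        for label in self.LABELS:
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            # Log prior plus the log likelihood of each word given the label.
            score = math.log(self.message_counts[label] / total_messages)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Hypothetical labelled examples, far too few for real use.
TRAINING = [
    ("Win a free prize now", "spam"),
    ("Claim your free cash prize today", "spam"),
    ("Cheap loans click the link now", "spam"),
    ("Are we still meeting for lunch today", "ham"),
    ("Please review the project report", "ham"),
    ("See you at the meeting tomorrow", "ham"),
]

if __name__ == "__main__":
    spam_filter = NaiveBayesSpamFilter()
    spam_filter.train(TRAINING)
    print(spam_filter.predict("free prize waiting click now"))
    print(spam_filter.predict("lunch meeting tomorrow"))
```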

NLP-based spam filters continuously adapt and improve their accuracy as they encounter new examples, making them effective tools for mitigating the influx of unwanted and potentially harmful messages in various communication platforms, including email and messaging applications.

Creating titles for research papers

Creating titles for research papers using NLP involves leveraging algorithms and models to generate concise and informative titles based on the content of the papers. This task is particularly useful in academic and scientific fields where clear and relevant titles are crucial in attracting readers and conveying the essence of the research.

NLP models can analyse a research paper's abstract, key phrases, and main content to identify significant concepts and ideas. By understanding the context and extracting relevant information, these models generate titles that encapsulate the core contributions of the paper. This process not only aids researchers in crafting effective titles but also saves time and effort in manual title generation.

Implementations may vary, with approaches ranging from rule-based systems to advanced deep-learning models. Techniques such as named entity recognition, sentiment analysis, and summarisation can contribute to the precision and relevance of the generated titles. NLP-driven title creation enhances the accessibility and impact of research papers within academic and scientific communities.

Identifying languages

Identifying languages using NLP involves leveraging algorithms and models to automatically detect the language of a given text. This task is essential for various applications, such as content filtering, language-specific analysis, and multilingual support in software.

One common approach is to use character-level n-grams and statistical methods to determine the language of a text. Language identification models analyse patterns in the distribution of characters or words to make predictions. For instance, the frequency of certain letter combinations may differ between languages, aiding in accurate identification.
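A minimal sketch of the character n-gram idea follows: build a trigram frequency profile for each language from a sample text, then assign new text to the language whose profile is most similar by cosine similarity. The one-sentence training samples and the function names are assumptions for illustration only; real identifiers are trained on large corpora and are far more reliable on short inputs.

```python
import math
from collections import Counter

# Hypothetical, tiny training samples; real systems learn from large corpora per language.
SAMPLES = {
    "english": ("the quick brown fox jumps over the lazy dog and this is "
                "a simple sentence in the english language"),
    "french": ("le renard brun rapide saute sur le chien paresseux et ceci est "
               "une phrase simple dans la langue du pays"),
    "german": ("der schnelle braune fuchs springt auf den faulen hund und dies ist "
               "ein einfacher satz in der deutschen sprache"),
}


def trigram_profile(text):
    """Count character trigrams, padding with spaces so word edges are captured."""
    padded = " " + " ".join(text.lower().split()) + " "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a, b):
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


PROFILES = {lang: trigram_profile(text) for lang, text in SAMPLES.items()}


def identify_language(text):
    """Return the language whose trigram profile is closest to the text's profile."""
    profile = trigram_profile(text)
    return max(PROFILES, key=lambda lang: cosine(profile, PROFILES[lang]))


if __name__ == "__main__":
    for phrase in ("the dog is in the house",
                   "le chien est dans la maison",
                   "der hund ist in der stadt"):
        print(phrase, "->", identify_language(phrase))
```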

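Libraries such as langdetect, langid.py, and fastText provide pre-trained language identification models, and NLTK includes an n-gram-based TextCat classifier, so developers can add language detection to their applications with little effort. spaCy does not identify languages on its own, but extension packages can add a detection step to its pipelines. By accurately identifying languages, NLP systems can adapt their processing strategies, enabling effective communication and analysis across diverse linguistic contexts.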

Generating image captions

Generating image captions using NLP involves the integration of computer vision and language understanding to create descriptive textual explanations for images. This task has practical applications in making images more accessible to visually impaired individuals and enhancing content understanding in various domains.

In this process, a model is trained to analyse the content of an image and generate coherent and contextually relevant captions. Deep learning models are commonly employed, particularly those based on convolutional neural networks (CNNs) for image processing and recurrent neural networks (RNNs) for language generation.

During training, the model learns to associate visual features extracted from the image with corresponding textual descriptions. This enables it to generalise and generate captions for new, unseen images. The generated captions aim to capture the images' key elements, objects, and relationships, enriching the understanding of the visual content through natural language descriptions. Advances in transformer-based architectures, including pre-trained vision-language models, have further improved the quality and contextual relevance of generated image captions.

Assisting with homework

Assisting with homework using NLP involves leveraging computational linguistics to aid students in understanding and completing their assignments. NLP applications in this context encompass various functionalities, such as providing explanations, answering questions, and offering educational support.

For instance, NLP chatbots can engage students in natural language conversations, clarify concepts, offer hints, and guide them through problem-solving. NLP algorithms can be applied to analyse and interpret textual content from educational resources, enabling the extraction of relevant information for homework-related queries. Additionally, automatic summarisation techniques can condense complex material into more digestible formats, aiding students in quickly grasping key concepts.

Overall, integrating NLP into homework assistance tools enhances accessibility, fosters interactive learning experiences, and supports students in comprehending and completing assignments more effectively. This technological approach contributes to personalised and efficient educational support, catering to the diverse needs of students across various subjects and academic levels.

Topic modelling in NLP (LDA and NMF)

Topic modelling is a crucial application in NLP, and two popular techniques for this purpose are Latent Dirichlet Allocation (LDA) and Non-Negative Matrix Factorisation (NMF). These methods are employed to discover latent topics within a collection of text documents.

LDA is a generative probabilistic model that assumes each document is a mixture of topics and each topic is a mixture of words. LDA uncovers underlying themes present in the dataset by analysing the distribution of words across documents and topics. On the other hand, NMF factorises the given document-term matrix into two lower-dimensional matrices, one relating documents to topics and the other relating topics to words, with every entry constrained to be non-negative.
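The NMF half of this can be sketched without any libraries. Assuming a document-term matrix V, the code below looks for non-negative matrices W (documents by topics) and H (topics by terms) with V approximately equal to W times H, using the classic multiplicative update rules of Lee and Seung. The toy matrix, term list, and function names are hypothetical; in practice a library implementation such as scikit-learn's is the usual choice.

```python
import random


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def frobenius_error(v, w, h):
    """Squared reconstruction error between V and the product W x H."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, n_topics, iterations=200, seed=0, eps=1e-9):
    """Factorise V (docs x terms) into W (docs x topics) and H (topics x terms)."""
    rng = random.Random(seed)
    n_docs, n_terms = len(v), len(v[0])
    # Strictly positive random start; multiplicative updates keep entries non-negative.
    w = [[rng.random() + 0.1 for _ in range(n_topics)] for _ in range(n_docs)]
    h = [[rng.random() + 0.1 for _ in range(n_terms)] for _ in range(n_topics)]
    for _ in range(iterations):
        wt = transpose(w)
        numer, denom = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[k][j] * numer[k][j] / (denom[k][j] + eps)
              for j in range(n_terms)] for k in range(n_topics)]
        ht = transpose(h)
        numer, denom = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][k] * numer[i][k] / (denom[i][k] + eps)
              for k in range(n_topics)] for i in range(n_docs)]
    return w, h


# Hypothetical toy counts: rows are documents, columns follow TERMS.
TERMS = ["goal", "match", "team", "vote", "election", "party"]
V = [
    [2, 3, 1, 0, 0, 0],
    [1, 2, 2, 0, 0, 0],
    [0, 0, 0, 3, 1, 2],
    [0, 0, 0, 1, 2, 2],
]

if __name__ == "__main__":
    W, H = nmf(V, n_topics=2)
    for k, topic in enumerate(H):
        ranked = sorted(zip(TERMS, topic), key=lambda pair: pair[1], reverse=True)
        print("topic", k, [term for term, _ in ranked[:3]])
```

Each row of H can be read as a topic by listing its highest-weighted terms, and each row of W shows how strongly a document draws on each topic.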

Applying LDA and NMF to topic modelling projects involves preprocessing text data, constructing document-term matrices, and tuning parameters for optimal results. These techniques aid in extracting meaningful insights from large textual datasets, facilitating tasks such as content categorisation, trend analysis, and document summarisation in diverse fields such as journalism, academia, and business intelligence.

Analysing speech emotions

Analysing speech emotions using NLP involves the application of algorithms and models to decipher and understand the emotional content conveyed through spoken words. NLP techniques enable the extraction of emotional cues from speech patterns, tones, and linguistic features, identifying and classifying various emotions such as happiness, sadness, anger, and more.

One common approach to speech emotion analysis is using machine learning models, including deep learning architectures like Recurrent Neural Networks (RNNs) or Convolutional Neural Networks (CNNs). These models are trained on labelled datasets containing speech samples with annotated emotional states. The models can learn to associate acoustic characteristics with specific emotions through feature extraction and pattern recognition.

Speech emotion analysis has diverse applications, from customer service interactions and virtual assistants to mental health monitoring. By leveraging NLP techniques, researchers and developers aim to enhance human-computer interaction and create systems that can understand and respond appropriately to the emotional nuances present in spoken language.

Image captioning using LSTM

Image captioning using Long Short-Term Memory (LSTM) networks in NLP involves generating descriptive text for an image. LSTMs are a type of recurrent neural network (RNN) that excels at capturing dependencies in sequential data, making them suitable for tasks like language modelling.

The process begins by feeding an image into a convolutional neural network (CNN) to extract features. These features serve as the LSTM's input, and the LSTM then generates the caption one word at a time, with each predicted word conditioned on the image features and the words generated so far. During training, the model learns to associate visual features with the corresponding words.

This approach enables the creation of detailed and contextually relevant captions for images, showcasing the model's ability to understand the visual context. Image captioning with LSTM networks finds applications in areas such as computer vision, accessibility for visually impaired individuals, and enriching multimedia content with informative descriptions. Advanced models, such as those combining transformer architectures with image embeddings, further enhance the performance of image captioning systems in contemporary NLP applications.

Question answering with DistilBERT

Question Answering (QA) with DistilBERT, a distilled version of the Bidirectional Encoder Representations from Transformers (BERT) model, is a notable application in NLP. DistilBERT retains the powerful contextualised embeddings of BERT but in a more lightweight architecture, making it computationally efficient. In QA tasks, DistilBERT is fine-tuned on question-answering datasets such as SQuAD, where the model learns to locate the span of a context passage that answers a given question.

The model excels in comprehending the relationships between words and phrases, allowing it to deduce the most relevant information for a given question. DistilBERT's ability to draw on the words to both the left and the right of each token enables it to outperform traditional models in understanding the nuances of human language. Implementing QA with DistilBERT involves fine-tuning the pre-trained model on question-answer pairs, enabling it to generalise and answer new questions accurately based on its learned contextual representations. This approach showcases the effectiveness of transformer-based models in efficiently handling complex NLP tasks.

Completing masked words with BERT

Completing masked words, also known as masked language modelling, is the task of predicting missing or masked words within a sentence, and it is the objective BERT is pre-trained on. BERT, developed by Google, is a transformer-based model known for capturing context and bidirectional relationships in language understanding.

In this task, certain words in a sentence are replaced with [MASK] tokens, and the model is trained to predict the original words based on the context of the surrounding words. BERT's bidirectional nature allows it to consider the preceding and following words when making predictions, enabling a deeper understanding of language semantics.
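The data-preparation side of this task can be sketched in a few lines: choose some token positions at random, replace them with [MASK], and keep the original words as the labels the model must predict. The `mask_tokens` function and its parameters are assumptions for this example. BERT's original recipe selects about 15% of tokens and sometimes substitutes a random token or leaves the chosen token unchanged instead of masking it; that refinement is omitted here.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels) where labels maps position -> original token."""
    if not tokens:
        return [], {}
    rng = random.Random(seed)
    # Mask at least one token so that short sentences still yield a training example.
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]
        masked[pos] = MASK_TOKEN
    return masked, labels


if __name__ == "__main__":
    sentence = "the model predicts the missing word from its surrounding context".split()
    masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
    print(" ".join(masked))
    print(labels)
```

During training, the model sees the masked sequence and is scored only on the positions stored in `labels`.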

The language understanding that BERT gains from completing masked words has proven effective as a foundation for various applications, including text classification, question answering, and sentiment analysis. By leveraging pre-trained BERT models and fine-tuning them on specific tasks, researchers and practitioners can achieve robust performance with comparatively little task-specific data, contributing to advancements in NLP capabilities.

Conclusion

Embarking on NLP projects is an excellent way to enhance your skills, from basic text processing to advanced machine learning applications. These project ideas cater to various interests and skill levels, providing a comprehensive journey through the fascinating realm of Natural Language Processing. Whether you're a beginner eager to grasp the basics or an advanced learner seeking complex challenges, these NLP projects offer valuable hands-on experience in the world of language-based artificial intelligence.

Frequently Asked Questions

What are the Other Resources and Offers Provided by The Knowledge Academy?

The Knowledge Academy takes global learning to new heights, offering over 3,000 online courses across 490+ locations in 190+ countries. This expansive reach ensures accessibility and convenience for learners worldwide.

Alongside our diverse Online Course Catalogue, encompassing 19 major categories, we go the extra mile by providing a plethora of free educational Online Resources like News updates, Blogs, videos, webinars, and interview questions. Tailoring learning experiences further, professionals can maximise value with customisable Course Bundles of TKA.
