Deep Learning and Neural Networks are at the forefront of computer science and the IT industry, offering innovative speech recognition, image analysis, and natural language processing (NLP) solutions. Recent advancements have demonstrated AI's ability to learn complex tasks such as painting, 3D modelling, user interface design (e.g., pix2code), and generating graphics from text descriptions.

In this blog, we will explore the different Types of Neural Networks and provide a comprehensive understanding of how they function. Discover how these transformative technologies revolutionise various fields and unlock new possibilities across multiple applications.

Table of Contents

1) What are Neural Networks?

2) How do Neural Networks work?

3) Types of Neural Networks

4) Pros and Cons of Neural Networks

5) Conclusion

What are Neural Networks?

Neural Networks, inspired by the structure and functionality of the human brain, are a cornerstone of Artificial Intelligence (AI) and Machine Learning. These networks consist of interconnected nodes, or artificial neurons, organised into layers that process information in a way loosely modelled on biological Neural Networks. Each connection between neurons carries a weight, and these weights are adjusted during training so that the network's outputs become more accurate.

Neural Networks are highly effective at pattern recognition, making them ideal for tasks such as image and speech recognition, natural language processing, and decision-making. Deep learning, a subset of Machine Learning, often utilises complex neural network architectures like convolutional Neural Networks (CNNs) for image analysis and recurrent Neural Networks (RNNs) for handling sequential data. This ability to learn and adapt from data allows Neural Networks to tackle intricate tasks, driving significant advancements in AI applications.

How do Neural Networks work?

Neural Networks, inspired by the human brain, are key AI models composed of interconnected artificial neurons arranged in input, hidden, and output layers. Data enters through the input layer and is processed by hidden layers via weighted connections that adjust during training to optimise accuracy. During training, backpropagation calculates how much each weight contributed to the prediction error, and an optimisation algorithm such as gradient descent uses those gradients to update the weights and minimise the error. The output layer provides the final result, such as an image classification or a speech transcription.
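To make the training loop concrete, here is a minimal sketch of gradient descent on a single linear neuron (prediction = w * x + b) using mean squared error. The toy data, learning rate, and function name `train_linear_neuron` are illustrative assumptions. In a network with hidden layers, backpropagation generalises the gradient calculation shown here by applying the chain rule layer by layer.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=2000):
    w, b = 0.0, 0.0  # start from zero weight and bias
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction for every input
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Gradient descent: step each parameter against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```

Because the data is noise-free, the neuron recovers the generating weight and bias; with real data the loss would settle at a non-zero minimum instead.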

Deep learning extends Neural Networks with multiple hidden layers, enabling more complex feature extraction. Convolutional Neural Networks (CNNs) excel in image analysis, while Recurrent Neural Networks (RNNs) manage sequential data. Neural Networks' adaptability makes them effective across tasks like image recognition, speech processing, and natural language understanding.

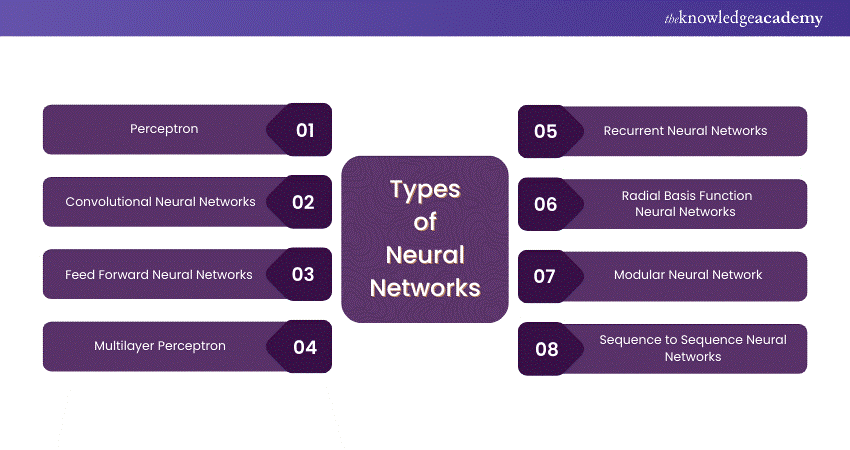

Types of Neural Networks

Here are the 8 basic Types of Neural Networks which are most used in the industry:

1) Perceptron

The Perceptron model, introduced by Frank Rosenblatt in 1958 and later analysed in depth by Marvin Minsky and Seymour Papert, is one of the earliest and simplest neural models. It serves as a foundational unit in Machine Learning. It computes a weighted sum of its inputs and compares it with a threshold, functioning as a Threshold Logic Unit (TLU) for binary decisions.

The Perceptron uses weighted inputs and an activation function to produce an output, making it suitable for binary classification tasks. It defines a hyperplane, represented by w⋅x+b=0, where w is the weight vector, x is the input vector, and b is the bias.

Advantages: Perceptrons can implement logic gates like AND, OR, and NAND.

Disadvantages: They handle only linearly separable problems and fail with non-linear cases like the XOR problem.
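Both the advantage and the limitation can be seen in a short sketch of the classic perceptron learning rule with a step activation. The learning rate, epoch count, and helper names are illustrative choices, not a reference implementation. Training succeeds for AND, OR, and NAND, but no setting of w and b can separate the XOR outputs with a single line, so XOR is never learned.

```python
def step(z):
    # Threshold activation: fire (1) when the weighted sum reaches 0
    return 1 if z >= 0 else 0

def predict(w, b, x):
    return step(w[0] * x[0] + w[1] * x[1] + b)

def train_perceptron(samples, lr=1.0, epochs=20):
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # 0 when correct, +1 or -1 when wrong
            # Perceptron rule: shift the hyperplane w·x + b = 0 towards the target
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
gates = {
    "AND": [0, 0, 0, 1],
    "OR": [0, 1, 1, 1],
    "NAND": [1, 1, 1, 0],
    "XOR": [0, 1, 1, 0],
}
results = {}
for name, targets in gates.items():
    w, b = train_perceptron(list(zip(inputs, targets)))
    results[name] = all(predict(w, b, x) == t for x, t in zip(inputs, targets))
    print(name, "learned" if results[name] else "not learned")
```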

2) Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are specialised for tasks like image processing, computer vision, speech recognition, and machine translation. Unlike standard Neural Networks, CNNs have a three-dimensional neuron arrangement, making them highly effective for visual data.

Structure:

a) Convolutional Layer: Filters extract features (edges, textures) from localised regions.

b) Pooling Layer: Reduces spatial dimensions, retaining essential information and lowering computational load.

c) Fully Connected Layer: Classifies images based on extracted features.

Operation:

a) Feature Extraction: Utilises filters to detect patterns and objects.

b) Activation Functions: ReLU introduces non-linearity, while softmax aids in multi-class classification.
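The convolution, ReLU, and pooling steps above can be sketched in plain Python on a tiny greyscale image. The 6x6 image and the 3x3 vertical-edge kernel are hypothetical; a real CNN learns its kernel values during training. As in most deep learning libraries, the "convolution" here is computed as cross-correlation (the kernel is not flipped), with stride 1 and no padding.

```python
def conv2d(image, kernel):
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    # Slide the kernel over every position and take the weighted sum of the region
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    # Non-linearity: keep positive responses, zero out negative ones
    return [[max(0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    # Keep the strongest response in each non-overlapping 2x2 block
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

# 6x6 image: dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Vertical-edge kernel: responds where intensity rises from left to right
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = relu(conv2d(image, kernel))  # 4x4 map, strongest along the edge
pooled = max_pool2x2(features)          # 2x2 map after pooling
print(features)
print(pooled)
```

The feature map lights up only in the columns where the dark-to-bright edge sits, and pooling halves each spatial dimension while keeping that response.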

Advantages: Efficient for deep learning with fewer parameters compared to fully connected layers.

Disadvantages: Complex to design and maintain, and can be slow with many hidden layers.
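To put the "fewer parameters" advantage into numbers, the sketch below counts weights and biases for a hypothetical 224x224 RGB input connected either to a fully connected layer of 64 units or to a convolutional layer of 64 filters of size 3x3. The layer sizes are assumptions chosen only for illustration.

```python
def dense_params(n_inputs, n_units):
    # One weight per input per unit, plus one bias per unit
    return n_inputs * n_units + n_units

def conv_params(kernel_h, kernel_w, in_channels, n_filters):
    # Each filter has kernel_h * kernel_w * in_channels weights plus one bias,
    # and the same filter is reused at every position in the image
    return (kernel_h * kernel_w * in_channels + 1) * n_filters

n_inputs = 224 * 224 * 3  # flattened pixels of a hypothetical RGB image
dense = dense_params(n_inputs, 64)
conv = conv_params(3, 3, 3, 64)
print(dense)  # → 9633856
print(conv)   # → 1792
```

The two layers do not produce outputs of the same shape, so this is not a like-for-like comparison of capacity; it shows how weight sharing keeps the parameter count independent of the image size.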

3) Feed Forward Neural Networks

Feed-forward Neural Networks (FFNNs) are foundational in neural network architecture. They are suitable for tasks like simple classification, face recognition, computer vision, and speech recognition. FFNNs pass data in one direction only, from input to output, which keeps computation fast, and they tend to tolerate noisy data well.

Structure:

FFNNs consist of input, output, and optional hidden layers. Data moves from input nodes through any hidden layers to output nodes.

Activation and Propagation:

Using forward propagation, data flows in one direction without feedback. Activation functions (e.g., step functions) determine neuron firing based on weighted inputs, outputting 1 or -1 based on thresholds.
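A minimal sketch of this one-way flow, assuming inputs encoded as 1 or -1 and the step activation described above. The weights are hypothetical and hand-picked rather than learned (the step function has no useful gradient, so this kind of network is not trained with backpropagation). With one hidden layer, the network computes XOR, the case a single perceptron cannot solve.

```python
def step(z):
    # Step activation: output 1 at or above the threshold (0), otherwise -1
    return 1 if z >= 0 else -1

def layer_forward(inputs, weights, biases):
    # Each neuron: weighted sum of all inputs plus its bias, then the step activation
    return [step(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]

def feed_forward(inputs, layers):
    activations = inputs
    for weights, biases in layers:  # data flows strictly from input to output
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical hand-picked weights: hidden neurons act like OR and NAND,
# and the output neuron combines them like AND, which yields XOR overall
layers = [
    ([[1, 1], [-1, -1]], [1, 1]),  # hidden layer: 2 neurons
    ([[1, 1]], [-1]),              # output layer: 1 neuron
]
for pair in [(-1, -1), (-1, 1), (1, -1), (1, 1)]:
    print(pair, feed_forward(list(pair), layers))
```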

Advantages: It is simple to design, fast due to one-way propagation, and effective with noisy data.

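Disadvantages: In the simple form described here, with few or no hidden layers and step activations, it cannot learn complex non-linear relationships, so it is not suited to deep learning on its own. Deeper feed-forward architectures, such as the Multi-Layer Perceptron, add dense hidden layers and are trained with backpropagation.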

4) Multilayer Perceptron

The Multi-Layer Perceptron (MLP) is an entry point into complex Neural Networks suited for tasks like speech recognition, machine translation, and complex classification. MLPs feature a multilayered structure with input, output, and multiple hidden layers, forming a fully connected network.

Operation:

a) Forward and Backward Passes: Forward propagation computes the outputs, and backpropagation then sends the error backwards through the network to work out how each weight should change.

b) Weight Adjustment: Weights are optimised during backpropagation to minimise prediction errors.

c) Activation Functions: Nonlinear functions enhance modelling capabilities, with softmax often used in the output layer for multi-class classification.
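The three steps above can be sketched for a tiny MLP with 2 inputs, 3 tanh hidden units, and a 2-class softmax output trained with cross-entropy loss. The layer sizes, the random initialisation, the example input, and the learning rate are all illustrative assumptions. The sketch runs one forward pass, backpropagates the error with the chain rule, and applies a single gradient descent update, after which the loss on that example should be lower.

```python
import math
import random

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def forward(params, x):
    W1, b1, W2, b2 = params
    # Hidden layer: fully connected, then tanh non-linearity
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bias)
         for row, bias in zip(W1, b1)]
    # Output layer: fully connected, then softmax over the classes
    logits = [sum(w * hj for w, hj in zip(row, h)) + bias
              for row, bias in zip(W2, b2)]
    return h, softmax(logits)

def loss(params, x, label):
    _, probs = forward(params, x)
    return -math.log(probs[label])  # cross-entropy for a single example

def backward(params, x, label):
    _, _, W2, _ = params
    h, probs = forward(params, x)
    # Softmax + cross-entropy: gradient w.r.t. the logits is probs - one_hot
    d_logits = [p - (1.0 if k == label else 0.0) for k, p in enumerate(probs)]
    dW2 = [[d * hj for hj in h] for d in d_logits]
    db2 = list(d_logits)
    # Chain rule: send the output error back through W2 and the tanh derivative
    d_h = [sum(W2[k][j] * d_logits[k] for k in range(len(W2))) for j in range(len(h))]
    d_pre = [dh * (1.0 - hj ** 2) for dh, hj in zip(d_h, h)]  # tanh' = 1 - tanh^2
    dW1 = [[d * xi for xi in x] for d in d_pre]
    db1 = list(d_pre)
    return [dW1, db1, dW2, db2]

def sgd_step(params, grads, lr):
    # Weight adjustment: move every parameter a small step against its gradient
    updated = []
    for p, g in zip(params, grads):
        if isinstance(p[0], list):  # weight matrix
            updated.append([[w - lr * gw for w, gw in zip(row, grow)]
                            for row, grow in zip(p, g)])
        else:  # bias vector
            updated.append([w - lr * gw for w, gw in zip(p, g)])
    return updated

random.seed(0)
params0 = [
    [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)],  # W1: 3 hidden x 2 inputs
    [0.0] * 3,                                                      # b1
    [[random.uniform(-1, 1) for _ in range(3)] for _ in range(2)],  # W2: 2 classes x 3 hidden
    [0.0] * 2,                                                      # b2
]
x, label = [0.5, -1.0], 1  # hypothetical training example
grads = backward(params0, x, label)
params1 = sgd_step(params0, grads, lr=0.1)
print(loss(params0, x, label), loss(params1, x, label))
```

Real training repeats this over many examples and epochs; libraries compute the same gradients automatically instead of by hand.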

Advantages: Effective for deep learning due to dense layers and backpropagation.

Disadvantages: It is complex to design and maintain, and performance can be slow depending on the number of hidden layers.

5) Recurrent Neural Networks

Recurrent Neural Networks (RNNs) excel at processing sequential data, making them ideal for tasks like language modelling, machine translation, speech recognition, and time-series prediction. Unlike Feed-Forward Neural Networks, RNNs have connections that loop back, allowing information to persist and capturing dependencies across time steps.

Operation:

a) Sequential Processing: The network processes an input sequence one step at a time, maintaining an internal (hidden) state that retains context from previous inputs.

b) Activation Functions: Utilises functions like tanh or ReLU to capture complex temporal patterns.
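A minimal sketch of this recurrence, assuming a single scalar hidden unit with hypothetical, untrained weights (a real RNN learns weight matrices). The same weights are reused at every time step, and the hidden state h carries context forward, so the same inputs in a different order produce a different final state.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    # w_x, w_h and b are hypothetical illustrative weights
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # h_t = tanh(w_x * x_t + w_h * h_(t-1) + b): new input mixed with remembered context
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

early = rnn_forward([1.0, 0.0, 0.0])  # signal arrives first
late = rnn_forward([0.0, 0.0, 1.0])   # same signal arrives last
print(early)
print(late)
```

In `early`, the state stays non-zero after the input drops to zero but shrinks at each step, a small-scale view of why information from distant time steps can fade.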

Advantages: Efficient for sequential data, capturing dependencies in time-based tasks.

Disadvantages: RNNs are prone to issues like vanishing or exploding gradients, making training challenging over long sequences. Additionally, they can be slower compared to simpler Neural Networks.

6) Radial Basis Function Neural Networks

Radial Basis Function Neural Networks (RBFNNs) are effective for pattern recognition, function approximation, and time-series prediction. They consist of three layers: input, hidden (radial basis function layer), and output.

Operation:

a) Hidden Layer: This layer uses radial basis functions (e.g., Gaussian) to measure the distance between input data and neuron centre points, determining neuron activation.

b) Output Layer: Combines activations linearly to produce the final output.
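The two layers can be sketched for one-dimensional inputs using Gaussian basis functions of the form exp(-(r / width)^2), where r is the distance to a centre. As a simplifying assumption, one centre is placed at each training point and the output weights are found by solving a small linear system (exact interpolation); practical RBFNNs usually pick fewer centres, for example by clustering. The data (samples of sin(x)) and the width are illustrative.

```python
import math

def rbf_features(x, centres, width):
    # Hidden layer: Gaussian activation based on the distance from x to each centre
    return [math.exp(-((x - c) / width) ** 2) for c in centres]

def solve(A, y):
    # Gaussian elimination with partial pivoting (fine for small dense systems)
    n = len(A)
    M = [row[:] + [v] for row, v in zip(A, y)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (M[r][n] - sum(M[r][k] * w[k] for k in range(r + 1, n))) / M[r][r]
    return w

def fit_rbf(xs, ys, width=1.0):
    # One centre per training point; output weights solve Phi w = y
    phi = [rbf_features(x, xs, width) for x in xs]
    return solve(phi, ys)

def predict_rbf(x, centres, weights, width=1.0):
    # Output layer: linear combination of the hidden activations
    return sum(w * a for w, a in zip(weights, rbf_features(x, centres, width)))

xs = [0.0, 1.0, 2.0, 3.0]
ys = [math.sin(x) for x in xs]
weights = fit_rbf(xs, ys)
print(predict_rbf(1.5, xs, weights))  # interpolated value between training points
```

Because only the output weights are fitted, training reduces to one linear solve, which is why RBF networks can train quickly on interpolation problems.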

Advantages: RBFNNs are highly efficient for interpolation tasks, offering fast training and the ability to handle non-linear data.

Disadvantages: Performance decreases with high-dimensional data, and selecting appropriate radial centres can be challenging, affecting overall accuracy. They are also less suitable for deep learning tasks than other Neural Networks.

7) Modular Neural Network

Modular Neural Networks (MNNs) consist of independent neural network modules working together to solve complex tasks. Each module handles a specific sub-task, and their outputs are combined for the final result, making MNNs suitable for tasks like pattern recognition, time-series prediction, and multi-object classification.

Operation:

a) Independent Modules: Each module processes input data separately, focusing on distinct features or tasks.

b) Integration: Outputs from modules are aggregated, typically using a master network or layer, to generate the final output.
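A minimal sketch of this structure, assuming each module is a hypothetical single linear neuron that sees only its own slice of the input features, and that integration is a fixed weighted sum. In practice each module would be a trained network and the combining weights might come from a learned gating or master network.

```python
def linear_module(weights, bias):
    # Hypothetical module: a single linear neuron over its own inputs
    def module(inputs):
        return sum(w * x for w, x in zip(weights, inputs)) + bias
    return module

def modular_network(x, modules, slices, combine_weights):
    # Independent modules: each one processes only its slice of the features
    outputs = [m(x[s]) for m, s in zip(modules, slices)]
    # Integration layer: weighted sum of the module outputs
    combined = sum(c * o for c, o in zip(combine_weights, outputs))
    return combined, outputs

modules = [linear_module([1.0, 1.0], 0.0), linear_module([2.0], -1.0)]
slices = [slice(0, 2), slice(2, 3)]  # module 1 sees features 0-1, module 2 sees feature 2
result, parts = modular_network([1.0, 2.0, 3.0], modules, slices, [0.5, 0.5])
print(parts, result)
```

Because the modules do not depend on each other's outputs, they could be trained or evaluated in parallel before the integration step.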

Advantages: Dividing complex tasks offers faster training, parallel processing, and improved scalability.

Disadvantages: Designing and integrating modules can be complex, and module interdependencies may affect overall performance if not well-coordinated.

8) Sequence to Sequence models

Sequence-to-sequence (Seq2Seq) models are Neural Networks designed to transform one sequence into another. They excel in tasks like machine translation, text summarisation, and speech recognition. They consist of two main components: an encoder and a decoder.

Operation:

a) Encoder: Processes the input sequence into a fixed-length context vector that captures essential information.

b) Decoder: Converts the context vector into the target sequence, generating outputs step-by-step.
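The encoder-decoder flow can be sketched with two tiny recurrent networks. All weight values and names below are hypothetical and untrained, so the outputs are meaningless numbers; the sketch only shows the structure. The encoder compresses an input of any length into a 2-element context vector, and the decoder starts from that vector, generating one output per step and feeding each output back in as its next input.

```python
import math

# Hypothetical, untrained weights for a 2-unit hidden state
ENC_WX = [0.6, -0.4]                # input -> hidden (encoder)
ENC_WH = [[0.5, 0.1], [-0.2, 0.4]]  # hidden -> hidden (encoder)
DEC_WY = [0.3, 0.2]                 # previous output -> hidden (decoder)
DEC_WH = [[0.7, -0.1], [0.2, 0.6]]  # hidden -> hidden (decoder)
DEC_OUT = [1.0, -0.5]               # hidden -> output (decoder read-out)

def rnn_step(W_h, h, w_in, x):
    # One recurrent update: h' = tanh(W_h h + w_in * x)
    return [math.tanh(sum(w * hj for w, hj in zip(row, h)) + wi * x)
            for row, wi in zip(W_h, w_in)]

def encode(sequence):
    h = [0.0, 0.0]
    for x in sequence:
        h = rnn_step(ENC_WH, h, ENC_WX, x)
    return h  # fixed-length context vector, whatever the input length

def decode(context, steps):
    h, y, outputs = list(context), 0.0, []  # y = 0.0 stands in for a start token
    for _ in range(steps):
        h = rnn_step(DEC_WH, h, DEC_WY, y)
        y = sum(w * hj for w, hj in zip(DEC_OUT, h))  # read-out layer
        outputs.append(y)  # this output becomes the next step's input
    return outputs

context = encode([3.0, 1.0, 4.0, 1.0, 5.0])
print(len(context), len(decode(context, 3)))  # → 2 3
```

Squeezing every input into the same small context vector is exactly the bottleneck that attention mechanisms were introduced to relieve.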

Advantages: Effective for handling variable-length input and output sequences, making them versatile for many sequential tasks.

Disadvantages: Performance can degrade with long sequences due to limited context retention. Training can be computationally intensive, and Seq2Seq models may struggle with complex dependencies without enhancements like attention mechanisms.

Pros and Cons of Neural Networks

Neural networks, a fundamental component of artificial intelligence, have seen widespread application, and they come with both advantages and drawbacks.

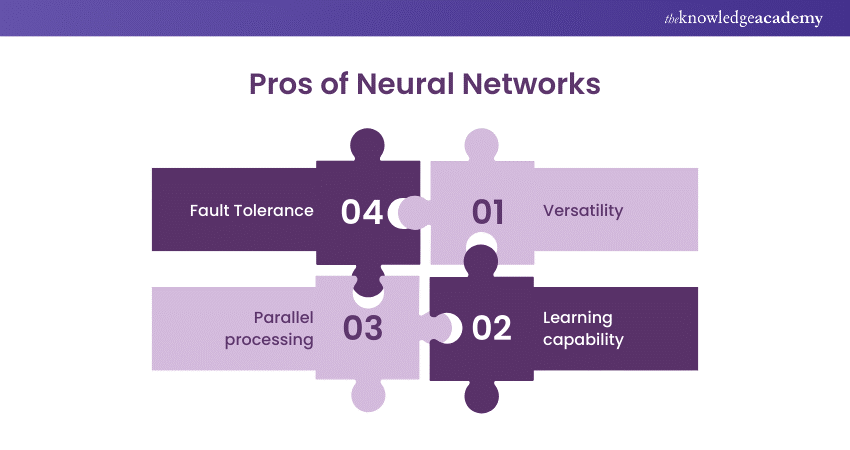

Pros of Neural Networks

a) Versatility: Neural networks exhibit remarkable versatility, applicable across various domains such as image and speech recognition, natural language processing, and game playing. Their ability to adapt makes them valuable in diverse scenarios.

b) Learning capability: Neural networks excel at learning from data patterns, adjusting their parameters based on experience. This enables them to enhance performance over time, making them suitable for tasks that involve complex relationships.

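c) Parallel processing: Many of a neural network's computations, such as those of the neurons within a layer, are independent of one another and can run in parallel, loosely mirroring the human brain's simultaneous handling of multiple inputs. On hardware such as GPUs, this accelerates computation and makes neural networks suitable for large-scale data processing tasks.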

d) Fault Tolerance: Neural networks demonstrate a degree of fault tolerance. Even with partial damage or loss of neurons, they can often continue functioning, contributing to their robustness in real-world applications.

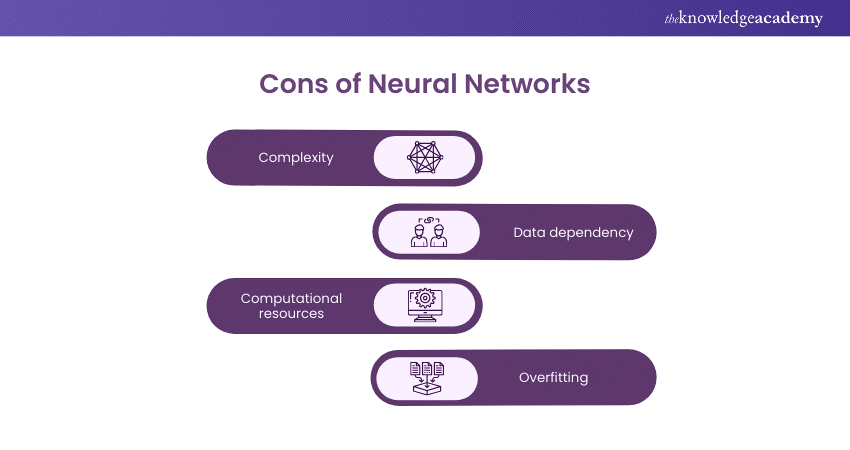

Cons of Neural Networks

a) Complexity: The inherent complexity of neural networks makes their decision-making processes difficult to understand. This lack of interpretability can be a significant drawback, particularly in critical applications where transparency is crucial.

b) Data dependency: Neural networks heavily rely on extensive, high-quality datasets for training. Inadequate or biased data can result in model inaccuracies and reinforce existing biases, limiting their reliability.

c) Computational resources: Training large neural networks demands substantial computational resources. This can be a barrier for smaller organisations or projects with limited access to powerful hardware.

d) Overfitting: Neural networks may overfit, meaning they become too closely tailored to the training data and struggle to generalise to new, unseen data. Balancing model complexity to prevent overfitting is a constant challenge.

Conclusion

Neural Networks come in various architectures, each suited for different tasks and complexities. From basic models like Perceptrons and Feed Forward Networks to advanced structures like CNNs, RNNs, and Seq2Seq models, these networks offer powerful solutions across AI applications. Understanding their unique features, advantages, and limitations is crucial for leveraging them effectively in real-world problems.

Frequently Asked Questions

Why are CNNs better than MLPs for image-related tasks?

CNNs are better than MLPs for image-related tasks because their convolutional layers efficiently capture spatial hierarchies and local patterns while sharing weights across the image, which greatly reduces the number of parameters. This makes CNNs more effective than MLPs at handling high-dimensional data such as images.

Why are Neural Networks used?

Neural Networks are used for their ability to learn complex patterns and relationships from data. They excel in tasks like image recognition, speech processing, natural language understanding, and decision-making, making them valuable for solving diverse problems across AI and Machine Learning.
