As organisations increasingly use AI to solve complex problems, the demand for AI talent has grown remarkably. Whether you're a job seeker aiming for a role in AI or an interviewer looking to identify top AI candidates, these Artificial Intelligence Interview Questions will give you a solid understanding of AI.

Covering topics from machine learning and natural language processing to ethics, these questions will equip you with the knowledge you need to excel in AI interviews and make informed hiring decisions. In this blog, we will go through the 40 most important Artificial Intelligence Interview Questions, from basic to advanced level. Read on to learn more!

Table of Contents

1) Artificial Intelligence basic interview questions

2) Machine learning questions

3) Deep learning questions

4) NLP questions

5) Ethics and impact questions

6) AI tools and frameworks questions

7) Conclusion

Artificial Intelligence basic interview questions

Artificial Intelligence (AI) is a transformative field that continues to reshape industries and the way we interact with technology. In AI interviews, candidates often face fundamental questions that assess their understanding of key concepts, principles, and their problem-solving abilities. Following are some of the fundamental questions and answers on AI:

1) What is Artificial Intelligence?

Artificial Intelligence is a broad field of computer science that aims to create machines and software capable of performing tasks that usually require human intelligence. These tasks cover a wide range of activities, including problem-solving, pattern recognition, decision-making, and understanding natural language. AI systems are usually designed to simulate human cognitive functions, learning from data and improving their performance over time.

AI can be categorised into different levels of intelligence. Narrow or Weak AI refers to systems designed for specific tasks, such as voice assistants or recommendation engines. In contrast, General or Strong AI aims to possess human-level intelligence, allowing machines to handle a broad spectrum of tasks as proficiently as humans.

2) How does robotics relate to Artificial Intelligence?

Robotics is a field that often integrates Artificial Intelligence to create intelligent machines known as robots. AI plays a pivotal role in robotics by enabling robots to perceive their environment, process sensory information, make decisions, and execute tasks autonomously.

Through AI algorithms, robots can interpret sensor data from their surroundings, allowing them to navigate, avoid obstacles, and interact with their environment effectively. Machine learning and computer vision are often employed to help robots learn from their experiences and adapt to changing conditions.

3) Explain the relationship between Artificial Intelligence and Machine Learning.

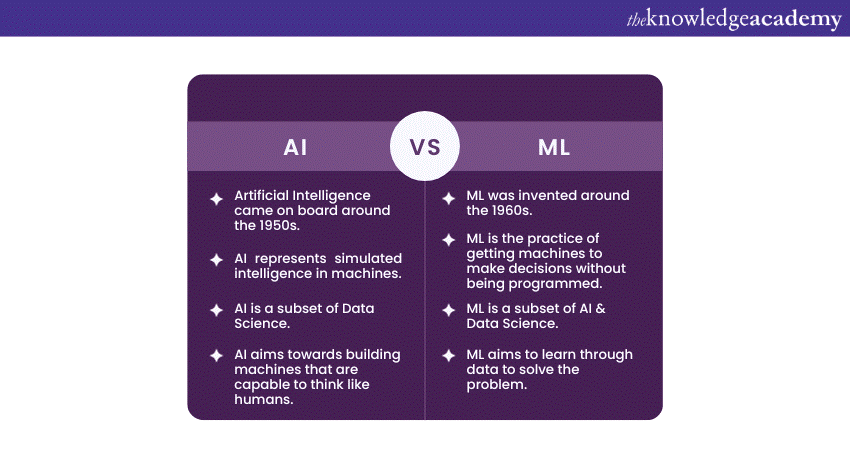

AI and ML are closely related concepts, with ML being a subset of AI. AI has the broader scope, covering any computer system that can perform tasks requiring human intelligence.

Machine Learning, on the other hand, focuses specifically on developing algorithms and statistical models that enable computers to improve their performance on a given task through experience or data. ML algorithms are designed to learn patterns, make predictions, and adapt based on feedback from the data they process.

4) What is Artificial Intelligence software?

Artificial Intelligence software refers to applications and programs that use AI techniques and algorithms to perform specific tasks or solve complex problems. These systems are designed to replicate human-like cognitive functions and span a wide range of applications, including NLP, computer vision, speech recognition, and data analysis.

AI software can be found in various domains, from virtual personal assistants like Siri or Google Assistant to advanced analytics tools that can process massive datasets and identify insights that may not be apparent to humans. It's crucial to note that AI software often relies on machine learning models to make predictions or decisions based on patterns and data.

5) What are the different types of Artificial Intelligence?

Artificial Intelligence is commonly categorised into three types based on its capabilities:

Artificial Narrow Intelligence (ANI), also called narrow or weak AI: This type of AI is designed for specific tasks and operates within a limited domain. Examples include voice assistants like Siri and recommendation systems used by streaming platforms. These systems are highly specialised and cannot transfer knowledge to other domains.

Artificial General Intelligence (AGI), also called general or strong AI: AGI, sometimes referred to as "true AI" or "full AI", represents a form of AI with human-level intelligence that could learn and apply knowledge across diverse domains, much as humans do. As of now, AGI remains a theoretical concept.

Artificial Superintelligence (ASI): ASI represents AI that surpasses human intelligence in every aspect, including creativity, problem-solving, and decision-making. This concept remains speculative and is the subject of philosophical debate.

6) What are some common applications of Artificial Intelligence in various industries?

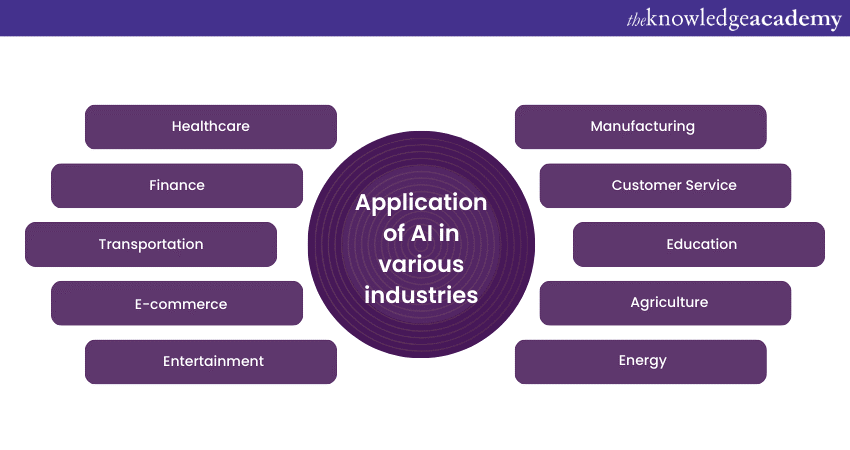

Artificial Intelligence has found extensive applications across diverse industries, transforming the way businesses operate and improving efficiency and decision-making. Some common applications include:

a) Healthcare: AI is used for disease diagnosis, drug discovery, personalised medicine, and medical image analysis.

b) Finance: AI is employed in algorithmic trading, fraud detection, credit scoring, and customer service chatbots.

c) Transportation: AI plays a role in autonomous vehicles, traffic management, and route optimisation.

d) E-commerce: AI is used for product recommendations, chatbots, and supply chain optimisation.

e) Entertainment: AI-driven content recommendation systems personalise user experiences in streaming platforms.

f) Manufacturing: AI is utilised for quality control, predictive maintenance, and automation in production lines.

g) Customer Service: Chatbots and virtual assistants provide automated customer support.

h) Education: AI can personalise learning experiences through adaptive learning platforms.

i) Agriculture: AI helps optimise crop management, pest control, and yield prediction.

j) Energy: AI optimises energy consumption in smart grids and predicts equipment maintenance needs.

The versatility of AI continues to expand, with new applications emerging as technology advances. AI is expected to have a profound impact on various sectors, enhancing productivity and innovation.

7) How does Artificial Intelligence differ from human intelligence?

Artificial Intelligence (AI) and human intelligence differ significantly across several dimensions. AI derives its capabilities from algorithms and data, while human intelligence arises from the intricate biological structure of the brain and a lifetime of experience. AI processes information at remarkable speed and can produce highly consistent results, whereas human performance varies with context and individual ability.

AI excels at processing vast datasets quickly, whereas humans can handle far less data at once. However, AI lacks genuine creativity and insight: it relies on programmed rules or on patterns learned from data, whereas human intelligence shows deep creativity, emotional intelligence, and common-sense reasoning. Humans also adapt through learning and personal growth and possess self-awareness, morality, and empathy, while AI has no consciousness or ethical values of its own.

8) What is the relationship between Artificial Intelligence and cybersecurity?

Artificial Intelligence (AI) and cybersecurity are two distinct but increasingly intertwined fields within technology and information management. Here is how they differ and where they overlap:

a) Nature and purpose

AI is a broader field encompassing the development of algorithms and systems that can mimic human-like intelligence, perform tasks, and make decisions based on data. It aims to enhance automation, data analysis, and problem-solving across various domains.

Cybersecurity, on the other hand, is a specialised domain focused solely on safeguarding digital systems, networks, and data from unauthorised access and breaches. Its primary purpose is to ensure the confidentiality, integrity, and availability of information.

b) Functionality

AI uses machine learning, deep learning, and natural language processing to enable systems to learn from data and adapt to evolving circumstances.

In cybersecurity, AI is increasingly employed to detect and respond to threats more effectively by analysing vast datasets and identifying patterns indicative of malicious activities.

c) Goal

AI seeks to optimise processes, enhance user experiences, and create intelligent, autonomous systems. It may not inherently prioritise security unless applied within a cybersecurity context.

Cybersecurity's primary goal is to protect sensitive information, networks, and systems from various threats, including cyberattacks, data breaches, and vulnerabilities. It is fundamentally focused on risk mitigation.

d) Implementation

AI can be deployed across various industries and sectors, including healthcare, finance, manufacturing, and more, to improve efficiency and decision-making.

Cybersecurity is specifically implemented within organisations and institutions to safeguard their digital assets and operations.

e) Overlap

There is an increasing overlap between AI and cybersecurity, with AI being used to bolster cybersecurity measures. AI-driven tools can identify and respond to security threats in real-time, enhancing overall cybersecurity posture.

Machine learning questions

Machine learning, a subfield of Artificial Intelligence, has transformed how computers learn from data and make predictions or decisions. Here are some key interview questions in the field of machine learning:

9) What is supervised learning? Give an example.

Supervised learning within the realm of machine learning involves training algorithms using labelled datasets, where input-output pairs (comprising features and their corresponding target values) are provided. The objective is to acquire a mapping function capable of predicting target outputs for unseen data. Supervised learning can be classified into two primary categories: classification and regression.

Example: Consider a spam email filter. In this case, the algorithm is trained on a dataset of emails where each email is labelled as either "spam" or "not spam" (binary classification). It learns to identify patterns and characteristics of spam emails based on features like keywords, sender information, and email content. Once trained, it can classify incoming emails as either spam or not spam based on the learned patterns.
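The spam-filter example can be made concrete with a minimal sketch. The source does not name an algorithm, so this assumes multinomial Naive Bayes with Laplace smoothing as one simple supervised learner; the six training emails, the whitespace tokeniser and the function names (`train_naive_bayes`, `predict`) are all hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple feature extractor)."""
    return text.lower().split()


def train_naive_bayes(emails):
    """Fit a multinomial Naive Bayes model on labelled (text, label) pairs."""
    word_counts = {"spam": Counter(), "not spam": Counter()}
    label_counts = Counter()
    for text, label in emails:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["not spam"])
    return word_counts, label_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability for an unseen email."""
    word_counts, label_counts, vocab = model
    total_emails = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in label_counts:
        score = math.log(label_counts[label] / total_emails)  # log prior P(label)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Laplace (add-one) smoothing stops unseen words from zeroing the probability
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labelled training data: (input features as text, target label)
training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("cheap prize offer click now", "spam"),
    ("meeting moved to monday", "not spam"),
    ("please review the project report", "not spam"),
    ("lunch with the team on friday", "not spam"),
]

model = train_naive_bayes(training_data)
print(predict(model, "free prize inside"))          # → spam
print(predict(model, "project meeting on monday"))  # → not spam
```

Neither test email appears in the training set; the model labels them from word patterns it learned from the labelled examples, which is the essence of supervised learning.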

10) Explain the bias-variance trade-off in machine learning.

The bias-variance trade-off is a basic concept in machine learning that relates to a model's ability to generalise from the training data to unseen data. It represents a trade-off between two sources of error:

a) Bias: High bias occurs when a model is too simple and makes strong assumptions about the data. This leads to underfitting: the model cannot capture the patterns in the data, so it performs poorly on both the training and test datasets.

b) Variance: High variance occurs when a model is too complex and overly flexible. Such a model fits the noise in the training data, so it performs well on the training dataset but generalises poorly to new data. This is overfitting.

Balancing bias and variance is essential to create a model that generalises well. Reducing one usually increases the other, so the goal is to find the level of model complexity that minimises the total error on new, unseen data rather than driving either term to zero.
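For squared-error loss, the trade-off has a standard mathematical form. Assuming the data are generated as y = f(x) + ε, where ε is zero-mean noise with variance σ², and taking expectations over possible training sets and the noise, the expected test error of a learned model \hat{f} at a point x splits into three parts:

```latex
\mathbb{E}\left[\left(y - \hat{f}(x)\right)^2\right]
  = \underbrace{\left(\mathbb{E}[\hat{f}(x)] - f(x)\right)^2}_{\text{Bias}^2}
  + \underbrace{\mathbb{E}\left[\left(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\right)^2\right]}_{\text{Variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

An overly simple model tends to inflate the first term, an overly flexible one the second, and no model can remove the third.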

11) What is feature engineering in Machine Learning?

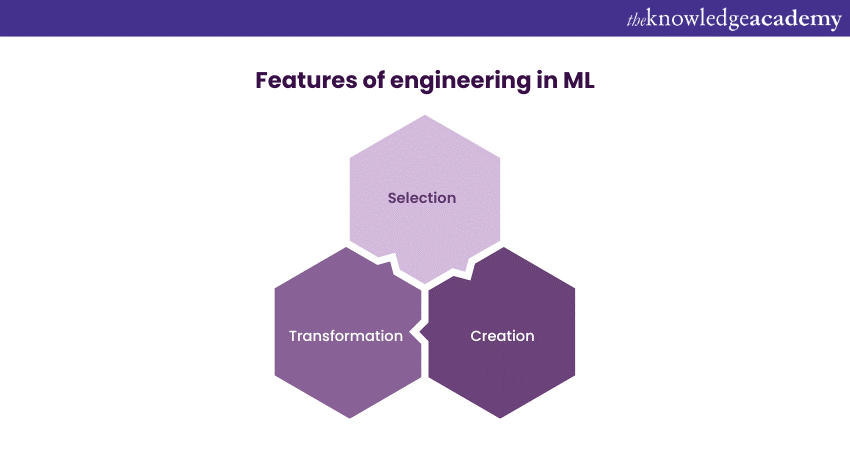

Feature engineering transforms or creates input features (variables) for a machine learning model to improve its performance. It involves identifying relevant information within the raw data and representing it in a way that the model can better understand and utilise.

Key aspects of feature engineering include:

a) Selection: Choosing the most relevant features to include in the model, discarding irrelevant or redundant ones to simplify the model and reduce noise.

b) Transformation: Applying mathematical operations like scaling, normalisation, or logarithmic transformations to make the data more suitable for modelling.

c) Creation: Generating new features based on domain knowledge or by combining existing features to capture meaningful patterns and relationships in the data.

Effective feature engineering can significantly enhance a model's performance by providing it with the right information to make accurate predictions.
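A minimal sketch of the three aspects applied to a few hypothetical house records. The column names, the choice of min-max scaling and a log transform, and the `engineer_features` helper are illustrative assumptions rather than a prescribed recipe.

```python
import math

# Hypothetical raw records for a house-price model
houses = [
    {"id": 101, "area_sqft": 850, "bedrooms": 2, "lot_sqft": 2_000},
    {"id": 102, "area_sqft": 1_500, "bedrooms": 3, "lot_sqft": 5_000},
    {"id": 103, "area_sqft": 2_400, "bedrooms": 4, "lot_sqft": 20_000},
]


def engineer_features(records):
    """Turn raw records into model-ready features."""
    areas = [r["area_sqft"] for r in records]
    lo, hi = min(areas), max(areas)
    features = []
    for r in records:
        features.append({
            # Selection: keep useful columns; "id" carries no signal and is dropped
            "bedrooms": r["bedrooms"],
            # Transformation: min-max scale the area into the range [0, 1]
            "area_scaled": (r["area_sqft"] - lo) / (hi - lo),
            # Transformation: log-compress a heavily skewed column
            "log_lot": math.log(r["lot_sqft"]),
            # Creation: a new ratio feature built from two existing columns
            "sqft_per_bedroom": r["area_sqft"] / r["bedrooms"],
        })
    return features


features = engineer_features(houses)
for row in features:
    print(row)
```

In a real pipeline the scaling bounds (`lo`, `hi`) would be computed on the training data only and reused on test data, so that no information leaks from the test set.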

12) Differentiate between classification and regression in ML.

Classification and regression are two different types of supervised learning tasks in machine learning:

Classification: Its goal is to predict a categorical or discrete target variable. The algorithm assigns input data points to predefined classes or categories. Common examples include spam detection (binary classification - spam or not spam) and image classification (multi-class classification - recognising different objects in images).

Regression: Regression, on the other hand, deals with predicting a continuous numerical target variable. It aims to estimate a real-valued output based on input features. Examples include predicting house prices based on features like square footage, number of bedrooms, and location, or forecasting stock prices over time.

The main difference lies in the nature of the target variable: classification deals with categories, while regression deals with numerical values. Each type of task uses different algorithms and evaluation metrics; for example, accuracy or F1 score for classification, and mean squared error for regression.
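A minimal sketch contrasting the two task types on toy, hypothetical data: ordinary least-squares linear regression returns a real number, while a 1-nearest-neighbour classifier returns a category. Both algorithms and the helper names are chosen purely for brevity.

```python
def fit_linear_regression(xs, ys):
    """Ordinary least squares with one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def nearest_neighbour_classify(train, x):
    """1-nearest-neighbour: return the class label of the closest training point."""
    closest = min(train, key=lambda pair: abs(pair[0] - x))
    return closest[1]


# Regression: continuous target (hypothetical price in thousands vs area in 100 sqft)
areas = [8, 10, 12, 14]
prices = [160, 200, 240, 280]
slope, intercept = fit_linear_regression(areas, prices)
print(slope * 11 + intercept)  # → 220.0, a real-valued estimate

# Classification: discrete target (hypothetical "spam score" feature -> label)
labelled = [(0.1, "not spam"), (0.2, "not spam"), (0.8, "spam"), (0.9, "spam")]
print(nearest_neighbour_classify(labelled, 0.75))  # → spam, a category
```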

13) What is the purpose of cross-validation in machine learning?

Cross-validation is a crucial technique in machine learning used to assess a model's performance, generalisation capability, and robustness. Its primary purpose is to provide a more reliable estimate of a model's performance on unseen data than traditional single-split validation.

The key steps in cross-validation are as follows:

a) Data splitting: The dataset is divided into multiple subsets or folds. Typically, it's divided into k equal-sized folds.

b) Training and testing: The model is trained on k-1 of the folds and evaluated on the remaining fold. This cycle is repeated k times so that each fold serves as the test set exactly once.

c) Performance evaluation: The model's performance is evaluated for each fold, resulting in k performance scores (e.g., accuracy, mean squared error). These scores are then averaged to obtain a more reliable estimate of the model's performance.
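The three steps can be sketched as follows, assuming mean squared error as the score and a deliberately trivial model that always predicts the training mean. The folds are contiguous for readability, whereas in practice the data is usually shuffled first; all function names are hypothetical.

```python
def k_fold_indices(n, k):
    """Step a: split indices 0..n-1 into k contiguous folds of (nearly) equal size."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(xs, ys, k, fit, predict):
    """Steps b and c: train on k-1 folds, score the held-out fold, average k scores."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        model = fit(train_x, train_y)
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in test_idx]
        scores.append(sum(errors) / len(errors))  # MSE on this fold
    return scores, sum(scores) / k


def fit_mean(train_x, train_y):
    """A baseline 'model': just remember the mean training target."""
    return sum(train_y) / len(train_y)


def predict_mean(model, x):
    return model


xs = list(range(10))
ys = [2.0 * x + 1.0 for x in xs]
fold_scores, mean_score = cross_validate(xs, ys, k=5, fit=fit_mean, predict=predict_mean)
print(fold_scores, mean_score)
```

Swapping in a real model only requires different `fit` and `predict` functions, which is why cross-validation is a convenient way to compare models on equal terms.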

Deep learning questions

Deep learning, a subfield of machine learning built on multi-layer Neural Networks, has driven remarkable advances in AI. Some of the most important questions and answers on this topic are as follows:

14) What is a Neural Network?

A Neural Network is a computational framework inspired by the human brain's structure and function. It comprises interconnected nodes, often called artificial neurons or perceptrons, arranged in layers: an input layer, one or more hidden layers, and an output layer. Neural Networks are applied to diverse machine learning tasks, such as classification, regression, and pattern recognition.

In a Neural Network, information flows through these interconnected neurons. Each neuron multiplies its inputs by weights, sums them, and passes the result through an activation function to produce an output. During training, the network adjusts these weights to minimise the difference between its predictions and the actual target values.
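A minimal sketch of the forward computation described above, assuming sigmoid activations and a bias term added to each weighted sum (a standard addition the text does not mention). The weights are arbitrary hypothetical values; in a trained network they would be learned.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer(inputs, weight_rows, biases, activation):
    """A fully connected layer is several neurons reading the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Hypothetical weights for a 3-input -> 2-hidden -> 1-output network
x = [0.5, -1.0, 2.0]
hidden = layer(x, [[0.2, 0.4, -0.1], [-0.3, 0.1, 0.5]], [0.0, 0.1], sigmoid)
output = neuron(hidden, [1.5, -2.0], 0.2, sigmoid)
print(hidden, output)
```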

15) Explain backpropagation in deep learning.

Backpropagation, or "backward propagation of errors," is a key algorithm in training Neural Networks, especially deep Neural Networks. It's used to adjust the weights of neurons in the network to minimise the error between predicted and actual target values.

The process can be broken down into the following steps:

Forward pass: Input data is passed through the network, and predictions are generated.

Error calculation: The error or loss between the predicted output and the actual target is computed using a loss function (e.g., mean squared error for regression tasks or cross-entropy for classification tasks).

Backward pass (backpropagation): The gradient of the loss with respect to each weight in the network is computed. The error is propagated in reverse, from the output layer through the hidden layers, by applying the chain rule of calculus.

Weight adjustment: Each weight is updated in the direction opposite to its gradient to reduce the loss. Optimisation algorithms such as stochastic gradient descent (SGD), or variants like Adam, are typically used for these updates.

Iterative process: The forward pass, error calculation, backward pass, and weight adjustment are repeated for a fixed number of epochs or until the loss converges to a satisfactory level.

Backpropagation allows deep Neural Networks to learn and adapt to complex patterns in data by iteratively adjusting their weights. This process enables them to make accurate predictions and representations for a wide range of tasks.
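To make these steps concrete, here is a minimal sketch of backpropagation for a tiny network with two inputs, two sigmoid hidden units and one sigmoid output, trained on the logical OR function. The network size, squared-error loss, learning rate of 0.5, random seed and the `TinyNet` class are illustrative assumptions, and plain per-example gradient descent stands in for a full optimiser such as Adam.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]  # w1[j][i]: input i -> hidden j
        self.b1 = [0.0, 0.0]
        self.w2 = [rng.uniform(-1, 1) for _ in range(2)]  # w2[j]: hidden j -> output
        self.b2 = 0.0

    def forward(self, x):
        h = [sigmoid(self.w1[j][0] * x[0] + self.w1[j][1] * x[1] + self.b1[j]) for j in range(2)]
        y = sigmoid(self.w2[0] * h[0] + self.w2[1] * h[1] + self.b2)
        return h, y

    def train_step(self, x, target, lr=0.5):
        # Forward pass
        h, y = self.forward(x)
        # Error calculation: squared-error loss L = 0.5 * (y - target)^2
        loss = 0.5 * (y - target) ** 2
        # Backward pass: chain rule gives dL/dz at the output, then at each hidden unit
        delta_out = (y - target) * y * (1 - y)
        delta_hidden = [delta_out * self.w2[j] * h[j] * (1 - h[j]) for j in range(2)]
        # Weight adjustment: move every parameter against its gradient
        for j in range(2):
            self.w2[j] -= lr * delta_out * h[j]
            self.b1[j] -= lr * delta_hidden[j]
            for i in range(2):
                self.w1[j][i] -= lr * delta_hidden[j] * x[i]
        self.b2 -= lr * delta_out
        return loss


# Logical OR as a toy dataset
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
net = TinyNet(seed=42)
epoch_losses = []
# Iterative process: repeat for a fixed number of epochs
for epoch in range(2000):
    epoch_losses.append(sum(net.train_step(x, t) for x, t in data))
print(f"first epoch loss {epoch_losses[0]:.4f}, last epoch loss {epoch_losses[-1]:.4f}")
```

Note that the hidden-layer deltas are computed before `w2` is updated, so every gradient in a step refers to the same set of weights.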

16) What is an activation function in a Neural Network?

In a Neural Network, an activation function is applied to the weighted sum computed by each neuron in the hidden and output layers, producing that neuron's output. Its principal role is to introduce non-linearity, enabling the network to capture intricate patterns in data.

Common activation functions in Neural Networks include:

Sigmoid: It squashes the input into a range between 0 and 1, which makes it suitable for the output layer in binary classification problems.

ReLU (Rectified Linear Unit): ReLU is one of the most widely used activation functions for hidden layers. It outputs the input if it is positive and zero otherwise, which introduces sparsity and tends to accelerate training.

Tanh (Hyperbolic Tangent): Tanh squashes the input into a range between -1 and 1. Its zero-centred output makes it a common choice for hidden layers, particularly in recurrent networks.

Softmax: Softmax is used in the output layer for multi-class classification tasks. It converts a vector of raw scores into a probability distribution over classes.
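The four functions above can be written in a few lines each. This is a minimal sketch using only the standard library; the softmax subtracts the largest score before exponentiating, a common numerical-stability trick that does not change the result.

```python
import math


def sigmoid(z):
    """Squash any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Return z if it is positive, otherwise 0."""
    return max(0.0, z)


def tanh(z):
    """Squash any real number into (-1, 1); zero-centred, unlike sigmoid."""
    return math.tanh(z)


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0), relu(-2.0), relu(3.0), tanh(0.0))  # → 0.5 0.0 3.0 0.0
print(softmax([2.0, 1.0, 0.1]))  # the largest score gets the largest probability
```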

17) What are Convolutional Neural Networks used for?

Convolutional Neural Networks are a special type of Neural Network designed for processing and analysing grid-like data, such as images and videos. They have gained immense popularity in computer vision due to their ability to automatically learn hierarchical features from visual data.

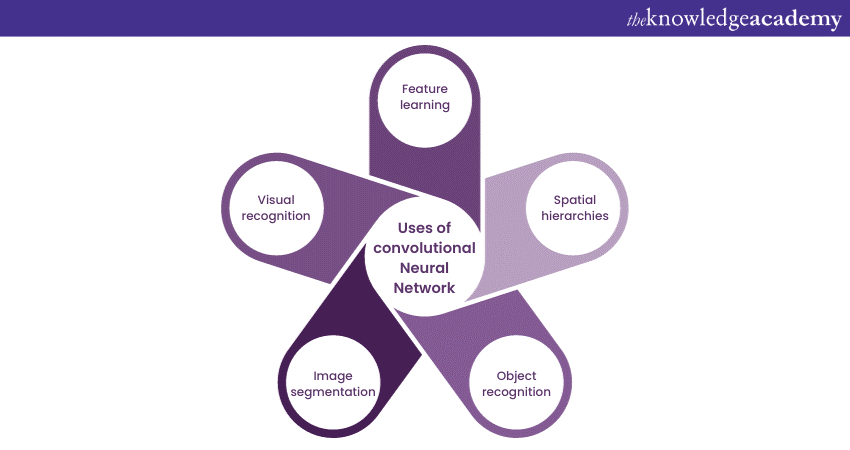

CNNs are structured with convolutional layers, pooling layers, and fully connected layers. The key features and applications of CNNs are as follows:

Feature learning: CNNs use convolutional layers to detect local patterns and features in input data automatically. These layers apply convolution operations to the input data, effectively learning filters that highlight relevant features like edges, textures, and shapes.

Spatial hierarchies: CNNs capture spatial hierarchies of features by stacking multiple convolutional layers. Lower layers learn simple features, while higher layers learn more complex and abstract features by combining lower-level information.

Object recognition: CNNs excel in object recognition and classification tasks. They can identify objects, animals, and various elements within images with high accuracy.

Image segmentation: CNNs can segment images into regions of interest, making them valuable for tasks like medical image analysis, autonomous driving, and scene parsing.

Visual recognition: CNNs are used in facial recognition, object detection, image captioning, and many other computer vision applications.
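The convolution and pooling operations behind these layers can be sketched in plain Python. This assumes a hand-picked 3×3 vertical-edge kernel, whereas a real CNN learns its kernel values during training; `conv2d` and `max_pool` are hypothetical helper names, and, as in most deep learning libraries, the kernel is not flipped (strictly, this is cross-correlation).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution: slide the kernel over the image and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the strongest response in each window."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# A 4x6 image: dark on the left (0), bright on the right (1), i.e. a vertical edge
image = [
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]
# Hand-picked vertical-edge detector (a CNN would learn weights like these)
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)            # → [[0, 0, 3, 3], [0, 0, 3, 3]]
print(max_pool(feature_map))  # → [[0, 3]]
```

The feature map responds only where brightness changes, and pooling condenses that into "there is an edge in the right half", a small example of how lower layers produce simple features that later layers combine.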

18) Define recurrent Neural Networks (RNNs) and their applications.

RNNs are a type of Neural Network architecture designed for handling sequential data, where the order of input elements matters. Unlike feedforward Neural Networks, RNNs have connections that loop back on themselves, allowing them to maintain internal states and capture dependencies over time.

Key characteristics and applications of RNNs include:

Sequential Modelling: RNNs can process sequences of data, such as time series, natural language text, and audio, by maintaining hidden states that capture information from previous time steps.

Natural Language Processing (NLP): RNNs are widely used in NLP tasks, including language modelling, sentiment analysis, machine translation, and speech recognition, where the context of previous words or phonemes is crucial for understanding the current one.

Time series prediction: RNNs are effective in time series forecasting, where they can model and predict trends in financial data, weather patterns, and stock prices.

Speech recognition: RNNs are employed in speech recognition systems to transcribe spoken language into text.

Video analysis: RNNs can analyse video data by processing frames sequentially, making them useful for tasks like action recognition, gesture recognition, and video captioning.

Despite their capabilities, standard RNNs suffer from the vanishing gradient problem, which limits their ability to capture long-range dependencies. This led to the development of more advanced variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, which alleviate the issue.
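A minimal sketch of a vanilla RNN cell with a single hidden unit, assuming hypothetical scalar weights (real RNNs use learned weight matrices). It shows the looped hidden state, why input order matters, and why gradients vanish: the derivative of the last state with respect to the first is a product of one factor per time step, and each factor here is at most 0.5.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """One-unit vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_prev + b) at every step."""
    h = h0
    states = []
    for x_t in sequence:
        # The same weights are reused at every time step; h carries context forward
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states


def state_gradient(states, w_h):
    """d h_T / d h_0: the product of w_h * (1 - h_t^2) over all time steps."""
    grad = 1.0
    for h in states:
        grad *= w_h * (1.0 - h * h)
    return grad


# Hypothetical scalar weights
params = {"w_x": 1.0, "w_h": 0.5, "b": 0.0}
forward_states = rnn_forward([1.0, 0.0], **params)
reversed_states = rnn_forward([0.0, 1.0], **params)
print(forward_states[-1], reversed_states[-1])  # same inputs, different order, different final state

long_states = rnn_forward([1.0] * 50, **params)
print(state_gradient(long_states, params["w_h"]))  # vanishingly small after 50 steps
```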

NLP questions

Natural Language Processing (NLP) is the AI subfield dedicated to teaching machines to understand and generate human language. Some of the important interview questions and answers on NLP are as follows:

19) What is Natural Language Processing (NLP)?

NLP is a subfield of Artificial Intelligence concerned with the interaction between computers and human language. It enables machines to understand, interpret, and produce human language in a meaningful, context-aware way. NLP encompasses a broad spectrum of tasks, including text analysis, language generation, sentiment analysis, machine translation, and speech recognition.

It combines techniques from linguistics, computer science, and machine learning to bridge the gap between human communication and computer understanding. NLP plays a pivotal role in applications like chatbots, virtual assistants, information retrieval, and text analysis, making it a fundamental technology for natural and intuitive human-computer interaction.

20) Explain tokenisation in NLP.

Tokenisation is a fundamental preprocessing step in Natural Language Processing (NLP) that involves splitting a text or a sentence into individual units, typically words or subwords. These units are referred to as tokens. Tokenisation is crucial because it breaks down raw text into smaller, manageable pieces, making it easier to analyse and process.

The tokenisation process can vary depending on the level of granularity required:

Word tokenisation: In this common form, text is split into words, using spaces and punctuation as separators. For example, the sentence "Tokenisation is important!" would be tokenised into ["Tokenisation", "is", "important"].

Subword tokenisation: This approach splits text into smaller units, such as subword pieces or characters. It is often used in languages with complex morphology or for tasks like machine translation and text generation.

Tokenisation serves several purposes in NLP:

a) Text preprocessing: It prepares text data for further analysis, such as text classification, sentiment analysis, and named entity recognition.

b) Feature extraction: Tokens become the basic building blocks for creating features in NLP models.

c) Vocabulary management: Tokenisation helps build a vocabulary, which is essential for tasks like word embedding (e.g., Word2Vec, GloVe) and language modelling.
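A minimal sketch of word tokenisation, greedy longest-match subword tokenisation in the style of WordPiece, and vocabulary building. The regular expression, the `##` continuation marker convention, the toy subword vocabulary and all function names are illustrative assumptions; production tokenisers learn their subword vocabularies from large corpora.

```python
import re


def word_tokenize(text):
    """Split text into word tokens, discarding spaces and punctuation."""
    return re.findall(r"\w+", text)


def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match subword split; '##' marks a piece that continues a word."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while end > start:
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word is treated as unknown
        pieces.append(match)
        start = end
    return pieces


def build_vocabulary(token_lists):
    """Map each distinct token to an integer id, in order of first appearance."""
    vocab = {}
    for tokens in token_lists:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


tokens = word_tokenize("Tokenisation is important!")
print(tokens)  # → ['Tokenisation', 'is', 'important']

subword_vocab = {"token", "##isation", "is", "important"}  # hypothetical toy vocabulary
print(wordpiece_tokenize("tokenisation", subword_vocab))  # → ['token', '##isation']

print(build_vocabulary([tokens, ["is", "it", "important"]]))
```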

21) What is sentiment analysis, and why is it used?

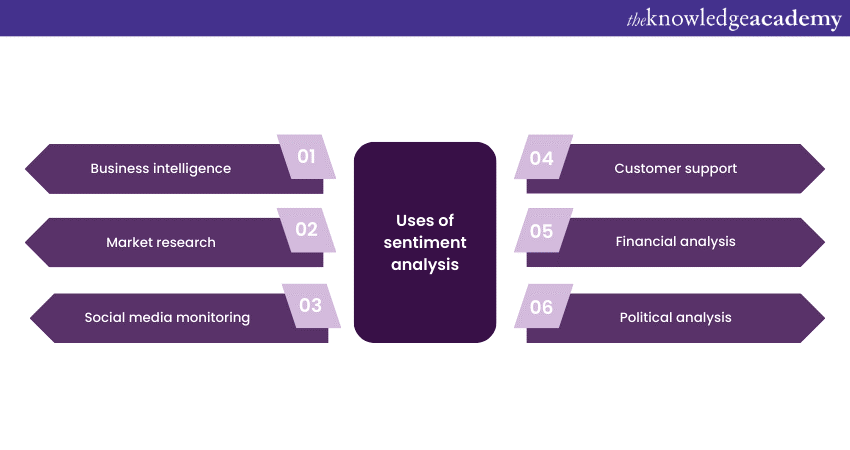

Sentiment analysis, also known as opinion mining, is a Natural Language Processing (NLP) technique that involves determining the emotional tone, attitude, or sentiment expressed in text data. It aims to categorise text as positive, negative, or neutral and, in some cases, quantifies the intensity of sentiment. Sentiment analysis is used for various reasons:

Business intelligence: Companies use sentiment analysis to gauge public opinion about their products, services, or brands. Analysing customer feedback and social media comments helps businesses make data-driven decisions and improve customer satisfaction.

Market research: Sentiment analysis provides insights into consumer preferences and market trends, helping businesses identify emerging opportunities and potential threats.

Social media monitoring: Organisations and individuals monitor social media sentiment to track public perception, respond to customer feedback, and manage online reputation.

Customer support: Sentiment analysis automates the triage of customer support requests by categorising messages based on sentiment, allowing companies to prioritise and respond more efficiently.

Financial analysis: Sentiment analysis is used in finance to analyse news articles, social media posts, and other text data for insights into market sentiment and potential impacts on stock prices.

Political analysis: Sentiment analysis is applied to political discourse to gauge public sentiment and monitor shifts in public opinion during elections and policy discussions.

Sentiment analysis applications span various domains, making it a valuable tool for understanding and responding to public sentiment and opinion.
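To show how text can be mapped to a polarity and an intensity, here is a minimal lexicon-based sketch. The word scores, the negation rule and the neutral band are hypothetical; modern sentiment systems typically use large curated lexicons or trained models instead.

```python
import re

# Hypothetical mini-lexicon: positive words score above 0, negative words below 0
LEXICON = {"love": 2, "great": 2, "good": 1, "bad": -1, "poor": -1, "terrible": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}


def sentiment(text, neutral_band=0.5):
    """Return (label, score); the score's magnitude reflects sentiment intensity."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, word in enumerate(words):
        polarity = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATIONS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    if score > neutral_band:
        return "positive", score
    if score < -neutral_band:
        return "negative", score
    return "neutral", score


print(sentiment("I love this phone, the camera is great!"))          # → ('positive', 4.0)
print(sentiment("Delivery was terrible and support was not good."))  # → ('negative', -3.0)
print(sentiment("The parcel arrived on Tuesday."))                   # → ('neutral', 0.0)
```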

22) What is named entity recognition (NER) in NLP?

Named Entity Recognition (NER) is a natural language processing (NLP) technique used to identify and categorise named entities within text. Named entities are real-world objects with specific names, such as people, organisations, locations, dates, monetary values, and more. NER involves extracting and classifying these entities into predefined categories.

NER serves several important purposes:

a) Information extraction: NER helps in extracting structured information from unstructured text, making it easier to organise and analyse data.

b) Search and retrieval: It enhances search engines by identifying and indexing named entities, allowing users to find specific information more efficiently.

c) Content summarisation: NER is used to identify key entities in a document, which can aid in generating concise and informative document summaries.

d) Question answering: NER plays a role in question-answering systems by identifying entities relevant to a user's query.

e) Language translation: It assists in language translation by identifying and preserving named entities during the translation process.

NER is typically approached as a supervised machine learning task. Annotated datasets are used to train models that can recognise and classify named entities.
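NER models are often trained to emit one tag per token using the BIO convention (B- begins an entity, I- continues it, O is outside any entity). The sketch below assumes that convention and shows how such tags are turned into entity spans; the tags are written by hand to stand in for a trained model's output, and `bio_to_entities` is a hypothetical helper.

```python
def bio_to_entities(tokens, tags):
    """Group BIO-tagged tokens into (entity text, entity type) spans."""
    entities, current, current_type = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # A new entity starts (a stray I- tag with a new type is treated as a start)
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [token], tag[2:]
        elif tag.startswith("I-"):
            current.append(token)  # continue the current entity
        else:  # "O": outside any entity, so close any open entity
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:
        entities.append((" ".join(current), current_type))
    return entities


tokens = ["Ada", "Lovelace", "was", "born", "in", "London", "in", "1815"]
tags = ["B-PER", "I-PER", "O", "O", "O", "B-LOC", "O", "B-DATE"]
print(bio_to_entities(tokens, tags))
# → [('Ada Lovelace', 'PER'), ('London', 'LOC'), ('1815', 'DATE')]
```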

23) What is the purpose of the TF-IDF algorithm?

The TF-IDF (Term Frequency-Inverse Document Frequency) algorithm is a fundamental technique in Natural Language Processing (NLP) used for information retrieval, text mining, and document ranking. Its purpose is to assess the importance of a term (word or phrase) within a document relative to a collection of documents.

TF-IDF helps identify and rank words or phrases based on their significance in a specific document while considering their prevalence across a corpus of documents. Here's how it works:

Term Frequency (TF): This component measures how frequently a term appears in a specific document. It is calculated as the number of times the term occurs in the document divided by the total number of terms in the document. TF represents the local importance of a term within a document.

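Inverse Document Frequency (IDF): IDF measures how unique or rare a term is across the entire corpus of documents. It is calculated as the logarithm of the total number of documents divided by the number of documents containing the term. Terms that appear in many documents receive a lower IDF score, while terms appearing in fewer documents receive a higher IDF score.

The TF-IDF score of a term in a document is the product of the two components, TF × IDF. A term therefore scores highly when it appears often in that document but rarely across the corpus, while words that appear in every document, such as "the", score zero.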
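A minimal sketch of the TF and IDF definitions above, multiplied together to give the TF-IDF score. It assumes the natural logarithm, a tiny hypothetical pre-tokenised corpus, and that the term occurs in at least one document; practical implementations often add smoothing to the IDF to avoid division by zero.

```python
import math
from collections import Counter


def tf(term, doc):
    """Term frequency: occurrences of the term / total terms in the document."""
    return Counter(doc)[term] / len(doc)


def idf(term, corpus):
    """Inverse document frequency: log(N / number of documents containing the term)."""
    containing = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / containing)


def tf_idf(term, doc, corpus):
    """TF-IDF: local importance (TF) weighted by corpus-wide rarity (IDF)."""
    return tf(term, doc) * idf(term, corpus)


corpus = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "sat", "on", "the", "log"],
    ["the", "cat", "chased", "the", "dog"],
]
for term in ["the", "cat", "mat"]:
    print(term, round(tf_idf(term, corpus[0], corpus), 4))
```

In the first document, "the" is the most frequent word yet scores 0 because it appears in every document, while "mat" outscores "cat" because it occurs in only one document.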

TF-IDF is used for various NLP tasks, including:

a) Information retrieval: It helps rank documents by relevance when performing keyword-based searches. Documents containing rare and important terms receive higher rankings.

b) Text classification: In text classification tasks, TF-IDF can be used as features to represent documents. It helps capture the discriminative power of terms for classifying documents into categories.

c) Keyword extraction: TF-IDF is used to identify important keywords or phrases within documents, aiding in document summarisation and topic modelling.

Ethics and impact questions

The ethics and impact of Artificial Intelligence are an important theme in AI interviews. Let’s look at some of the key questions and their answers:

24) What is AI bias, and how can it be mitigated?

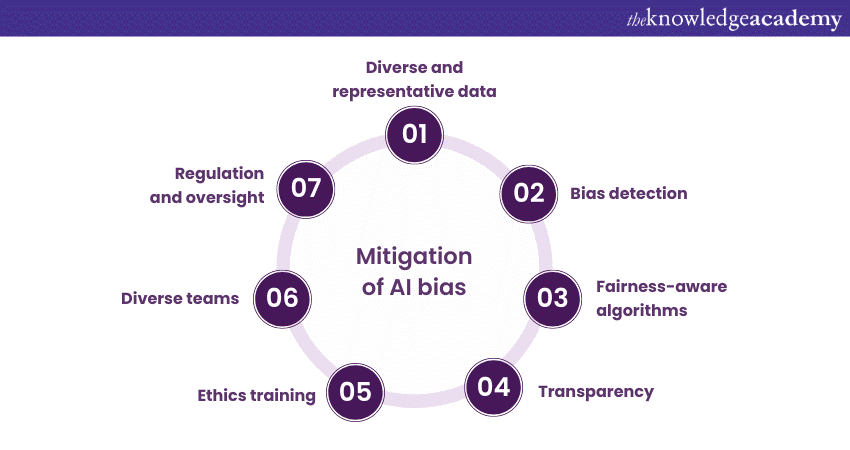

AI bias refers to the presence of unfair or discriminatory outcomes in Artificial Intelligence systems due to biased training data or biased algorithms. Bias can result from historical disparities, unrepresentative training data, or algorithmic shortcomings.

Mitigating AI bias requires a multi-faceted approach:

Diverse and representative data: Ensuring that training data is diverse and representative of the population the AI system serves helps reduce bias. Data should be regularly audited and updated.

Bias detection: Implementing bias detection techniques to identify and measure bias in AI systems during development and deployment.

Fairness-aware algorithms: Developing algorithms that consider fairness metrics and minimise disparate impact. This includes using techniques like re-sampling, re-weighting, or adversarial training.

Transparency: Making AI systems more transparent and explainable to understand how decisions are made and detect and correct bias.

Ethics training: Training data scientists and engineers in ethics to raise awareness about potential bias and its consequences.

Diverse teams: Building diverse teams involved in AI development to bring different perspectives and reduce the likelihood of unconscious bias.

Regulation and oversight: Governments and industry bodies can enforce regulations and standards to address AI bias and hold organisations accountable.

Addressing AI bias is crucial to ensure fairness, equity, and accountability in AI systems.
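The bias-detection and re-weighting steps above can be sketched in plain Python. This is an illustration under simplifying assumptions, not a fairness toolkit. It assumes binary predictions and labels (1 = favourable outcome) and a single group attribute, and all function names and data are hypothetical. `disparate_impact` compares selection rates between groups; a widely used rule of thumb, the "four-fifths rule", flags ratios below 0.8. `reweighing_weights` gives each training example the weight P(group) · P(label) / P(group, label), a standard pre-processing scheme that makes group and label statistically independent in the weighted data.

```python
from collections import Counter


def selection_rates(predictions, groups):
    """Share of favourable predictions (1s) received by each group."""
    totals = Counter(groups)
    positives = Counter(g for p, g in zip(predictions, groups) if p == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(predictions, groups, privileged, unprivileged):
    """Bias detection: unprivileged selection rate / privileged selection rate.

    1.0 means parity; the common "four-fifths" rule of thumb flags values below 0.8.
    """
    rates = selection_rates(predictions, groups)
    return rates[unprivileged] / rates[privileged]


def reweighing_weights(labels, groups):
    """Re-weighting: weight = P(group) * P(label) / P(group, label) per example.

    Under these weights, group membership and label become statistically
    independent in the training data.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Hypothetical toy data: group A receives favourable outcomes far more often than B.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
print(disparate_impact(labels, groups, privileged="A", unprivileged="B"))  # 0.25 / 0.75, about 0.33

weights = reweighing_weights(labels, groups)
for g in ("A", "B"):
    pos = sum(w for w, y, grp in zip(weights, labels, groups) if grp == g and y == 1)
    total = sum(w for w, grp in zip(weights, groups) if grp == g)
    print(g, pos / total)  # weighted positive rate is about 0.5 for both groups
```

Training a model with these per-example weights (most libraries accept a sample-weight argument) is one way to apply the re-weighting technique mentioned under fairness-aware algorithms. Re-sampling and adversarial training are alternatives.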

25) Discuss the ethical considerations in AI, particularly regarding privacy and security.

Ethical considerations in AI encompass a range of issues, including privacy and security. The main privacy considerations are as follows:

Data privacy: AI systems often require access to large datasets, raising concerns about data privacy. Protecting personal information and ensuring consent for data usage are critical.

Surveillance: AI-powered surveillance technologies can infringe on individuals' privacy rights. Ethical guidelines and legal frameworks are needed to regulate their use.

Data ownership: Determining who owns and controls data generated by AI systems, especially in IoT (Internet of Things) applications, is an ethical challenge.

The security considerations, along with other broader ethical concerns, are as follows:

Cybersecurity: AI systems can be vulnerable to attacks and manipulation, posing security risks. Ensuring robust security measures to protect AI systems is imperative.

Bias and discrimination: Bias in AI systems can have ethical implications, leading to unfair and discriminatory outcomes. Addressing bias in algorithms is essential to prevent harm.

Autonomous weapons: The development of AI-powered autonomous weapons raises ethical concerns about accountability, decision-making, and the potential for misuse.

Job displacement: The impact of AI on employment and job displacement is an ethical consideration, requiring strategies for retraining and supporting affected workers.

Balancing the benefits of AI with ethical considerations requires clear regulations, ethical guidelines, public dialogue, and responsible AI development practices.

26) How can AI be used to address societal challenges?

AI has the potential to address numerous societal challenges across various domains. Some of these challenges, grouped by the domain they are relevant to, are as follows:

a) Healthcare: AI can improve disease diagnosis, drug discovery, and personalised treatment plans, enhancing healthcare outcomes and accessibility.

b) Education: AI-powered tutoring systems, adaptive learning platforms, and educational chatbots can provide personalised learning experiences and bridge educational gaps.

c) Climate change: AI-driven models can analyse climate data, optimise energy usage, and develop strategies for mitigating climate change and disaster management.

d) Agriculture: AI can optimise crop management, predict crop diseases, and improve food supply chain efficiency to combat hunger and enhance agricultural sustainability.

e) Disaster response: AI can assist in disaster prediction, early warning systems, and resource allocation during natural disasters.

f) Accessibility: AI-driven assistive technologies like speech recognition and computer vision can empower individuals with disabilities by providing accessibility solutions.

g) Public safety: AI can improve law enforcement by analysing crime patterns, aiding in predictive policing, and enhancing surveillance for public safety.

h) Urban planning: AI can optimise city infrastructure, traffic management, and public transportation systems, reducing congestion and improving urban living.

i) Poverty alleviation: AI can assist in identifying poverty-stricken areas, optimising resource allocation, and developing targeted interventions.

However, ethical considerations, transparency, and responsible AI deployment are crucial to prevent unintended consequences and ensure equitable outcomes when using AI to address societal challenges.

27) Explain the potential impact of AI on the job market.

AI's impact on the job market is complex and multifaceted. Some of the most significant effects of AI's growing popularity are:

a) Job displacement: Automation and AI can replace routine and repetitive tasks, leading to job displacement in certain industries, such as manufacturing and data entry.

b) Job transformation: AI can augment human capabilities, leading to the transformation of job roles. Workers may need to acquire new skills to adapt to changing job requirements.

c) Job creation: AI also has the potential to create new job opportunities, particularly in AI development, data analysis, and AI-related fields.

d) Productivity and efficiency: AI can enhance productivity and efficiency in the workplace, potentially leading to economic growth and increased job opportunities in associated industries.

e) Skill demands: The job market may increasingly demand skills related to AI, data science, and machine learning, necessitating upskilling and reskilling efforts.

f) Economic disparities: AI's impact on income inequality may become a concern if job displacement occurs faster than the creation of new jobs, potentially exacerbating economic disparities.

g) Ethical considerations: The ethical use of AI in employment decisions, such as hiring and performance evaluation, is essential to prevent bias and discrimination.

Governments, businesses, and educational institutions must work together to prepare the workforce for the AI-driven job market by providing education and training opportunities, promoting lifelong learning, and fostering ethical AI practices to ensure a balanced and inclusive future of work.

AI tools and frameworks questions

AI tools and frameworks form the backbone of modern Artificial Intelligence development. The following questions cover the essential tools and platforms that empower AI engineers and data scientists to create cutting-edge solutions.

28) Name some popular machine learning libraries and frameworks.

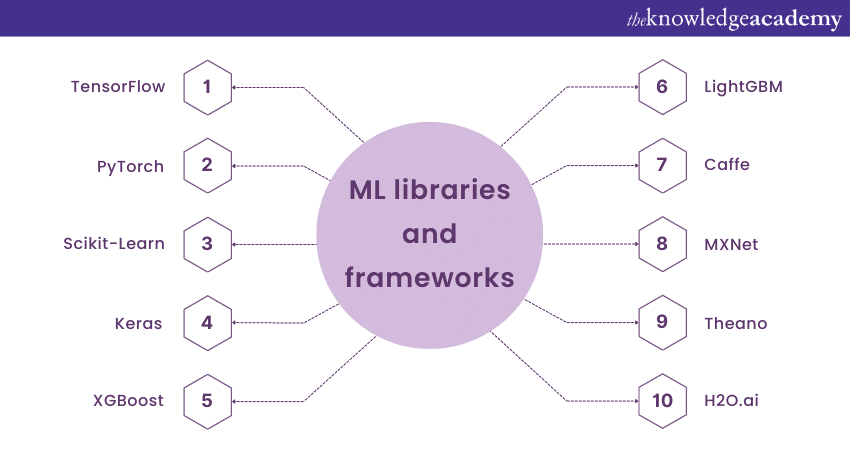

Several popular machine learning libraries and frameworks widely used in AI development are as follows:

a) TensorFlow: Developed by Google, TensorFlow is an open-source deep learning framework that offers a comprehensive ecosystem for building and training various types of Neural Networks.

b) PyTorch: Developed by Facebook's (now Meta's) AI Research lab (FAIR), PyTorch is known for its dynamic computation graph and is favoured by researchers for its flexibility.

c) scikit-learn: scikit-learn is a versatile and user-friendly Python library for classical machine learning tasks such as classification, regression, clustering, and dimensionality reduction.

d) Keras: Keras is an easy-to-use high-level Neural Networks API that runs on top of TensorFlow and other deep learning frameworks, simplifying the model building process.

e) XGBoost: XGBoost is a popular gradient boosting library that excels at structured/tabular data problems and has been used in many winning Kaggle competition solutions.

f) LightGBM: LightGBM is another gradient boosting library designed for speed and efficiency, making it suitable for large datasets.

g) Caffe: Caffe is a deep learning framework developed by the Berkeley Vision and Learning Center (BVLC) known for its speed and efficiency.

h) MXNet: Apache MXNet is an open-source deep learning framework designed for flexibility and efficiency, with support for multiple programming languages.

i) Theano: While no longer actively developed, Theano was an influential deep learning library that contributed to the early growth of the field.

j) H2O.ai: H2O is an open-source machine learning platform that provides a range of algorithms and tools for data analysis and modelling.

29) What is the role of Jupyter Notebooks in AI development?

Jupyter Notebooks play a vital role in AI development for several reasons. Mentioned below are some of the primary roles of the Jupyter Notebooks:

a) Interactive coding: Jupyter Notebooks allow developers and data scientists to write and execute code interactively. This facilitates experimenting with algorithms, visualising data, and quickly prototyping machine learning models.

b) Documentation: Notebooks combine code, text, and visualisations in a single document, making it an effective tool for documenting and sharing research, analyses, and findings.

c) Data exploration: Notebooks provide a flexible environment for exploring and analysing data, enabling users to inspect datasets, clean data, and visualise patterns.

d) Visualisation: Jupyter Notebooks support the creation of rich, customisable visualisations, which are crucial for understanding data and model outputs.

e) Collaboration: Notebooks can be easily shared with colleagues or the broader community, facilitating collaboration and knowledge sharing in AI projects.

f) Education: Jupyter Notebooks are widely used in educational settings to teach AI concepts and demonstrate practical implementations.

g) Reproducibility: By combining code and documentation, Jupyter Notebooks contribute to the reproducibility of AI experiments, allowing others to replicate and verify results.

Overall, Jupyter Notebooks provide an interactive and versatile environment for AI development, research, and communication.

30) Explain the purpose of GPU acceleration in deep learning.

GPU acceleration is essential in deep learning because it greatly speeds up the training of Neural Networks. Here's why it is crucial:

a) Parallel processing: Deep learning involves numerous matrix computations, which can be highly parallelised. GPUs (Graphics Processing Units) are designed for parallel processing, allowing them to perform many calculations simultaneously, significantly speeding up training.

b) Model complexity: Deep Neural Networks often have millions of parameters, making training time-consuming. GPUs enable the efficient training of large and complex models.

c) Reduced training time: GPU acceleration can cut training times dramatically, in some cases from weeks to hours, enabling faster experimentation and model development.

d) Cost efficiency: For highly parallel deep learning workloads, GPUs are often more cost-effective than traditional CPUs, as they provide higher performance for the price.

e) Real-time inference: GPUs are also used for real-time inference, allowing trained models to make predictions quickly, which is crucial for applications like autonomous vehicles and robotics.

GPU acceleration is a fundamental technology in deep learning that significantly improves the efficiency and speed of training Neural Networks, making it possible to tackle complex AI tasks and develop state-of-the-art models.
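The parallelism described in point a) can be made concrete with matrix multiplication, the core operation of a dense Neural Network layer. In this pure-Python sketch every output cell is an independent dot product, so the cells can be computed in any order or all at once. A GPU exploits this by assigning cells (or tiles of cells) to thousands of hardware threads. The thread pool here only demonstrates the decomposition: because of CPython's global interpreter lock it will not speed up pure-Python arithmetic, and real frameworks dispatch this work to optimised GPU kernels instead. The names `dot` and `matmul_parallel` are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One output cell: an independent multiply-accumulate over a row and a column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(A, B, workers=4):
    """Matrix product where every output cell is submitted as its own task.

    No cell depends on any other, so the tasks can run in any order or at the
    same time. CPython threads will not speed up this pure-Python arithmetic
    (the GIL serialises it); the point is the decomposition a GPU exploits.
    """
    cols = list(zip(*B))  # columns of B
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [[pool.submit(dot, row, col) for col in cols] for row in A]
        return [[f.result() for f in row_futures] for row_futures in futures]


# A dense layer multiplies a weight matrix by its inputs; each output is an independent dot product.
layer_weights = [[1, 2], [3, 4]]
batch = [[5, 6], [7, 8]]
print(matmul_parallel(layer_weights, batch))  # [[19, 22], [43, 50]]
```

For an n × n product there are n² such independent cells, which is why the work scales so well onto hardware with many parallel cores.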

31) What are pre-trained models in AI, and why are they valuable?

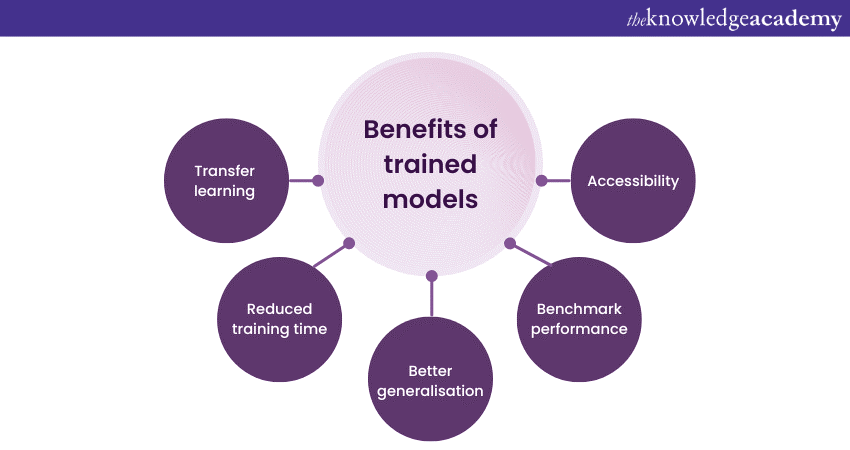

Pre-trained models in AI are Neural Network models that organisations or researchers have already trained on large datasets, often for complex tasks, and then made available to the broader community. These models are valuable for several reasons:

a) Transfer learning: Pre-trained models serve as a starting point for developing new AI applications. By fine-tuning these models on specific tasks or domains, developers can leverage the knowledge learned from the original training data.

b) Reduced training time: Training deep Neural Networks from scratch requires extensive computational resources and data. Pre-trained models can save time and resources by providing a head start, especially for smaller research teams or projects with limited resources.

c) Better generalisation: Pre-trained models have learned useful features and representations from diverse data sources. This knowledge often results in models that generalise better to new data and perform well on various tasks.

d) Benchmark performance: Pre-trained models often achieve state-of-the-art performance on benchmark datasets and tasks, providing a reliable baseline for comparison.

e) Accessibility: Access to pre-trained models democratises AI development by making advanced AI capabilities available to a broader audience, including researchers, startups, and developers.
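The transfer-learning idea in point a) can be sketched in its simplest form: keep a pre-trained feature extractor frozen and train only a new output layer on the target task. Full fine-tuning would also update the backbone's weights. Here `PRETRAINED_W` is a hypothetical stand-in for weights learned on a large dataset; a real project would load them through a framework such as PyTorch or TensorFlow. The new head is plain logistic regression trained with stochastic gradient descent, and the toy dataset is invented for illustration.

```python
import math

# Hypothetical "pre-trained" backbone weights, standing in for layers learned
# on a large dataset. They stay frozen: training below never modifies them.
PRETRAINED_W = [[0.5, -0.2, 0.1],
                [0.3, 0.8, -0.5]]


def extract_features(x):
    """Frozen backbone: one linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=500, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    features = [(extract_features(x), y) for x, y in data]  # backbone run once, reused
    w = [0.0] * len(PRETRAINED_W)
    b = 0.0
    for _ in range(epochs):
        for f, y in features:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            grad = p - y  # gradient of log-loss with respect to the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(x, w, b):
    """Probability of class 1 using the frozen backbone plus the trained head."""
    f = extract_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Hypothetical small target-task dataset: (input vector, label).
data = [([1, 0, 0], 1), ([2, 0, 1], 1), ([0, 1, 0], 0), ([0, 2, 1], 0)]
w, b = train_head(data)
print(predict([1, 0, 0], w, b) > 0.5, predict([0, 2, 1], w, b) > 0.5)
```

Only the few head parameters are learned, and the backbone's forward pass is computed once per example. This is the source of the reduced training time described in point b).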

32) What is the significance of cloud-based AI platforms like AWS, Azure, and Google Cloud?

Cloud-based AI platforms, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud, play a crucial role in AI development for several reasons:

a) Scalability: These platforms provide scalable computing resources, allowing users to access the computational power needed for training large Neural Networks, running simulations, and handling big data.

b) Data storage and management: Cloud platforms offer data storage and management solutions, making it easier to store, access, and preprocess datasets required for AI tasks.

c) Pre-built AI services: Cloud providers offer pre-built AI services and APIs, including speech recognition, computer vision, natural language processing, and recommendation systems, enabling developers to integrate AI capabilities into their applications without building models from scratch.

d) Machine learning frameworks: Cloud platforms support popular machine learning frameworks like TensorFlow, PyTorch, and scikit-learn, simplifying model development and deployment.

e) AI marketplace: Cloud providers host AI marketplaces where users can access pre-trained models, datasets, and AI tools created by experts and the community.

f) Collaboration and deployment: These platforms facilitate collaboration among team members and offer tools for deploying AI models to production environments, ensuring scalability and reliability.

g) Security and compliance: Cloud providers invest heavily in security and compliance measures, which is crucial when handling sensitive data in AI projects.

h) Cost efficiency: Cloud services offer a pay-as-you-go model, allowing users to control costs by only paying for the resources and services they use.

Conclusion

Artificial Intelligence is not just the future; it's already transforming the present. As organisations increasingly utilise the power of AI to solve complex problems, the demand for AI talent is soaring. These Artificial Intelligence Interview Questions will help you deepen your understanding of various areas of AI and start your career with confidence.

Unlock the future with AI and Machine Learning. Register for our Artificial Intelligence & Machine Learning course today and reshape your tomorrow!
