
Deep Learning With Python represents a formidable union in the realm of modern technology. Its intricate algorithms and vast neural networks can be credited with ushering in transformative changes across industries. Its frequent use in healthcare's diagnostic methods and finance's predictive models has benefited the world significantly. This powerful technique, known for mastering complex tasks like image interpretation and voice modulation, has swiftly emerged as an invaluable asset in the digital age.

According to Statista, the Python programming language is preferred by 49.28% of respondents. This goes to show how vital the language is to the success of Deep Learning (DL), facilitating the creation and fine-tuning of advanced models. If you wish to understand this synergy and how its collective potential is reshaping the technological world, this blog is what you need. Keep reading to learn more about the uses of Deep Learning With Python in technology, with examples.

Table of Contents

1) What is Deep Learning?

2) Why choose Python of all languages?

3) Deep Learning in Python code with example

4) Practical applications of Deep Learning With Python

5) Conclusion

What is Deep Learning?

Deep Learning, a notable branch of Artificial Intelligence (AI), distinguishes itself with its use of multi-layered neural networks, drawing inspiration from the human brain's complex structures. The term "deep" reflects the multiple layers that these networks encompass, which allow for profound data analysis beyond traditional Machine Learning (ML) methods.

Our brain, with its vast interlinked network of neurons, processes information in intricate ways. Similarly, Deep Learning seeks to emulate this organic brilliance. Each layer within its network meticulously sifts through the data, refining, filtering, and enhancing it. As this data flows from one layer to the next, the process isn't just a mechanical transfer. It’s an evolution, adding depth, context, and nuance, ultimately equipping machines with a sophisticated analytical lens reminiscent of human cognition.
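
The layer-by-layer flow described above can be sketched in a few lines of NumPy. This is only an illustration, not a trained network: the layer widths (8, 16, 4), the random weights, the tanh non-linearity, and the helper name `forward` are all assumptions chosen to show data changing shape as it passes from one layer to the next.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer widths: 8 input features -> 16 hidden units -> 4 outputs.
layer_sizes = [8, 16, 4]

# One (weights, bias) pair per layer, filled with small random values.
layers = [
    (rng.normal(scale=0.1, size=(n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

def forward(x, layers):
    """Pass x through every layer in order, recording the shape after each one."""
    shapes = [x.shape]
    for weights, bias in layers:
        # Each layer applies a linear map followed by a simple non-linearity.
        x = np.tanh(x @ weights + bias)
        shapes.append(x.shape)
    return x, shapes

batch = rng.random((5, 8))           # 5 samples with 8 features each
output, shapes = forward(batch, layers)
print(shapes)                        # [(5, 8), (5, 16), (5, 4)]
```

Each pass through the loop is one "layer" in the sense used above: the output of one step becomes the input of the next.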

Deep Learning's strength isn't just in its ability to mimic human processes, but also in its superior performance. As it delves deeper into data, it identifies patterns often missed by traditional algorithms. The real-world implications of this are staggering. Consider sectors like image processing or voice recognition, where precision is paramount.

Deep Learning doesn't just participate; it dominates data analysis and other tasks with its unparalleled accuracy, which allows it to consistently overshadow traditional methods. It is responsible for ushering in a revolutionary phase where machines don't just process data — they understand it, interpret it, and anticipate it.


Why choose Python of all languages?

Python has swiftly become the go-to language for data science, owing to its user-friendly syntax and versatile nature. Its suitability for both scripting and full-scale application development makes it a preferred choice for data enthusiasts, cementing its position as the lingua franca in data analytics and Deep Learning.

The union of Python's adaptability and its robust library ecosystem forms the bedrock for Deep Learning innovation. This synergy in Deep Learning With Python has led to significant strides in the field, enabling swift translation of ideas into functional DL models, paving the way for further advancements.

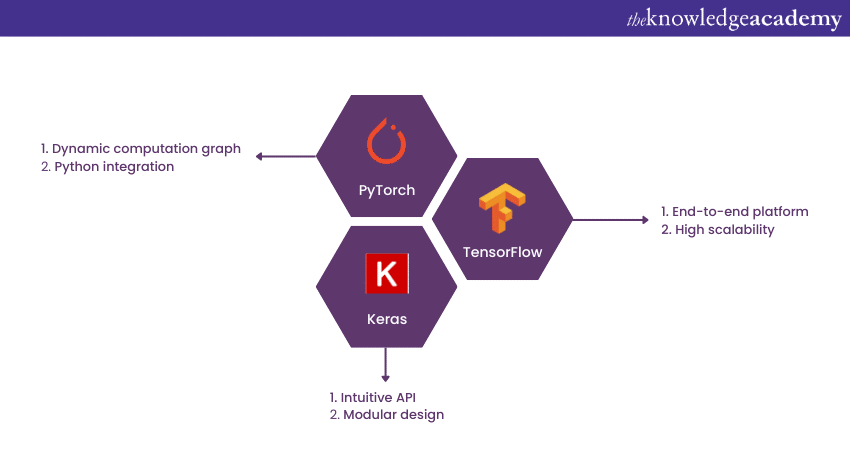

What sets Python apart is its rich assortment of libraries tailored for varied computational tasks. In the DL sphere, these libraries simplify development, allowing developers to push Deep Learning frontiers. Some of these libraries are as follows:

TensorFlow: Comprehensive and scalable

TensorFlow, developed by Google Brain, stands as one of the most sought-after open-source libraries for high-performance numerical computations. It is especially tailored for creating Deep Learning models. One of its defining features is the use of data flow graphs, where nodes represent operations and the edges signify data arrays (tensors) that flow between them.

This structure allows for both flexibility and scalability, making it possible to run computations on CPUs, GPUs, or even TPUs with minimal code alterations. TensorFlow’s extensive toolset, which includes TensorBoard for visualisation, and TensorFlow Lite for mobile, gives developers a complete package for both prototyping and deploying ML models.
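
To make the idea of a data flow graph concrete, here is a toy sketch in plain Python and NumPy. It is not TensorFlow's API: the `Node` class and the `constant`, `matmul`, `add`, and `run` helpers are hypothetical names invented for this illustration. The point is only that the graph is described first, with operations as nodes and arrays flowing along the edges, and evaluated afterwards.

```python
import numpy as np

class Node:
    """A graph node: an operation plus the nodes whose outputs feed into it."""
    def __init__(self, op, inputs=(), value=None):
        self.op = op
        self.inputs = tuple(inputs)
        self.value = value

def constant(array):
    return Node("const", value=np.asarray(array, dtype=float))

def matmul(a, b):
    return Node("matmul", (a, b))

def add(a, b):
    return Node("add", (a, b))

def run(node):
    """Evaluate a node by first evaluating the nodes it depends on."""
    if node.op == "const":
        return node.value
    args = [run(parent) for parent in node.inputs]
    if node.op == "matmul":
        return args[0] @ args[1]
    if node.op == "add":
        return args[0] + args[1]
    raise ValueError(f"unknown op: {node.op}")

# Building the graph performs no arithmetic yet.
x = constant([[1.0, 2.0]])
w = constant([[3.0], [4.0]])
b = constant([[0.5]])
y = add(matmul(x, w), b)

result = run(y)    # the arithmetic happens here
print(result)      # [[11.5]]
```

Because the graph exists as data before anything is computed, a framework is free to decide where and how each node runs, which is what makes the same model portable across different hardware.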


Keras: Streamlined and intuitive neural network design

Keras started as an independent project but has since become an integral interface for TensorFlow. Its primary objective is to offer a fast and straightforward way to design and experiment with neural networks. Keras abstracts many of the complexities found in Deep Learning frameworks, making it a favourite for beginners and experts who want to prototype quickly.

Keras's model-building process is highly intuitive; developers can easily stack layers, choose optimisers, and define loss functions, all in just a few lines of code. Furthermore, its modularity ensures that components can be interchanged with ease, providing flexibility in model design without sacrificing usability.

PyTorch: Dynamic and flexible Deep Learning

Originating from Facebook's AI Research lab, PyTorch is known for its dynamic computational graph, which set it apart from the static graphs of early TensorFlow versions (TensorFlow 2.x now also runs operations eagerly by default). This dynamic nature, often referred to as 'define-by-run', means that the graph is constructed on-the-fly with each operation. It allows for more intuitive debugging and a style of programming that is more Pythonic in nature.

PyTorch is lauded for its tensor computation capabilities with strong GPU acceleration support. It’s particularly favoured in the academic and research community because it facilitates easier and more flexible experimentations. With extensions like TorchVision for computer vision tasks, PyTorch provides tools to cater to various Deep Learning applications.
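
The 'define-by-run' idea can be illustrated without PyTorch itself. The sketch below is a toy scalar version written for this blog: the `Value` class and `repeated_product` function are hypothetical and are not part of PyTorch's API. What it shows is that the graph is recorded while ordinary Python code runs, so a plain `for` loop decides the graph's shape at run time.

```python
class Value:
    """A scalar that remembers how it was produced (toy helper, not PyTorch)."""
    def __init__(self, data, parents=(), grad_fns=()):
        self.data = float(data)
        self.grad = 0.0
        self.parents = parents      # Values this one was computed from
        self.grad_fns = grad_fns    # one local-derivative function per parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the recorded graph so every node is handled before its parents.
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node.parents, node.grad_fns):
                parent.grad += grad_fn(node.grad)

def repeated_product(x, steps):
    # An ordinary Python loop: the graph grows by one multiply per iteration.
    out = Value(1.0)
    for _ in range(steps):
        out = out * x
    return out

x = Value(3.0)
y = repeated_product(x, 2)    # y = x * x
y.backward()
print(y.data, x.grad)         # 9.0 6.0
```

Changing `steps` changes the recorded graph with no separate compilation step, which is the property that makes this style convenient to debug.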


Deep Learning in Python code with example

A good way to get started with any coding project is a small, complete example. Here is a piece of Python code that concisely represents a DL model, built with the Keras framework and designed for a binary classification task. The sections that follow break down each segment of the code.

Sample code for Deep Learning With Python

    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense

    # Generate dummy data
    data = np.random.random((1000, 20))
    labels = np.random.randint(2, size=(1000, 1))

    # Build a simple model
    model = Sequential()
    model.add(Dense(64, activation='relu', input_dim=20))
    model.add(Dense(1, activation='sigmoid'))

    # Compile the model
    model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

    # Train the model
    model.fit(data, labels, epochs=10, batch_size=32)

Bringing in essential libraries

The initial steps in a project set the tone. When one begins to paint, choosing the right palette is pivotal. Similarly, import numpy as np marks our entry into the world of Deep Learning. Just as paints are fundamental for a painting, numpy is indispensable for handling and manipulating numerical data, acting as our mathematical palette.

Next, consider the building of a skyscraper. The architect first chooses a blueprint. from keras.models import Sequential is that blueprint for our Deep Learning model. With the Sequential class, our model has an organised structure, like floors in a building, allowing subsequent layers to be added in an orderly manner.

But what are these layers made of? from keras.layers import Dense brings in the raw materials. Think of Dense layers as the bricks and mortar in our skyscraper. They're the primary building blocks where data undergoes transformation, moving, and adapting as it ascends through the structure.

    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense

Creating practice data

A chef tests a recipe before serving it to guests. In the same vein, before diving into real-world data, we craft a controlled dataset for testing. data = np.random.random((1000, 20)) is this experimental kitchen. Using numpy, we create 1000 sets of 20 random numbers, allowing us to simulate potential data scenarios.

What's a test without results? labels = np.random.randint(2, size=(1000, 1)) pairs our data with corresponding answers. It's akin to the chef tasting and adjusting the dish. These binary '0' or '1' labels serve as reference points, providing our model with a means to evaluate its own performance.

In essence, this segment of code offers our model a sandbox, a safe space. This crafted data doesn’t mirror the complexities of real-life scenarios but offers an environment to understand the fundamental mechanics without distractions.

    data = np.random.random((1000, 20))
    labels = np.random.randint(2, size=(1000, 1))
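
Before handing practice data to a model, it can help to look at what was actually generated. The quick check below is a sketch that reuses the same two NumPy calls; the fixed seed is an assumption added only so the run is repeatable.

```python
import numpy as np

np.random.seed(0)  # assumption: fixed seed, added only for repeatable runs

data = np.random.random((1000, 20))
labels = np.random.randint(2, size=(1000, 1))

print(data.shape, labels.shape)               # (1000, 20) (1000, 1)
print(data.min() >= 0.0, data.max() < 1.0)    # True True
print(np.unique(labels))                      # [0 1]
print(labels.mean())                          # share of 1s, close to 0.5
```

Because the labels are drawn independently of the features, there is no real pattern here for a model to discover. On data it has not seen, accuracy would be expected to hover around 50%, which is fine for a sandbox whose purpose is to exercise the mechanics.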

Setting the neural network model

Much like a sculptor with a block of marble, we start with a blank slate in Deep Learning. model = Sequential() provides that untouched canvas. With this, we communicate our intent to initiate the model-building process, ready for the subsequent design and detail.

Dive deeper with model.add(Dense(64, activation='relu', input_dim=20)). Here, the foundation of our masterpiece is set. The input_dim=20 argument tells the layer to expect 20 features per sample, matching our practice data. These 64 processing units become the initial interpreters of our data, acting like scouts gathering preliminary insights. The activation function, relu, passes positive values through unchanged and replaces negative values with zero, giving the network the non-linearity it needs to pick out meaningful patterns for subsequent layers.

Our finishing touch is model.add(Dense(1, activation='sigmoid')). This is the crown of our structure, the final interpreter. It aggregates wisdom from preceding layers and makes the final call, culminating the myriad processes into a single output. The sigmoid activation squeezes this output into a value between 0 and 1, which can be read as the probability that a sample belongs to class 1, keeping it within our binary framework.

    model = Sequential()
    model.add(Dense(64, activation='relu', input_dim=20))
    model.add(Dense(1, activation='sigmoid'))
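
The two activation functions named above are simple enough to write out by hand. The NumPy sketch below is not how Keras implements them internally; the 0.5 decision threshold and the helper names are assumptions made for illustration. It also counts the trainable parameters implied by a 20-input, 64-unit, 1-output model.

```python
import numpy as np

def relu(z):
    """Keep positive values and replace negative values with zero."""
    return np.maximum(z, 0.0)

def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))         # [0. 0. 3.]
print(sigmoid(0.0))    # 0.5

# A sigmoid output is a score between 0 and 1; a threshold turns it into a class.
scores = sigmoid(np.array([-1.5, 0.2, 4.0]))
predicted_classes = (scores >= 0.5).astype(int)
print(predicted_classes)    # [0 1 1]

# Trainable parameters: one weight per input-unit pair plus one bias per unit.
hidden_params = 20 * 64 + 64    # 1344
output_params = 64 * 1 + 1      # 65
print(hidden_params + output_params)    # 1409
```

Even this small model has 1,409 adjustable numbers, which is what training tunes.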

Compiling the model

An orchestra tunes its instruments before the concert. In Deep Learning, tuning is equally crucial. With model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy']), we're refining our model's performance. The choice of adam as an optimizer is akin to choosing the rhythm for the orchestra—guiding how our model should learn and adjust from its errors.

The concept of 'loss' is paramount. loss='binary_crossentropy' acts as a critic, a discerning judge. It calculates the difference between our model’s predictions and the real answers, producing a score that drives improvement.

In parallel, metrics=['accuracy'] provides validation. It's a pat on the back or a nudge for improvement, letting us know how often our model’s predictions align with the actual answers. Akin to an audience's applause, it provides real-time feedback on the model’s performance.

    model.compile(optimizer='adam',
                  loss='binary_crossentropy',
                  metrics=['accuracy'])
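
To see why the loss and the accuracy metric are tracked separately, it helps to compute both by hand. The functions below are a NumPy sketch of the standard formulas, not Keras's own code; the clipping constant `eps` and the 0.5 threshold are assumptions.

```python
import numpy as np

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; clipping by eps avoids taking log(0)."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)
    losses = -(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))
    return float(np.mean(losses))

def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of predictions that match the labels after thresholding."""
    return float(np.mean((y_prob >= threshold).astype(int) == y_true))

y_true = np.array([1, 0, 1, 0])
confident = np.array([0.9, 0.1, 0.8, 0.2])   # right, and sure about it
hesitant = np.array([0.6, 0.4, 0.6, 0.4])    # right, but only just

print(accuracy(y_true, confident), accuracy(y_true, hesitant))   # 1.0 1.0
print(binary_crossentropy(y_true, confident)
      < binary_crossentropy(y_true, hesitant))                   # True
```

Both sets of predictions score 100% accuracy, yet the loss is lower for the confident one. That finer-grained signal is what the optimizer uses to keep improving the model even when accuracy has stopped moving.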

Training the model

Finally, the spotlight is on our model. The line model.fit(data, labels, epochs=10, batch_size=32) marks the beginning of the actual learning performance. It's like releasing a bird into the wild, watching it learn and navigate. By feeding it our sandbox data and labels, we're observing its evolution, step by step.

Iteration fosters improvement. With epochs=10, we're allowing our model to retrace its path over the full dataset ten times, reassessing and refining its understanding, much like rehearsing a play until each act is perfected.

Finally, the concept of batches, as denoted by batch_size=32, is our model's learning strategy. It divides its tasks, taking them on in manageable chunks. Instead of gobbling up the entire dataset, it savours it piece by piece, ensuring a comprehensive grasp on each segment before moving to the next.

    model.fit(data, labels, epochs=10, batch_size=32)
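
The arithmetic behind epochs=10 and batch_size=32 can be checked with a short sketch. The `iterate_batches` helper is a hypothetical name written for this illustration and is not part of Keras; it shuffles the sample indices and hands them out 32 at a time, which roughly mirrors what happens during training.

```python
import math
import numpy as np

def iterate_batches(n_samples, batch_size, rng):
    """Yield index arrays that cover a shuffled dataset one batch at a time."""
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]

n_samples, batch_size, epochs = 1000, 32, 10
rng = np.random.default_rng(0)

steps_per_epoch = math.ceil(n_samples / batch_size)
print(steps_per_epoch)    # 32 (31 full batches of 32, then one batch of 8)

total_steps = 0
for epoch in range(epochs):
    batch_sizes = [len(idx) for idx in iterate_batches(n_samples, batch_size, rng)]
    total_steps += len(batch_sizes)

print(batch_sizes[-1], total_steps)    # 8 320
```

With one weight update per batch, ten epochs over 1,000 samples gives the model 320 chances to adjust itself.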


Practical applications of Deep Learning With Python

The immense potential of Deep Learning, powered by the versatility of Python, has ushered in a new age of technological innovation. From visual understanding to interpreting human language, the applications are both profound and transformative, reshaping industries and our daily lives alike. Some of these applications are as follows:

Computer vision

The realm of computer vision seeks to endow machines with an ability that's second nature to humans: sight. But not just sight in the conventional sense; it aims for an understanding derived from visual inputs. Deep Learning With Python emerges as a protagonist in this narrative. Through intricate neural networks, it learns from countless images, discerning patterns and nuances that might escape even the keenest human observers.

One of the stellar applications of computer vision is in the innovative world of self-driving cars. Imagine vehicles equipped with myriad cameras and sensors, absorbing visual data every split second. Deep Learning models, having been trained on vast datasets, process this data in real-time, enabling the vehicle to make crucial decisions—from identifying a pedestrian crossing the road to distinguishing between a plastic bag and a rock. Python's extensive libraries further streamline this image processing.

Healthcare is another arena reaping the benefits of computer vision. Gone are the days when medical professionals solely relied on their trained eyes for diagnostics. Today, with Deep Learning-driven computer vision, intricate medical images, be it X-rays, MRIs, or CT scans, are analysed with astounding precision. Minute anomalies, which might go unnoticed or are too complex for human interpretation, are highlighted by these models, potentially revolutionising early diagnosis and treatment pathways.

Natural Language Processing (NLP)

Language is the essence of human expression, layered with emotion, context, and cultural nuances. Translating this intricate tapestry into binary for machines has long been a challenge. Deep Learning With Python allows machines to venture beyond mere word recognition, delving deep into syntax, semantics, and sentiment, unlocking a fuller understanding of human communication.

Chatbots are perhaps the most visible face of NLP's achievements. These digital conversationalists, driven by DL algorithms, can emulate human-like interactions, answer queries, or even provide therapeutic conversations. The backbone of these bots, particularly their capacity to understand context, sarcasm, or emotions, is Deep Learning. When paired with Python's computational and coding prowess, it's no wonder chatbots today feel more human-like than ever.

Beyond chatbots, consider the marvel of translation services. The challenges here are manifold: linguistic nuances, idiomatic expressions, and cultural context. DL rises to this challenge, training on vast corpuses of multilingual data. The result? Real-time translations that are contextually relevant, syntactically accurate, and semantically rich. Python, with its extensive NLP libraries, acts as the bedrock, supporting these Deep Learning architectures.

Voice recognition systems

Voices are as unique as fingerprints. The subtle variations in tone, the inflections in pitch, and the rhythm of speech make voice recognition a daunting task. However, with the advent of Deep Learning With Python, the field has witnessed revolutionary advancements. Machines now not only recognise voices but also understand the underlying emotions and intentions.

Virtual assistants like Alexa, Siri, and Google Assistant have seamlessly woven themselves into the fabric of our daily routines. But their smooth operations conceal the complexities beneath. Every time you interact, Deep Learning models spring into action, dissecting your voice for clarity and content. It's a dance of algorithms, where your voice's features are extracted, compared to vast datasets, and then processed for appropriate actions.

The beauty of these systems is their continuous learning curve. Every interaction helps them evolve, refining their understanding of individual voice idiosyncrasies. Python plays an invaluable role here. Its libraries and frameworks provide the scaffolding upon which these intricate Deep Learning models stand, ensuring that the voice recognition systems of today approach near-human levels of comprehension and responsiveness.
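
As a rough idea of what extracting features from a voice can mean, the sketch below turns a synthetic tone into per-frame frequency spectra with NumPy. Everything here is an assumption made for illustration: the 8000 Hz sample rate, the 400-sample frame length, and the 220 Hz sine wave standing in for speech. Real assistants rely on far richer features and trained models.

```python
import numpy as np

sample_rate = 8000                            # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate      # one second of time stamps
signal = np.sin(2 * np.pi * 220.0 * t)        # synthetic 220 Hz tone, not real speech

# Split the signal into short frames, the unit on which features are computed.
frame_length = 400                            # 50 ms at 8000 Hz
frames = signal.reshape(-1, frame_length)     # 20 frames of 400 samples each

# Magnitude spectrum of each frame: how much energy sits at each frequency.
spectrum = np.abs(np.fft.rfft(frames, axis=1))
freqs = np.fft.rfftfreq(frame_length, d=1.0 / sample_rate)

dominant = freqs[spectrum.argmax(axis=1)]     # strongest frequency in every frame
print(spectrum.shape)    # (20, 201)
print(dominant[0])       # 220.0
```

A table of numbers like `spectrum`, one row per short slice of audio, is the kind of input a Deep Learning model can then be trained on.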

Conclusion

Even as this blog concludes, the journey of Deep Learning With Python has only just begun. Marrying Python's versatility with DL's capabilities creates a transformative alliance. As you progress through the world of AI and ML, this combination promises to shape the technological landscape and enrich your daily experiences.
