
What is Docker? Imagine having a magic box where you could neatly pack your app, its favourite tools, and everything it needs to run smoothly, then carry it anywhere without a hitch. That’s Docker! This robust platform takes the hassle out of the “it works on my machine” drama by using containers: lightweight, portable environments that keep your app cosy and consistent.

Whether you're a developer building dreams or an operations wizard keeping things afloat, Docker simplifies workflows, speeds up deployments, and transforms how we build, ship, and run software in today’s fast-moving digital world.

Table of Contents

1) Understanding What is Docker Container

2) Comparing Containers and Virtual Machines

3) Key components of Docker Container

4) Getting started with Docker Containers

5) Advantages of Docker Container

6) Is Docker a Virtual Machine?

7) What is Docker for Dummies?

8) Conclusion

Understanding What is Docker Container

Containers are the runnable instances created from Docker Images. They encapsulate the application and all its dependencies, providing a consistent and isolated runtime environment.

Containers are designed to be portable, allowing them to run on any compatible host system that has Docker installed. Each Container shares the host system's kernel but runs in its own isolated user space, so Containers do not interfere with each other or with the host system. The image a Container is created from is immutable: changes made at runtime are written to the Container's own writable layer, which is discarded when the Container is removed, unless those changes are explicitly committed to a new image. This is the key distinction between a Docker Image and a Container.
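To see the difference between an image and a Container's writable layer, the minimal sketch below wraps a few standard Docker CLI commands in a shell function. It assumes a running Docker daemon; alpine:latest is a real public image, while the function name, the container name scratchpad, and the image name alpine-with-note:v1 are hypothetical.

```shell
# Sketch: runtime changes live in the container's writable layer until committed.
# "commit_demo", "scratchpad" and "alpine-with-note:v1" are hypothetical names.
commit_demo() {
  # Create a file inside a new container (written to its writable layer)
  docker run --name scratchpad alpine:latest sh -c 'echo hello > /note.txt'
  # Save the container's filesystem changes as a new image; alpine:latest is unchanged
  docker commit scratchpad alpine-with-note:v1
  # Removing the container discards its writable layer
  docker rm scratchpad
  # A fresh container from the committed image still has the file
  docker run --rm alpine-with-note:v1 cat /note.txt
}
```

The function ends by printing hello, while a new container started from plain alpine:latest has no /note.txt. For repeatable builds, a Dockerfile is usually preferred over docker commit.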

Comparing Containers and Virtual Machines

Containers and Virtual Machines offer comparable advantages in resource isolation and allocation, but they work very differently: Virtual Machines virtualise the hardware, whereas Containers virtualise the Operating System. This fundamental distinction is why Containers are known for their portability and efficiency. Here’s a brief comparison between the two:

Containers

Containers are an abstraction at the application layer that bundles code and its dependencies into a single package. Multiple Containers can run on the same machine, sharing the host's OS kernel while executing as isolated processes in user space. Compared with Virtual Machines, Containers are more lightweight and efficient, with Container images typically measured in megabytes. This efficiency lets a single host run more applications, reducing the need for multiple Virtual Machines and separate Operating Systems.
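A quick way to see that Containers share the host's kernel rather than booting their own is to compare kernel versions. This sketch assumes a Linux host with Docker installed; on Docker Desktop for macOS or Windows, the container reports the kernel of Docker's helper VM instead. The function name is hypothetical.

```shell
# Sketch: on a Linux host, a container reports the same kernel release as the host,
# because it is an isolated process rather than a separate operating system.
compare_kernels() {
  echo "host:      $(uname -r)"
  echo "container: $(docker run --rm alpine:latest uname -r)"
}
```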

Virtual Machines (VMs)

Virtual Machines are an abstraction of physical hardware, turning one server into many virtual servers. This virtualisation is made possible by a hypervisor, which allows multiple VMs to run simultaneously on a single host machine. Each VM contains a complete copy of an Operating System, the application, and the necessary binaries and libraries, which together often take up gigabytes of storage. Because each VM must boot a full Operating System, VMs are also typically slower to start than Containers.


Key Components of Docker Container

Understanding the key components of Docker is crucial for working with Containers effectively. They form the foundation of Docker's functionality and enable developers to harness the full potential of Containerisation, making application development and deployment more efficient and scalable. Let's discuss these components one by one:

Docker Engine

The Docker Engine is the core of the platform. It is a client-server application that allows developers to build, run, and manage containers. It consists of several components, including the following:

a) Docker Daemon: The Docker daemon is responsible for running and managing containers on the host system.

b) REST API: The REST API defines how programs, including the Docker CLI, talk to the daemon, allowing the Docker Engine to be controlled locally or remotely.

c) Command-Line Interface (CLI): The CLI provides a user-friendly interface for interacting with Docker and executing commands to manage Containers, images, networks, and volumes.
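Because the CLI is a client of the daemon's REST API, the same information can be fetched either way. This sketch assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock and your user may access it; the function names are hypothetical.

```shell
# Ask the daemon for its version through the CLI...
engine_version_cli() {
  docker version --format '{{.Server.Version}}'
}

# ...or call the Docker Engine REST API directly over its Unix socket.
# /version and /containers/json are Engine API endpoints; "localhost" in the
# URL is only a placeholder host when --unix-socket is used.
engine_version_api() {
  curl --silent --unix-socket /var/run/docker.sock http://localhost/version
}

running_containers_api() {
  curl --silent --unix-socket /var/run/docker.sock http://localhost/containers/json
}
```

The API calls return JSON, which is the same data the CLI formats for you.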

Docker Images

Docker Images serve as the foundation for creating Containers. They are standalone and executable packages that encapsulate the application code, runtime environment, system tools, libraries, and dependencies required to run an application.

Docker Images follow the "layered" approach, where each layer represents a specific component or modification. This layered architecture enables efficient sharing and reusability of common components among different images. Thus, it minimises storage space and accelerates Container deployment.
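The layers of any image can be inspected with two standard commands. This sketch assumes a running daemon and network access to Docker Hub; show_layers is a hypothetical helper name.

```shell
# Sketch: inspect the layers that make up an image.
# Defaults to nginx:latest when no image name is passed.
show_layers() {
  image="${1:-nginx:latest}"
  docker pull "$image"
  # One row per build step; steps that only set metadata show a size of 0B
  docker history "$image"
  # Content digests of the filesystem layers, from the base layer upwards
  docker image inspect --format '{{json .RootFS.Layers}}' "$image"
}
```

If two images are built on the same version of a base image, their leading layer digests match, which is how Docker stores that shared content only once.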

Docker Registry

The Docker Registry is a central repository for storing and distributing Docker Images. It can be a cloud-based service or an on-premises installation, allowing developers to share their custom-built images with others or pull pre-built images from the registry.

Docker Hub is the most popular public Docker Registry, offering a vast collection of official and community-contributed images. Organisations may also set up private Docker Registries for enhanced security and control over their Container images.

Docker Hub serves as a central hub for container images and is a vital part of the Docker ecosystem. It offers many features designed to simplify the containerisation process and enhance developer productivity.

One of the most significant advantages of Docker Hub is its collection of trusted images. Docker Official Images are a curated set maintained by Docker, often in collaboration with the upstream project, while Docker Verified Publisher images are published directly by software vendors whose identity Docker has verified. Both give users more confidence in an image's origin and quality.
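To try a private registry locally, Docker's open-source registry image can run as an ordinary container. This sketch assumes port 5000 is free on your machine; the function names and the my-nginx repository name are hypothetical.

```shell
# Start a private registry on localhost:5000 using the registry:2 image.
start_local_registry() {
  docker run -d -p 5000:5000 --restart=always --name registry registry:2
}

# Copy an image from Docker Hub into the local registry.
# $1: source image (e.g. nginx:latest), $2: repository name in the local registry
mirror_to_local_registry() {
  src="$1"
  dest="localhost:5000/$2"
  docker pull "$src"
  # The registry host is part of the tag; it tells docker push where to send the image
  docker tag "$src" "$dest"
  docker push "$dest"
}
```

After running mirror_to_local_registry nginx:latest my-nginx, the command docker pull localhost:5000/my-nginx fetches the image from your own registry instead of Docker Hub.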

Dockerfile

A Dockerfile is a text file containing a series of instructions for building a Docker Image. It serves as the blueprint for the image, detailing the following:

a) Base Image

b) Application Code

c) Dependencies

d) Environment Variables

e) Other Configurations

Developers use Dockerfiles to automate the image creation process, ensuring consistency and reproducibility. When you run docker build, the Docker Engine reads the Dockerfile and executes its instructions step by step, generating the final Docker Image.
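A minimal Dockerfile for a small Python app might look like the one below, written out by a shell function so it can be pasted into a terminal. The base image python:3.12-slim is real; the files requirements.txt and app.py, the APP_ENV variable, and the function name are hypothetical stand-ins for your own project.

```shell
# Write an example Dockerfile into the current directory.
write_dockerfile() {
  cat > Dockerfile <<'EOF'
# Base image
FROM python:3.12-slim
# Working directory for the following instructions
WORKDIR /app
# Install dependencies first so this layer is reused when only code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the application code
COPY . .
# Environment variable visible to the app at runtime
ENV APP_ENV=production
# Default command when a container starts
CMD ["python", "app.py"]
EOF
}
```

Instructions that change the filesystem, such as COPY and RUN, add layers to the image. Once requirements.txt and app.py exist, docker build -t my-app:1.0 . would build it.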

Docker Volumes

Docker Volumes provide a mechanism for data persistence beyond the Container's lifecycle. By default, containers are temporary, and any data written to the Container's file system is lost when the Container is removed.

Volumes allow storing data externally from the Container. This ensures that critical data remains intact even if the container is stopped, destroyed, or recreated. Moreover, volumes can be used to share data among Containers or between Containers and the host system.
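The sketch below shows a named volume outliving the containers that use it, plus a bind mount that shares a host directory. It assumes a running daemon; the volume name app-data, the file names, and the function name are hypothetical.

```shell
# Sketch: named volume persistence and a bind mount.
volume_demo() {
  docker volume create app-data
  # Write into the volume from a container that is removed immediately (--rm)
  docker run --rm -v app-data:/data alpine:latest sh -c 'echo persisted > /data/state.txt'
  # A brand-new container mounting the same volume still sees the file
  docker run --rm -v app-data:/data alpine:latest cat /data/state.txt
  # Bind-mount the current host directory to share files with the host
  docker run --rm -v "$PWD":/work alpine:latest ls /work
}
```

The second docker run prints persisted even though the container that wrote the file no longer exists; docker volume rm app-data deletes the data when it is no longer needed.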

Docker Architecture

The Docker Architecture comprises three primary components: the Docker client, responsible for initiating Docker Commands; the Docker Host, which runs the Docker Daemon; and the Docker Registry, where Docker Images are stored. Within this framework, the Docker Daemon, operating on the Docker Host, manages both images and containers.
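The client and the daemon are separate programs, which the sketch below makes visible: docker version reports both sides, and the DOCKER_HOST variable points the same client at a daemon on another machine. It assumes SSH access to a host where the remote user can run Docker; the function names and the SSH target are hypothetical.

```shell
# Print the client version and the version of the daemon it is talking to.
client_and_daemon() {
  docker version --format 'client {{.Client.Version}} / daemon {{.Server.Version}}'
}

# List containers on a remote Docker Host over SSH.
# $1: hypothetical SSH target such as user@build-server
remote_ps() {
  DOCKER_HOST="ssh://$1" docker ps
}
```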


Getting started with Docker Containers

Getting started with Docker Containers is an exciting journey that opens up a world of possibilities for developers and DevOps professionals. Whether you are new to Docker or looking to refresh your knowledge, this section will guide you through the essential steps to begin working with Docker Containers:

Installing Docker

The first step is to install Docker on your machine. Docker provides installation packages for Windows, macOS, and many Linux distributions. Visit the official Docker website and follow the installation instructions specific to your platform. Once installed, Docker will be accessible from the command line, enabling you to interact with Containers and images.
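After installing, a quick check like the one below can confirm that the CLI is on your PATH and can reach the daemon. This is a minimal sketch; check_docker is a hypothetical helper name.

```shell
# Sketch: confirm the CLI is installed and can reach the Docker daemon.
check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found on PATH" >&2
    return 1
  fi
  docker --version
  # docker info needs a working connection to the daemon
  if docker info >/dev/null 2>&1; then
    echo "daemon: reachable"
  else
    echo "daemon: not reachable (is Docker running?)" >&2
    return 1
  fi
}
```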

Docker Hub Account

To get the most out of Docker, consider creating a Docker Hub account. Docker Hub is a cloud-based repository for Docker Images, where you can find a vast collection of pre-built images contributed by the community. Public images can be pulled without an account, but having one lets you push and share your custom images, collaborate with others, and benefit from higher pull rate limits than anonymous users.

Running the first Docker Container

With Docker successfully installed, you can now run your first Container. Open your terminal or command prompt and enter the following command:

docker run hello-world

If the "hello-world" image is not already available locally, this command pulls it from Docker Hub and then runs it as a container. The output confirms that your Docker installation is working correctly.

Exploring Docker Images

Docker Images form the basis of containers. To explore the images available on your system, use the following command:

docker images

This command will display a list of locally available images, including their names, tags, sizes, and creation dates.

Running Custom Containers

Beyond "hello-world," you can run various other Containers to explore different applications and services. To run a Container, you need to specify an image and any required options. For example:

docker run -d -p 8080:80 nginx:latest

This command runs an instance of the latest Nginx web server image in detached mode (-d) and maps port 8080 of the host system to port 80 of the Container.

Creating Custom Docker Images

To build a Docker Image, you need to write a Dockerfile, which contains instructions for assembling the image. The Dockerfile defines the base image, sets up the application environment, and specifies any dependencies required. Once you have a Dockerfile ready, use the following command to build the image:

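docker build -t <image_name> <path>

Replace <image_name> with the desired name for your image and <path> with the directory containing your Dockerfile (use . for the current directory).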

Publishing Images to Docker Hub

If you have created a custom image that you wish to share with others, you can publish it to Docker Hub. Before pushing the image, make sure you are logged in to Docker Hub (for example, with docker login). Tag your image with your Docker Hub username and the desired repository name:

docker tag <image_name> <username>/<repository_name>

Finally, push the image to Docker Hub:

docker push <username>/<repository_name>

Managing Docker Containers

Docker provides several commands to manage Containers effectively. You can start, stop, and restart Containers using straightforward commands. Monitoring Container performance is also possible through built-in tools such as docker stats and docker logs, which provide valuable insights into resource usage and bottlenecks.
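The everyday lifecycle and monitoring commands are sketched below for a single container. They are grouped in one function only for compactness; in practice you would run them individually. The function name and the default container name web are hypothetical.

```shell
# Sketch: common lifecycle and monitoring commands for one container.
# $1: container name (defaults to a hypothetical "web")
manage_container() {
  name="${1:-web}"
  docker ps                         # list running containers
  docker stop "$name"               # SIGTERM, then SIGKILL after a grace period
  docker start "$name"              # start the stopped container again
  docker restart "$name"            # stop and start in one step
  docker logs --tail 20 "$name"     # last 20 lines of the container's output
  docker stats --no-stream "$name"  # one snapshot of CPU, memory, network and I/O
}
```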

Learn about the basic concepts of Docker with our Introduction to Docker Training. Join today!

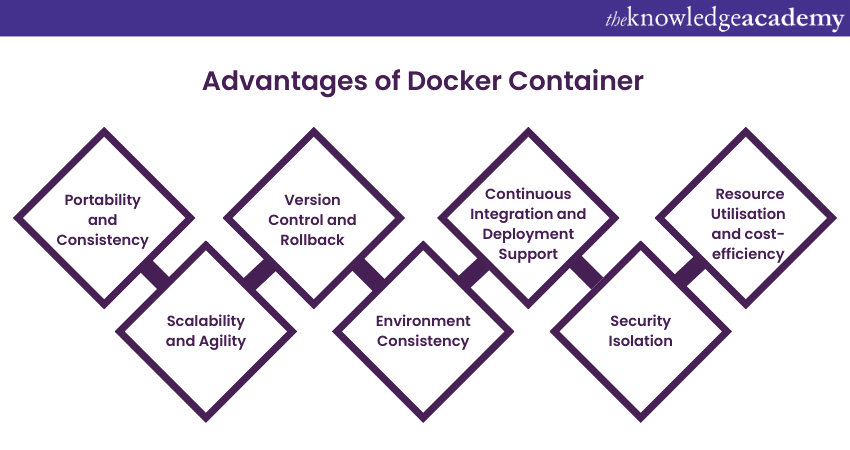

Advantages of Docker Container

Docker Containers provide many advantages. Let's have a detailed look at each one of them:

Portability and Consistency

Docker Containers encapsulate the application, its dependencies, and the runtime environment in a self-sufficient package. This encapsulation ensures that Containers are highly portable, as they can be run consistently across different environments, regardless of the underlying infrastructure.

Developers can create a container on their local development machine and confidently deploy it to various platforms, such as testing, staging, or production, with minimal configuration changes. The consistency achieved through Containerisation streamlines the development and deployment process, reducing the chances of "works on my machine" issues.

Scalability and Agility

Docker Containers facilitate easy scaling of applications. Since Containers are lightweight and start quickly, developers can rapidly replicate and deploy multiple instances of the same container to handle increased demand.

This horizontal scaling approach allows applications to scale out efficiently, responding dynamically to fluctuations in user traffic. Docker's agility in scaling and provisioning new Containers enables developers to iterate and update applications seamlessly. This promotes a DevOps culture of Continuous Integration and Continuous Delivery.
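Horizontal scaling can be sketched by hand as several identical containers on different host ports. This is a simplified illustration, assuming ports 8081 onwards are free; a real deployment would put a load balancer in front, or use Docker Compose (docker compose up --scale) or an orchestrator such as Kubernetes. The function and container names are hypothetical.

```shell
# Sketch: start several identical nginx containers on consecutive host ports.
# $1: number of replicas (default 3). Names web-1, web-2, ... are hypothetical.
scale_out() {
  count="${1:-3}"
  i=1
  while [ "$i" -le "$count" ]; do
    # Replica i listens on host port 8080 + i and forwards to port 80 in the container
    docker run -d --name "web-$i" -p "$((8080 + i)):80" nginx:latest
    i=$((i + 1))
  done
}
```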

Version Control and Rollback

Docker Images serve as immutable snapshots of application code and dependencies. This immutability allows developers to version their images, maintaining a history of changes made to the application.

Moreover, Version Control of Docker Images ensures that any change can be tracked and reverted if necessary. This feature is invaluable for rolling back to previous versions in case of issues or bugs, promoting a robust and controlled release process.
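Versioning and rollback can be sketched with image tags: each release gets its own tag, and rolling back means redeploying an older one. The repository myuser/my-app, the container name my-app, and the assumption that the app listens on port 80 are all hypothetical.

```shell
# Publish a versioned image. $1: version tag, e.g. 1.1.0
release() {
  docker build -t "myuser/my-app:$1" . || return 1
  docker push "myuser/my-app:$1"
}

# Run a specific version, replacing any existing my-app container.
# $1: version tag to deploy
deploy() {
  # Ignore the error if no my-app container exists yet
  docker rm -f my-app 2>/dev/null || true
  docker run -d --name my-app -p 8080:80 "myuser/my-app:$1"
}
```

Rolling back is then a matter of running deploy with the previous tag. Tags can be reassigned, so for a strictly reproducible rollback you can reference an image by digest instead (myuser/my-app@sha256:<digest>).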

Environment Consistency

Docker Containers eliminate the "it works on my machine" problem by providing consistent environments for development, testing, and production. Developers can package all the required dependencies within the container. This ensures that the application behaves consistently across various environments. Moreover, this consistency minimises the risk of environment-related bugs and ensures a smooth deployment process.

Continuous Integration and Deployment (CI/CD) Support

Docker's compatibility with CI/CD pipelines enables seamless integration into modern DevOps practices. Developers can automate the build, testing, and deployment processes using Docker Containers. This enables faster, more reliable releases with fewer errors. Additionally, the ability to run the same container locally and in production ensures that any issues identified during the testing phase can be quickly resolved before deployment.
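A CI job built around Docker often follows a build, test, push sequence, so only images that pass their tests are published. This sketch assumes your CI system supplies the repository name and a version or commit SHA, and that the image contains a pytest test suite; the function name and the test command are hypothetical.

```shell
# Sketch of a CI job: build, test inside the image, and push only if tests pass.
# $1: image repository, $2: version or commit SHA
ci_pipeline() {
  image="$1:$2"
  docker build -t "$image" . || return 1
  # Run the (assumed) test command inside the freshly built image
  docker run --rm "$image" pytest || return 1
  docker push "$image"
}
```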

Security Isolation

Docker Containers rely on several Linux kernel security mechanisms, such as namespaces, control groups (cgroups), and capability restrictions, to separate Containers from the host system and from each other. This isolation reduces the risk of security breaches, as malicious activity within a container is largely confined to that specific Container, helping to protect the host system and other Containers. Because all Containers share the host kernel, however, this isolation is not as strong as that of a Virtual Machine.
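Isolation can be tightened further with standard docker run flags. The sketch below is one possible hardened configuration, assuming an image that can run as a non-root user and only needs to write to /tmp; many images (including some web servers) need adjustments before they work this way. The function and container names are hypothetical.

```shell
# Sketch: run a container with extra restrictions on top of Docker's defaults.
# $1: image to run; "hardened-app" is a hypothetical container name.
run_hardened() {
  # --read-only: root filesystem is read-only; --tmpfs /tmp keeps /tmp writable
  # --cap-drop ALL: drop all Linux capabilities
  # no-new-privileges: processes cannot gain privileges (e.g. via setuid binaries)
  # --user: run as an unprivileged UID:GID instead of root
  docker run -d --name hardened-app \
    --read-only \
    --tmpfs /tmp \
    --cap-drop ALL \
    --security-opt no-new-privileges \
    --user 1000:1000 \
    "$1"
}
```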

Resource Utilisation and Cost-efficiency

Docker's lightweight nature ensures that containers share the host system's resources efficiently, reducing hardware and infrastructure costs. The ability to run multiple containers on a single host system maximises resource utilisation. This ability makes Docker an economical choice for deploying applications at scale.
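Packing many containers onto one host works best when each has resource limits. This sketch uses the standard --memory and --cpus flags and reads the recorded limits back with docker inspect; the function names and their arguments are hypothetical placeholders.

```shell
# Sketch: cap a container's share of the host so many can run side by side.
# $1: container name, $2: image
run_limited() {
  # At most 256 MiB of RAM and half of one CPU
  docker run -d --name "$1" --memory 256m --cpus 0.5 "$2"
}

# Show the limits Docker recorded: memory in bytes, CPU in billionths of a CPU
show_limits() {
  docker inspect --format '{{.HostConfig.Memory}} {{.HostConfig.NanoCpus}}' "$1"
}
```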

Is Docker a Virtual Machine?

No, Docker is not a virtual machine. It is a containerisation platform that isolates applications and their dependencies in lightweight containers that share the host operating system's kernel.

What is Docker for Dummies?

Docker simplifies application deployment by packaging code and dependencies into portable containers. Think of it as a lightweight, portable mini-environment for running software consistently across different systems.

Conclusion

Docker revolutionises software development and deployment by enabling consistent, scalable application environments. Using lightweight containers, Docker streamlines workflows, reduces resource usage, and ensures reliability across different systems. Understanding what Docker is and how it works empowers developers to simplify application management, enhance collaboration, and accelerate delivery in today’s fast-paced technological landscape.


Frequently Asked Questions

What are the Benefits of Using Docker?

Docker offers portability, scalability, and efficiency. It enables consistent environments across development, testing, and production, reduces resource usage compared to virtual machines, and streamlines software deployment and management.

Can Docker run on any Operating System?

Docker can run on major operating systems like Linux, Windows, and macOS. However, it uses different methods for containerisation, such as native support on Linux or a virtual machine backend on macOS and Windows.
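To see which setup you have, you can ask the client and the daemon which operating system each is running on. This is a minimal sketch with a hypothetical function name; on Docker Desktop for macOS or Windows the daemon typically reports linux, because it runs inside a lightweight VM.

```shell
# Sketch: report the OS/architecture of the CLI client and of the daemon.
where_is_docker() {
  docker version --format 'client: {{.Client.Os}}/{{.Client.Arch}}  daemon: {{.Server.Os}}/{{.Server.Arch}}'
  # Human-readable description of the daemon's operating system
  docker info --format '{{.OperatingSystem}}'
}
```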
