We may not have the course you’re looking for. If you enquire or give us a call on + 1-866 272 8822 and speak to our training experts, we may still be able to help with your training requirements.

Training Outcomes Within Your Budget!

We ensure quality, budget-alignment, and timely delivery by our expert instructors.

Imagine a bustling database where multiple transactions juggle data like acrobats on a tightrope. But fear not! Concurrency Control in DBMS steps in as the ringmaster, ensuring a flawless performance without data inconsistencies.

This blog dives into the key aspects of Concurrency Control in DBMS, detailing its importance, key principles, methods and more! Read on and learn how this process keeps your Database Management Systems (DBMS) operations running like a well-oiled machine.

Table of Content

1) Understanding Concurrency

2) What is Concurrency Control in DBMS?

3) The Need for Concurrency Control

4) Key Principles of Concurrency Control

5) Concurrency Control Methods in DBMS

6) Concurrent Execution in DBMS

7) Challenges in Concurrency Control

8) Advantages of Concurrency

9) Conclusion

Understanding Concurrency

Concurrency is a problem that occurs in transactions when multiple users simultaneously try to perform operations like reading or writing data or information. Concurrency arises when the user accesses or modifies data in a consistent state. However, after performing some operations, it leads to an inconsistent data state with potential conflicts and errors.

What is Concurrency Control in DBMS?

Concurrency Control is a process of managing and executing simultaneous transactions or manipulating data by multiple processes or by users without data loss, data integrity, or data inconsistency. It provides a way for users to execute simultaneous operations in DBMS. In DBMS, two types of operations are mainly for a transaction: read (R) and write (W). We will cover each of these operations in detail next.

Concurrency Control's main goal is to ensure that the transactions executed in a DBMS do not lead to data inconsistency. This is achieved through the concept of serializability, a clear and effective tool for managing Concurrency. However, if not managed effectively, concurrent execution of transactions can lead to a host of issues, including:

1) Inconsistent data

2) Lost updates

3) Uncommitted data

4) Inconsistent retrievals

The Need for Concurrency Control

Concurrency Control is essential in DBMS for many reasons, including the following:

1) Ensuring Database Consistency: Proper Concurrency Control ensures the database stays consistent even after numerous concurrent transactions.

2) Avoiding Conflicting Updates: When two transactions attempt to update the same data simultaneously, one update might overwrite the other without proper control. Concurrency Control mitigates this issue.

3) Preventing Dirty Reads: Without Concurrency Control, one transaction might read data that another transaction is in the middle of updating (but hasn’t been finalised). This can lead to inaccurate reads, where the data doesn’t reflect the final, committed state.

4) Enhancing System Efficiency: Concurrency Control allows multiple transactions to be processed in parallel, improving system throughput and optimising resource use.

5) Protect Transaction Atomicity: In a series of operations within a transaction, it’s crucial that all operations succeed (commit) or none do (abort). Concurrency Control ensures that transactions are treated as a single indivisible unit, even when executed concurrently with others.

From gaining a deeper understanding of logical and conditional operators to learning how to connect with mainframe and network applications, our Teradata Training covers it all - Sign up now!

Key Principles of Concurrency Control

The principles of Concurrency Control are the ones upon which Concurrency is built, and these include:

1) Atomicity (A)- Transactions are atomic, meaning they are complete or not at all.

2) Consistency (C)- The database must transition from one consistent state to another, preserving data integrity.

3) Isolation (I)- Transactions should appear as though they are executed in isolation, meaning the operations of one transaction are not visible to other transactions until they are completed.

4) Durability (D)- Once a transaction is committed, its changes are permanent, even in a system failure.

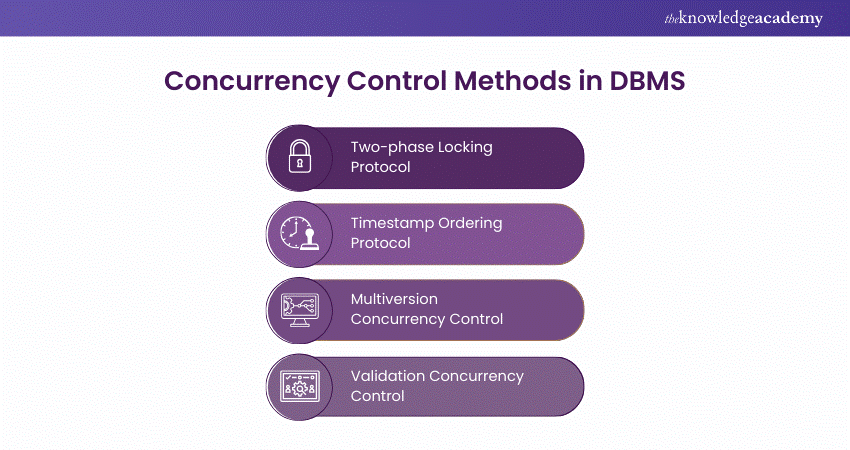

Concurrency Control Methods in DBMS

The various Concurrency Control methods include two-phase locking Protocol, timestamp ordering Protocol, multiversion Concurrency Control and validation Concurrency Control. We explore them in detail below:

1) Two-phase Locking Protocol

Two-phase locking (2PL) is a protocol used in Database Management systems to control Concurrency and ensure transactions are executed in a way that preserves a database's consistency. It’s called “two-phase” because each transaction has two distinct phases: the Growing phase and the Shrinking phase.

Here's a breakdown of the two-phase locking protocol:

1) Phases:

a) Growing Phase: During this phase, a transaction can obtain (acquire) any number of locks as required but cannot release any. This phase continues until the transaction acquires all the locks it needs and no longer requests.

b)Shrinking Phase: Once the transaction releases its first lock, it starts. The transaction can be released during this phase, but no more locks can be acquired.

2) Lock Point: The lock point is the date when the transaction switches from the growing phase to the shrinking phase (i.e., when it releases its first lock).

The primary purpose of this protocol is to ensure conflict-serializability. The protocol ensures that a transaction does not interfere with others in ways that give inconsistent results.

2) Timestamp Ordering Protocol

The Time stamp Ordering Protocol is a Concurrency Control method used in Database Management systems to maintain transaction serialisability. This method uses a timestamp for each transaction to determine its order compared to other transactions. Instead of using locks, it ensures transaction orders based on their timestamps.

Here's a breakdown of the Time stamp ordering protocol

a) Read Timestamp (RTS): This is the latest or most recent transaction timestamp that has read the data item. Every time a data item X is read by a transaction T with timestamp TS, the RTS of X is updated to TS if TS is more recent than the current RTS of X.

b) Write Timestamp (WTS): This is a transaction's latest or most recent timestamp that has written or updated the data item. Whenever a data item X is written by a transaction T with timestamp TS, the WTS of X is updated to TS if TS is more recent than the current WTS of X.

This protocol uses these to determine whether a transaction’s request to read or write a data item should be granted. Based on their timestamps, the protocol ensures a consistent ordering of operations, preventing the formation of cycles and deadlocks.

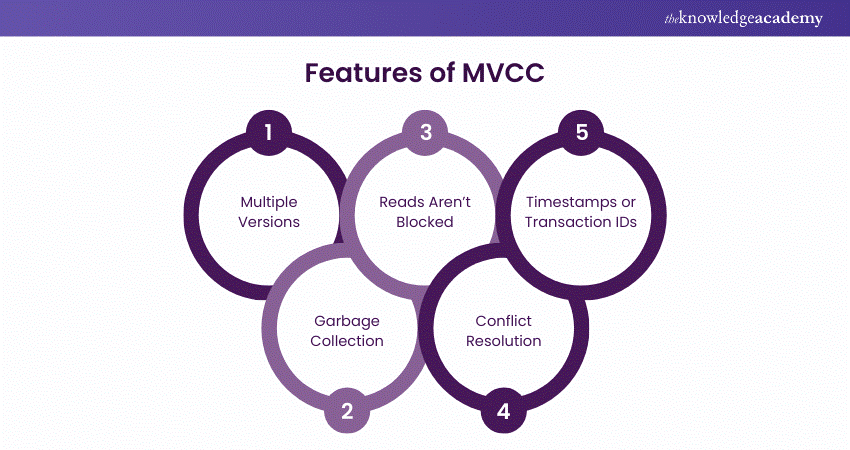

3) Multiversion Concurrency Control

Multi-version Concurrency Control (MVCC) is a method used in Database Management systems to handle concurrent operations without conflicts. It employs multiple versions of a data item. Instead of locking the items for write operations (which can reduce Concurrency and lead to bottlenecks), MVCC creates a separate version of the data item being modified.

Here are some essential points to remember about this protocol:

a) Multiple Versions: When a transaction modifies a data item, instead of changing the item in place, it creates a new version of that item. This means that multiple versions of a database object can exist simultaneously.

b) Reads aren’t Blocked: One significant advantage of MVCC is that write operations do not block read operations. When a transaction reads a data item, it sees a version of that item consistent with the last time it began a transaction or issued a read, even if other transactions are currently modifying that item.

c) Timestamps or Transaction IDs: Each version of a data item is tagged with a unique identifier, typically a timestamp or a transaction ID. This identifier determines which version of the data item a transaction sees when it accesses that item. A transaction will always see its writes, even if they are uncommitted.

d) Garbage Collection: As transactions create newer versions of data items, older versions can become obsolete. A background process typically cleans up these old versions. This procedure is often referred to as “garbage collection.”

e) Conflict Resolution: If two transactions try to modify the same data item concurrently, the system will need to resolve the conflict. Different systems have different conflict resolution methods. A common one is that the first transaction to commit will succeed, and the other transaction will be rolled back.

Acquire deeper knowledge of logical data structure, database constraints and more in our Relational Databases & Data Modelling Course - Sing up now!

4) Validation Concurrency Control

Validation Concurrency Control (VCC) is an advanced database Concurrency Control technique. Instead of acquiring locks on data items, as in most traditional (pessimistic) Concurrency Control techniques, validation Concurrency Control allows transactions to work on private copies of database items and validates the transactions only at the time of commit.

The central idea behind VCC is that conflicts between transactions are exceptionally rare, providing reassurance that it’s better to let transactions run to completion and only check for conflicts at commit time.

The essential features of VCC to remember include:

1) Phases: Each transaction in VCC goes through three distinct phases:

a) Read Phase: The transaction reads values from the database and changes its private copy, a local, temporary version of the data that only the transaction can see and modify without affecting the actual database.

b) Validation Phase: Before committing, the transaction rigorously checks if the changes made to its private copy can be safely written to the database without causing conflicts, ensuring the system's reliability and your peace of mind.

c) Write Phase: If validation succeeds, the transaction updates the actual database with the changes made to its private copy.

2) Validation Criteria: The system checks for potential conflicts with other transactions during the validation phase. For instance, if two transactions attempt to update the same data item, a conflict is detected. If a conflict is found, the system can either roll back the transaction or delay it for a retry, depending on the strategy implemented.

Concurrent Execution in DBMS

Concurrent execution in DBMS involves the following:

1) In a multi-user system, the concept of concurrent execution allows multiple users to access and use the same database at the same time. This enhances the system's efficiency and facilitates collaborative work, as different users can execute the same database simultaneously.

2) While working on database transactions, multiple users may be required to use the database to perform different operations, in which case the database is executed concurrently.

3) The simultaneous execution should be done in an interleaved manner, and no operation should affect the other executing operations. This maintains the consistency of the database. Thus, in the concurrent execution of the transaction operations, several challenging problems occur that need to be solved.

Lay a strong foundation in the field of Database Management through our Introduction To Database Course – Register now!

Challenges in Concurrency Control

Several challenges arise when numerous transactions execute simultaneously in a random manner, which are referred to as Concurrency Control Problems. We explore some of them below:

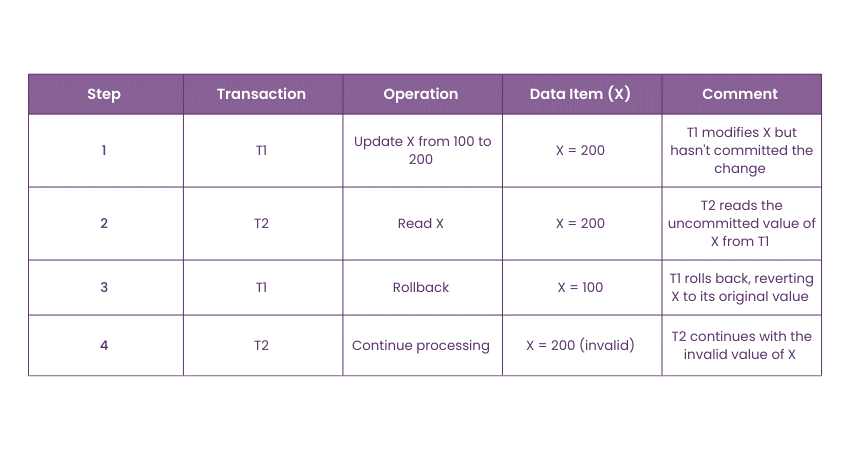

Dirty Read Issue

The dirty read issue in DBMS occurs when a transaction reads the data updated by another uncommitted transaction. It arises when multiple uncommitted transactions execute simultaneously. Here’s an example of a dirty read issue:

This summarises highlights the inconsistency caused by reading uncommitted data.

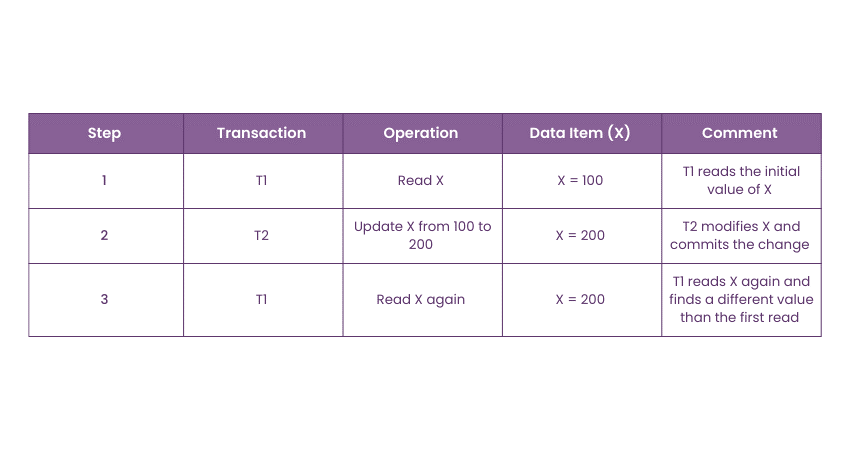

Unrepeatable Read Issue

An unrepeatable read issue occurs when a transaction reads the same data item multiple times and finds different values because another transaction has modified and committed the data between the reads. Here’s an example:

As you can see, T1 reads the value of X twice and finds different values due to T2's commitment between the two reads.

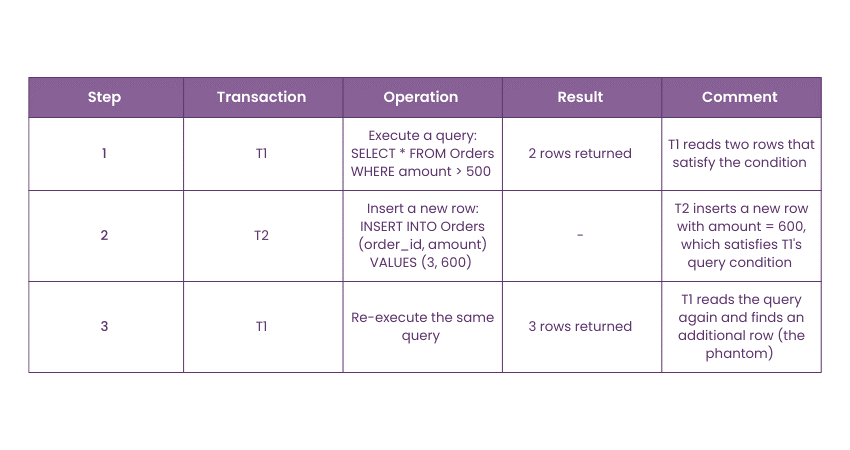

Phantom Read Issue

In the phantom read issue, data is read through two different read operations in the same transaction. A data value is obtained in the first read operation, but in the second operation, an error is obtained saying the data does not exist. Here's an example:

As this example shows, T1 executes a query, and T2 inserts a new row that matches the query condition, causing T1 to see different results when the query is re-executed.

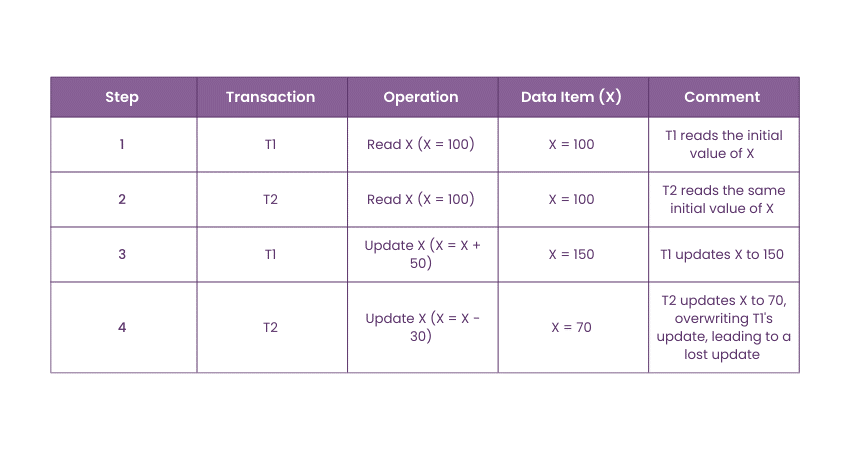

Lost Update Issue

A lost update issue occurs when two transactions read the same data and then update it based on the value read, while one of the updates gets overwritten by the other. Here’s an example to illustrate this issue:

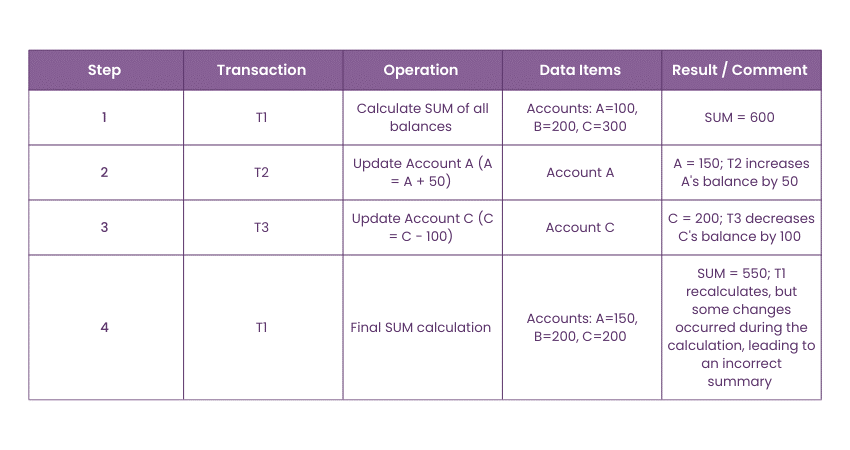

Incorrect Summary Issue

The Incorrect summary problem occurs when the sum of the two data is incorrect. This happens when a transaction tries to sum two data using an aggregate function, and the value of any data gets changed by another transaction. The following example illustrates this issue:

Here, T1 tries to calculate the total balance of accounts. Meanwhile, T2 and T3 make updates. These updates caused T1 to produce an incorrect total because it did not account for all the changes that happened during its calculation.

Advantages of Concurrency

Concurrency generally means that more than one transaction can work on a system. The advantages of a concurrent system are:

1) Waiting Time: When a process is ready to execute but is delayed by system constraints, it results in waiting time. Concurrency significantly reduces this waiting time, enhancing system performance.

2) Response Time: The time wasted getting the response from the CPU for the first time is called response time. So, Concurrency leads to less Response Time.

3) Resource Utilisation: The amount of resource utilised in a particular system is called Resource Utilisation. Multiple transactions can run parallel in a system. So, Concurrency leads to more Resource Utilisation.

4) Efficiency: The output produced compared to the given input is called Efficiency. So, Concurrency leads to more Efficiency.

Conclusion

In conclusion, mastering Concurrency Control in DBMS is essential for ensuring data integrity and efficient operations across multi-user environments. The right techniques can help avoid data anomalies, prevent conflicts, and optimise database performance. Keep these principles in mind, and you'll be well-equipped to handle the challenges of concurrent transactions confidently.

Elevate your understanding of Database Management with our comprehensive InfluxDB Training – Sign up now!

Frequently Asked Questions

The four points of Concurrency are atomicity, consistency, isolation and durability

The major factor for Concurrency Control is ensuring data consistency while allowing multiple transactions to execute simultaneously.

The Knowledge Academy takes global learning to new heights, offering over 30,000 online courses across 490+ locations in 220 countries. This expansive reach ensures accessibility and convenience for learners worldwide.

Alongside our diverse Online Course Catalogue, encompassing 19 major categories, we go the extra mile by providing a plethora of free educational Online Resources like News updates, Blogs, videos, webinars, and interview questions. Tailoring learning experiences further, professionals can maximise value with customisable Course Bundles of TKA.

The Knowledge Academy’s Knowledge Pass, a prepaid voucher, adds another layer of flexibility, allowing course bookings over a 12-month period. Join us on a journey where education knows no bounds.

The Knowledge Academy offers various Database Courses, including Redis Cluster Database Training and the Teradata Course. These courses cater to different skill levels, providing comprehensive insights into What is DBMS.

Our Programming & DevOps Blogs cover a range of topics related to Database Management, offering valuable resources, best practices, and industry insights. Whether you are a beginner or looking to advance your DBMS skills, The Knowledge Academy's diverse courses and informative blogs have got you covered.

Upcoming Programming & DevOps Resources Batches & Dates

Date

Introduction to Database Training

Introduction to Database Training

Fri 10th Jan 2025

Fri 14th Feb 2025

Fri 11th Apr 2025

Fri 23rd May 2025

Fri 8th Aug 2025

Fri 26th Sep 2025

Fri 21st Nov 2025

Top Rated Course

Top Rated Course

If you wish to make any changes to your course, please

If you wish to make any changes to your course, please