
In the ever-evolving landscape of Data Management, it is hard to overstate the importance of understanding what Data Ingestion is. Data Ingestion is the essential gateway through which organisations bring the vast and diverse streams of data at their disposal into systems where that data can be processed and analysed.

In this blog, you will learn what Data Ingestion is, along with its different types, tools, and benefits. Let's dive in!

Table of Contents

1) Understanding What is Data Ingestion

2) Different types of Data Ingestion

3) Advantages of Data Ingestion

4) Challenges of Data Ingestion

5) Tools for Data Ingestion

6) Difference between Data Ingestion and ETL

7) Difference between Data Ingestion and Data Integration

8) Data Ingestion framework

9) Data Ingestion Best Practices

10) Conclusion

Understanding What is Data Ingestion

Data Ingestion is a fundamental concept in the realm of Big Data Management and analytics. At its core, Data Ingestion involves the process of collecting, importing, and transferring data from various sources into a storage or computing system. The primary goal of Data Ingestion is to make data accessible and usable for analysis, reporting, and decision-making.

In simpler terms, Data Ingestion is the entry point for data into a broader data ecosystem. It sets the stage for data processing and use, which makes it a pivotal first step in any data workflow. To understand its significance, it helps to look at its various types and methods, each tailored to specific data processing needs.

Whether in real-time scenarios, batch-based processing, or Lambda architectures that combine the two, Data Ingestion is the gateway to harnessing the power of data. The next section explores these types in detail, including their characteristics and applications.

Different Types of Data Ingestion

Data Ingestion comes in various forms, each tailored to different data processing needs. Understanding these types is essential for selecting the right approach for specific use cases. Here, we delve into three primary types of Data Ingestion:

1) Real-time Data Ingestion

Real-time (streaming) Data Ingestion processes data as it arrives, enabling immediate analysis and decision-making. Each record or event is collected and delivered within moments of being generated, which suits applications that need up-to-the-minute insights, such as stock market data, social media feeds, and IoT sensor readings. By capturing data at the moment of creation, businesses can respond swiftly to changing conditions.
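The streaming pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not a production consumer: the generator `sensor_stream` is a hypothetical stand-in for a live source such as a message queue or IoT gateway, and the 30 °C alert threshold is arbitrary. The key point is that each event is handled the moment it is read, rather than being collected first.

```python
from typing import Callable, Dict, Iterable, Iterator, List

def ingest_realtime(events: Iterable[Dict], handle: Callable[[Dict], None]) -> int:
    """Pass each event to `handle` as soon as it is read, with no batching."""
    count = 0
    for event in events:  # with a live source, this loop waits for the next event
        handle(event)
        count += 1
    return count

def sensor_stream() -> Iterator[Dict]:
    # Hypothetical stand-in for a live source such as an IoT gateway or message queue
    for seq, temp in enumerate([21.5, 22.0, 35.2, 22.4]):
        yield {"sensor_id": "s1", "seq": seq, "temp_c": temp}

alerts: List[Dict] = []

def on_event(event: Dict) -> None:
    # React immediately, e.g. flag an overheating sensor (threshold is arbitrary)
    if event["temp_c"] > 30:
        alerts.append(event)

processed = ingest_realtime(sensor_stream(), on_event)
```

Here the overheating reading (sequence number 2) is flagged as soon as it arrives, before the later readings have even been produced.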

2) Batch-based Data Ingestion

In contrast to real-time ingestion, batch-based Data Ingestion collects and transfers data in predefined sets or batches. This approach is particularly useful when real-time analysis isn't necessary or when dealing with historical data. It's commonly used for periodic data updates, like daily sales reports or monthly financial summaries. Batch-based ingestion is a cost-effective and efficient way to handle large volumes of data without the need for real-time processing.
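As a rough sketch of the batch approach, the Python below groups incoming records into fixed-size chunks and loads each chunk in one step. The `load_batch` function and the in-memory `warehouse` list are hypothetical placeholders for a bulk write to a real data store, and the batch size of 3 is chosen only to keep the example small.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List

def batches(records: Iterable[Dict], size: int) -> Iterator[List[Dict]]:
    """Group an input stream into fixed-size batches (the last one may be smaller)."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def load_batch(store: List[Dict], chunk: List[Dict]) -> None:
    # Hypothetical sink: a real pipeline would issue one bulk write per batch
    store.extend(chunk)

daily_sales = [{"order_id": i, "amount": 10.0 * i} for i in range(1, 8)]
warehouse: List[Dict] = []
batch_sizes: List[int] = []
for chunk in batches(daily_sales, size=3):
    load_batch(warehouse, chunk)
    batch_sizes.append(len(chunk))
```

Writing in batches trades latency for efficiency: seven records become three load operations instead of seven.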

3) Lambda architecture-based Data Ingestion

The Lambda architecture combines real-time and batch processing. A batch layer periodically recomputes results over the complete historical dataset, a speed layer processes new data as it arrives to cover the period since the last batch run, and a serving layer merges both views to answer queries. This gives businesses the immediacy of streaming together with the completeness of historical analysis, which is especially valuable for applications with varying data processing needs.
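In the commonly described form of the Lambda architecture, a batch layer recomputes views from all historical data, a speed layer incrementally updates a view for data that arrived after the last batch run, and a serving layer merges the two. The Python sketch below illustrates that idea with page-view counts; the event shape (`{"page": ...}`) and the use of `collections.Counter` as the "view" are assumptions made purely for illustration.

```python
from collections import Counter
from typing import Dict, Iterable

def batch_view(history: Iterable[Dict]) -> Counter:
    """Batch layer: recompute totals from the full historical dataset."""
    totals: Counter = Counter()
    for event in history:
        totals[event["page"]] += 1
    return totals

class SpeedLayer:
    """Speed layer: incrementally count events that arrived after the last batch run."""

    def __init__(self) -> None:
        self.totals: Counter = Counter()

    def on_event(self, event: Dict) -> None:
        self.totals[event["page"]] += 1

def serve(batch: Counter, speed: Counter) -> Counter:
    # Serving layer: merge both views to answer a query
    return batch + speed

history = [{"page": "home"}, {"page": "home"}, {"page": "cart"}]
bv = batch_view(history)

speed = SpeedLayer()
for event in [{"page": "home"}, {"page": "checkout"}]:  # arrives after the batch run
    speed.on_event(event)

merged = serve(bv, speed.totals)
```

When the next batch run absorbs the recent events, the speed layer's view can be reset, so the merged result stays complete without double counting.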

Each type of Data Ingestion has its unique characteristics and benefits, making them suitable for different use cases. Understanding these distinctions empowers organisations to design data workflows that align with their specific data processing goals.

Advantages of Data Ingestion

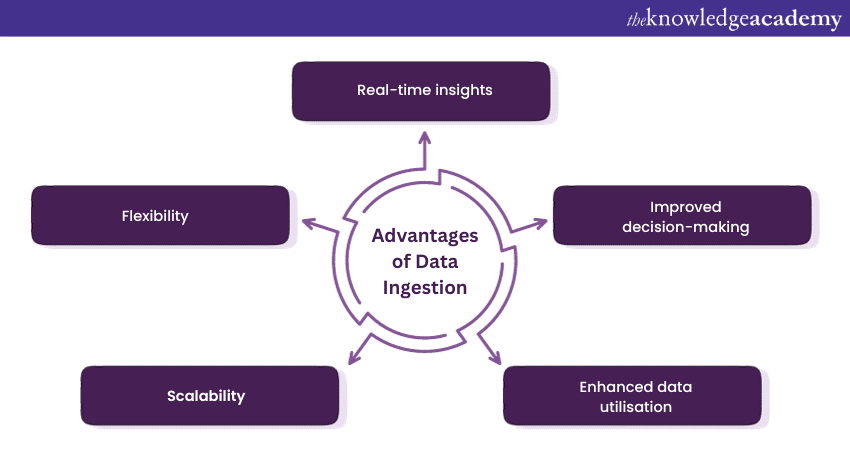

Data Ingestion plays a pivotal role in modern Data Management, offering a range of advantages that are essential for organisations seeking to harness the full potential of their data. Here are some of the key benefits of effective Data Ingestion:

1) Real-time insights: One of the most significant advantages of Data Ingestion is the ability to acquire and process data in real time. This enables organisations to gain immediate insights into their operations, customer behaviour, and other critical metrics. Real-time Data Ingestion is invaluable for decision-making, especially in dynamic industries like finance, e-commerce, and cybersecurity.

2) Improved decision-making: By providing up-to-date and relevant data, Data Ingestion enhances the decision-making process. Decision-makers can rely on accurate, current information to make informed choices, driving efficiency and effectiveness in operations and strategies.

3) Enhanced data utilisation: Effective Data Ingestion ensures that data is available for analysis, reporting, and other data-driven activities. This maximises the utility of data, allowing organisations to derive value from it in a variety of ways, from improving customer experiences to optimising business processes.

4) Scalability: Data Ingestion processes can be designed to scale with growing data volumes, for example by adding parallel workers or partitions. Whether it's a sudden influx of data or gradual growth, a well-designed Data Ingestion system can adapt to handle the load, keeping Data Management efficient.

5) Flexibility: Different types of Data Ingestion (real-time, batch-based, and Lambda architecture-based) offer flexibility in how data is collected and processed. Organisations can choose the approach that best fits their data processing needs, ensuring that they can adapt to evolving requirements.

Effective Data Ingestion is a linchpin in data-driven organisations, facilitating better decision-making, improving operations, and maximising the potential of data assets. It's a critical component of modern data ecosystems that empowers businesses to thrive in a data-rich world.

Challenges of Data Ingestion

While Data Ingestion brings numerous advantages to the table, it also comes with its own set of challenges and complexities. Understanding these challenges is crucial for organisations to navigate the Data Ingestion process effectively. Here are some of the key hurdles associated with Data Ingestion:

1) Data Quality: Ensuring the quality of ingested data is a persistent challenge. Data may contain errors, duplications, or inconsistencies that need to be addressed to prevent inaccurate insights.

2) Data volume: In an era of Big Data, managing large volumes of data can be overwhelming. Ensuring that Data Ingestion processes can handle the scale of incoming data is essential.

3) Data variety: Data comes in various formats and structures, including structured, semi-structured, and unstructured data. Ingesting and integrating these diverse data types can be complex.

4) Data velocity: Real-time Data Ingestion must handle data streams that arrive rapidly and continuously. Meeting the demands of real-time processing can be challenging.

5) Data Security: Protecting data during the ingestion process is paramount. Data breaches or unauthorised access can occur if Data Security measures are not robust.
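The data variety challenge often comes down to mapping differently shaped inputs onto one common record format. The sketch below, using only Python's standard `csv` and `json` modules, normalises a structured CSV export and a semi-structured JSON Lines feed into the same `{"user", "amount"}` shape. All field names (`user_id`, `customer.id`, `total`) are hypothetical examples.

```python
import csv
import io
import json
from typing import Dict, List

def from_csv(text: str) -> List[Dict]:
    # Structured source: a header row followed by delimited values
    return [
        {"user": row["user_id"], "amount": float(row["amount"])}
        for row in csv.DictReader(io.StringIO(text))
    ]

def from_json_lines(text: str) -> List[Dict]:
    # Semi-structured source: one JSON object per line, with nested fields
    records = []
    for line in text.splitlines():
        if line.strip():
            obj = json.loads(line)
            records.append({"user": obj["customer"]["id"], "amount": float(obj["total"])})
    return records

csv_source = "user_id,amount\nu1,12.50\nu2,8\n"
json_source = '{"customer": {"id": "u3"}, "total": "4.25"}\n'

records = from_csv(csv_source) + from_json_lines(json_source)
```

Each new source format then needs only its own small adapter, while everything downstream works with a single schema.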

Understanding and addressing these challenges is crucial for organisations seeking to make the most of their data. Effective Data Ingestion strategies, combined with robust data governance and security measures, are key to overcoming these obstacles and harnessing the power of data for informed decision-making.

Tools for Data Ingestion

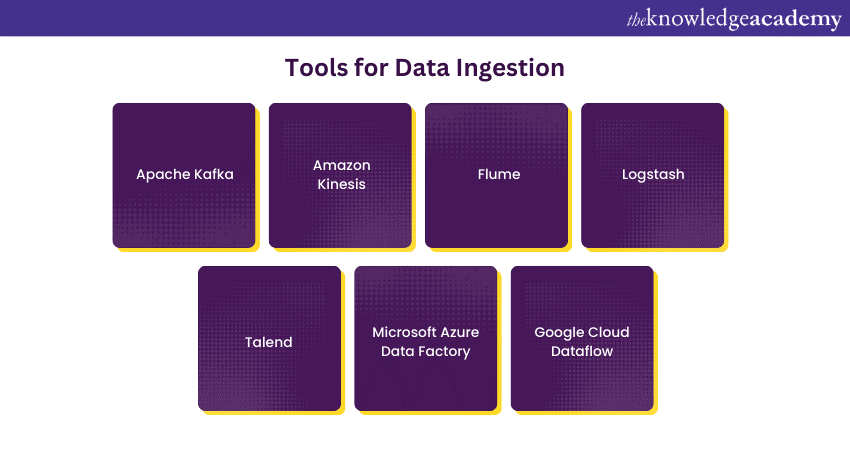

Effective Data Ingestion often requires the use of specialised tools and platforms to streamline the process and ensure data is collected, transformed, and made available for analysis. These tools come in various forms, each designed to meet specific Data Ingestion requirements. Here are some popular tools for Data Ingestion:

1) Apache Kafka: A distributed streaming platform that excels in ingesting, processing, and distributing high-throughput, real-time data streams. It's widely used for Data Ingestion in event-driven architectures.

2) Amazon Kinesis: A cloud-based platform that simplifies real-time data streaming and ingestion on Amazon Web Services (AWS). It's ideal for applications that require real-time analytics.

3) Apache Flume: An open-source Apache project designed for efficiently collecting, aggregating, and moving large volumes of log data or event data from various sources to a centralised repository.

4) Logstash: Part of the Elastic Stack, Logstash is a versatile tool for ingesting and transforming data. It's commonly used in combination with Elasticsearch and Kibana for log analysis.

5) Talend: An open-source Data Integration tool that offers a wide range of Data Ingestion and transformation features. It's known for its user-friendly interface and extensive connectivity options.

6) Microsoft Azure Data Factory: A cloud-based Data Integration service on Microsoft Azure that allows users to create data-driven workflows for Data Ingestion, transformation, and analytics.

7) Google Cloud Dataflow: Part of the Google Cloud Platform, Dataflow is a fully managed stream and batch data processing service that facilitates Data Ingestion and processing at scale.

The choice of Data Ingestion tool depends on the specific needs and the existing data infrastructure of an organisation. Many tools offer features like data transformation, error handling, and support for various data formats to make the Data Ingestion process as efficient and flexible as possible.

When selecting a tool, it's essential to consider factors like scalability, ease of use, and compatibility with the organisation's existing technology stack.

Difference Between Data Ingestion and ETL

Data Ingestion and ETL (Extract, Transform, Load) are both essential processes in the world of Data Management and analytics. While they share some common objectives, they serve distinct purposes and exhibit notable differences.

Data Ingestion primarily focuses on collecting and transporting raw data from various sources to a centralised storage or processing system. It ensures that data is available for analysis.

ETL is a more comprehensive process that involves extracting data from source systems, transforming it to meet specific business requirements, and loading it into a target data warehouse or repository.
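To make the contrast concrete, here is a minimal Python sketch with hypothetical record fields. Ingestion copies source rows into a raw landing area unchanged, while ETL extracts the same rows, transforms them (type conversion and cleanup) and only then loads them into a target table. Plain in-memory lists stand in for the raw zone and the warehouse.

```python
from typing import Dict, List

source_rows = [
    {"id": "1", "email": " Alice@Example.com ", "signup": "2024-03-01"},
    {"id": "2", "email": "bob@example.com", "signup": "2024-03-02"},
]

def ingest(rows: List[Dict], raw_zone: List[Dict]) -> None:
    # Ingestion: copy records as they are; any reshaping happens later, if at all
    raw_zone.extend(dict(r) for r in rows)

def etl(rows: List[Dict], warehouse: List[Dict]) -> None:
    # Extract: read the records from the source
    extracted = [dict(r) for r in rows]
    # Transform: enforce types and business rules before loading
    transformed = [
        {"id": int(r["id"]), "email": r["email"].strip().lower(), "signup_date": r["signup"]}
        for r in extracted
    ]
    # Load: write the cleaned records into the target table
    warehouse.extend(transformed)

raw_zone: List[Dict] = []
warehouse: List[Dict] = []
ingest(source_rows, raw_zone)
etl(source_rows, warehouse)
```

After both runs, the raw zone still holds the untrimmed, mixed-case email and string IDs, while the warehouse holds typed, normalised values.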

Difference Between Data Ingestion and Data Integration

Data Ingestion and Data Integration are both critical processes in managing and utilising data effectively. While they are related, they serve different purposes and involve distinct actions.

Data Ingestion focuses on the initial step of collecting and importing raw data from various sources into a central repository or processing system. Its primary goal is to make data available for analysis.

Data Integration is a more comprehensive process that involves combining, enriching, and transforming data from various sources to create a unified, holistic view of data. Its primary goal is to provide a consolidated and meaningful dataset.
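The sketch below illustrates what "combining data from various sources into a unified view" can mean in practice. Two hypothetical sources, a CRM customer list and a billing system, use different key names (`customer_id` versus `cust`); the integration step reconciles the keys, aggregates billing per customer, and produces one consolidated record per customer. The sources and field names are assumptions for illustration only.

```python
from typing import Dict, List

crm = [{"customer_id": 1, "name": "Acme"}, {"customer_id": 2, "name": "Globex"}]
billing = [
    {"cust": 1, "invoice_total": 120.0},
    {"cust": 1, "invoice_total": 80.0},
    {"cust": 2, "invoice_total": 50.0},
]

def integrate(crm_rows: List[Dict], billing_rows: List[Dict]) -> List[Dict]:
    # Reconcile differing keys (cust -> customer_id) and aggregate revenue per customer
    revenue: Dict[int, float] = {}
    for b in billing_rows:
        revenue[b["cust"]] = revenue.get(b["cust"], 0.0) + b["invoice_total"]
    # Produce one unified record per customer
    return [
        {
            "customer_id": c["customer_id"],
            "name": c["name"],
            "lifetime_revenue": revenue.get(c["customer_id"], 0.0),
        }
        for c in crm_rows
    ]

unified = integrate(crm, billing)
```

Ingestion alone would have landed both sources side by side; integration is what turns them into a single dataset that can answer "how much has each customer spent?"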


Data Ingestion Framework

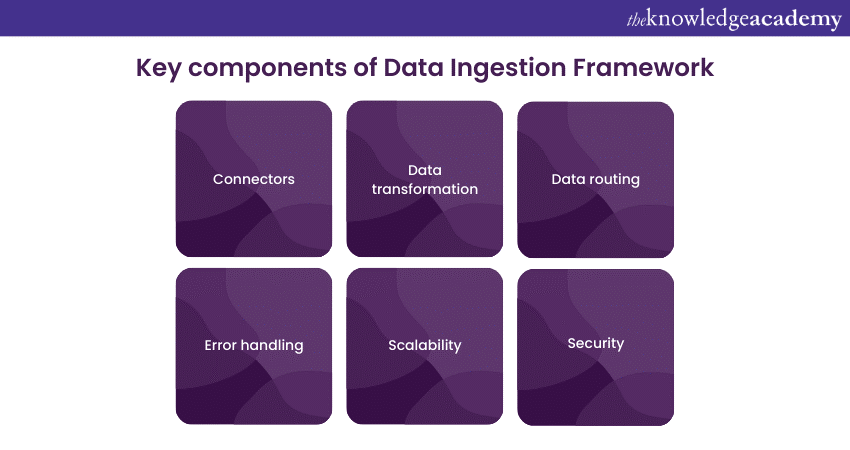

A Data Ingestion framework is a structured approach or set of tools that simplifies and enhances the process of collecting, transporting, and making data available for analysis. It serves as the backbone for efficient Data Ingestion, ensuring that data is seamlessly and securely moved from source systems to data storage or processing platforms. Let’s explore the key components of a Data Ingestion Framework:

1) Connectors: These enable the framework to interact with various data sources, including databases, cloud services, applications, and more.

2) Data transformation: The framework may include features for basic data transformation, such as format conversion, to ensure data compatibility.

3) Data routing: It provides rules and mechanisms for directing data to the appropriate destinations or repositories based on predefined criteria.

4) Error handling: Robust frameworks include error detection and recovery mechanisms to manage issues that may arise during Data Ingestion.

5) Scalability: The framework should be capable of handling data at scale to accommodate growing data volumes.

6) Security: Security measures like encryption and access controls are integral to protect data during ingestion.
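Several of the components listed above (connectors, basic transformation, routing, and error handling) can be shown together in a toy pipeline. The Python sketch below is a hypothetical skeleton, not the design of any particular framework: `api_connector` stands in for a real source, routing is a single rule on a `type` field, and unparseable input goes to a dead-letter list instead of stopping the run. Scalability and security are out of scope for a sketch this small.

```python
import json
from typing import Callable, Dict, Iterable, List

Connector = Callable[[], Iterable[str]]  # yields raw records from one source

def api_connector() -> Iterable[str]:
    # Hypothetical source; a real connector would page through an API or database
    yield '{"type": "order", "id": 1}'
    yield '{"type": "click", "id": 2}'
    yield "not valid json"

def transform(raw: str) -> Dict:
    # Basic format conversion: JSON text -> Python dict
    return json.loads(raw)

def route(record: Dict) -> str:
    # Rule-based routing: choose a destination from a record field
    return "orders" if record.get("type") == "order" else "events"

def run(connectors: List[Connector]) -> Dict[str, List]:
    sinks: Dict[str, List] = {"orders": [], "events": [], "dead_letter": []}
    for connector in connectors:
        for raw in connector():
            try:
                record = transform(raw)
            except json.JSONDecodeError as exc:
                # Error handling: park bad input and keep the pipeline running
                sinks["dead_letter"].append({"raw": raw, "error": str(exc)})
                continue
            sinks[route(record)].append(record)
    return sinks

result = run([api_connector])
```

Adding a new source means writing one more connector function; the transformation, routing, and error-handling logic stays shared.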

Popular Data Ingestion frameworks include Apache NiFi, Apache Kafka, and Amazon Kinesis. The choice of framework depends on an organisation's specific Data Ingestion needs, scalability requirements, and existing technology stack. These frameworks streamline Data Ingestion processes, ensuring that data is efficiently collected and ready for analysis.


Data Ingestion Best Practices

Efficient Data Ingestion is crucial for accurate and timely Data Analysis. To ensure a smooth and reliable Data Ingestion process, consider the following best practices:

1) Data source profiling: Before initiating Data Ingestion, thoroughly profile and understand your data sources. This includes examining the data structure, formats, quality, and potential data anomalies. A deep understanding of your data sources ensures a more efficient and successful Data Ingestion process.

2) Data validation and cleaning: Implement data validation and cleaning routines to maintain Data Quality during ingestion. This includes checks for missing values, data type inconsistencies, and duplicate entries. By ensuring data integrity from the start, you minimise the risk of introducing errors into your data pipeline.

3) Automated error handling: Develop automated error detection and handling mechanisms to promptly address any issues that may arise during Data Ingestion. Implement monitoring systems, logging, and alerts to identify and respond to errors, ensuring the continuity of data flow.

4) Data protection: Prioritise Data Security throughout the ingestion process. Use encryption to protect sensitive data in transit and implement secure access controls. A strong focus on Data Security helps prevent data breaches and unauthorised access.

5) Metadata management: Maintain detailed metadata records for ingested data, including information about data sources, data lineage, and the transformations applied during ingestion. Comprehensive metadata management facilitates data governance, lineage tracking, and auditing.
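Several of these practices (validation, cleaning, automated handling of bad records, and metadata capture) can be combined in one ingestion step. The Python sketch below assumes a hypothetical two-field schema (`id` as an integer, `email` as a string): it rejects records that fail validation along with the reasons, drops duplicates by `id`, and emits a metadata record with source, timestamp, counts, and a checksum of the loaded data for auditing.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List, Tuple

# Hypothetical schema for this sketch: field name -> expected Python type
REQUIRED: Dict[str, type] = {"id": int, "email": str}

def validate(record: Dict) -> List[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    for field, ftype in REQUIRED.items():
        if record.get(field) is None:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], ftype):
            problems.append(f"{field} should be {ftype.__name__}")
    return problems

def ingest_batch(records: List[Dict], source: str) -> Tuple[List[Dict], List[Dict], Dict]:
    clean: List[Dict] = []
    rejected: List[Dict] = []
    seen = set()
    duplicates = 0
    for r in records:
        errors = validate(r)
        if errors:
            # Quarantine invalid input with its reasons instead of failing the run
            rejected.append({"record": r, "errors": errors})
            continue
        if r["id"] in seen:
            # Drop duplicate entries, keyed on id
            duplicates += 1
            continue
        seen.add(r["id"])
        clean.append(r)
    # Metadata for lineage and auditing: where the data came from, when, and what happened
    metadata = {
        "source": source,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "received": len(records),
        "loaded": len(clean),
        "rejected": len(rejected),
        "duplicates": duplicates,
        "checksum": hashlib.sha256(json.dumps(clean, sort_keys=True).encode()).hexdigest(),
    }
    return clean, rejected, metadata

batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 1, "email": "a@example.com"},
    {"id": "2", "email": "b@example.com"},
    {"id": 3},
]
clean, rejected, meta = ingest_batch(batch, source="crm_export")
```

In this run, one record is loaded, one duplicate is dropped, and two records are rejected (a string `id` and a missing `email`). The rejected list and metadata could feed the monitoring and alerting mentioned under automated error handling.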

Following these best practices ensures a smooth and reliable Data Ingestion process, laying the foundation for accurate and meaningful Data Analysis.

Conclusion

We hope this blog has helped you understand what Data Ingestion is. It is the foundation of effective Data Management. By understanding its types, advantages, and challenges, selecting appropriate tools, and following best practices, organisations can ensure the seamless flow of data for informed decision-making and innovation. A well-executed Data Ingestion strategy is essential in today's data-driven landscape.
