In software development and deployment, new technologies continually emerge to streamline the process and improve efficiency. One such innovation is Docker. But do you know what Docker is and why it has become important?

If you are starting out as a Developer, Docker is worth knowing in detail. It is one of the most popular open-source platforms for simplifying the process of developing, shipping, and running applications. Read this blog to understand what Docker is, why it matters, and what advantages it offers.

Table of Contents

1) Understanding What is Docker

2) How is Docker used?

3) Key concepts of Docker

4) What is Docker architecture?

5) How does Docker work?

6) What do you understand by Docker Containers?

7) What does Docker Container contain?

8) Installation of Docker

9) Advantages of Docker

10) Future of Docker

11) Conclusion

Understanding What is Docker

Docker is an open-source Containerisation technology. It allows Developers to build, package, and distribute applications along with all their dependencies in a highly portable and self-sufficient unit, called a "Container."

A Docker Container is similar to a Virtual Machine (VM). However, unlike traditional VMs, each instance doesn't require a separate Operating System. Instead, Containers share the host system's kernel, making them lightweight, efficient, and very fast to start and stop.

Each Docker Container is an isolated unit that encapsulates an application and all the libraries, dependencies, and configurations it needs to run. This encapsulation ensures that the application's environment remains consistent regardless of where it runs.

Moreover, Developers can create, test, and run applications within Containers on their development machines. They can also confidently move them to any other environment, such as testing servers, staging environments, or production servers, knowing they will behave the same.

How is Docker used?

Docker has several uses, which makes it a reliable platform for most Developers. Here are some points which will help you understand how you can use Docker:

a) Docker helps in streamlining the development lifecycle. It allows you to standardise environments using local Containers, which makes Containers well suited to Continuous Integration and Continuous Delivery (CI/CD) workflows.

b) Docker provides a consistent delivery of your applications. You can write code locally and then share that same work with your team members or colleagues using Docker Containers.

c) Many professional Developers use it to push their applications in a test environment and run manual and automated tests.

d) Upon finding bugs, Developers can quickly fix them in the development environment and redeploy the application to the test environment.

e) This platform helps to deploy highly portable workloads. These Containers can run on a Developer’s personal laptop or Virtual Machine in a data centre.

f) It is lightweight, making it easy for Developers to manage workloads and scale up or down applications in real-time according to the demand.

g) Because it is lightweight, Docker can run fast. It provides a cost-effective alternative to VMs. It helps Developers use more of the server capacity to achieve their targets.

h) It is perfect for use in high-density environments and small and medium deployments.

Key concepts of Docker

Docker Concepts are fundamental to understanding how Docker simplifies the deployment of applications within Containers, making them portable and easy to distribute across different environments.

Docker Container

A Docker Container is a lightweight, standalone, executable package that includes everything needed to run the software, including the code, runtime, system tools, libraries, and settings. Containers are isolated from each other and the host system but can communicate with each other through well-defined channels.

Docker Images

Docker Images are the blueprints of Containers. They are read-only templates from which Docker Containers are instantiated. An image can contain an Operating System's user-space files together with your applications and all necessary binaries and libraries; the kernel itself comes from the host.

Dockerfile

A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using "docker build", users can create an automated build that executes several command-line instructions in succession.
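To make this concrete, here is a minimal sketch of a Dockerfile and its build step, written as a shell script. The image name "hello-demo:1.0", the script "hello.sh", and the "alpine:3.20" base image are assumptions chosen purely for illustration. The build only runs if a working Docker installation is found.

```shell
#!/bin/sh
# Helper: true only if the docker CLI exists and the daemon answers.
docker_ready() { command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; }

# Create a throwaway build context containing a hypothetical one-line app.
workdir=$(mktemp -d)
cat > "$workdir/hello.sh" <<'EOF'
#!/bin/sh
echo "Hello from a container"
EOF

# Write the Dockerfile that describes how to assemble the image.
cat > "$workdir/Dockerfile" <<'EOF'
# Start from a small base image pulled from Docker Hub
FROM alpine:3.20
# Copy the script from the build context into the image
COPY hello.sh /usr/local/bin/hello.sh
# Make the script executable at build time
RUN chmod +x /usr/local/bin/hello.sh
# Default command when a container starts from this image
CMD ["/usr/local/bin/hello.sh"]
EOF

if docker_ready; then
    # Build the image from the context directory, then run it once.
    docker build -t hello-demo:1.0 "$workdir" && docker run --rm hello-demo:1.0
else
    echo "Docker is not available; Dockerfile written to $workdir/Dockerfile"
fi
```

Each instruction in the Dockerfile (FROM, COPY, RUN, CMD) is executed in order by "docker build", which is what makes the build repeatable.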

Docker Hub

Docker Hub is a service provided by Docker that helps find and share Container images with your team. It is the world’s most extensive library and community for Container images. Docker Hub provides a centralised resource for Container image discovery, distribution, change management, user and team collaboration, and workflow automation throughout the development pipeline.

What is Docker architecture?

Docker uses a client-server model. It includes the Docker Client, Docker Daemon (Server), Docker Registries, and Docker Objects such as Images, Containers, Networks, and Volumes:

a) The Docker Daemon

The Docker Daemon ('dockerd') listens for Docker API requests and manages Docker Objects such as Images, Containers, Networks, and Volumes. It does the work of building, running, and distributing Docker Containers. A daemon can also communicate with other daemons to manage Docker services.

b) The Docker Client

The Docker Client ('docker') is the primary way most users interact with Docker. When you run a command such as "docker run", the client sends it to 'dockerd', which carries it out. The 'docker' command uses the Docker API, and a single client can communicate with more than one daemon.
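The client/daemon split can be observed from the command line. The following is a small sketch, not an official diagnostic: it asks the daemon for its version through the client and reports which of the two halves is present. The variable name "daemon_state" is just an illustrative choice.

```shell
#!/bin/sh
if ! command -v docker >/dev/null 2>&1; then
    daemon_state="no docker CLI installed"
elif server_version=$(docker version --format '{{.Server.Version}}' 2>/dev/null); then
    # The CLI reached dockerd over the Docker API and got an answer.
    daemon_state="daemon reachable, version $server_version"
else
    # The CLI is installed, but no daemon responded.
    daemon_state="docker CLI present, daemon not reachable"
fi
echo "$daemon_state"
```

Setting the DOCKER_HOST environment variable points the same client at a different daemon, which is how one client can talk to several.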

c) Docker Desktop

Docker Desktop is an easy-to-install application for Mac, Windows, or Linux. It helps in building and sharing Containerised applications and microservices. It also includes the Docker Daemon, the Docker Client, Docker Compose, Docker Content Trust, Kubernetes and Credential Helper.

d) Docker Registries

Docker Registries store Docker images. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. However, you can also run your own private registry.

If you want to pull any image from the Docker Hub, you can use the command "docker pull" or "docker run." These commands will pull the required images from the registry. Similarly, if you are interested in storing your image in the registry, you can use the "docker push" command to push your image to the registry.
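As a rough sketch of that workflow, the script below pulls a public image and re-tags it for another registry. The registry host "registry.example.com" and the repository path are hypothetical placeholders, so the push is left commented out; it would also need a prior "docker login" against that registry.

```shell
#!/bin/sh
# Helper: true only if the docker CLI exists and the daemon answers.
docker_ready() { command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; }

image="nginx:alpine"                             # public image on Docker Hub
target="registry.example.com/demo/nginx:alpine"  # hypothetical private registry path

if docker_ready; then
    # Download the image from Docker Hub, the default registry.
    docker pull "$image"
    # Give the same image a second name that points at the other registry.
    docker tag "$image" "$target"
    # Upload it there (requires: docker login registry.example.com).
    # docker push "$target"
else
    echo "Docker is not available here; nothing pulled."
fi
```

The registry an image is pushed to is determined by the prefix of its name, which is why the "docker tag" step comes before "docker push".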

e) Docker Objects

Docker Objects are the things you create and use when working with Docker: Images, Containers, Networks, Volumes, plugins, and others. Let us have a look at some of these objects:

1) Images: An image in Docker is a read-only template with instructions for creating a Docker Container. It can also be defined as a lightweight, stand-alone, executable package with everything required to run a piece of software. This includes code, a runtime, libraries, environment variables and configuration files. Often, an image is based on another image, with additional customisation.

2) Containers: Docker Containers are defined as the runnable instance of an image. You can connect these containers to one or more networks. Using the Docker API or CLI, you can create, start, stop, move, or delete a Container. You can also attach storage and create a new image based on the present state.

By default, a Container is relatively well isolated from other Containers and from its host machine. You can also control how isolated a Container's network, storage, or other underlying subsystems are from other Containers or from the host machine.
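The Container lifecycle described above can be walked through with a handful of CLI commands. This is an illustrative sketch only: the Container name "lifecycle-demo" and the image tag "lifecycle-demo:snapshot" are made-up names, and the commands run only when Docker is available.

```shell
#!/bin/sh
# Helper: true only if the docker CLI exists and the daemon answers.
docker_ready() { command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; }

name="lifecycle-demo"   # hypothetical container name

if docker_ready; then
    # Remove any leftover container from a previous run of this sketch.
    docker rm -f "$name" >/dev/null 2>&1 || true

    docker create --name "$name" alpine sleep 300   # create, but do not start
    docker start "$name"                            # start the container
    docker ps --filter "name=$name"                 # confirm it is running
    docker commit "$name" "$name:snapshot"          # new image from current state
    docker stop -t 1 "$name"                        # stop (1 second grace period)
    docker rm "$name"                               # delete the container
    docker rmi "$name:snapshot"                     # delete the snapshot image

    # Isolation can be tightened per container, e.g. no network at all:
    docker run --rm --network none alpine ip addr
else
    echo "Docker is not available here; lifecycle steps skipped."
fi
```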

How does Docker work?

Docker helps to provision, package, and run Containers. Container technology built into the Operating System lets a Container package an application service together with its libraries, configuration files, dependencies, and any other parameters it needs to operate. Every Container on a host shares the services of that host's single underlying Operating System.

Docker images also contain all the dependencies required to execute code inside a Container. As a result, Containers can move between Docker environments running the same OS with hardly any changes. Docker uses resource isolation in the OS kernel, which allows several Containers to run on the same OS.

What do you understand by Docker Containers?

Docker Containers are one of the most critical aspects of the Docker platform. A Container includes everything that is required for an application to run. Because Docker uses resource isolation in the OS kernel, several Containers can run on the same OS while remaining isolated from each other and from the host system.

Three pieces are involved in producing a running Docker Container:

a) The Dockerfile - which is used to build the image

b) The image - which is a read-only template with instructions on how a Docker Container can be created

c) The Container - which is a runnable instance that is created from an image.

Remember that a Container shares the kernel with other Containers and with its host machine. This is what makes it lightweight.
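One way to see the shared kernel for yourself is to compare the kernel release reported by the host with the one reported inside a Container. This is a small sketch that assumes a Linux host and uses the public "alpine" image as an arbitrary example; on Docker Desktop for Mac or Windows, the Container reports the kernel of the Linux VM that Docker Desktop runs, not the kernel of the desktop OS.

```shell
#!/bin/sh
# Helper: true only if the docker CLI exists and the daemon answers.
docker_ready() { command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; }

# Kernel release as seen by the host.
host_kernel=$(uname -r)
echo "host kernel:      $host_kernel"

if docker_ready; then
    # Kernel release as seen from inside a short-lived Alpine container.
    container_kernel=$(docker run --rm alpine uname -r)
    echo "container kernel: $container_kernel"
else
    echo "Docker is not available here; skipping the container side."
fi
```

On a native Linux host the two values should match, because the Container has no kernel of its own.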

What does Docker Container contain?

Docker Containers contain everything that is necessary for running an application. These components are:

a) The code

b) A runtime

c) Libraries

d) Environment variables

e) Configuration files

Installation of Docker

Installing Docker can vary slightly depending on the Operating System you are using. Docker provides installation packages for Windows, macOS, and various Linux distributions, making it accessible to many Developers and System Administrators. Here's a step-by-step guide on how to install Docker on different platforms:

Windows

Here, we will learn how to install Docker on Windows by downloading Docker Desktop and following the installation guide.

a) Check System Requirements: Docker Desktop for Windows requires Windows 10 64-bit: Pro, Enterprise, or Education (Build 15063 or later) or Windows 11 64-bit.

b) Download Docker Desktop: Visit the Docker website and download the Docker Desktop installer.

c) Install Docker Desktop: Run the installer and follow the on-screen instructions. You must decide whether to use Windows Subsystem for Linux 2 (WSL 2) or Hyper-V as the backend. WSL 2 is recommended for better performance.

d) Start Docker Desktop: Run Docker Desktop from the Start menu once installed. You may need to log out and back in, or reboot your computer, to complete the installation.

macOS

Here, we will learn How to install Docker on macOS by obtaining Docker Desktop, moving it to the Applications folder, and completing the setup process.

a) Check the macOS version: Docker Desktop for macOS is supported on the most recent and previous releases.

b) Download Docker Desktop: Go to the Docker website and download Docker Desktop for macOS.

c) Install Docker Desktop: Open the downloaded ".dmg" file and drag the Docker icon to your Applications folder.

d) Start Docker Desktop: Open Docker from the Applications folder. You must accept the terms and authorise the installation using your system password.

Linux (General)

Here, we will learn How to install Docker on Linux distributions using the package manager to install and manage its service.

a) Update package index: Open a terminal and update your package manager with "sudo apt-get update" or the equivalent command for your distribution.

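b) Install Docker: Install Docker using the package manager with a command like "sudo apt-get install docker-ce". The "docker-ce" package comes from Docker's own repository, so that repository must be configured first.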

c) Manage Docker service: Start the service with "sudo systemctl start docker" and enable it to run on boot with "sudo systemctl enable docker". Note that the service name is lowercase.

d) Post-installation steps: Add your user to the docker group to run Docker commands without "sudo" by executing "sudo usermod -aG docker your-username", then log out and back in for the change to take effect.

Ubuntu

Here, we will understand How to install Docker on Ubuntu by preparing the repository, installing Docker Engine, and configuring the service.

a) Uninstall old versions: If you have older versions of Docker, remove them with "sudo apt-get remove docker docker-engine docker.io containerd runc".

b) Set up the repository: Update the "apt" package index, install packages to allow "apt" to use a repository over HTTPS, and add Docker’s official GPG key.

c) Install Docker Engine: Install the latest version of Docker Engine and containerd with "sudo apt-get install docker-ce docker-ce-cli containerd.io".

d) Start Docker: Start Docker with "sudo systemctl start docker" and enable it to run on boot with "sudo systemctl enable docker".
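The Ubuntu steps above can be gathered into a single shell function. The sketch below follows the approach in Docker's Ubuntu install guide as we understand it at the time of writing; the function name "setup_docker_on_ubuntu" and the keyring path are choices made for this example, and the function is only defined here, not run. Check the official documentation before calling it on a real machine.

```shell
#!/bin/sh
# Defines, but does not call, a function that installs Docker Engine on Ubuntu.
setup_docker_on_ubuntu() {
    # a) Remove older, conflicting packages (ignore "not installed" errors).
    sudo apt-get remove -y docker docker-engine docker.io containerd runc || true

    # b) Let apt use an HTTPS repository and add Docker's official GPG key.
    sudo apt-get update
    sudo apt-get install -y ca-certificates curl
    sudo install -m 0755 -d /etc/apt/keyrings
    sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg \
        -o /etc/apt/keyrings/docker.asc
    sudo chmod a+r /etc/apt/keyrings/docker.asc

    # Register Docker's apt repository for this Ubuntu release.
    echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" \
        | sudo tee /etc/apt/sources.list.d/docker.list >/dev/null
    sudo apt-get update

    # c) Install Docker Engine, the CLI, and containerd.
    sudo apt-get install -y docker-ce docker-ce-cli containerd.io

    # d) Start the service now and on every boot (service name is lowercase).
    sudo systemctl start docker
    sudo systemctl enable docker
}

echo "Run 'setup_docker_on_ubuntu' to perform the installation."
```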

Each step for Linux and Ubuntu is a command that you run in a terminal. Follow the official Docker documentation for the most accurate and up-to-date instructions; the steps here are a general guide and may vary slightly depending on your OS version and configuration. Always ensure that your system meets the requirements before proceeding with the installation.
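Whichever platform you installed on, a quick check confirms that both the client and the daemon work. The following sketch uses Docker's public "hello-world" test image; the variable name "install_state" is just an illustrative choice.

```shell
#!/bin/sh
if ! command -v docker >/dev/null 2>&1; then
    install_state="not installed"
elif ! docker info >/dev/null 2>&1; then
    install_state="installed, but the daemon is not reachable"
else
    # Print the client version, then run the small test image end to end.
    docker --version
    if docker run --rm hello-world; then
        install_state="working"
    else
        install_state="daemon is up, but the test container failed"
    fi
fi
echo "Docker install state: $install_state"
```

If the daemon is not reachable on Linux, starting the service with "sudo systemctl start docker" is usually the first thing to try.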

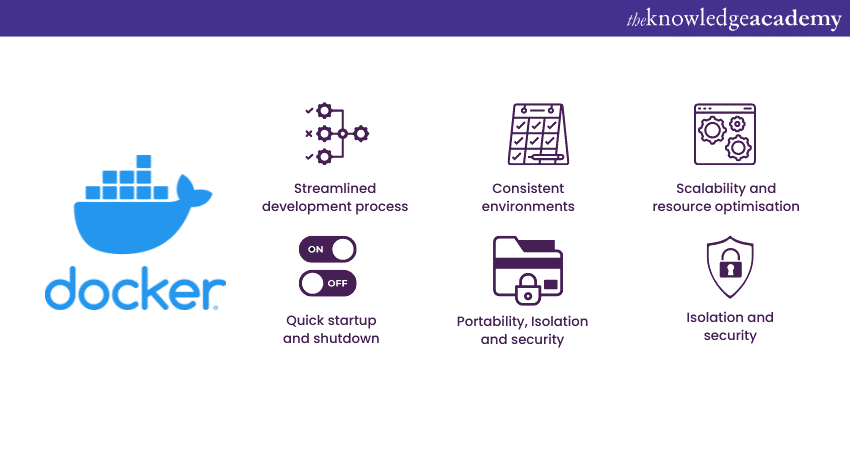

Advantages of Docker

Docker has rapidly gained popularity due to its numerous advantages and features. Some of the key advantages of Docker have been enumerated below:

1) Streamlined development process

With Docker, Developers can create Containers locally on their machines, ensuring that the application works flawlessly before moving it to other environments. This consistency between development and production environments reduces the likelihood of encountering issues during deployment. This helps save time and effort in the development process.

2) Consistent environments

A Docker Container contains everything needed to run the application, including its code, libraries, and configurations. This consistency ensures that the application behaves the same way across different environments, eliminating compatibility issues and facilitating smooth deployments.

3) Scalability and resource optimisation

With Docker, applications can be easily replicated by creating multiple Containers. This allows efficient resource utilisation. Moreover, Docker's scalability ensures that the application can handle increased loads without compromising performance.
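As a simple illustration of replication, the sketch below starts several identical Containers from one image and then removes them again. The replica count, the "web-N" naming scheme, and the "nginx:alpine" image are assumptions for this example; real deployments would normally leave this to an orchestrator.

```shell
#!/bin/sh
# Helper: true only if the docker CLI exists and the daemon answers.
docker_ready() { command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; }

replicas=3             # how many identical containers to start
image="nginx:alpine"   # public image used as a stand-in application

if docker_ready; then
    # Start the replicas in the background, all from the same image.
    i=1
    while [ "$i" -le "$replicas" ]; do
        docker run -d --name "web-$i" "$image"
        i=$((i + 1))
    done

    # List the running replicas.
    docker ps --filter "name=web-"

    # Scale back down by removing them again.
    i=1
    while [ "$i" -le "$replicas" ]; do
        docker rm -f "web-$i"
        i=$((i + 1))
    done
else
    echo "Docker is not available here; no replicas started."
fi
```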

4) Quick startup and shutdown

Docker Containers start and stop rapidly compared to traditional Virtual Machines, which is one of the clearest differences between Docker and VMs in terms of speed and efficiency. Because Containers share the host system's kernel, they carry minimal overhead and do not need to boot a separate Operating System. This quick startup and shutdown enhances the overall agility of development and deployment.

5) Portability

Docker Containers are highly portable, enabling seamless movement between different environments. Developers can create Containers locally and confidently deploy them on servers, cloud platforms, or other Docker-enabled systems, knowing they will run consistently without modification.

6) Isolation and security

Docker Containers provide a high degree of isolation from the host system and from other Containers. Each Container runs in an isolated environment, which prevents conflicts and helps keep applications secure. Additionally, Docker has built-in security features and follows best practices for secure Containerisation.

7) Easy management and versioning

Docker simplifies the management of applications and their dependencies. Encapsulating applications within Containers allows you to easily manage different versions of the same application without conflicts. This versioning capability enables efficient rollbacks and updates.

8) Community support

Docker Hub serves as a vast repository of Docker images shared by the community. It offers an extensive collection of pre-built images for popular applications and services. You can leverage Docker Hub to streamline your development process and access a wealth of resources created by other community members.

9) Integration

Docker integrates with Continuous Integration and Continuous Deployment (CI/CD) pipelines. Automation tools can build, test, and deploy Docker Containers, enabling faster and more reliable software releases.

10) Microservices

Docker is vital in the adoption of microservices architecture. Containers enable the decoupling of services, making it easier to develop, deploy, and manage individual components. Container Orchestration tools like Kubernetes and Docker Swarm allow you to scale and manage large, complex applications efficiently.

Future of Docker

The future of Docker looks promising and dynamic, with continuous advancements and updates to meet the evolving needs of developers and organisations. Here’s a summary of what’s on the horizon for Docker:

a) Performance improvements: Docker is committed to enhancing the performance of its tools, with significant improvements already made in startup times, upload and download speeds, and build times.

b) Docker Desktop updates: Regular updates to Docker Desktop have introduced new features and enhancements, ensuring better performance and a more seamless development experience.

c) Software Supply Chain Management: Docker Scout has been introduced to simplify Software Supply Chain Management, allowing teams to identify and address software quality issues early in development.

d) Docker extensions: The Docker extension marketplace has expanded, offering a variety of new extensions that enhance Docker’s functionality and integration with other tools.

e) Cloud-based builds: The Docker Build Early Access Program aims to speed up builds by utilising large, cloud-based servers and team-wide build caching, potentially speeding up builds by up to 39 times.

f) Community and roadmap: Docker continues to engage with its community of developers, Docker Captains, and Community Leaders to steer the platform's future. The public roadmap outlines the planned milestones and updates.

Conclusion

We hope that after reading this blog, you have understood what Docker is and how the Dockerfile, images, and Containers fit together in containerised application management. Docker has emerged as a pivotal tool in modern Software Development, helping you create, deploy, and manage applications within isolated environments known as Containers.

Frequently Asked Questions

What is Virtualisation?

Virtualisation is creating a virtual version of something, such as hardware, Operating Systems, storage devices, or network resources. It allows multiple Virtual Machines (VMs) to run on a single physical machine, improving resource efficiency and enabling Cloud Computing.

What can run in Docker?

Docker can run various applications, including web servers, databases, and microservices. Virtually any software that runs on a physical machine or a Virtual Machine can run within a Docker Container. This includes both Linux and Windows-based applications, making Docker highly versatile for various deployment scenarios.
