Ever wondered how your computer plays music, browses the internet, and downloads files simultaneously? The magic lies in threading, a powerful concept that can transform how your programs operate. But what exactly is threading, and how does it work in Python? Dive into this blog to unlock the secrets of Python Threading and discover how it can optimise your programs for efficiency and performance.

In this blog, we will delve into the basics and advanced concepts of Python Threading. You'll learn to create and manage threads, understand thread synchronisation, and explore the practical benefits of multithreading. Get ready to dive deep into the world of concurrent programming and elevate your coding skills to new heights!

Table of Contents

1) What is Python Threading – an overview

2) Starting a Thread in Python

3) Multiple Threading in Python

4) Thread synchronisation in Python

5) Concept of Producer-Consumer in Python Threading

6) Exploring the different objects in Threading

7) Advantages of Threading

8) Conclusion

What is Python Threading – an overview

A thread is a separate flow of execution within a program, which lets the program work on several operations at once. In most Python 3 environments (those based on standard CPython), threads appear to run at the same time but actually take turns executing because of the Global Interpreter Lock (GIL). This creates the illusion of parallelism when, in reality, only one thread executes Python code at a time.

Threading is beneficial for I/O-bound tasks that wait for external events rather than CPU-bound tasks, which may not see performance gains. For CPU-intensive tasks, the multiprocessing module is recommended. While threading may not always speed up execution, it can lead to cleaner, more manageable code architecture.

Concept of a Thread in Python

Threads are the smallest units of execution in a program, allowing multiple tasks to run seemingly simultaneously. In Python, they execute one at a time because the Global Interpreter Lock permits only one thread to control the Python interpreter at any given moment.

Threads are well suited to tasks that wait for external events, such as I/O operations. However, CPU-bound tasks that require significant computation may not benefit from threading because of the GIL. For true parallelism in CPU-bound tasks, consider using the multiprocessing module.

Using threading can enhance the design clarity of programs, making them easier to manage and understand, even if it doesn't always improve performance. Understanding the stages of a thread's lifecycle, from creation to termination, helps you use threading effectively in Python applications.

Stages of a Thread’s lifecycle in Python:

a) New: The ‘new’ stage is where the thread has been created but has not yet started executing.

b) Ready: The ‘ready’ stage is where the thread is in the queue to be scheduled by the Operating System (OS).

c) Running: The ‘running’ stage is where the thread is being executed.

d) Blocked: The ‘blocked’ stage is where the thread is waiting for an available resource.

e) Terminated: The ‘terminated’ stage is where the thread has finished executing and is no longer active.

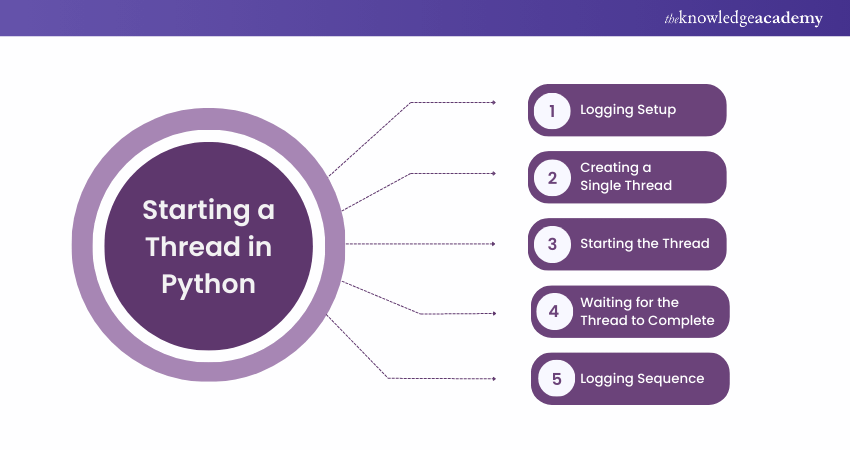
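Python does not expose these stage names directly, but a thread's ‘is_alive()’ method gives a rough view of them. The following is a minimal sketch, not a definitive mapping: the names ‘worker’ and ‘release’ are illustrative, and an ‘Event’ is used only to keep the thread blocked long enough to inspect it.

```python
import threading


def worker(release):
    # Blocks until the main thread sets the event (the "blocked" stage).
    release.wait()


release = threading.Event()
t = threading.Thread(target=worker, args=(release,))

# New: the Thread object exists but start() has not been called yet.
created_alive = t.is_alive()

t.start()
# Ready, running and blocked are all reported simply as "alive".
started_alive = t.is_alive()

release.set()  # unblock the worker so that it can finish
t.join()
# Terminated: the target function has returned.
finished_alive = t.is_alive()

print(created_alive, started_alive, finished_alive)  # False True False
```

The operating system decides when a thread moves between ready, running and blocked, so Python code can usually only tell whether a thread has started and whether it has finished.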

Starting a Thread in Python

Starting a thread in Python involves using the threading module, which provides a high-level way to create and manage threads. Here's a basic example to demonstrate how to start a thread:

    import logging
    import threading
    import time


    def thread_function(name):
        logging.info("Thread %s: starting", name)
        time.sleep(2)
        logging.info("Thread %s: finishing", name)


    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO, datefmt="%H:%M:%S")

        logging.info("Main    : before creating thread")
        x = threading.Thread(target=thread_function, args=(1,))
        logging.info("Main    : before running thread")
        x.start()
        logging.info("Main    : wait for the thread to finish")
        x.join()
        logging.info("Main    : all done")

Here’s the output (timestamps omitted):

    Main    : before creating thread
    Main    : before running thread
    Thread 1: starting
    Main    : wait for the thread to finish
    Thread 1: finishing
    Main    : all done

Explanation:

1) Thread Function Definition: A function (‘thread_function’) is defined to simulate a task by logging its start, pausing for 2 seconds, and then logging its completion.

2) Logging Setup: Logging is configured to display timestamps and messages to track the sequence of events.

3) Creating a Single Thread: The main program creates a ‘Thread’ instance to run ‘thread_function’ with a specific argument, preparing it to run concurrently with the main program.

4) Starting the Thread: The thread is started using ‘.start()’, initiating the execution of ‘thread_function’ in a separate thread.

5) Waiting for the Thread to Complete: The main program calls ‘.join()’ to wait for the thread to finish, ensuring it pauses until the thread completes its task.

6) Logging Sequence: Logging statements provide insights into the flow of execution, indicating when the thread starts, runs, and finishes and when the main program resumes.

Daemon Threads

In computer science, a daemon is a process that runs in the background. In Python Threading, a daemon thread is shut down immediately when the program exits. Think of a daemon thread as one that runs in the background without needing an explicit shutdown.

If a program is running non-daemon threads, it will wait for those threads to complete before terminating. However, daemon threads are killed instantly when the program exits.

Consider the previous program with the ‘x.join()’ call removed. ‘__main__’ logs "all done", and the program then pauses for about 2 seconds until the thread logs that it is finishing. This pause occurs because Python waits for non-daemon threads to complete. During program shutdown, Python’s threading module waits for every running thread that is not a daemon, much as if it had called ‘.join()’ on each of them.

This behaviour is often desired, but there are alternatives. For example, you can create a daemon thread by setting ‘daemon=True’ when constructing the ‘Thread’:

    x = threading.Thread(target=thread_function, args=(1,), daemon=True)

Running the program with a daemon thread, and without the call to ‘x.join()’, produces the following output:

    $ ./daemon_thread.py
    Main    : before creating thread
    Main    : before running thread
    Thread 1: starting
    Main    : wait for the thread to finish
    Main    : all done

The "Thread 1: finishing" line is missing because the daemon thread was killed when __main__ reached the end of its code and the program exited.
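As a further illustration, here is a minimal sketch of a daemon thread that never returns on its own. The ‘heartbeat’ function and the ‘ticks’ list are hypothetical names chosen for this example; the point is only that the script can still exit, because the thread was created with ‘daemon=True’.

```python
import threading
import time


def heartbeat(ticks):
    # Hypothetical background task: loops forever and never returns.
    while True:
        ticks.append(time.monotonic())
        time.sleep(0.01)


ticks = []
background = threading.Thread(target=heartbeat, args=(ticks,), daemon=True)
background.start()

time.sleep(0.1)  # let the daemon run briefly
print("daemon flag:", background.daemon)
print("still alive:", background.is_alive())
# The script ends here. Because the thread is a daemon, the interpreter
# exits without waiting for heartbeat() to return.
```

Because a daemon thread can be stopped at any point, it is a poor fit for work that must finish cleanly, such as writing a file.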

join() a Thread

Daemon threads are useful, but sometimes you need to wait for a thread to finish before the program moves on. To do this, call the thread's .join() method, as the original code does just before logging "all done":

    x.join()

Whether the thread is a daemon or not, .join() will wait until it finishes, ensuring orderly completion before the program exits.
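‘.join()’ also accepts an optional ‘timeout’ in seconds. The sketch below, with an illustrative ‘slow_task’ function, shows one way to wait for a limited time and then check ‘is_alive()’ to see whether the thread actually finished.

```python
import threading
import time


def slow_task():
    time.sleep(0.5)  # stands in for a slow operation


t = threading.Thread(target=slow_task)
t.start()

t.join(timeout=0.1)       # wait at most 0.1 seconds
timed_out = t.is_alive()  # still running, so the join timed out

t.join()                  # now wait without a limit
finished = not t.is_alive()

print(timed_out, finished)  # True True
```

‘.join()’ always returns ‘None’, so checking ‘is_alive()’ afterwards is the way to tell a timeout from a normal finish.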

Multiple Threading in Python

Multiple Threading, or ‘multithreading’, refers to running several threads concurrently within one program. It is typically used to improve a program’s throughput or responsiveness. Here is an example to introduce multithreading in Python:

    import threading
    import time


    # Function to print numbers from 1 to 5
    def print_numbers():
        for i in range(1, 6):
            print(f"Number {i}")
            time.sleep(1)


    # Function to print letters from 'a' to 'e'
    def print_letters():
        for letter in 'abcde':
            print(f"Letter {letter}")
            time.sleep(1)


    # Create two threads
    thread1 = threading.Thread(target=print_numbers)
    thread2 = threading.Thread(target=print_letters)

    # Start both threads
    thread1.start()
    thread2.start()

    # Wait for both threads to finish
    thread1.join()
    thread2.join()

    print("Both threads have finished.")

Here’s a sample output (the exact interleaving can vary between runs):

    Number 1
    Letter a
    Number 2
    Letter b
    Number 3
    Letter c
    Number 4
    Letter d
    Number 5
    Letter e
    Both threads have finished.

Explanation: In this example, the code imports the ‘threading’ and ‘time’ modules and defines two functions, ‘print_numbers’ and ‘print_letters’. Each function prints a series of numbers or letters, with a one-second delay after each print.

The code then creates two ‘Thread’ objects, ‘thread1’ and ‘thread2’, and assigns ‘print_numbers’ and ‘print_letters’ as their respective targets.

Both threads are started with the start() method. The join() method is then called on each thread so that the main program waits for both to finish before printing “Both threads have finished.”

When the code runs, both threads execute concurrently, each pausing for one second between prints. The exact interleaving of numbers and letters can vary from run to run, but both threads keep running side by side until they finish their tasks.
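The same pattern scales to more than two threads by keeping the ‘Thread’ objects in a list. The sketch below is illustrative only: ‘fetch’ is a hypothetical function whose ‘time.sleep()’ stands in for a network or disk wait, and the timing shows that the waits overlap rather than add up.

```python
import threading
import time


def fetch(item, results):
    time.sleep(0.2)  # stands in for a network or disk wait
    results[item] = item * 2


results = {}
threads = [threading.Thread(target=fetch, args=(i, results)) for i in range(5)]

start = time.perf_counter()
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.perf_counter() - start

print(sorted(results.items()))
print(f"elapsed: {elapsed:.2f}s")  # well under 5 x 0.2s, since the waits overlap
```

Starting every thread before joining any of them is what allows the waits to overlap. Joining each thread immediately after starting it would run the tasks one after another.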

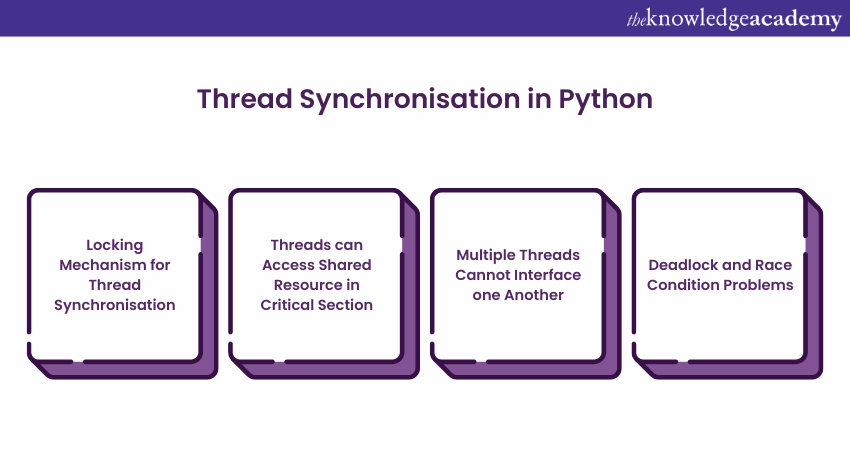

Thread Synchronisation in Python

Python’s ‘threading’ module offers a locking mechanism that is easy to use and lets you synchronise threads. You create a new lock by calling ‘threading.Lock()’, which returns the lock object.

A lock ensures that two or more concurrent threads do not execute the same program segment, called the critical section, at the same time. The critical section is the part of the program where threads access a shared resource.

Synchronisation in Python refers to ensuring that two or more threads do not interfere with one another by accessing the same resource simultaneously. For example, consider two or more threads that update a shared list at the same time. If an update takes several steps, such as checking the list and then adding an item, the steps of different threads can interleave, so items may be lost or duplicated and the state of the list may become inconsistent.
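A minimal sketch of a lock guarding a critical section is shown below. The names ‘seen’, ‘seen_lock’ and ‘record’ are illustrative; the ‘with’ statement acquires the lock on entry and releases it on exit, even if an exception is raised.

```python
import threading

seen = []                     # shared resource
seen_lock = threading.Lock()  # guards every access to `seen`


def record(value):
    # Critical section: the membership test and the append must not be
    # interleaved with another thread's test-and-append.
    with seen_lock:
        if value not in seen:
            seen.append(value)


threads = [threading.Thread(target=record, args=(n % 3,)) for n in range(30)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(seen))  # [0, 1, 2]
```

Without the lock, two threads could both find a value missing and both append it, leaving a duplicate in the list.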

Furthermore, two problems commonly arise in concurrent programs:

1) Deadlock

A deadlock is one of the most time-consuming problems developers face when designing concurrent systems. It can be illustrated with the ‘Dining Philosophers’ problem, introduced by the Dutch computer scientist Edsger Wybe Dijkstra.

In this problem, five philosophers are seated at a round table, each with a plate of food. There are five forks on the table, one between each pair of neighbours. A philosopher needs two forks at the same time, the one on the left and the one on the right, in order to eat.

Two conditions apply to all five philosophers. First, each philosopher is always either eating or thinking. Second, a philosopher must hold both adjacent forks to eat. The problem arises when every philosopher picks up the fork on the left at the same moment.

They all now have to wait for the right fork to become available, but none of them will put down the fork they already hold until they have eaten. The right fork therefore never becomes available to anyone, and the dinner table is in a state of deadlock.

The same situation can arise in concurrent systems. The forks represent system resources, and each philosopher represents a process or thread competing for access to those resources.

Here is a code example to demonstrate the state of deadlock in multi-threading in Python:

    import threading
    import time

    # Create two locks
    lock1 = threading.Lock()
    lock2 = threading.Lock()


    # Function that acquires lock1 and then lock2
    def thread1_function():
        print("Thread 1: Acquiring lock1...")
        lock1.acquire()
        print("Thread 1: Acquired lock1. Waiting to acquire lock2...")
        time.sleep(0.1)  # give the other thread time to take its first lock
        lock2.acquire()
        print("Thread 1: Acquired lock2.")
        lock2.release()
        lock1.release()


    # Function that acquires lock2 and then lock1
    def thread2_function():
        print("Thread 2: Acquiring lock2...")
        lock2.acquire()
        print("Thread 2: Acquired lock2. Waiting to acquire lock1...")
        time.sleep(0.1)  # give the other thread time to take its first lock
        lock1.acquire()
        print("Thread 2: Acquired lock1.")
        lock1.release()
        lock2.release()


    # Create two threads
    thread1 = threading.Thread(target=thread1_function)
    thread2 = threading.Thread(target=thread2_function)

    # Start both threads
    thread1.start()
    thread2.start()

    # Wait for both threads to finish (this will never happen due to the deadlock)
    thread1.join()
    thread2.join()

    print("Both threads have finished.")

Explanation: The code first creates two new locks, namely ‘lock1’ and ‘lock2’, with the use of ‘threading.Lock()’. This is then followed by the definition of two functions, namely ‘thread1_function’ and ‘thread2_function’. These defined functions represent the actions of both threads, and they are also programmed to attempt access to the locks in a specific order.

The order in this case is first ‘lock1’ followed by ‘lock2’ for ‘thread1_function’, and vice versa for ‘thread2_function’. You can then create two new threads named ‘thread1’ and ‘thread2’ and assign both their respective functions as targets.

These newly created threads are started with the ‘start()’ method. Because the two threads acquire the locks in opposite orders, each can end up holding one lock while waiting for the other, and neither can make progress. The program then hangs (it does not crash) and never reaches the line that prints “Both threads have finished.”
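One common way to avoid this kind of deadlock is to make every thread acquire the locks in the same order. The sketch below is one possible fix rather than the only one; ‘worker’ and ‘finished’ are illustrative names, and the short sleep only encourages the two threads to overlap.

```python
import threading
import time

lock1 = threading.Lock()
lock2 = threading.Lock()
finished = []


def worker(name):
    # Both threads take lock1 first and lock2 second, so neither can hold
    # one lock while waiting for a lock that the other already holds.
    with lock1:
        time.sleep(0.05)
        with lock2:
            finished.append(name)


threads = [
    threading.Thread(target=worker, args=(name,))
    for name in ("Thread 1", "Thread 2")
]
for t in threads:
    t.start()
for t in threads:
    t.join(timeout=5)

print(sorted(finished))  # ['Thread 1', 'Thread 2']
```

Another option is to pass a ‘timeout’ to ‘acquire()’ and back off when the lock cannot be obtained, at the cost of more complicated retry logic.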

2) Race condition

A race condition in Python is basically a failure case wherein the program’s behaviour is reliant on the order by which two or more threads are executed. This entails the unpredictability of the program’s behaviour because it changes with each execution cycle.

Moreover, such conditions are a real problem in Python when using threads, despite the presence of the Global Interpreter Lock (GIL). There are various types of race condition, grouped under two primary categories:

a) Race conditions involving shared variables: A common race condition involves two or more threads attempting to modify the same variable. Consider one thread that adds to a variable while a second thread subtracts from it.

These threads can be referred to respectively as the ‘Adder thread’ and the ‘Subtractor thread’. The Operating System may switch the execution context from the Adder thread to the Subtractor thread while the variable is being updated.

Adding to or subtracting from the variable comprises three steps: reading the variable’s current value, calculating the new value, and writing the new value back to the variable. If a context switch happens between these steps, one thread can overwrite the other’s update, and that update is lost.

b) Race conditions involving the coordination of multiple threads: The second type of race condition arises when two threads try to coordinate their behaviour. For example, two threads can coordinate using ‘threading.Condition’, where one thread calls wait() to be notified of some change in the application’s state.

A race occurs if the second thread calls notify() before the first thread has called wait(). The first thread then misses the notification and may wait forever.
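For the shared-variable case in (a), a lock can make the read, calculate and write steps behave as a single unit. The following is a minimal sketch; the ‘state’ dictionary and the ‘adder’ and ‘subtractor’ functions are illustrative names, not part of any library.

```python
import threading

state = {"value": 0}           # shared variable
value_lock = threading.Lock()  # guards every update to state["value"]


def adder(times):
    for _ in range(times):
        with value_lock:       # read, calculate and write as one unit
            state["value"] += 1


def subtractor(times):
    for _ in range(times):
        with value_lock:
            state["value"] -= 1


a = threading.Thread(target=adder, args=(100_000,))
s = threading.Thread(target=subtractor, args=(100_000,))
a.start()
s.start()
a.join()
s.join()

print(state["value"])  # 0
```

With the lock in place the final value is always 0. Without it, the result depends on when the threads are switched and can differ from run to run.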

Concept of Producer-Consumer in Python Threading

The producer-consumer problem is a fundamental concept in concurrent programming, illustrating the synchronisation challenges between two interdependent processes.

Producer-Consumer Problem:

a) A classic synchronisation problem in computer science.

b) Involves two main components: The producer and the consumer.

Producer Role:

a) Generates data or messages.

b) Writes messages to a shared resource, such as a buffer or database.

c) Operates continuously, often generating messages in bursts.

Consumer Role:

a) Reads and processes messages from the shared resource.

b) Writes processed messages to another resource, like a local disk.

c) Must efficiently handle incoming bursts of messages to avoid data loss.

Message Handling:

a) Messages do not arrive at regular intervals; they come in bursts.

b) Requires a mechanism to manage the flow of messages from producer to consumer.

Pipeline/Buffers:

a) Used to hold messages temporarily.

b) Smooths out message bursts and ensures orderly processing.

c) Acts as an intermediary, balancing speed differences between producer and consumer.

Synchronisation Mechanisms:

a) Ensures that the producer and consumer do not interfere with each other.

b) Prevents data corruption and ensures all messages are processed correctly.

c) Common mechanisms include locks, semaphores, and conditions.

Performance Optimisation:

a) Optimises handling of peak loads and irregular message bursts.

b) Involves tuning the buffer size, message generation and consumption frequency, and synchronisation mechanisms.

c) Ensures the system runs efficiently under varying load conditions.
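The roles described above can be sketched with the standard library's ‘queue.Queue’, which acts as the buffer and handles the locking internally. This is one possible arrangement, not the only one: the ‘SENTINEL’ end-of-stream marker, the message format and the buffer size of 5 are assumptions made for the example.

```python
import queue
import threading

SENTINEL = None                    # hypothetical end-of-stream marker
pipeline = queue.Queue(maxsize=5)  # bounded buffer between the two threads
processed = []


def producer(count):
    for i in range(count):
        pipeline.put(f"message-{i}")  # blocks while the buffer is full
    pipeline.put(SENTINEL)


def consumer():
    while True:
        message = pipeline.get()      # blocks while the buffer is empty
        if message is SENTINEL:
            break
        processed.append(message.upper())


p = threading.Thread(target=producer, args=(20,))
c = threading.Thread(target=consumer)
p.start()
c.start()
p.join()
c.join()

print(len(processed), processed[0], processed[-1])  # 20 MESSAGE-0 MESSAGE-19
```

The ‘maxsize’ argument is the tuning knob for buffer size: a larger buffer absorbs bigger bursts, while a smaller one makes the producer wait sooner when the consumer falls behind.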

Exploring the different objects in Threading

The ‘threading’ module offers several more objects to work with, depending on the use case. Here are some of them:

1) Semaphore

A semaphore in Python threading is a counter object with a few distinct properties. One is that counting is atomic, meaning the OS will not swap out the thread in the middle of incrementing or decrementing the counter. Semaphores typically protect resources that have a limited capacity.

The internal counter increments when a thread calls ‘.release()’ and decrements when a thread calls ‘.acquire()’. If a thread calls ‘.acquire()’ when the counter is zero, that thread blocks until another thread calls ‘.release()’.
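As a rough illustration, the sketch below uses a ‘threading.Semaphore’ to let at most two threads use a resource at once. The names ‘slots’, ‘stats’ and ‘use_resource’ are hypothetical, and the sleep stands in for real work.

```python
import threading
import time

MAX_CONCURRENT = 2
slots = threading.Semaphore(MAX_CONCURRENT)
stats = {"active": 0, "peak": 0}
stats_lock = threading.Lock()


def use_resource():
    with slots:  # .acquire() on entry, .release() on exit
        with stats_lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.05)  # pretend to use the limited resource
        with stats_lock:
            stats["active"] -= 1


threads = [threading.Thread(target=use_resource) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("peak concurrent users:", stats["peak"])  # never more than MAX_CONCURRENT
```

Six threads are started, but the semaphore's counter never lets more than two of them inside the ‘with slots’ block at the same time.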

2) Timer

A ‘threading.Timer’ schedules a function to be called after a fixed period has elapsed. You create a Timer by passing the number of seconds to wait and the function to call. The timer is started by calling ‘.start()’ and can be stopped before it fires by calling ‘.cancel()’.
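A minimal sketch of both calls follows. The ‘remind’ function and the ‘fired’ list are illustrative names; one timer is left to run and the other is cancelled before its delay has passed.

```python
import threading

fired = []


def remind(label):
    fired.append(label)


kept = threading.Timer(0.1, remind, args=("kept",))
cancelled = threading.Timer(0.1, remind, args=("cancelled",))

kept.start()
cancelled.start()
cancelled.cancel()  # stops the timer before its function runs

kept.join()         # Timer is a Thread subclass, so join() works
cancelled.join()

print(fired)  # ['kept']
```

‘.cancel()’ only has an effect while the timer is still waiting. Once the function has started running, cancelling does not interrupt it.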

3) Condition

The Condition object in Python Threading is used to synchronise threads around a change in shared state. A condition variable is always associated with a lock, which you can pass in or let the Condition create automatically. Threads call wait() to block until another thread calls notify() or notify_all().
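As a rough sketch of how a Condition can be used safely, the snippet below calls ‘wait_for()’ with a predicate. The waiting thread checks the shared flag before blocking, so it does not hang if the notification was sent before it started waiting. The ‘state’ dictionary and the ‘waiter’ and ‘notifier’ functions are illustrative names.

```python
import threading

condition = threading.Condition()
state = {"ready": False}
log = []


def waiter():
    with condition:
        # wait_for() checks the flag first, so a notify that has already
        # happened is not missed.
        condition.wait_for(lambda: state["ready"])
        log.append("waiter saw ready")


def notifier():
    with condition:
        state["ready"] = True
        condition.notify_all()
        log.append("notifier set ready")


n = threading.Thread(target=notifier)
w = threading.Thread(target=waiter)
n.start()
n.join()  # force the awkward order: notify happens before wait
w.start()
w.join(timeout=5)

print(log)  # ['notifier set ready', 'waiter saw ready']
```

Pairing every wait with a check of the shared state is the usual defence against a notification that arrives too early.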

4) Event

The Event object in Python Threading offers a simple mechanism for communication between threads: one thread signals that an event has occurred, and other threads wait for it. A waiting thread resumes once the signalling thread sets the event. The Event object holds an internal flag, which is set to true with set() and reset to false with clear().
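The flag and the two methods can be seen in a short sketch. The names ‘ready’, ‘waiter’ and ‘log’ are illustrative.

```python
import threading

ready = threading.Event()
log = []


def waiter():
    ready.wait()  # blocks until the flag is true
    log.append("waiter resumed")


t = threading.Thread(target=waiter)
t.start()

log.append(f"flag before set: {ready.is_set()}")
ready.set()    # flag becomes true and waiting threads wake up
t.join()

ready.clear()  # flag goes back to false
log.append(f"flag after clear: {ready.is_set()}")

print(log)
# ['flag before set: False', 'waiter resumed', 'flag after clear: False']
```

Unlike a Condition, an Event remembers that it was set, so a thread that calls ‘wait()’ after ‘set()’ returns immediately.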

Advantages of Threading

The following are the advantages of Threading:

1) Performance: Overlaps tasks that spend time waiting, such as network or disk I/O, which shortens total run time. CPU-bound Python code gains little because of the GIL.

2) Responsiveness: Keeps user interfaces (UI) responsive by handling background tasks.

3) Efficiency: Shares memory between threads for faster data exchange and uses fewer resources than processes.

4) Simplified Design: Breaks a program into smaller, manageable tasks that run concurrently.

5) Real-time Execution: Lets time-sensitive tasks run alongside long-running work instead of waiting behind it, although Python threads offer no priority or hard real-time guarantees.

6) Scalability: Handles many simultaneous I/O-bound operations. To spread CPU-bound work across multiple cores, use the multiprocessing module.

Conclusion

Mastering Python Threading is key to boosting your programming efficiency and performance. By effectively managing threads and synchronising processes, you can unlock the full potential of multithreading. Embrace Threading in Python to elevate your coding skills and transform your applications!

Frequently Asked Questions

Common challenges with threading in Python include managing the Global Interpreter Lock and avoiding race conditions and deadlocks. Additionally, ensuring proper thread synchronisation and balancing multithreading complexity with performance gains, particularly for CPU-bound tasks, can be difficult.

Python multithreading is commonly used to keep GUIs responsive, handle web server requests, and overlap database queries and other I/O. It also appears in robotics automation and game development, where a program must manage several tasks at once. CPU-heavy work such as encryption/decryption or audio/video rendering benefits only when the underlying library releases the GIL.
