Ever wondered how huge amounts of data are processed efficiently? One widely used answer is MapReduce, a programming model for handling big data. In this blog, we explain what MapReduce is and trace its evolution from its origins at Google to its widespread use today. We cover how MapReduce works, highlight its key features, and discuss its top uses.

We also compare it with Databricks Delta Engine to highlight their differences. Whether you're a data enthusiast or simply curious, this blog gives you a clear and engaging overview of MapReduce and its importance in the world of big data.

Table of Contents

1) What is MapReduce?

2) The Evolution of MapReduce

3) How MapReduce Works

4) Key Features of MapReduce

5) Top 5 Applications of MapReduce

6) Differences Between MapReduce and Databricks Delta Engine

7) Conclusion

What is MapReduce?

MapReduce is a programming model for processing large datasets across a distributed cluster of computers. Developed at Google, it enables efficient, scalable, and fault-tolerant data processing, and it is widely used in big data frameworks such as Hadoop. It hides much of the complexity of writing parallel and distributed code, making it easier to process vast amounts of data efficiently. The model is built around two main functions:

a) Map: The map function takes input records (for example, lines of text) and processes each one independently, transforming it into zero or more intermediate key-value pairs.

b) Reduce: The framework groups the intermediate key-value pairs by key. The reduce function then receives each key together with all of its values and combines them (for example, by summing) to produce the final output.
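
To make the two functions concrete, here is a minimal, single-process Python sketch of the classic word-count example. The names `map_fn`, `reduce_fn` and `run_word_count` are hypothetical and are not part of Hadoop's or any other framework's API. A real framework would run many map and reduce tasks in parallel on different machines and would perform the grouping step itself.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_fn(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit an intermediate (word, 1) pair for every word in one line."""
    for word in line.lower().split():
        yield word, 1


def reduce_fn(word: str, counts: Iterable[int]) -> tuple[str, int]:
    """Reduce: combine all values collected for one word into a total count."""
    return word, sum(counts)


def run_word_count(lines: list[str]) -> dict[str, int]:
    """Tiny in-memory driver: map every line, group by key, then reduce each group."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for line in lines:
        for key, value in map_fn(line):
            grouped[key].append(value)  # the grouping a real framework does for you
    return dict(reduce_fn(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(run_word_count(["the quick brown fox", "the lazy dog", "The fox"]))
```

For this sample input, "the" is counted three times and "fox" twice. The developer only writes the map and reduce logic; distribution, grouping and fault tolerance are the framework's job.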

The Evolution of MapReduce

MapReduce has evolved from a pioneering data processing model developed at Google into a cornerstone of the modern big data ecosystem. Here are the key milestones in its evolution.

1) Early Beginnings (2004)

a) Google's Paper: Google publishes the foundational paper on MapReduce, introducing the concept and detailing its architecture for processing large data sets.

b) Motivation: The model grew out of the need to process vast amounts of data efficiently across Google's infrastructure.

2) Hadoop Emerges (2006)

a) Apache Hadoop: The Apache Software Foundation releases Hadoop, an open-source implementation of MapReduce, making the model widely accessible.

b) Community Growth: Rapid adoption and growth of the Hadoop ecosystem as developers contribute to its development.

3) Improvements in Hadoop (2008-2012)

a) Hadoop 1.0: The first stable 1.0 release (2011) consolidated years of work on fault tolerance, scalability, and the Hadoop Distributed File System (HDFS), which had been part of Hadoop since its launch.

b) Ecosystem Expansion: Development of related projects like Hive (data warehousing), Pig (data flow scripting), and HBase (NoSQL database).

4) Rise of Alternative Frameworks (2013-2015)

a) Spark: Apache Spark emerges, offering in-memory processing and faster performance compared to Hadoop MapReduce.

b) Flink and Storm: Other frameworks like Apache Flink and Apache Storm introduce real-time data processing capabilities.

5) Integration and Hybrid Approaches (2016-2018)

a) Hadoop 2.x (YARN): Yet Another Resource Negotiator (YARN), which first became generally available with Hadoop 2 in 2013, decouples resource management from MapReduce, allowing other processing models to run on Hadoop clusters.

b) Hybrid Solutions: Increased integration of Hadoop with other big data tools, combining batch processing (MapReduce) with real-time processing (Spark, Flink).

6) Modern Big Data Ecosystem (2019 Onwards)

a) Cloud Adoption: Major cloud providers like AWS, Google Cloud, and Azure offer managed Hadoop and Spark services, simplifying deployment and scaling.

b) Kubernetes and Containers: Shift towards containerised environments and Kubernetes for orchestrating big data workloads.

c) AI and Machine Learning: Integration of MapReduce frameworks with AI and machine learning tools to handle complex data processing tasks.

7) Future Trends (Beyond 2023)

a) Serverless Computing: Potential for serverless MapReduce architectures to further simplify big data processing.

b) Edge Computing: Expansion of big data processing to edge devices, reducing latency and improving performance.

c) Advanced AI Integration: Continued integration with advanced AI and machine learning models to enhance data processing capabilities.

How MapReduce Works

MapReduce processes large datasets in a series of phases. Here’s a simple explanation of each phase, a topic that often comes up in MapReduce interview questions:

1) Mapper

a) In the first phase, data is filtered and transformed into key-value pairs

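b) For plain-text input, each record the mapper receives typically has the line's byte offset in the file as its "key" and the line's content as its "value"; the mapper then emits its own key-value pairs, such as (word, 1)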

2) Shuffle

a) In the second phase, the output from the mappers is sorted and grouped by key

b) All values that share the same key are grouped together and sent to the same reducer; no values are discarded

c) The output is still keyed, but each key is now paired with the list of all its values rather than a single value

3) Reducer

a) In the third phase, the grouped key-value pairs from the shuffle phase are processed

b) The values for each key are aggregated (for example, summed or counted) to produce the final output

4) Combiner (Optional)

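a) This phase improves performance by running a local, partial reduce on each mapper's output, aggregating values that share the same key before the shuffle phase

b) This reduces the amount of data transferred during the shuffle, making the job faster and more efficient; it is only safe when the aggregation is associative and commutative, such as summing counts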
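
The phases above can be traced in a single-process Python sketch of word count. It is only a simulation under simplifying assumptions. Each string in `splits` stands in for an input split handled by one map task. `read_records` mimics plain-text input by keying each line on its byte offset. All function names are hypothetical rather than Hadoop API calls. The `use_combiner` flag shows how the combiner changes the number of pairs that reach the shuffle.

```python
from collections import defaultdict
from typing import Iterator

Pair = tuple[str, int]


def read_records(split: str) -> Iterator[tuple[int, str]]:
    """Yield (byte offset, line) records, similar to how text input reaches a mapper."""
    offset = 0
    for line in split.splitlines(keepends=True):
        yield offset, line.rstrip("\n")
        offset += len(line.encode("utf-8"))


def mapper(offset: int, line: str) -> Iterator[Pair]:
    # The input key (byte offset) is ignored; emit (word, 1) for every word.
    for word in line.lower().split():
        yield word, 1


def combiner(pairs: list[Pair]) -> list[Pair]:
    # Local, partial reduce on one mapper's output: sum counts per word
    # before anything would be sent over the network.
    partial: dict[str, int] = defaultdict(int)
    for word, count in pairs:
        partial[word] += count
    return list(partial.items())


def shuffle(map_outputs: list[list[Pair]]) -> dict[str, list[int]]:
    # Group every value by key and sort by key; no values are discarded.
    grouped: dict[str, list[int]] = defaultdict(list)
    for output in map_outputs:
        for word, count in output:
            grouped[word].append(count)
    return dict(sorted(grouped.items()))


def reducer(word: str, counts: list[int]) -> Pair:
    return word, sum(counts)


def run_job(splits: list[str], use_combiner: bool = True) -> tuple[dict[str, int], int]:
    """Run the phases in order and report how many pairs enter the shuffle."""
    map_outputs = []
    for split in splits:  # each split would be processed by a separate map task
        pairs = [kv for offset, line in read_records(split) for kv in mapper(offset, line)]
        map_outputs.append(combiner(pairs) if use_combiner else pairs)
    shuffled_pairs = sum(len(output) for output in map_outputs)
    grouped = shuffle(map_outputs)
    result = dict(reducer(word, counts) for word, counts in grouped.items())
    return result, shuffled_pairs


if __name__ == "__main__":
    splits = ["to be or\nnot to be", "to be is to do"]
    for flag in (False, True):
        counts, transferred = run_job(splits, use_combiner=flag)
        print(f"combiner={flag}: {transferred} pairs shuffled -> {counts}")
```

Both runs produce the same word counts for the sample input, but the combiner cuts the number of pairs entering the shuffle from 11 to 8. Summing is a safe combiner because addition is associative and commutative. Hadoop may run a combiner zero, one, or several times, so an operation such as computing an average directly should not be used as a combiner.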

Key Features of MapReduce

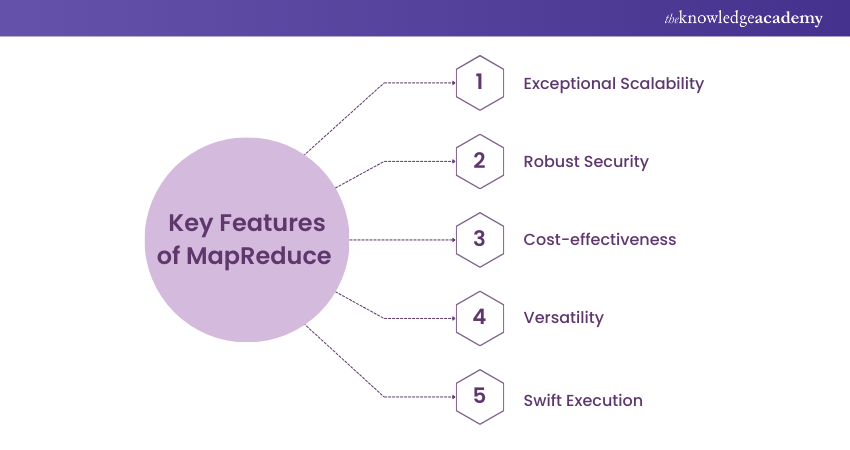

MapReduce's key features include scalability, security, cost-effectiveness, versatility, and fast parallel execution:

a) Exceptional Scalability: MapReduce scales well to very large data volumes. A job is split into many independent map and reduce tasks that can be distributed across nodes, so a cluster can take on larger workloads largely by adding more machines.

b) Robust Security: When run on a secured Hadoop cluster, MapReduce jobs benefit from Kerberos authentication, HDFS file permissions, and service-level access controls. These help protect sensitive data against unauthorised access in large-scale data operations.

c) Cost-effectiveness: MapReduce is designed to run on clusters of inexpensive commodity hardware and handles machine failures in software. Organisations can therefore process large datasets without investing in high-end servers.

d) Versatility: Any computation that can be expressed as map and reduce functions can run on the framework. MapReduce therefore supports a broad range of applications, from data warehousing to social media analytics and fraud detection systems, which has contributed to its widespread use across many domains.

e) Swift Execution: By processing tasks in parallel across many nodes, MapReduce can complete large batch jobs far faster than a single machine could. It is, however, a batch-oriented system with relatively high job start-up and disk I/O overhead, so it is not designed for low-latency or real-time applications.
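
One reason the model scales is that each intermediate key is routed to exactly one reduce task, so adding reducers spreads the keys across more machines. The sketch below assumes a simple hash-modulo scheme in the spirit of Hadoop's default `HashPartitioner`, which takes the key's `hashCode()` modulo the number of reduce tasks. It uses `zlib.crc32` because Python's built-in `hash()` for strings is randomised between runs. The function names are hypothetical.

```python
import zlib
from collections import Counter


def partition(key: str, num_reducers: int) -> int:
    """Assign a key to a reducer using a stable hash (CRC32) modulo the reducer count."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def reducer_loads(keys: list[str], num_reducers: int) -> Counter:
    """Count how many distinct keys each reducer would receive."""
    return Counter(partition(key, num_reducers) for key in keys)


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(10_000)]
    for reducers in (2, 4, 8):
        loads = reducer_loads(keys, reducers)
        print(reducers, "reducers ->", dict(sorted(loads.items())))
```

The same key always maps to the same reducer, which guarantees that all of its values meet in one place. Meanwhile, different keys spread across the available reducers.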

Top 5 Applications of MapReduce

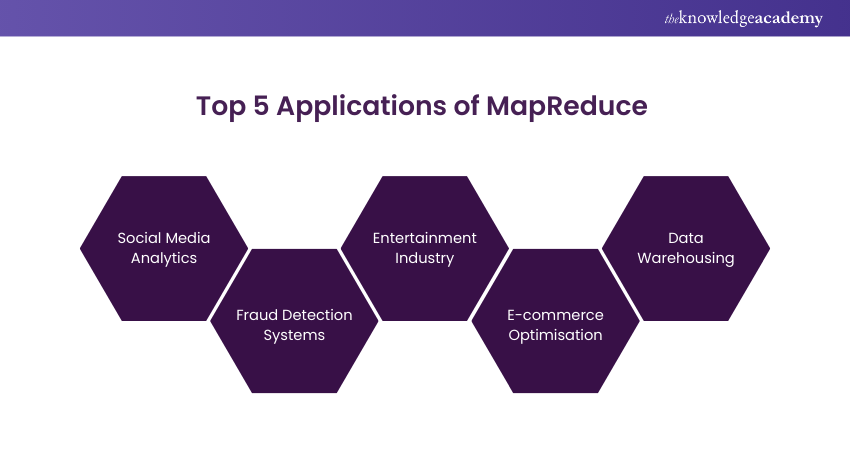

MapReduce has a wide range of applications in various industries. Here are the top 5 uses of MapReduce:

a) Social Media Analytics: MapReduce is used to analyse large volumes of social media data, such as posts and hashtags, to find trends and patterns. These insights help organisations make data-driven decisions and tailor their strategies to engage their target audience.

b) Fraud Detection Systems: MapReduce is used to analyse large histories of financial transactions for patterns that indicate fraud. This helps organisations strengthen their fraud detection capabilities, mitigate risks, and safeguard the integrity of financial systems.

c) Entertainment Industry: MapReduce is used to analyse user preferences and viewing history at scale. Streaming services can use the results to deliver personalised movie and TV recommendations, enhancing user experience and satisfaction.

d) E-commerce Optimisation: MapReduce is used to analyse customers' interests and purchase histories to identify buying patterns. This supports a personalised shopping experience for consumers while improving the efficiency of e-commerce operations.

e) Data Warehousing: MapReduce is used to process large volumes of data in data warehousing applications; for example, Apache Hive was originally built to translate SQL-like queries into MapReduce jobs. This helps organisations derive actionable insights from their data and supports informed decision-making across business functions.
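
Many data warehousing queries are aggregations of the form `SELECT category, SUM(amount) FROM sales GROUP BY category`. In MapReduce terms, the map function emits the grouping column as the key, and the reduce function computes the aggregate. The sketch below is a single-process illustration with made-up sales records and hypothetical function names. It does not reflect how Hive or any other tool actually plans its jobs.

```python
from collections import defaultdict
from typing import Iterator

# Hypothetical sales records: (order_id, category, amount).
SALES = [
    ("o1", "books", 12.50),
    ("o2", "games", 40.00),
    ("o3", "books", 7.50),
    ("o4", "music", 9.99),
    ("o5", "games", 20.00),
]


def map_sale(record: tuple[str, str, float]) -> Iterator[tuple[str, float]]:
    # Key = the GROUP BY column, value = the measure being aggregated.
    _order_id, category, amount = record
    yield category, amount


def reduce_category(category: str, amounts: list[float]) -> tuple[str, float]:
    # Equivalent to SUM(amount) for one group.
    return category, round(sum(amounts), 2)


def revenue_by_category(records: list[tuple[str, str, float]]) -> dict[str, float]:
    grouped: dict[str, list[float]] = defaultdict(list)
    for record in records:
        for key, value in map_sale(record):
            grouped[key].append(value)
    return dict(reduce_category(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    print(revenue_by_category(SALES))
```

Other aggregates such as `COUNT` or `MAX` follow the same pattern; only the reduce function changes.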

Differences Between MapReduce and Databricks Delta Engine

Discussed below are a few differences between MapReduce and Databricks Delta Engine.

Architecture

Here’s a breakdown based on their architecture.

a) MapReduce: MapReduce is a programming model used within the Hadoop framework. It divides data into smaller chunks for parallel processing on a distributed computing cluster.

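b) Databricks Delta Engine: Databricks Delta Engine is part of the Databricks Unified Analytics Platform. It builds on Apache Spark, adding a high-performance query engine (including the Photon vectorised execution engine) that operates on data stored in Delta Lake, a storage layer optimised for analytics workloads.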

Data Processing Paradigm

Here’s a breakdown based on their data processing paradigm.

a) MapReduce: MapReduce follows a two-phase processing paradigm, consisting of the Map phase for data transformation and the Reduce phase for aggregation.

b) Databricks Delta Engine: Databricks Delta Engine uses the Apache Spark processing engine, which can keep intermediate data in memory and chain many operations within a single job. This makes it more flexible than MapReduce and often much faster for iterative algorithms.
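
The two-phase structure matters for multi-step work. A computation that needs more than one map-reduce pass has to be expressed as a chain of separate jobs, and in Hadoop each job typically writes its output to HDFS for the next job to read. The sketch below imitates that pattern in a single process, using temporary JSON-lines files as a stand-in for HDFS. All helper names are hypothetical. Spark, by contrast, can cache intermediate datasets in memory between steps.

```python
import json
import os
import tempfile
from collections import defaultdict


def run_job(records, mapper, reducer, out_path):
    """Run one map + reduce pass and materialise its output as JSON lines on disk."""
    grouped = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            grouped[key].append(value)
    with open(out_path, "w", encoding="utf-8") as out:
        for key in sorted(grouped):
            for result in reducer(key, grouped[key]):
                out.write(json.dumps(result) + "\n")


def read_output(path):
    """Read a previous job's output back, one JSON record per line."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            yield json.loads(line)


# Job 1: classic word count.
def wc_map(line):
    for word in line.lower().split():
        yield word, 1


def wc_reduce(word, counts):
    yield word, sum(counts)


# Job 2: how many distinct words occur exactly N times?
def hist_map(record):
    _word, count = record  # a [word, count] pair written by job 1
    yield count, 1


def hist_reduce(count, ones):
    yield count, sum(ones)


def word_frequency_histogram(lines):
    with tempfile.TemporaryDirectory() as tmp:
        job1_out = os.path.join(tmp, "job1.jsonl")
        job2_out = os.path.join(tmp, "job2.jsonl")
        run_job(lines, wc_map, wc_reduce, job1_out)
        # The second job can only start from what the first job wrote to disk.
        run_job(read_output(job1_out), hist_map, hist_reduce, job2_out)
        return {count: n for count, n in read_output(job2_out)}


if __name__ == "__main__":
    print(word_frequency_histogram(["to be or not to be"]))
```

Every additional step adds another full write and read of intermediate data. This is why iterative algorithms, which repeat such steps many times, tend to run considerably faster on in-memory engines like Spark.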

Real-time Processing

Here’s a breakdown based on their real-time processing.

a) MapReduce: MapReduce is primarily designed for batch processing and may not be well-suited for real-time processing requirements.

b) Databricks Delta Engine: Databricks Delta Engine integrates with Apache Spark, which supports both batch and real-time processing, making it more versatile for modern data processing needs.

Storage Management

Here’s a breakdown based on their storage management.

a) MapReduce: MapReduce typically relies on distributed file systems like Hadoop Distributed File System (HDFS) for storage management.

b) Databricks Delta Engine: Databricks Delta Engine works with Delta Lake, which adds features such as ACID transactions and time travel (querying earlier versions of a table), providing data versioning and management directly within the storage layer.

Ease of Use

Here’s a breakdown based on their ease of use.

a) MapReduce: Developing and managing MapReduce jobs can be complex, requiring a deep understanding of the programming model and the Hadoop ecosystem.

b) Databricks Delta Engine: Databricks Delta Engine simplifies data processing tasks by providing a unified platform with notebooks, collaboration features, and an interactive environment that abstracts away many of the complexities associated with MapReduce.

Conclusion

MapReduce is an essential tool for Big Data processing and analysis, with a wide range of applications across industries. It offers scalability, security, cost-effectiveness, versatility, and fast parallel execution for batch workloads. With the continued growth of Big Data, understanding what MapReduce is and how it works remains valuable for businesses looking to gain insights from their data. We hope you enjoyed reading this blog.

Frequently Asked Questions

Which Businesses can Benefit from MapReduce?

Businesses that handle large volumes of data, like tech companies, e-commerce platforms, and financial institutions, can benefit from MapReduce. Any company needing to manage and gain insights from vast datasets can use MapReduce to improve decision-making and operations.

Are There Alternatives to MapReduce?

Yes, there are alternatives to MapReduce. Tools like Apache Spark, Apache Flink, and Databricks Delta Engine offer similar data processing capabilities with added features like in-memory computing and real-time data processing. These alternatives can sometimes be faster and more flexible.
