
Amidst the era of exponential data growth, Hadoop stands tall as a pioneering open-source framework. It is continuously reshaping how we manage, process, and derive insights from massive datasets. From its distributed storage capabilities to its innovative data processing framework, Hadoop's features have transformed the landscape of Big Data.

Are you eager to know how? In this blog, you will learn everything about the critical Features of Hadoop and how they help store, manage, and process data.

Table of Contents

1) What is Hadoop?

2) Features of Hadoop

a) Distributed storage

b) Scalability

c) Fault tolerance

d) Data processing framework

e) MapReduce

3) Conclusion

What is Hadoop?

Hadoop is an open-source, Java-based platform that manages the storage and processing of large volumes of data for applications. It relies on distributed storage and parallel processing to handle big data and analytics jobs. This is achieved by breaking down workloads into smaller tasks that can run at the same time.

The Hadoop framework is divided into four modules, which collectively form the Hadoop ecosystem:

Hadoop Distributed File System (HDFS): As the primary component of the Hadoop ecosystem, HDFS is a distributed file system where individual Hadoop nodes operate on locally stored data. This reduces network latency and provides high-throughput access to application data. In addition, administrators don’t need to define schemas up front.

Yet Another Resource Negotiator (YARN): YARN is a resource-management platform responsible for managing computer resources in clusters and using them to schedule users’ applications. It performs scheduling and resource allocation across the Hadoop system.

MapReduce: MapReduce is a programming model for large-scale data processing. In this model, subsets of larger datasets and instructions for processing the subsets are dispatched to multiple different nodes, where each subset is processed by a node in parallel with other processing jobs. After processing, the results from the individual subsets are combined into a smaller, more manageable dataset.

Hadoop Common: Hadoop Common includes the libraries and utilities used and shared by other Hadoop modules.

Beyond HDFS, YARN, and MapReduce, the entire Hadoop open-source ecosystem continues to grow and includes many tools and applications to help collect, store, process, analyse, and manage big data. These include Apache Pig, Apache Hive, Apache HBase, Apache Spark, Presto, and Apache Zeppelin.

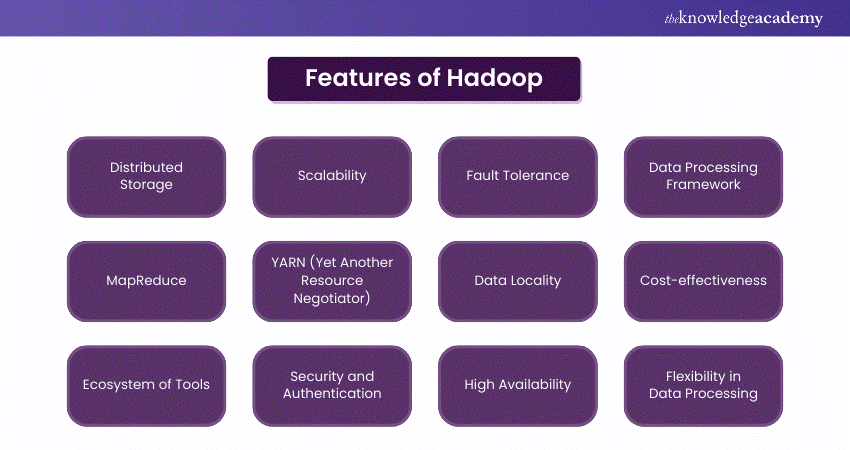

Features of Hadoop

Hadoop is equipped with several features. The top features and characteristics of the Hadoop framework are described below:

Distributed storage

Hadoop's distributed storage, powered by the Hadoop Distributed File System (HDFS), has revolutionised data storage. It is one of the robust Features of Hadoop that breaks down large files into smaller blocks and distributes them across multiple machines in a cluster. This approach offers several advantages.

Firstly, it enables massive storage capacity by utilising the combined resources of the cluster. Secondly, data redundancy is achieved through replication to ensure data remains accessible even during hardware failures. This distributed storage concept forms the foundation for Hadoop's fault tolerance and scalability, making it an ideal solution for managing vast datasets.
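To make the idea of blocks and replicas concrete, here is a minimal sketch in Python. It is not HDFS code: the 128 MB block size and the replication factor of 3 are assumed values (both are common HDFS defaults), the node names are hypothetical, and the round-robin placement is a simplification of the rack-aware policy a real cluster uses.

```python
BLOCK_SIZE_MB = 128   # assumed block size (a common HDFS default)
REPLICATION = 3       # assumed replication factor (a common HDFS default)


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the sizes (in MB) of the blocks a file would be cut into."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block may be smaller
    return sizes


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin.

    This toy policy only guarantees that the copies of one block land
    on different machines; it ignores racks and free disk space.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block_id: [nodes[(block_id + offset) % len(nodes)]
                   for offset in range(replication)]
        for block_id in range(num_blocks)
    }


blocks = split_into_blocks(300)  # a 300 MB file
layout = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
```

Here the 300 MB file becomes three blocks, and each block is stored on three different machines, so the cluster holds roughly 900 MB of raw data for it.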

Scalability

Scalability is a cornerstone of Hadoop Architecture. As data volume grows, traditional systems might struggle to keep pace. However, Hadoop's design is made to facilitate horizontal scaling. This means that instead of upgrading to more powerful, costly hardware, you can simply add more commodity machines to the cluster.

This horizontal scalability helps the system maintain its performance as datasets grow. Whether you are dealing with terabytes or petabytes of data, Hadoop can accommodate your needs by incorporating new resources into the existing cluster.
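As a rough illustration of what horizontal scaling means for capacity planning, the sketch below estimates usable storage and the number of machines needed. The replication factor of 3 and the disk sizes are assumptions for the example, and the arithmetic ignores operating system overhead, temporary data, and compression.

```python
import math


def usable_capacity_tb(nodes, disk_per_node_tb, replication=3):
    """Raw disk divided by the number of copies kept of every block."""
    return nodes * disk_per_node_tb / replication


def nodes_needed(data_tb, disk_per_node_tb, replication=3):
    """Smallest node count whose raw disk can hold all replicas."""
    return math.ceil(data_tb * replication / disk_per_node_tb)


capacity_now = usable_capacity_tb(nodes=10, disk_per_node_tb=12)
nodes_for_growth = nodes_needed(data_tb=100, disk_per_node_tb=12)
```

With these assumed numbers, ten machines with 12 TB each hold about 40 TB of user data, and growing to 100 TB means growing the cluster to 25 such machines rather than buying a larger server.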

Fault tolerance

In the realm of Big Data, hardware failures are not uncommon. Hadoop addresses this challenge through its fault tolerance mechanism. Since data is stored in a distributed manner across the cluster, the loss of a single machine doesn't signify the loss of data.

Hadoop Architecture replicates data across multiple nodes, ensuring that even if a node fails, data remains accessible and intact. This redundancy supports data reliability and availability, which is crucial in critical applications where data loss is unacceptable. Moreover, the automated data replication mechanism maintains fault tolerance without manual intervention.
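The following Python sketch illustrates the idea of surviving a node failure and restoring the replica count afterwards. It is a toy model under stated assumptions: the block and node names are hypothetical, the target of three replicas is assumed, and the function names are illustrative rather than part of Hadoop.

```python
def surviving_replicas(placement, failed_node):
    """Drop a failed node from every block's replica list."""
    return {
        block: [node for node in holders if node != failed_node]
        for block, holders in placement.items()
    }


def re_replicate(placement, live_nodes, target=3):
    """Copy under-replicated blocks to live nodes that lack them."""
    repaired = {}
    for block, holders in placement.items():
        holders = list(holders)
        candidates = [node for node in live_nodes if node not in holders]
        while len(holders) < target and candidates:
            holders.append(candidates.pop(0))
        repaired[block] = holders
    return repaired


placement = {
    "blk_0": ["node1", "node2", "node3"],
    "blk_1": ["node2", "node3", "node4"],
}
after_failure = surviving_replicas(placement, "node2")
repaired = re_replicate(after_failure, ["node1", "node3", "node4"])
```

After `node2` fails, every block still has two readable copies, and the repair step brings each block back to three copies on the remaining machines.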

Data processing framework

Hadoop serves as a robust data processing framework capable of efficiently handling complex analytical tasks. Due to their scale, traditional data processing systems often struggle to cope with today's Big Data requirements. However, Hadoop's architecture is designed for distributed computing, enabling parallel processing of tasks across multiple nodes.

This means that data-intensive operations can be divided into smaller tasks that are processed simultaneously. This parallelism significantly reduces processing time, allowing organisations to gain insights from their data more quickly and effectively.

MapReduce

MapReduce, Hadoop's programming model, is one of its most powerful features and facilitates the parallel processing of vast datasets. It comprises two main phases:

a) Map phase: In the map phase, data is broken down into smaller chunks and processed in parallel across the cluster.

b) Reduce phase: In the reduce phase, the results of the map phase are aggregated to produce the final output.

This approach allows efficient large-scale data processing by distributing tasks across multiple nodes, thereby improving processing speed and resource utilisation. MapReduce is the driving force behind Hadoop's ability to process massive datasets efficiently.
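The two phases can be sketched with the classic word-count example. This is plain Python running on one machine, not the Hadoop MapReduce API; the function names and the sample input are illustrative, and the intermediate grouping step (often called the shuffle) is written out explicitly.

```python
from collections import defaultdict


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one input chunk."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_pairs):
    """Group all emitted values by key, between map and reduce."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values for each key to produce the final counts."""
    return {key: sum(values) for key, values in grouped.items()}


chunks = ["big data big insights", "data drives insights"]
mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
word_counts = reduce_phase(shuffle(mapped))
```

On a real cluster, each chunk would be mapped on a different node and the grouped keys would be spread across several reducers; the logic of each step stays the same.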


YARN (Yet Another Resource Negotiator)

YARN, or Yet Another Resource Negotiator, is a vital component of the Hadoop ecosystem. It's responsible for managing and allocating resources across different applications running on the cluster. YARN's resource management capabilities ensure optimal resource utilisation, preventing one application from consuming all available resources and causing performance bottlenecks.

By intelligently allocating resources based on application requirements, YARN enhances overall cluster efficiency and performance. This dynamic allocation of resources supports a wide range of applications, from batch processing to interactive queries, making Hadoop clusters versatile and adaptable to various workloads.
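A simplified way to picture this is a scheduler that caps how much of the cluster any one application may take. The sketch below is not YARN's scheduler or API: the `max_share` cap is a hypothetical policy standing in for scheduler queues, and the application names and memory figures are made up for the example.

```python
def allocate(requests, cluster_memory_gb, max_share=0.5):
    """Grant memory to applications in order, capping each at `max_share`.

    `requests` maps an application name to the memory (GB) it asks for.
    """
    cap = cluster_memory_gb * max_share
    free = cluster_memory_gb
    granted = {}
    for app, wanted in requests.items():
        grant = min(wanted, cap, free)  # never exceed the cap or what is left
        granted[app] = grant
        free -= grant
    return granted


grants = allocate(
    {"batch_etl": 90, "interactive_sql": 30, "ml_training": 40},
    cluster_memory_gb=100,
)
```

Even though `batch_etl` asks for 90 GB, it receives only 50 GB, which leaves room for the interactive and training workloads to run alongside it.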

Data locality

Data locality, being the cornerstone of Hadoop's architecture, plays a pivotal role in optimising data processing efficiency. In the conventional data processing approach, data is often moved across the network to where the processing occurs. Hadoop, however, flips this approach. With data locality, the processing is brought to the data, and not vice-versa. This minimises the overhead of data transfer, reducing network congestion and latency.

In practice, when a job is executed on a Hadoop cluster, the system strives to process data on the same node where it resides. This proximity ensures faster access, as data retrieval doesn't involve crossing the network. By intelligently distributing tasks based on data location, Hadoop harnesses the power of parallel processing while minimising data movement.

This enhances processing speed and optimises resource utilisation, making Hadoop a powerhouse for large-scale data processing tasks.
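The preference for running work where the data lives can be sketched as a small placement function. This is an illustration only, with hypothetical node names; a real Hadoop scheduler also considers intermediate options such as a node in the same rack.

```python
def pick_node(block_holders, free_nodes):
    """Choose where to run a task for one block.

    Prefer a free node that already stores the block (a "local" task);
    otherwise fall back to any free node and read over the network.
    """
    for node in free_nodes:
        if node in block_holders:
            return node, "local"
    if free_nodes:
        return free_nodes[0], "remote"
    return None, "wait"


local_choice = pick_node(["node2", "node3"], ["node1", "node3"])
remote_choice = pick_node(["node2", "node3"], ["node1", "node4"])
```

In the first call the task lands on `node3`, which already holds the block; in the second no holder is free, so the task runs on `node1` and the block has to travel across the network.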

Cost-effectiveness

Hadoop's cost-effectiveness stems from its ability to run on commodity hardware. Unlike traditional systems that might require expensive specialised infrastructure, Hadoop can operate on relatively affordable, off-the-shelf hardware. This significantly lowers the cost of entry for organisations seeking to manage and process large datasets.

Additionally, Hadoop's scalability allows businesses to expand their infrastructure as data volume grows without incurring enormous expenses. This cost-efficient approach makes Big Data more accessible, enabling businesses of all sizes to harness the power of data analytics and insights without breaking the bank.

Ecosystem of tools

Hadoop's capabilities are further enhanced by its extensive ecosystem of tools and frameworks. These tools address various aspects of data processing, analysis, and management. For instance, tools like Hive provide a SQL-like interface for querying data stored in Hadoop, making it accessible to users familiar with relational databases.

In another example, Pig offers a high-level platform for processing and analysing large datasets, simplifying complex tasks. HBase, meanwhile, provides a NoSQL database option for real-time read/write access to data.

Further, Spark introduces in-memory processing for faster analytics. These tools extend Hadoop's functionality, enabling a wide range of data-driven applications and scenarios tailored to different business needs.

Security and authentication

Security and automation are pivotal aspects of modern technology landscapes. While security ensures the protection of sensitive data from unauthorised access and breaches, automation streamlines processes and enhances efficiency. Robust security measures, including authentication, authorisation, encryption, and audit logging, are essential to safeguard valuable information.

Simultaneously, automation optimises workflows, reduces human errors, and accelerates tasks, leading to increased productivity. Integrating security and automation in big data environments not only fortifies digital ecosystems against threats but also empowers organisations to operate with agility and confidence. This further helps foster growth and innovation in today's interconnected world.

High availability

Hadoop incorporates high availability features to offer uninterrupted data processing. In a distributed environment, hardware failures and downtime are possibilities. Hadoop's NameNode High Availability (HA) configuration mitigates this risk by maintaining a standby NameNode that takes over in case the primary NameNode fails.

This seamless transition ensures constant access to data and minimises downtime, crucial for mission-critical applications. With Hadoop's HA, businesses can rely on consistent data availability, preventing disruptions and preserving operational continuity.
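The active/standby idea can be sketched as a tiny state machine. This is a toy model, not Hadoop's HA implementation or API: the class, the node names `nn1` and `nn2`, and the method names are all hypothetical, and it leaves out how the standby keeps its metadata in sync.

```python
class NameNodePair:
    """Toy model of an active/standby pair; not Hadoop's actual API."""

    def __init__(self):
        self.state = {"nn1": "active", "nn2": "standby"}
        self.healthy = {"nn1": True, "nn2": True}

    def active(self):
        """Return the name of the healthy active node, if any."""
        for name, role in self.state.items():
            if role == "active" and self.healthy[name]:
                return name
        return None

    def report_failure(self, name):
        """Mark a node unhealthy and promote the standby if needed."""
        self.healthy[name] = False
        if self.state[name] == "active":
            self.state[name] = "failed"
            for other, role in self.state.items():
                if role == "standby" and self.healthy[other]:
                    self.state[other] = "active"
                    break


pair = NameNodePair()
before = pair.active()
pair.report_failure("nn1")
after = pair.active()
```

Clients that ask for the active node get `nn1` before the failure and `nn2` afterwards, without anyone reconfiguring the cluster by hand.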


Flexibility in data processing

Hadoop's flexibility in data processing allows businesses to handle diverse data types and formats. Unlike traditional databases that require structured data, Hadoop accommodates unstructured and semi-structured data, such as text, images, and videos.

This versatility is attributed to Hadoop's schema-on-read approach, which lets users interpret data at the time of analysis rather than during ingestion. This flexibility facilitates the analysis of real-world, complex data, enabling comprehensive insights.

Hadoop's capability to handle different data sources without the need for data transformation is a significant advantage. This fosters a more holistic understanding of information for data-driven decision-making.
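Schema-on-read can be illustrated with a few raw records that are stored untouched and only interpreted when someone queries them. The sketch below uses plain Python and JSON lines; the sample records, the field names, and the `read_with_schema` helper are assumptions made for the example rather than part of any Hadoop tool.

```python
import json

# Raw records are stored as-is; nothing validates them at write time.
raw_lines = [
    '{"user": "ana", "clicks": 3, "country": "PT"}',
    '{"user": "bo", "clicks": "7"}',
    'not json at all',
]


def read_with_schema(lines, schema):
    """Apply `schema` (field name -> type converter) while reading.

    Records that cannot be parsed or lack a required field are skipped.
    """
    rows = []
    for line in lines:
        try:
            record = json.loads(line)
            rows.append({field: cast(record[field])
                         for field, cast in schema.items()})
        except (ValueError, KeyError, TypeError):
            continue
    return rows


clicks_view = read_with_schema(raw_lines, {"user": str, "clicks": int})
country_view = read_with_schema(raw_lines, {"user": str, "country": str})
```

The same stored lines yield two different views: one with click counts for both users, and one with countries for only the record that has that field. Neither view required the data to be reshaped when it was written.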

What Are The Two Main Purposes of Hadoop?

Hadoop is a versatile and scalable open-source framework that has helped many organisations to achieve their goals. The two primary purposes of Hadoop are described below:

Scalability:

Hadoop is one of the primary tools for storing and processing vast amounts of data quickly. It achieves this by using a distributed computing model, which enables fast data processing that can be scaled rapidly by adding computing nodes.

Low Cost:

As an open-source framework that can run on commodity hardware and has a large ecosystem of tools, Hadoop is an affordable option for storing and managing big data.

What is Replacing Hadoop?

The tech industry is adapting to the issues posed by Hadoop, such as complexity and lack of real-time processing. Other solutions have emerged that aim to address these issues. These alternatives offer various options and depend on your need for an on-premises or cloud infrastructure.

Google BigQuery:

Google's BigQuery is a platform designed to help users analyse large amounts of data without worrying about database or infrastructure management. It lets users query data with SQL and relies on Google's cloud storage for interactive data analysis.

Apache Spark:

Apache Spark is a popular and powerful computational engine that can process data stored in Hadoop. It is often used as a faster alternative to MapReduce because it performs much of its processing in memory, and it supports a variety of applications.

Snowflake:

Snowflake is a cloud-based service designed to offer data services that include warehousing, engineering, science, and app development. It also enables the secure sharing and consumption of real-time data.

Conclusion

To sum up, the Features of Hadoop have redefined how we navigate the challenges caused by Big Data. From security and automation to its extensive toolset, Hadoop remains a key component in modern data management. It guides us towards a future where data-driven decision-making is not just a possibility but a formidable reality.


Frequently Asked Questions

The Hadoop Distributed File System (HDFS) is a file system that can handle very large datasets and runs on commodity hardware. It is the most popular Hadoop data storage system and can scale a single Apache Hadoop cluster to thousands of nodes. Furthermore, HDFS is well suited to complex data analytics as part of big data management.

The Hadoop Ecosystem is not a programming language or a service; it is a framework or platform designed to address big data challenges. You can think of it as a suite with several services (ingesting, storing, analysing, and maintaining) inside it.
