Large businesses generate more data than traditional systems can reliably store and process, so they need tools built for that scale. This is where Hadoop comes into play. But what is Hadoop? It is an open-source framework that enables distributed storage and processing of large datasets across a cluster of computers.

According to Allied Market Research, the global Hadoop market was valued at £28.17 billion in 2020 and is projected to reach £663.93 billion by 2030, growing at a CAGR of 37.4% from 2021 to 2030. Hadoop was developed by Doug Cutting and Mike Cafarella, who were inspired by Google's papers on MapReduce and the Google File System. In this blog, you will learn what Hadoop is, its core components, its applications, and its advantages and limitations. Read on to know more!
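As a quick sanity check on the quoted figures, the compound annual growth rate implied by a start and an end value is (end / start) ^ (1 / years) - 1. The sketch below applies that formula to the two market values above, assuming ten annual periods between 2020 and 2030; the function name is purely illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market values quoted above, in billions of pounds (2020 -> 2030, ten periods assumed).
rate = implied_cagr(28.17, 663.93, 10)
print(f"Implied CAGR: {rate:.1%}")
```

This prints about 37.2%, close to the reported 37.4%. The small gap may come from rounding or from the report measuring growth over a different base year, so treat this as a consistency check rather than a reproduction of the report's method.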

Table of Contents

1) What is Hadoop?

2) Core components of Hadoop

3) What is Hadoop used for? Key applications

4) Advantages and limitations of Hadoop

5) Conclusion

What is Hadoop?

Hadoop's distributed architecture means that data is split and stored across various nodes in a cluster, allowing for parallel processing and high availability. It's primarily used for big data analytics, data warehousing, and many other tasks where vast amounts of data need to be stored and processed efficiently.

Hadoop's ability to manage the huge volumes of data that large corporations depend on rests on three main building blocks:

1) Hadoop Distributed File System (HDFS): It is a distributed file system that stores data across multiple machines. It's designed to be highly fault-tolerant, keeping data available even when individual machines in the cluster fail.

2) MapReduce: This is the computational component. It provides a programming model that allows for the processing of large data sets. Tasks are split into smaller sub-tasks and processed in parallel, then aggregated to produce a result.

3) Yet Another Resource Negotiator (YARN): This component manages resources in the Hadoop cluster and schedules tasks.

Significance of Hadoop

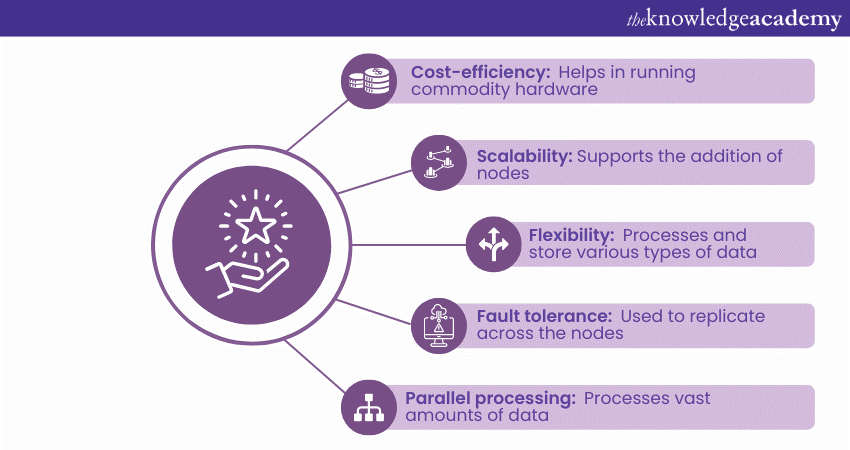

The following points will show you the significance of Hadoop:

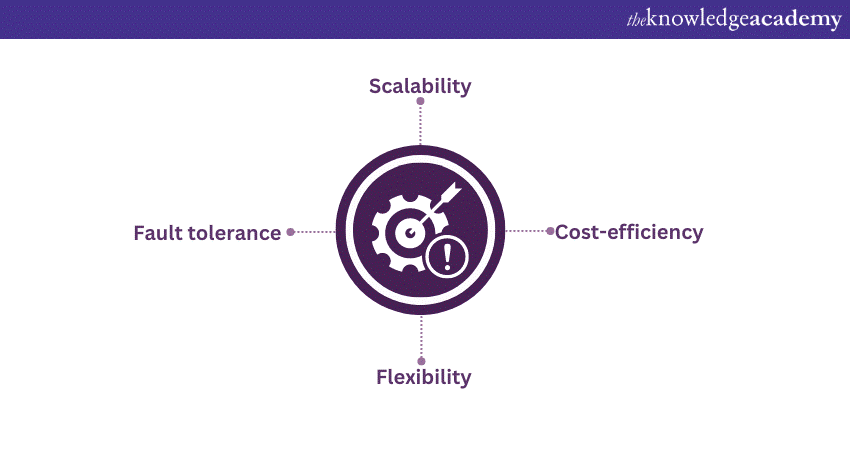

1) Scalability: Hadoop clusters scale out easily. If you need more storage or processing power, you add more nodes to the cluster. Because capacity grows roughly in line with the number of commodity nodes, Hadoop can keep pace with growing data without the steep cost of upgrading to ever larger single machines.

2) Cost-efficiency: Hadoop runs on commodity hardware (standard, off-the-shelf machines), which is much cheaper than the specialised high-performance hardware that some other systems require.

3) Flexibility: Unlike traditional relational databases, which require structured data, Hadoop can store and process structured, semi-structured, and unstructured data. This makes it well suited to handling diverse data types, from log files to social media posts.

4) Fault tolerance: Thanks to its distributed design and data replication, Hadoop offers robust fault tolerance. If nodes fail, the system keeps operating as long as replicas of the affected data survive elsewhere in the cluster.

Core components of Hadoop

These core components make Hadoop a powerhouse for distributed data storage and processing. Each component has a crucial role in ensuring that it functions seamlessly, efficiently, and resiliently.

Before discussing the main components of Hadoop, you need to know about ‘daemons’. Daemons are background processes that run the distributed infrastructure, handling data storage and data processing tasks. The main daemons in Hadoop are:

a) NameNode: This is the master server that manages the file system namespace and also controls access to files. Essentially, it maintains the directory tree and the metadata for all the files and directories.

b) DataNode: These are the worker nodes responsible for storing the actual data blocks. Multiple replicas of each block are distributed across different DataNodes to ensure fault tolerance.

c) ResourceManager (RM): This daemon is part of Hadoop's Yet Another Resource Negotiator (YARN) system and is responsible for managing and monitoring resources in the cluster. The ResourceManager acts as the master, allocating cluster resources among the applications (such as MapReduce jobs) that run on it.

d) NodeManager (NM): Running on each of the worker nodes, the NodeManager daemon works in tandem with the ResourceManager. It tracks the resources on its specific node and reports back to the ResourceManager. It's also responsible for executing tasks on its node as directed by the ResourceManager.
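The division of labour between the NameNode and the DataNodes can be sketched in a few lines. This is a toy, in-memory model under stated assumptions: `ToyNameNode`, `read_file`, and the block and node names are hypothetical, and real HDFS adds details such as rack awareness and checksums. The NameNode holds only metadata (which blocks form a file and where each replica lives), while the data itself is fetched from DataNodes, falling back to another replica if one node is down.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNameNode:
    """Hypothetical stand-in for NameNode metadata: no file contents, only
    which blocks make up each file and which DataNodes hold each block."""
    file_blocks: dict[str, list[str]] = field(default_factory=dict)
    block_locations: dict[str, list[str]] = field(default_factory=dict)

    def locate(self, path: str) -> list[tuple[str, list[str]]]:
        """Return (block_id, replica DataNodes) pairs for a file, in block order."""
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]


def read_file(namenode: ToyNameNode,
              datanodes: dict[str, dict[str, bytes]],
              path: str) -> bytes:
    """Look up block locations on the NameNode, then fetch each block from the
    first listed DataNode replica that is reachable and holds it."""
    data = bytearray()
    for block_id, replicas in namenode.locate(path):
        for node in replicas:
            store = datanodes.get(node)  # None models a failed DataNode
            if store is not None and block_id in store:
                data += store[block_id]
                break
        else:  # no replica could serve this block
            raise IOError(f"all replicas of {block_id} are unavailable")
    return bytes(data)


nn = ToyNameNode(
    file_blocks={"/logs/day1.txt": ["blk_1", "blk_2"]},
    block_locations={"blk_1": ["dn1", "dn2"], "blk_2": ["dn2", "dn3"]},
)
# dn1 is missing from this map, as if that DataNode had crashed.
datanodes = {
    "dn2": {"blk_1": b"hello ", "blk_2": b"world"},
    "dn3": {"blk_2": b"world"},
}
print(read_file(nn, datanodes, "/logs/day1.txt"))  # b'hello world'
```

Even with `dn1` unavailable, the read succeeds because another DataNode holds a replica of `blk_1`; the read only fails when every replica of a block is lost.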

Let us now discuss the core components of Hadoop:

1) Hadoop Distributed File System (HDFS): HDFS is a distributed file system modelled on the Google File System. It stores vast amounts of data across multiple machines by splitting large files into smaller blocks (128 MB by default since Hadoop 2, often configured as 256 MB).

Its key features are:

a) Fault tolerance: By default, each block is replicated three times across the cluster (controlled by the dfs.replication setting). This keeps data available even if a node fails.

b) Scalability: HDFS can scale out by adding more DataNodes to the cluster.

c) High throughput: HDFS is optimised for large files and streaming access, favouring high aggregate throughput over low-latency access to individual records.
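To make the block and replication numbers concrete, here is a small calculation assuming the Hadoop 2+ defaults of 128 MB blocks (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`). The function name is hypothetical; it simply shows how many blocks a file occupies and how much raw disk the cluster spends on it.

```python
import math

BLOCK_SIZE_MB = 128  # assumed HDFS default block size (dfs.blocksize)
REPLICATION = 3      # assumed HDFS default replication factor (dfs.replication)


def hdfs_footprint(file_size_mb: float,
                   block_size_mb: int = BLOCK_SIZE_MB,
                   replication: int = REPLICATION) -> tuple[int, float]:
    """Return (number of blocks, raw cluster storage in MB) for one file.

    A final, partially filled block only uses as much disk as the data it
    holds, so raw storage is file size multiplied by the replication factor.
    """
    blocks = math.ceil(file_size_mb / block_size_mb)
    return blocks, file_size_mb * replication


blocks, raw_mb = hdfs_footprint(1000)  # a 1,000 MB file
print(blocks, raw_mb)                  # 8 3000
```

The trade-off is visible in the second number: replication is what buys fault tolerance, at the price of roughly three times the raw storage.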

2) MapReduce: MapReduce is the computational heart of Hadoop, facilitating distributed data processing.

a) Phases: In the 'Map' phase, the input data is divided into splits, and each record is transformed into intermediate key-value pairs. These pairs are then shuffled and sorted by key, and in the 'Reduce' phase the values for each key are aggregated to produce the final output.

b) Features: Tasks are divided and run in parallel across the cluster. If a task fails on one node, it is automatically rescheduled and run on another node. MapReduce is also largely language-agnostic: Java is its native API, but through Hadoop Streaming any program that reads from standard input and writes to standard output can serve as a mapper or reducer.
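The Map, shuffle/sort, and Reduce steps above can be sketched as the classic word count, written in the style of a Hadoop Streaming job where records are tab-separated `key<TAB>value` lines. This is a single-process simulation: in a real streaming job the mapper and reducer would be separate executables passed to the streaming JAR with `-mapper` and `-reducer`, and the framework (not `sorted()`) would perform the shuffle and sort across nodes. The function names are illustrative.

```python
from itertools import groupby


def mapper(lines):
    """Map phase: emit a 'word<TAB>1' record for every word, the way a
    Hadoop Streaming mapper would print records to standard output."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"


def reducer(sorted_records):
    """Reduce phase: records arrive sorted by key, so all records for one
    word are adjacent and can be summed with groupby."""
    pairs = (record.split("\t", 1) for record in sorted_records)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def run_locally(lines):
    """Simulate map -> shuffle/sort -> reduce in one process; sorted()
    stands in for the shuffle and sort that the framework performs."""
    return list(reducer(sorted(mapper(lines))))


if __name__ == "__main__":
    for record in run_locally(["the cat sat", "the cat ran"]):
        print(record)
```

Because the reducer relies only on its input being sorted by key, the same logic works whether one machine or hundreds produced the map output, which is what lets Hadoop run the Map tasks in parallel.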

3) Yet Another Resource Negotiator (YARN): YARN is the resource management layer for Hadoop. It manages and schedules resources across the cluster and was introduced in Hadoop version 2 to enhance its scalability and resource utilisation.

a) ResourceManager (RM): The master daemon of YARN, responsible for resource allocation in the cluster.

b) NodeManager (NM): These are per-node agents that track the resource usage on each machine and report back to the ResourceManager.

c) ApplicationMaster (AM): This component is specific to individual applications. It requests resources from the RM and collaborates with the NM to execute and monitor tasks.

Its main features are:

a) Scalability: Supports larger clusters and more concurrent tasks than the original MapReduce framework in Hadoop 1, where a single JobTracker handled both resource management and job scheduling.

b) Multi-tenancy: Allows multiple applications to share common resources.

c) Improved utilisation: With more flexible scheduling and resource allocation, cluster resources are utilised more efficiently.
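The interplay between NodeManagers, the ResourceManager, and ApplicationMasters can be illustrated with a deliberately simplified allocator. This is a toy first-fit policy, not YARN's real scheduling: actual clusters use pluggable schedulers such as the CapacityScheduler or FairScheduler, with queues, priorities, and data locality. `NodeReport` and `allocate` are hypothetical names. NodeManagers report free memory and vcores, and container requests from several applications are placed on whichever node still has room, which is the multi-tenancy idea described above.

```python
from dataclasses import dataclass


@dataclass
class NodeReport:
    """Free capacity a NodeManager reports to the ResourceManager (toy model)."""
    node: str
    free_mb: int
    free_vcores: int


def allocate(requests: list[tuple[str, int, int]],
             nodes: list[NodeReport]) -> list[tuple[str, str]]:
    """Toy first-fit scheduler: place each (app_id, memory_mb, vcores)
    container request on the first node with enough free memory and vcores.

    Returns (app_id, node) grants; requests that fit nowhere stay pending.
    Node capacities are decremented in place as containers are granted.
    """
    grants = []
    for app_id, mem_mb, vcores in requests:
        for report in nodes:
            if report.free_mb >= mem_mb and report.free_vcores >= vcores:
                report.free_mb -= mem_mb
                report.free_vcores -= vcores
                grants.append((app_id, report.node))
                break
    return grants


cluster = [NodeReport("nm1", 4096, 4), NodeReport("nm2", 2048, 2)]
# Two applications (via their ApplicationMasters) sharing one cluster.
asks = [("app_A", 2048, 2), ("app_B", 2048, 2), ("app_A", 2048, 2), ("app_B", 1024, 1)]
print(allocate(asks, cluster))
```

Here the last request from `app_B` stays pending because both nodes are full; in YARN it would be satisfied later as running containers finish and NodeManagers report freed capacity.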

What is Hadoop used for? Key applications

Hadoop's versatility is evident in its wide range of applications across industries. Its ability to efficiently process and store vast datasets has made it a cornerstone in big data and analytics.

These are some key applications of Hadoop:

a) Big data analytics: One of the primary reasons for Hadoop's popularity is its proficiency in handling massive datasets. It provides a platform for analysing large sets of structured and unstructured data.

Example: Retailers analyse customer purchasing habits, preferences, and feedback to tailor their product offerings, optimise pricing strategies, and improve customer service.

b) Log and clickstream analysis: Log files and clickstreams are a treasure trove of user behaviour data. Hadoop can process large volumes of logs to derive insights.

Example: An e-commerce website might analyse logs to understand how users navigate through the site, which can be instrumental in refining the user interface or tailoring promotions.

c) Data warehousing and Business Intelligence (BI): Hadoop can store huge amounts of data from different sources in a distributed manner and can integrate with BI tools to provide deep business insights.

Example: A financial institution might consolidate data from various systems into Hadoop and then use BI tools to derive insights on market trends, customer segmentation, and risk analysis.

d) Content recommendation: Personalisation is vital in today's digital age. Hadoop can process and analyse user data to provide tailored content or product recommendations.

Example: Streaming services like Netflix or Spotify analyse user viewing or listening habits, respectively, to recommend shows, movies, or music.

e) Image and video analysis: The processing of image and video data requires the analysis of large files. Hadoop, with its distributed data processing capability, can efficiently handle this.

Example: In healthcare, medical imaging files can be processed to detect patterns or anomalies, aiding in diagnoses.

f) Scientific simulation and data processing: The scientific community also uses Hadoop to process the vast datasets that simulations and experiments produce.

Example: Climate scientists may use Hadoop to analyse the output of large climate simulations alongside historical data to study global climate change.

g) Search quality improvement: Search engines process vast amounts of data to refine their results, and Hadoop helps store and analyse this data.

Example: A search engine company might analyse user search queries, click-through rates, and website feedback to continually refine and improve the relevance of search results.

h) Fraud detection and prevention: By analysing transaction data and user behaviour, patterns indicating fraudulent activity can be detected.

Example: Credit card companies might process millions of transactions using Hadoop to identify potentially fraudulent transactions based on established patterns.

i) Social media analysis: Social platforms generate massive amounts of user data every second. Hadoop can process this data to derive insights about user behaviour, preferences, and trends.

Example: A company might analyse tweets or posts related to their product to gauge public sentiment or identify emerging trends.
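For the log and clickstream use case, a typical job counts distinct visitors per page. The sketch below assumes a hypothetical whitespace-separated log format of user ID, page, and timestamp, and collapses the shuffle and Reduce steps into an in-memory dictionary for brevity; on a cluster, the framework would group the `(page, user_id)` pairs by page across nodes before the reducer runs.

```python
from collections import defaultdict


def map_clicks(log_lines):
    """Map: parse 'user_id page timestamp' lines (a hypothetical log format)
    and emit (page, user_id) pairs, skipping malformed lines."""
    for line in log_lines:
        parts = line.split()
        if len(parts) >= 2:
            user_id, page = parts[0], parts[1]
            yield page, user_id


def reduce_unique_visitors(pairs):
    """Shuffle + reduce: group user IDs by page and count distinct visitors."""
    visitors = defaultdict(set)
    for page, user_id in pairs:
        visitors[page].add(user_id)
    return {page: len(users) for page, users in sorted(visitors.items())}


logs = [
    "u1 /home 1700000000",
    "u2 /home 1700000005",
    "u1 /cart 1700000010",
    "u1 /home 1700000020",
    "bad-line",
]
print(reduce_unique_visitors(map_clicks(logs)))  # {'/cart': 1, '/home': 2}
```

Counting a set of users rather than summing ones is what turns raw page views into unique visitors: `u1` visits `/home` twice but is counted once.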

Advantages and limitations of Hadoop

This section covers both the advantages and the limitations of Hadoop.

Advantages of Hadoop

The main advantages of Hadoop are:

1) Cost-efficiency: Hadoop is built to run on commodity hardware, so organisations don’t need high-end, expensive machines to store and process data.

a) Impact: This keeps the initial investment relatively low, especially when handling massive datasets, and the cost per terabyte of storage is typically lower than with traditional database systems.

2) Scalability: Hadoop is inherently scalable, because its distributed computing model allows capacity to be increased by adding nodes.

a) Impact: As an organisation's data needs grow, its Hadoop cluster can grow with them by adding more nodes, allowing it to manage petabytes of data efficiently.

3) Flexibility: Unlike traditional relational databases, Hadoop can process and store varied data types, whether structured, semi-structured, or unstructured.

a) Impact: Businesses can store data from many sources, such as social media, transactional systems, or log files, and use it for diverse tasks, from analytics to data warehousing.

4) Fault tolerance: Data in Hadoop is inherently replicated (usually three copies) across different nodes.

a) Impact: If a node fails, data processing can continue using data stored on another node. It ensures that data isn't lost and system downtime is minimised.

5) Parallel processing: Hadoop's MapReduce programming model processes large datasets in parallel across a distributed cluster.

a) Impact: Tasks are split and processed concurrently, significantly speeding up computation, especially for large datasets.

6) Open source: Hadoop is open source, meaning its framework and source code are freely available.

a) Impact: This encourages a large community of developers to contribute to its ecosystem. This results in regular updates, new features, and plug-ins. It also means companies can customise Hadoop based on their specific needs.

7) Resilience to failure: Aside from data replication, Hadoop's architecture is designed so that individual node failures don't cause the entire system to crash.

a) Impact: This ensures continuity in data processing tasks and reduces potential losses associated with system failures.

8) Large ecosystem: The Hadoop ecosystem comprises various tools and extensions that enhance its capabilities. Examples include Hive for data querying, Pig for scripting, and Oozie for scheduling.

a) Impact: This rich ecosystem provides solutions tailored to different needs, from data visualisation to machine learning, allowing businesses to derive maximum value from their data.

Limitations of Hadoop

Despite these advantages, Hadoop also has several limitations:

1) Complexity: Hadoop's ecosystem, comprising its various tools and extensions, can be intricate and challenging to understand for newcomers.

a) Impact: This learning curve can delay implementation and require significant training, increasing the total cost and time-to-value.

2) Lack of real-time processing: Hadoop's primary design caters to batch processing rather than real-time data processing.

a) Impact: This limitation makes it unsuitable for applications that require immediate insights, such as real-time analytics or online transaction processing.

3) Security concerns: Hadoop was not originally designed with security as a priority. Features such as Kerberos authentication have since been added, but risks like data tampering and unauthorised access can persist if a cluster is not carefully configured.

a) Impact: This makes it challenging to ensure data privacy and integrity in Hadoop environments without additional tools or interventions.

4) Resource intensive: Hadoop's distributed computing model can be resource-intensive, especially during large-scale data processing tasks.

a) Impact: This can lead to inefficient utilisation of resources if not optimised, thereby increasing operational costs.

5) Not optimal for small data: Hadoop is designed for processing large datasets, so it may not be efficient or cost-effective for smaller datasets or tasks.

a) Impact: Overhead associated with data distribution and task division can make Hadoop less optimal for businesses with relatively small data needs.

6) Inherent latency: Hadoop's MapReduce programming model has inherent latency, because each job has start-up overhead and writes intermediate results to disk between its two stages (the Map and Reduce phases).

a) Impact: This can slow down overall data processing, especially for complex or iterative jobs that chain several MapReduce passes.

7) Limited data processing models: Initially, Hadoop was closely tied to the MapReduce data processing model. Since YARN, other processing engines such as Apache Spark and Apache Tez can run on a Hadoop cluster, but some users may still find the MapReduce model restrictive.

a) Impact: It could be less efficient for operations that don't fit well into the MapReduce paradigm.

8) Fragmented data storage: Hadoop distributes data blocks across nodes, leading to fragmentation.

a) Impact: This can make random access and small lookups slower than in systems that index or co-locate data for fast retrieval.

9) Dependency on Java: Hadoop and much of its ecosystem are written in Java, which may not be the preferred or most familiar language for every developer or organisation.

a) Impact: This dependency can restrict development speed and flexibility, especially for teams unfamiliar with Java.
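The "not optimal for small data" limitation is often illustrated through the NameNode's memory use. A frequently quoted rule of thumb is that every file, directory, and block is held in NameNode memory as an object of roughly 150 bytes; the estimate below takes that figure as an assumption, ignores directories, and uses a hypothetical helper name. It compares roughly the same volume of data stored as many small files versus fewer large ones.

```python
BYTES_PER_OBJECT = 150  # rule-of-thumb NameNode heap per file or block object (assumption)


def namenode_heap_bytes(num_files: int, blocks_per_file: int = 1) -> int:
    """Rough NameNode memory estimate: one object per file plus one per block.
    Directories and other overheads are ignored."""
    return num_files * (1 + blocks_per_file) * BYTES_PER_OBJECT


# Roughly the same volume of data, stored two ways.
many_small = namenode_heap_bytes(10_000_000)  # ~10 million files of ~128 KB each
few_large = namenode_heap_bytes(10_000)       # ~10,000 files of ~128 MB each
print(f"{many_small / 1e9:.1f} GB vs {few_large / 1e6:.1f} MB")
```

Under this assumption, packing the same data into fewer, larger files cuts the NameNode's metadata footprint by about a thousandfold, which is one reason small-file workloads are often consolidated before being loaded into HDFS.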

Conclusion

Hadoop has revolutionised big data processing with its scalability and cost-effectiveness. However, while its advantages are transformative, it's essential to be mindful of its limitations. As the data landscape evolves, understanding Hadoop's strengths and weaknesses ensures informed decisions in data management and analytics endeavours.
