In Deep Learning and Artificial Intelligence, PyTorch and TensorFlow are the two dominant frameworks, and each offers a broad set of capabilities. Choosing between them is not trivial, so it is important to understand how they differ. In this blog, we compare PyTorch and TensorFlow, look at their strengths and weaknesses, and consider which one best suits your needs.

Table of Contents

1) What is PyTorch?

2) What is TensorFlow?

3) PyTorch vs TensorFlow: Key differences

a) Dynamic vs static computational graphs

b) Ease of use

c) Community and ecosystem

d) Visualisation tools

e) Deployment

f) Mobile and embedded

g) Popularity

4) Conclusion

What is PyTorch?

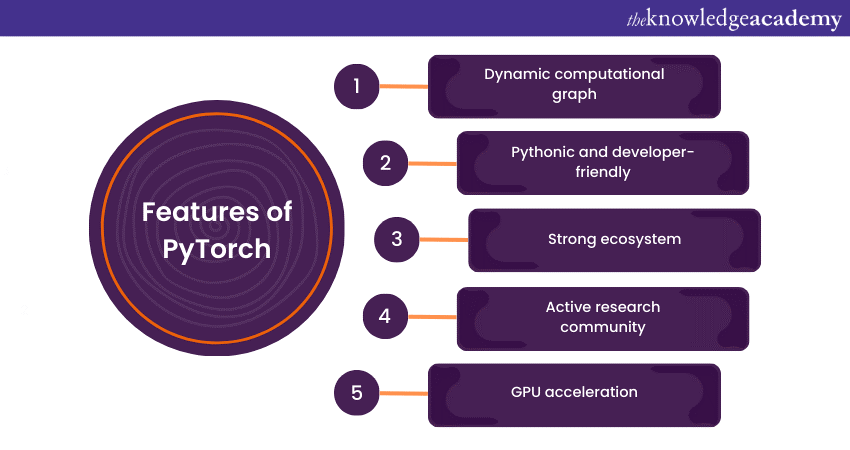

PyTorch is an open-source Machine Learning framework that has gained widespread popularity among researchers, developers, and data scientists. It is valued for its flexibility, its dynamic computation graph, and its extensive ecosystem of tools and libraries. Originally developed by Facebook's (now Meta's) AI Research lab (FAIR), PyTorch is designed to provide a seamless and efficient platform for building, training, and deploying Deep Learning models.

One of PyTorch's standout features is its dynamic computation graph, which lets developers change a neural network's architecture on the fly. This makes it an excellent choice for research and experimentation. It contrasts with the static computation graphs used by frameworks such as TensorFlow 1.x.

PyTorch offers a comprehensive ecosystem of libraries and tools for various Machine Learning tasks, including computer vision, natural language processing, and reinforcement learning. Its user-friendly interface and extensive documentation make it accessible to newcomers while providing advanced features for experienced researchers. Moreover, PyTorch's strong community support and frequent updates ensure it remains at the forefront of the rapidly evolving field of Machine Learning.

What is TensorFlow?

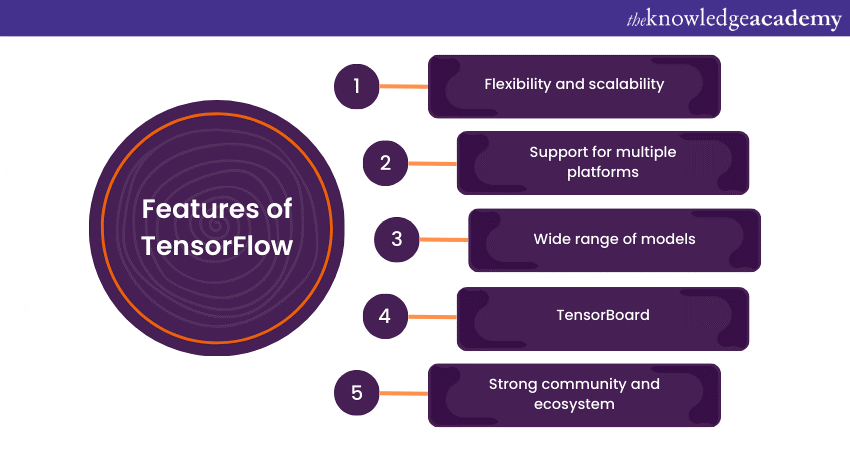

TensorFlow is an open-source Machine Learning framework developed by the Google Brain team. It has become one of the most widely used tools in the field of Artificial Intelligence and Machine Learning. At its core, TensorFlow is designed to facilitate the creation, training, and deployment of Machine Learning models, especially Deep Learning models.

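One of TensorFlow's defining characteristics has historically been its use of static computation graphs. In TensorFlow 1.x, users defined the graph structure, including the mathematical operations, before executing any computation. Because the whole graph is known in advance, TensorFlow can optimise it, which makes it efficient for large-scale production applications. TensorFlow 2.x runs operations eagerly by default but can still compile code into graphs when needed.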

TensorFlow is versatile, offering APIs for different levels of expertise. These include high-level APIs such as Keras for those who prefer a more user-friendly approach. They also include lower-level TensorFlow APIs for advanced users who need greater control over their models.

The framework supports a wide range of applications, from computer vision and natural language processing to reinforcement learning and more. TensorFlow is especially favoured by developers and researchers who need powerful tools for building and deploying Machine Learning models at scale. Its active community, thorough documentation, and consistent updates ensure it remains a pivotal tool in the world of Machine Learning and AI.

PyTorch vs TensorFlow: Key differences

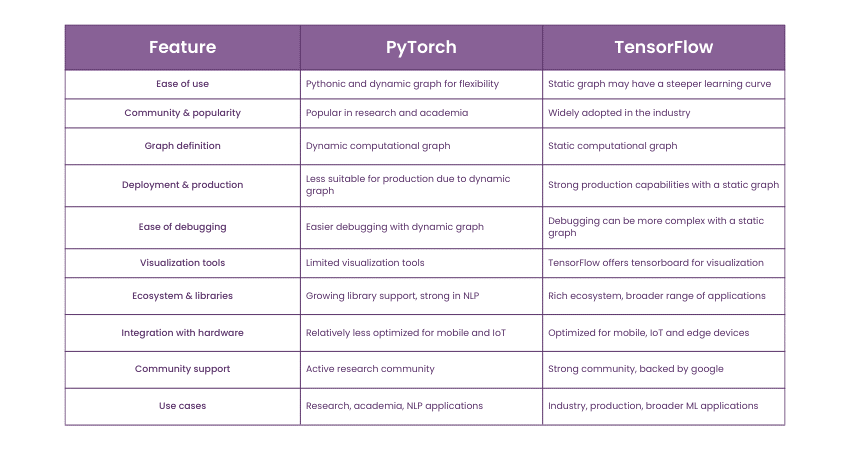

PyTorch and TensorFlow are two of the most popular and powerful Deep Learning frameworks, each with its own strengths and capabilities. Understanding the differences between PyTorch and TensorFlow can help you choose the right framework for your specific Machine Learning or Deep Learning project. Here's a detailed breakdown of their key distinctions:

Dynamic vs static computational graphs

Dynamic and static computational graphs are fundamental concepts in Deep Learning frameworks like PyTorch and TensorFlow. They determine how a model's computations are represented and executed. Understanding the difference between them is crucial for choosing the right framework for your Machine Learning project.

In PyTorch, dynamic computational graphs are employed. This means that the graph is constructed on the fly as operations are executed during the forward pass of the model. This dynamic nature makes PyTorch particularly suitable for research and experimentation. Researchers appreciate it because they can modify the model's architecture, insert print statements for debugging, and make real-time adjustments during the training process. This flexibility simplifies the process of developing complex, non-standard neural network architectures.

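One key advantage of dynamic graphs is the ease of debugging. Because ordinary Python code builds the graph as it runs, an error points to the exact line that caused it. You can also inspect intermediate values with print statements or a standard Python debugger. However, this flexibility can come at a performance cost. The framework has less opportunity to optimise the whole computation ahead of time, which matters most for production-level deployments.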
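The following toy sketch in plain Python makes the define-by-run idea concrete. It is not how PyTorch is implemented, and the `Node`, `forward` and `collect_ops` names are hypothetical. Each arithmetic operation records itself at the moment it executes, so ordinary Python control flow decides how large the graph becomes on each call. A `print` inside the forward pass also works just as it would in any other Python code.

```python
# Toy illustration only: a minimal "define-by-run" tracer, not PyTorch code.


class Node:
    """A value that remembers which operation produced it."""

    def __init__(self, value, op="input", parents=()):
        self.value = value
        self.op = op
        self.parents = parents

    def __add__(self, other):
        # The graph grows at the moment the addition actually runs.
        return Node(self.value + other.value, "add", (self, other))

    def __mul__(self, other):
        return Node(self.value * other.value, "mul", (self, other))


def collect_ops(node):
    """Walk the recorded graph backwards and list each distinct node's op."""
    ops, stack, seen = [], [node], set()
    while stack:
        current = stack.pop()
        if id(current) in seen:
            continue
        seen.add(id(current))
        ops.append(current.op)
        stack.extend(current.parents)
    return ops


def forward(x, steps):
    """A hypothetical 'model' whose depth is decided by Python control flow."""
    h = x
    for _ in range(steps):
        h = h * x + x
        print("intermediate value:", h.value)  # plain print debugging works
    return h
```

Calling `forward(Node(2.0), steps=1)` records three nodes (one input, one multiplication, one addition) and returns a node whose value is 6.0. Calling it with `steps=3` records seven nodes and ends at 30.0, so the same function produced two differently shaped graphs. PyTorch's autograd similarly records operations as they run, which is what lets it compute gradients afterwards.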

TensorFlow, in contrast, was built around static computational graphs. In TensorFlow 1.x, you defined the entire computation graph before running any actual computations. This static approach creates optimisation opportunities. TensorFlow can analyse the entire graph and apply optimisations such as constant folding and kernel fusion to improve performance during execution.
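For contrast, the sketch below mimics the define-then-run style of TensorFlow 1.x in plain Python. The dictionary-based graph format and the `constant_fold` and `run` functions are hypothetical illustrations, loosely echoing TensorFlow 1.x placeholders; real TensorFlow graph optimisation is far more sophisticated. Because the whole graph exists before anything runs, an optimisation pass can pre-compute the subexpression that depends only on constants.

```python
# Toy illustration only: a "define-then-run" graph with a constant-folding
# pass. TensorFlow's real graph optimisations are far more elaborate.
import operator

OPS = {"add": operator.add, "mul": operator.mul}

# Node name -> (op, args). "const" holds a number, "placeholder" is fed at
# run time, and other ops list the names of their input nodes. Nodes are
# listed in dependency order, which Python dicts preserve.
example_graph = {
    "two": ("const", 2.0),
    "three": ("const", 3.0),
    "six": ("mul", ("two", "three")),  # depends only on constants
    "x": ("placeholder", None),
    "y": ("mul", ("x", "six")),
    "out": ("add", ("y", "two")),
}


def constant_fold(graph):
    """Return a new graph where ops with all-constant inputs become consts."""
    folded = {}
    for name, (op, args) in graph.items():
        if op in OPS and all(folded[a][0] == "const" for a in args):
            folded[name] = ("const", OPS[op](*(folded[a][1] for a in args)))
        else:
            folded[name] = (op, args)
    return folded


def run(graph, output, feed):
    """Execute a fully defined graph, feeding placeholder values by name."""
    values = {}
    for name, (op, args) in graph.items():
        if op == "const":
            values[name] = args
        elif op == "placeholder":
            values[name] = feed[name]
        else:
            values[name] = OPS[op](*(values[a] for a in args))
    return values[output]


optimised = constant_fold(example_graph)
print(optimised["six"])                        # ('const', 6.0)
print(run(example_graph, "out", {"x": 4.0}))  # 26.0
print(run(optimised, "out", {"x": 4.0}))      # 26.0
```

The folded graph computes the same result with one fewer operation per run. That saving is only possible because the pass can see the complete graph before execution.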

This static approach initially made for a steeper learning curve and harder debugging. TensorFlow has since adopted Keras as its high-level API (tf.keras), which makes defining models much simpler. TensorFlow 2.0 also made eager execution the default, so operations run immediately, much as they do in PyTorch, which simplifies debugging. When performance matters, the tf.function decorator can still trace Python code into an optimised graph.
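Another toy sketch can illustrate the combination of eager execution and graphs. TensorFlow's `tf.function` traces a Python function into a graph and reuses that graph on later calls, so Python side effects such as `print` run only while tracing. The `trace_once` decorator and `Tracer` class below are hypothetical and only handle a chain of operations with constant operands. Unlike `tf.function`, this toy never retraces for new input types or shapes.

```python
# Toy illustration only: mimics the idea behind graph tracing
# (as tf.function does); it is not TensorFlow's implementation.
import operator

OPS = {"add": operator.add, "mul": operator.mul}


class Tracer:
    """Stands in for the input during tracing and records each operation."""

    def __init__(self):
        self.ops = []

    def __mul__(self, const):
        self.ops.append(("mul", const))
        return self

    def __add__(self, const):
        self.ops.append(("add", const))
        return self


def trace_once(fn):
    """Trace fn on its first call, then replay the recorded ops as a 'graph'."""
    graph = None

    def wrapper(x):
        nonlocal graph
        if graph is None:
            tracer = Tracer()
            fn(tracer)  # the Python body (including print) runs only here
            graph = tracer.ops
        result = x
        for op, const in graph:  # later calls replay ops, skipping fn's body
            result = OPS[op](result, const)
        return result

    return wrapper


@trace_once
def scale_and_shift(x):
    print("tracing scale_and_shift")  # side effect: happens at trace time only
    return x * 3 + 1
```

Calling `scale_and_shift(2)` prints the tracing message once and returns 7. A later call such as `scale_and_shift(10)` returns 31 without printing, because only the recorded operations are replayed.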

Ease of use

One of the crucial aspects in the PyTorch vs TensorFlow debate is the ease of use, which can significantly impact the development process for Machine Learning and Deep Learning projects. Both frameworks have made strides in simplifying their APIs, but there are some key differences:

PyTorch is often praised for its user-friendly and intuitive interface. It uses dynamic computational graphs, which makes it feel more like standard Python programming. This dynamic approach allows developers to modify models on the fly, insert print statements for debugging, and make real-time changes with ease. This is a significant advantage, particularly in research and experimentation, where flexibility is key.

Moreover, PyTorch's error messages are often considered more informative and easier to understand, which can speed up debugging. The PyTorch community is also known for its helpful resources and extensive documentation. For anyone already familiar with Python and NumPy, the transition to PyTorch is usually straightforward, because its tensor API closely resembles NumPy's.

TensorFlow has historically had a steeper learning curve due to its use of static computational graphs. However, TensorFlow 2.0 enables eager execution by default, which makes the framework more dynamic and user-friendly. TensorFlow 2.0 also made Keras its primary high-level interface for defining neural networks. This has made model development in TensorFlow significantly more accessible, as Keras is both user-friendly and well documented.

In terms of ease of use, PyTorch may have a slight edge thanks to its dynamic computation graph, simplicity, and detailed error messages. TensorFlow has largely closed this gap, especially since TensorFlow 2.0 and its integration of Keras. This makes it more appealing to developers who want graph-level optimisation without a steep learning curve.

Community and ecosystem

The community and ecosystem surrounding a Deep Learning framework play a pivotal role in its development, adoption, and success. When comparing PyTorch and TensorFlow, it's essential to consider the differences in their respective communities and ecosystems.

PyTorch boasts a vibrant and rapidly growing community, particularly in the academic and research domains. Researchers and individual developers widely embrace it, and its popularity continues to rise. The following factors contribute to its thriving ecosystem:

a) Academic adoption: Many researchers and educational institutions prefer PyTorch for its flexibility and dynamic computation graph, which aligns well with the exploratory nature of academic research.

b) Libraries and resources: PyTorch offers a rich collection of libraries and resources for tasks like natural language processing, computer vision, and reinforcement learning. Notable libraries such as Hugging Face Transformers and fastai have contributed to its popularity.

c) Active development: The PyTorch development team, working with Meta AI (formerly Facebook AI), consistently releases updates, new features, and improvements, keeping the framework competitive and up to date.

TensorFlow, developed by Google, boasts a robust presence in both the academic and industrial sectors. The following features distinguish its ecosystem:

a) Industry adoption: TensorFlow has been widely adopted across many industries, including by technology companies such as Google, Uber, and Airbnb. It is known for its production readiness and scalability.

b) TensorFlow Extended (TFX): For large-scale Machine Learning pipelines and production deployments, TFX provides end-to-end Machine Learning infrastructure.

c) TensorBoard: TensorFlow's integration with TensorBoard offers advanced visualisation tools for model training, evaluation, and debugging, enhancing its usability in research and development.

d) High-level APIs: TensorFlow's integration with Keras simplifies model building and training, attracting both beginners and experienced developers.

Visualisation tools

Effective visualisation is an essential aspect of Deep Learning model development. Both PyTorch and TensorFlow offer visualisation tools to help researchers and developers gain insights into their models and data. However, the way they handle visualisation differs significantly.

PyTorch is lauded for its simplicity and flexibility when it comes to visualisation. It integrates with TensorBoard through its built-in torch.utils.tensorboard module, and earlier did so through TensorBoardX, a third-party library that provides an interface to TensorBoard. This integration lets users visualise model graphs, scalars, histograms, and images with ease.

Because PyTorch builds its computational graph dynamically, you can change the model architecture on the fly, which makes it ideal for experimentation. When you log a model's graph to TensorBoard, it is traced from an example input. You can then see how the model processes that data at different stages.

TensorFlow, on the other hand, offers a comprehensive and integrated visualisation solution with TensorBoard. TensorBoard is a web-based tool that provides various components for visualising different aspects of model training. This includes graph visualisation, scalars, histograms, embeddings, and more. TensorFlow's use of static computational graphs makes it suitable for building production-ready models, and TensorBoard aligns well with this by offering extensive visualisation capabilities.

TensorBoard's Embedding Projector plugin is a handy feature for visualising high-dimensional data. It lets you explore embeddings in 2D or 3D, giving you a deeper understanding of how your model clusters and separates data points.

Deployment

Deployment is a critical phase in any Machine Learning project, as it's the stage where your trained models move from the development and testing environment to actual production systems. Both PyTorch and TensorFlow offer various tools and options for deployment, each with its strengths and considerations.

Deployment in PyTorch has traditionally been seen as more researcher-centric, with a focus on flexibility and rapid prototyping. However, recent developments have expanded its deployment capabilities. Here are key points to consider:

a) TorchScript: PyTorch introduced TorchScript, a way to create serialisable and optimisable representations of models. This feature allows you to save a PyTorch model in a format that can be loaded and executed without a Python runtime, for example from C++. TorchScript enables smoother model deployment in production.

b) LibTorch: LibTorch is PyTorch's C++ distribution, enabling C++ deployment of PyTorch models. It is useful when you want to integrate PyTorch models into existing C++ applications.

c) Production libraries: While PyTorch is known for research and prototyping, libraries like PyTorch Mobile have emerged to facilitate the deployment of PyTorch models on mobile devices.

TensorFlow has traditionally been favoured in industry applications, and it offers several robust deployment options:

a) TensorFlow Serving: This is a dedicated system for serving TensorFlow models in production. It provides a flexible and efficient way to expose Machine Learning models through gRPC and REST APIs.
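To illustrate the REST interface, the sketch below builds a prediction request using only the Python standard library. It assumes a model served under the hypothetical name `my_model` on TensorFlow Serving's conventional REST port 8501. It uses the `/v1/models/<name>:predict` endpoint with a JSON body containing an `instances` list. The request is constructed but not sent, so the code runs without a server.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, port=8501):
    """Build (but do not send) a TensorFlow Serving REST predict request.

    host, model_name and port are assumptions about your deployment.
    """
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "my_model" and the input row are hypothetical placeholders.
req = build_predict_request("localhost", "my_model", [[1.0, 2.0, 5.0]])
print(req.full_url)  # http://localhost:8501/v1/models/my_model:predict
```

Against a running server, `urllib.request.urlopen(req)` would return a JSON response whose `predictions` field holds the model's outputs.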

b) TensorFlow Lite: For mobile and embedded devices, TensorFlow Lite is the go-to solution. It optimises models for these platforms while ensuring low latency and efficient use of resources.
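One of the main ways TensorFlow Lite shrinks models is quantisation: storing float32 weights as 8-bit integers, which cuts their storage by a factor of four. TensorFlow Lite's integer quantisation uses an affine mapping, `real_value = scale * (q - zero_point)`. The plain-Python sketch below applies that mapping with a single scale and zero point per tensor. The function names are hypothetical, and the real TensorFlow Lite converter makes different choices (for example, symmetric per-channel scales for weights).

```python
# Simplified per-tensor affine int8 quantisation sketch (not TFLite's converter).

INT8_MIN, INT8_MAX = -128, 127


def choose_params(values):
    """Pick scale and zero_point so the value range maps onto [-128, 127]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (INT8_MAX - INT8_MIN)
    if scale == 0.0:  # all values are zero; any positive scale works
        scale = 1.0
    zero_point = round(INT8_MIN - lo / scale)
    return scale, zero_point


def quantize(x, scale, zero_point):
    """float -> int8, clamped to the representable range."""
    q = round(x / scale) + zero_point
    return max(INT8_MIN, min(INT8_MAX, q))


def dequantize(q, scale, zero_point):
    """int8 -> float using real_value = scale * (q - zero_point)."""
    return scale * (q - zero_point)


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # hypothetical example weights
scale, zero_point = choose_params(weights)
quantized = [quantize(w, scale, zero_point) for w in weights]
restored = [dequantize(q, scale, zero_point) for q in quantized]
print(quantized)
print(restored)
```

Each value in the original range comes back within about half a quantisation step (`scale / 2`) of where it started. The value 0.0 maps back to exactly 0.0, which matters for operations such as zero padding.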

c) TensorFlow.js: This library allows you to run TensorFlow models in web browsers and Node.js, enabling in-browser Machine Learning applications.

Mobile and embedded

When comparing PyTorch and TensorFlow in the context of mobile and embedded applications, it's essential to understand their respective strengths and weaknesses.

TensorFlow has a significant edge when it comes to deploying Machine Learning models on mobile and embedded devices. The framework offers TensorFlow Lite, a version tailored for these resource-constrained platforms. TensorFlow Lite optimises model size and inference speed, making it suitable for smartphones, IoT devices, and edge computing. It supports hardware acceleration and has been integrated into platforms like Android, making it the preferred choice for many mobile applications. Additionally, TensorFlow.js enables the deployment of Machine Learning models in web applications, extending TensorFlow's reach to browsers and web-based mobile applications.

On the other hand, PyTorch has traditionally been less suited for mobile and embedded deployment. While it shines in research and prototyping, its direct support for mobile deployment is limited. However, with the introduction of PyTorch Mobile, the framework has started to address this gap. PyTorch Mobile aims to provide a path for running PyTorch models on mobile and embedded platforms efficiently. Although it's still evolving and might not be as mature as TensorFlow Lite, PyTorch's research-friendly ecosystem has led to innovations that may shape the future of mobile and edge computing in the Deep Learning space.

Popularity

The popularity of PyTorch and TensorFlow is a crucial aspect that influences the choice of Deep Learning framework for various projects. Both frameworks have made significant strides in the field of Artificial Intelligence and Machine Learning, but they differ in terms of their user base and areas of prominence.

PyTorch has gained substantial popularity in the research community and among academics. Its ascent in popularity can be attributed to several factors. Firstly, PyTorch's dynamic computational graph approach aligns well with the research community's needs. Researchers often need the flexibility to change model architectures on the fly and experiment with new ideas seamlessly, which PyTorch's dynamic graph supports. Secondly, PyTorch offers an intuitive Pythonic interface, making it accessible to developers and researchers who are more comfortable with Python. Additionally, PyTorch's extensive libraries, particularly in the domain of natural language processing, have contributed to its growing popularity.

TensorFlow, created by Google Brain, enjoys widespread popularity, primarily in the industry. TensorFlow's adoption was accelerated by its static computational graph, which lends itself well to optimised production-ready models. As companies turned to Deep Learning for various applications, TensorFlow's robust ecosystem, support for distributed computing, and deployment options made it an attractive choice. TensorFlow also boasts a vast collection of pre-trained models, making it an asset for industries seeking rapid integration.

Conclusion

In Deep Learning, PyTorch and TensorFlow continue to lead the way and shape the landscape. The differences between PyTorch and TensorFlow may sometimes seem subtle, yet these nuances can significantly affect your AI journey. Whether you opt for the dynamic elegance of PyTorch or the production-ready might of TensorFlow, your choice should align with your specific goals and preferences.
