Imagine a bustling metropolis where every building, road, and utility is meticulously planned and dynamically managed. This is the essence of Kubernetes Architecture, a system designed to orchestrate the complex interplay of containers in a cloud environment. It’s not just about managing workloads; it’s about creating a resilient, scalable, and efficient ecosystem that can adapt to the ever-changing demands of the digital age.

Kubernetes Architecture operates on principles that echo the ingenuity of urban planning. At its core, it separates a control plane, which oversees the state of the cluster, from the worker nodes that run the workloads, so that every node and Pod functions harmoniously. Dive in to learn more about it!

Table of Contents

1) What is Kubernetes Architecture?

2) High-level Overview

3) Anatomy of a Kubernetes Cluster

4) Components in Detail: What Powers Kubernetes?

5) Networking in Kubernetes

6) Strategies for Achieving High Availability

7) Security Features in Kubernetes

8) What are Kubernetes Architecture Best Practices?

9) Conclusion

What is Kubernetes Architecture?

Kubernetes has become a buzzword in the tech industry. But what is it exactly? Kubernetes is a dynamic toolbox for managing containerised applications, automatically adjusting resources for seamless scaling and operation. It manages containers, which are lightweight, stand-alone executable packages. These containers can include all elements needed to run an application.

Containers are easy to deploy but can be hard to manage at scale. Here is where Kubernetes comes in. Kubernetes Architecture provides the tools to handle hundreds and thousands of containers. Think of it as an Operating System for your containerised applications. It creates, deletes, and organises containers as needed.

The architecture comprises multiple components. These are divided into the Control Plane and the Data Plane. The Control Plane is the brains of the operation. It makes global decisions and maintains the cluster's overall state. The Data Plane consists of Worker nodes. These execute tasks as directed by the Control Plane.

A third key part is the storage layer. It handles data persistence across the cluster. Together, these components form an integrated system. This system automates tasks like load balancing, scaling, and health monitoring.

Understanding this can seem like a tall order. Yet, it's crucial for anyone involved in deploying or managing Kubernetes. So, why is the Kubernetes Architecture built this way? The answer lies in the goals of Kubernetes. It aims for high availability, fault tolerance, and extreme scalability.

High-level Overview

The Kubernetes Architecture has three primary building blocks: the Master node, the Worker nodes, and Kubernetes objects. Let's take a closer look at each of these elements to understand their roles and functionalities in this Kubernetes Architecture overview.

Master Node

The Master node is the orchestrating powerhouse of a Kubernetes cluster, coordinating all activities as the command centre. Think of it as the conductor of an orchestra, setting the tempo and cues for all other instruments, the Worker nodes.

It deals with the cluster's overall management. For instance, it determines which node should run a particular container. It balances the workloads across Worker nodes, ensuring optimal utilisation. The Master node looks after the health of the cluster. If a Worker node fails, it reallocates the tasks to another Worker. This ensures high availability and fault tolerance.

Another critical role is its interaction with the user. Whether it's through CLI, API, or a dashboard, the Master node receives your input. It parses your commands and translates them into actionable tasks. These are then dispatched to the appropriate Worker nodes.

Worker Node

While the Master node is the brains, Worker nodes are the muscle. These units do the actual work assigned by the Master node. They run containers, which are instances of your applications. Each Worker node has its own local environment. This is essential for running containers. It includes necessary elements like the OS, a container runtime, and a special Kubernetes agent called Kubelet.

Kubelet is the liaison between a Worker node and the Master node. It ensures the containers are running as expected. Kubelet receives instructions from the Master node and implements them locally. Worker nodes also handle networking between containers. This involves routing, DNS handling, and load balancing. These functions enable smooth communication between different parts of an application. The image below will help you understand the high-level architecture clearly.

Kubernetes Objects

Kubernetes objects are the high-level abstractions over your containerised applications. They define the state of your cluster, specifying how applications should deploy and run. A Kubernetes object is a manifestation of your desired state. When you create one, you inform the Master node of your expectations. For example, you can specify the number of replica Pods or define a Service to expose the app to the internet.
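As a minimal sketch of what "desired state" looks like in practice, the snippet below builds a Deployment manifest as a plain Python dictionary. The field names follow the Kubernetes `apps/v1` Deployment schema; the application name `web` and the image `example/web:1.0` are placeholders assumed for illustration only.

```python
import json


def build_deployment(name, image, replicas):
    """Return a Deployment manifest that declares the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,                       # desired number of Pod replicas
            "selector": {"matchLabels": dict(labels)},  # which Pods this Deployment owns
            "template": {                               # Pod template used for every replica
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# "web" and "example/web:1.0" are hypothetical placeholder values.
manifest = build_deployment("web", "example/web:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, which accepts JSON as well as YAML. The selector labels must match the template labels, which is why both are derived from the same `labels` value.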

Among the basic objects, Pods are the most elemental. A Pod wraps one or more containers, encapsulating storage resources, a unique network IP, and management policies. Services are another crucial object. They help in accessing Pods and can distribute network traffic among them.

ConfigMaps and Secrets are special objects that store configuration data and sensitive information. They allow you to manage configurations separately from container images, making your applications more portable and secure.

Anatomy of a Kubernetes Cluster

Unpacking the complexities of Kubernetes Architecture involves getting into its cluster anatomy. The cluster structure consists of three main layers: the Control plane, the Data plane, and the Storage layer. By understanding these layers, we can gain a deeper understanding of how Kubernetes maintains its resilience, scalability, and manageability.

The Control Plane

The Control plane is the decision-making hub within a Kubernetes cluster. It's usually housed in the Master node and has several vital components. The API Server is the cornerstone of this layer. It exposes the Kubernetes API and is the gateway for all interactions within the cluster. It processes RESTful API requests, validates them, and executes the subsequent operations.

Then we have ‘etcd’, the consistent and highly available key-value store. It acts as the primary datastore for the cluster, storing configuration data, state data, and metadata. This ensures that the cluster returns to its desired state even after failures.

The Scheduler is another crucial Control plane component. It's responsible for placing Pods, the smallest unit of computing in Kubernetes, onto suitable Worker nodes. When you submit a request to run a Pod, the Scheduler finds the best node to host it. It considers factors like resource utilisation, constraints, and affinity rules.

Controllers are indispensable parts of the Control plane. They come in various flavours like Node Controller, Replication Controller, and Endpoints Controller. Controllers maintain the cluster's desired state by continuously comparing and adjusting the current state as needed.

The Data Plane

The Data plane is the layer where the actual workloads run. It exists mainly on the Worker nodes. The Kubelet is the node agent that ensures all containers in the Pod are healthy. It starts, stops, and restarts containers as needed to keep the system stable.

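Another key element is the Kube-proxy, responsible for Service networking on each node. It maintains the network rules that forward traffic addressed to a Service to one of the Pods behind it, which provides basic load balancing. Pod IP addressing itself is handled by the cluster's network plugin rather than by Kube-proxy. Kube-proxy ensures that data packets sent to a Service reach the correct Pods, including traffic that enters the cluster from the outside world.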

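And then there's the container runtime. This is the engine that runs your containers. Kubernetes talks to the runtime through the Container Runtime Interface (CRI), so any CRI-compatible runtime such as containerd or CRI-O can be used. Docker Engine remains popular for building images, but since Kubernetes 1.24 it needs the cri-dockerd adapter to act as a cluster runtime. The runtime launches and runs containers according to the specifications in their images.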

The Storage Layer

The Storage layer refers to how the platform manages and provides storage resources (like disk space) for your applications. It ensures that your applications have a place to store data, whether it's databases, files, or other information they need to work correctly. This Storage layer ensures data is available, reliable, and can be easily connected to your running applications.

Ordinary Pod volumes, such as emptyDir, are deleted together with the Pod. For more persistent needs, there are Persistent Volumes (PVs). PVs exist independently of the Pod lifecycle, offering a way to store data more durably. To use these, Pods can make Persistent Volume Claims (PVCs), which are like tickets to access storage.

Storage Classes are the templates that define how PVs should be provisioned. They name the provisioner to use, whether the storage is cloud-based or local, along with provisioner-specific parameters and the reclaim policy. Access modes are requested on the PVC itself. This abstraction simplifies the storage provisioning process.

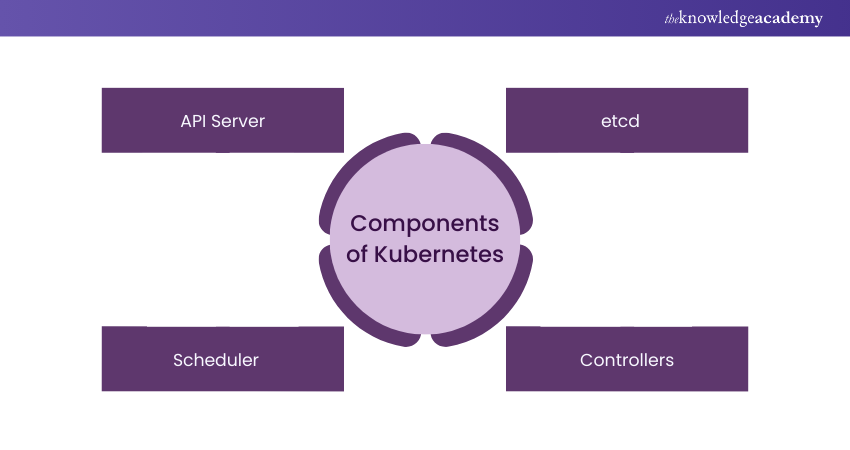

Components in Detail: What Powers Kubernetes?

Kubernetes is a tightly integrated collection of components working in harmony. Understanding these individual elements provides insights into how Kubernetes operates. From its frontend API server to its backend data storage mechanisms, each component has its own specialised role. In this section, we go deep into the nitty-gritty of these components.

1) API Server

Often seen as the 'front door' to the Kubernetes Control plane, the API Server is a critical component. It serves as the interface between users and the backend Control plane. It receives all RESTful API calls, whether they come from a human operator or another internal component.

After receiving these requests, the API Server performs authentication and authorisation checks. It also validates if the request format meets the expected schema. Once a request passes these checks, the API Server processes it, coordinating with other components like the ‘etcd’ and Scheduler.
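The order of these checks can be illustrated with a toy request pipeline. This is only a sketch of the sequence described above (authenticate, authorise, validate, then process); the `Request` class, the in-memory token and permission tables, and the status codes are assumptions made for the example, not the API Server's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    body: dict


# Hypothetical in-memory stand-ins for real authenticators and policies.
TOKENS = {"token-alice": "alice"}
PERMISSIONS = {("alice", "create", "pods")}
REQUIRED_FIELDS = ("apiVersion", "kind", "metadata")


def handle(request):
    """Run the three gates in order and return (status_code, message)."""
    user = TOKENS.get(request.token)
    if user is None:                          # 1. authentication: who is calling?
        return 401, "Unauthorized"
    if (user, request.verb, request.resource) not in PERMISSIONS:
        return 403, "Forbidden"               # 2. authorisation: may they do this?
    missing = [f for f in REQUIRED_FIELDS if f not in request.body]
    if missing:                               # 3. validation: is the object well formed?
        return 422, "missing fields: " + ", ".join(missing)
    return 201, "Created"                     # only now would the object be persisted


pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "demo"}}
print(handle(Request("token-alice", "create", "pods", pod)))
print(handle(Request("bad-token", "create", "pods", pod)))
```

Keeping the gates in this order means an unauthenticated caller never learns whether a resource exists or whether a payload would have been valid.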

2) etcd

Consider ‘etcd’ as the 'memory' of the Kubernetes cluster. It's a highly reliable, distributed key-value store that saves the cluster's state. This is where data like configurations, secrets, and the status of running Pods are stored. ‘etcd’ is especially vital for maintaining high availability and fault tolerance. When changes occur in the cluster, such as Pod creation or deletion, the API Server records them in ‘etcd’. Because ‘etcd’ is strongly consistent, every component that reads cluster state through the API Server sees a unified and up-to-date view of the system.

3) Scheduler

In the Kubernetes Architecture, the Scheduler acts like a highly efficient traffic cop. It decides where to run new Pods based on several factors. These factors include resource availability, user-defined constraints, and other policies. The Scheduler scans through all available nodes to find the most suitable fit for each Pod. Once it makes a decision, it records the Pod-to-node binding through the API Server, which persists it in ‘etcd’. The Kubelet on the selected Worker node then notices the assignment and starts the Pod.
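The two phases of that decision, filtering out unsuitable nodes and then scoring the rest, can be sketched as follows. This is a simplified illustration, not the real scheduler: the `Node` and `Pod` classes, the single label-based constraint, and the "most free resources wins" scoring rule are all assumptions chosen to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int       # free CPU in millicores
    free_mem_mi: int      # free memory in MiB
    labels: dict = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu_m: int
    mem_mi: int
    node_selector: dict = field(default_factory=dict)


def feasible(pod, node):
    """Filter phase: the node must have room and carry the requested labels."""
    fits = node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi
    selected = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and selected


def score(pod, node):
    """Score phase: average fraction of resources left free after placement."""
    cpu_left = (node.free_cpu_m - pod.cpu_m) / max(node.free_cpu_m, 1)
    mem_left = (node.free_mem_mi - pod.mem_mi) / max(node.free_mem_mi, 1)
    return (cpu_left + mem_left) / 2


def schedule(pod, nodes):
    """Return the name of the best node, or None if the Pod must stay pending."""
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", 500, 1024, {"disk": "ssd"}),
    Node("node-b", 4000, 8192, {"disk": "hdd"}),
    Node("node-c", 2000, 4096, {"disk": "ssd"}),
]
print(schedule(Pod("api", 250, 512, {"disk": "ssd"}), nodes))
```

Here `node-b` is filtered out by the label constraint, and `node-c` outscores `node-a` because it keeps a larger share of its resources free after placement.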

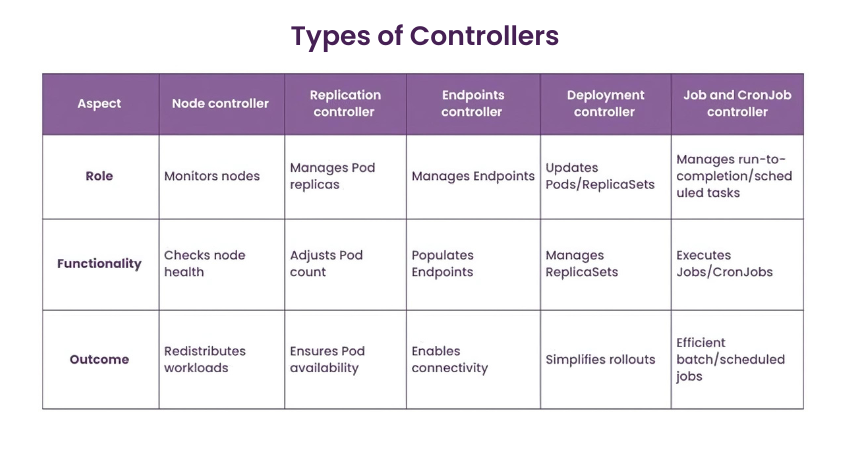

4) Controllers

Controllers are important in the Kubernetes control plane, acting as the automation engines that power the cluster. These components continually monitor the cluster's actual state and strive to bring it in line with the desired state. They correct discrepancies as they arise, with each controller maintaining specific aspects of the system's functionality.
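The "compare actual state with desired state, then act" pattern can be sketched as a single reconciliation pass. The function below is a toy model under stated assumptions: Pods are represented by name only, and the `web-N` naming scheme is hypothetical.

```python
def reconcile(desired, running):
    """Return the actions one control-loop pass would take to converge."""
    names = sorted(running)
    actions = []
    if len(names) < desired:
        # Too few Pods: create new ones until the count matches.
        index = 0
        while len(names) + len(actions) < desired:
            candidate = f"web-{index}"        # hypothetical naming scheme
            if candidate not in names:
                actions.append(f"create {candidate}")
            index += 1
    elif len(names) > desired:
        # Too many Pods: delete the surplus.
        actions = [f"delete {name}" for name in names[desired:]]
    return actions


print(reconcile(3, ["web-0"]))                    # a Pod count below the target
print(reconcile(1, ["web-0", "web-1", "web-2"]))  # a Pod count above the target
print(reconcile(2, ["web-0", "web-1"]))           # already converged
```

A real controller repeats this pass whenever the observed state changes, so a failure at any moment is simply picked up by the next pass.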

Understanding controllers helps you grasp how Kubernetes remains so stable and efficient. Below are some key types of controllers in Kubernetes:

1) Node Controller

a) Role: Monitors the health of nodes within the cluster.

b) Functionality: Keeps tabs on the availability of nodes and takes action if a node becomes unresponsive.

c) Outcome: Helps in ensuring that workloads are not scheduled on failed nodes and redistributes existing workloads if needed.

2) Replication Controller

a) Role: Manages the number of identical copies of a Pod running in a cluster.

b) Functionality: Constantly compares the number of running Pods with the desired count and creates or deletes Pods as needed.

c) Outcome: Ensures high availability and reliability by maintaining the desired number of Pod replicas.

3) Endpoints Controller

a) Role: Manages the Endpoints objects that represent network endpoints.

b) Functionality: Populates the Endpoints objects with IP addresses based on Service and Pod status.

c) Outcome: Enables network connectivity within the cluster by keeping service-to-pod mapping accurate.

4) Deployment Controller

a) Role: Facilitates declarative updates to Pods and ReplicaSets.

b) Functionality: Manages the desired state of deployments through ReplicaSets.

c) Outcome: Simplifies application rollouts, rollbacks, and scaling.

5) Job and CronJob Controller

a) Role: Manages run-to-completion and scheduled tasks.

b) Functionality: Ensures that Jobs and CronJobs execute their tasks as specified.

c) Outcome: Provides a framework for running batch and scheduled jobs efficiently.

5) Kubelet

Located on every Worker node, the Kubelet is the bridge between the Master and Worker nodes. Its main task is to ensure that all containers in a Pod are running smoothly. Kubelet does this by frequently checking the health status of the containers. It communicates with the Master node, specifically the API Server, to report the health status or to receive new instructions. If a container becomes unresponsive or crashes, the Kubelet takes corrective actions, like restarting the container.
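The check-and-restart behaviour can be sketched with a small supervisor that counts consecutive probe failures. This is an illustration only: the `ContainerSupervisor` class is hypothetical, and the threshold of three failures is an assumption that mirrors the default `failureThreshold` of Kubernetes probes.

```python
class ContainerSupervisor:
    """Toy stand-in for the Kubelet's liveness handling of one container."""

    def __init__(self, probe, failure_threshold=3):
        self.probe = probe                      # callable returning True when healthy
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0
        self.restarts = 0

    def tick(self):
        """Run one probe and return the resulting state."""
        if self.probe():
            self.consecutive_failures = 0
            return "healthy"
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            # Corrective action: restart the container and start counting afresh.
            self.restarts += 1
            self.consecutive_failures = 0
            return "restarted"
        return "failing"


# Simulated probe results: one success, three failures, then recovery.
results = iter([True, False, False, False, True])
supervisor = ContainerSupervisor(lambda: next(results))
print([supervisor.tick() for _ in range(5)])
print("restarts:", supervisor.restarts)
```

Requiring several consecutive failures before restarting avoids killing a container because of a single slow response.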

6) Kube-proxy

Networking is a critical function within a Kubernetes cluster, and Kube-proxy serves as its backbone. It runs on each Worker node, handling traffic addressed to Services. Kube-proxy supports service discovery, load balancing, and routing. It watches the API Server for changes to Services and their endpoints and dynamically updates its rules; it does not read the ‘etcd’ store directly. This ensures that network requests reach the right services and Pods. Kube-proxy can work in different modes, such as iptables and IPVS (older releases also offered a User Space mode), each with its own set of capabilities and limitations.
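Conceptually, Kube-proxy keeps a table that maps each Service address to the Pods behind it and picks one backend per connection. The sketch below models that table in memory. It is a simplification: the `ServiceTable` class and the addresses are hypothetical, and round-robin selection is used for predictability, whereas the iptables mode selects a backend at random.

```python
import itertools


class ServiceTable:
    """Toy model of the Service-to-endpoint rules kept on each node."""

    def __init__(self):
        self._backends = {}   # service address -> cycling iterator over endpoints

    def update(self, service, endpoints):
        """Replace the endpoints for a Service, as happens when Pods come and go."""
        self._backends[service] = itertools.cycle(endpoints) if endpoints else None

    def route(self, service):
        """Return the endpoint that should receive the next connection."""
        backend = self._backends.get(service)
        return next(backend) if backend is not None else None


table = ServiceTable()
# Hypothetical Service and Pod addresses.
table.update("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])
print([table.route("10.96.0.10:80") for _ in range(3)])
```

When a Pod is replaced, only the table entry changes; clients keep using the same Service address.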

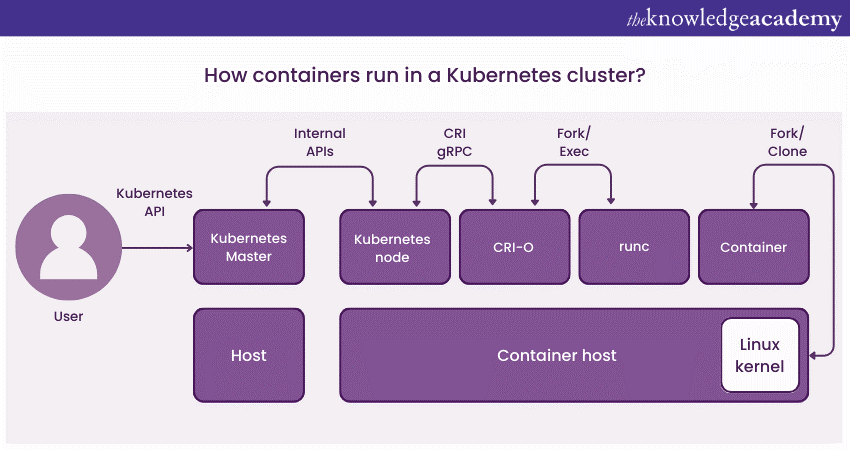

7) Container Runtime

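Executing containers is the fundamental operation in Kubernetes, and that’s the job of the container runtime. Different runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O, can be plugged into Kubernetes; Docker Engine can also be used through the cri-dockerd adapter.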

No matter your choice, the container runtime handles pulling images, unpacking them, and running them as containers. It interacts closely with the Kubelet, which instructs it to start, stop, or restart containers based on the desired state.

8) Persistent Volumes (PVs) and Persistent Volume Claims (PVCs)

Data storage in Kubernetes is managed through Persistent Volumes (PVs) and Persistent Volume Claims (PVCs). PVs are like long-term storage units that exist independently of any Pod lifecycle. PVCs, on the other hand, are requests for these storage units. When a Pod requires persistent storage, it uses a PVC to claim specific storage space from a PV. This mechanism allows for data persistence across Pod restarts, ensuring data integrity and availability.
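The claim-to-volume matching can be sketched as a small binding function. This is a toy model under stated assumptions: the `PersistentVolume` and `Claim` classes are hypothetical, and choosing the smallest volume that satisfies the claim is a simplification of how a suitable PV is selected.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    access_modes: set
    storage_class: str
    claimed_by: Optional[str] = None


@dataclass
class Claim:
    name: str
    request_gi: int
    access_mode: str
    storage_class: str


def bind(claim, volumes):
    """Bind the claim to the smallest unclaimed volume that satisfies it."""
    candidates = [
        v for v in volumes
        if v.claimed_by is None
        and v.storage_class == claim.storage_class
        and claim.access_mode in v.access_modes
        and v.capacity_gi >= claim.request_gi
    ]
    if not candidates:
        return None                     # the claim stays pending
    chosen = min(candidates, key=lambda v: v.capacity_gi)
    chosen.claimed_by = claim.name      # a volume is bound to exactly one claim
    return chosen


volumes = [
    PersistentVolume("pv-large", 100, {"ReadWriteOnce"}, "standard"),
    PersistentVolume("pv-small", 10, {"ReadWriteOnce"}, "standard"),
    PersistentVolume("pv-fast", 20, {"ReadWriteOnce"}, "fast"),
]
bound = bind(Claim("data", 8, "ReadWriteOnce", "standard"), volumes)
print(bound.name if bound else "pending")
```

Because the binding lives on the volume rather than on the Pod, a restarted Pod that references the same claim gets the same data back.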

Networking in Kubernetes

Networking is a cornerstone in Kubernetes Architecture. It performs an important role in communication between Pods, services, and the external world. Given its complexity, understanding Kubernetes networking can be challenging. This section aims to simplify it by breaking down its core elements.

1) Pod-to-Pod Communication

Its role, mechanism, and significance are discussed below:

a) Role: Enables direct communication between Pods, the most basic form of networking in Kubernetes.

b) Mechanism: Uses a flat network model in which every Pod gets its own IP address and can reach every other Pod without NAT.

c) Significance: Facilitates smooth intra-application communication, which is essential for microservices-based applications.

2) Service Networking

Its role, mechanism, and significance are discussed below:

a) Role: Manages the exposure of applications, allowing them to be accessible within the cluster or from the internet.

b) Mechanism: Uses a Service object in Kubernetes to provide a single DNS name and load balances traffic to application Pods.

c) Significance: Provides a stable endpoint for applications, decoupling the consumer from the individual Pod IPs.

3) Ingress and Egress

Its role, mechanism, and significance are discussed below:

a) Role: Controls how external users and systems interact with services running inside the Kubernetes cluster.

b) Mechanism: Ingress controllers manage incoming traffic based on routing rules. Network Policies govern what outgoing traffic is permitted.

c) Significance: Enables application exposure to the internet, ensuring proper routing and security controls.

4) Network Policies

Its role, mechanism, and significance are discussed below:

a) Role: Dictates what kind of communication is permitted between Pods or between Pods and other network endpoints.

b) Mechanism: Implemented via Network Policy objects, these rules can be set to control both incoming and outgoing traffic.

c) Significance: Enhances security by controlling which Pods communicate with each other, preventing unwanted or insecure access.
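How label selectors decide whether traffic is permitted can be sketched with a small evaluation function. This is a simplified model, not the full Network Policy API: the dictionary keys `pod_selector` and `allow_from` are hypothetical, and only ingress rules based on Pod labels are considered.

```python
def matches(selector, labels):
    """A selector matches when every key/value pair is present in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(policies, source_labels, target_labels):
    """Decide whether a source Pod may connect to a target Pod."""
    applicable = [p for p in policies if matches(p["pod_selector"], target_labels)]
    if not applicable:
        return True   # no policy selects the target, so it is not isolated
    # Once any policy selects the target, only explicitly allowed sources get in.
    return any(
        matches(selector, source_labels)
        for policy in applicable
        for selector in policy["allow_from"]
    )


# Hypothetical policy: only Pods labelled app=api may reach Pods labelled app=db.
policies = [{"pod_selector": {"app": "db"}, "allow_from": [{"app": "api"}]}]
print(ingress_allowed(policies, {"app": "api"}, {"app": "db"}))
print(ingress_allowed(policies, {"app": "web"}, {"app": "db"}))
```

The example reflects the additive nature of policies: rules only ever allow traffic, and a Pod becomes restricted simply by being selected by at least one policy.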

5) DNS and Name Resolution

Its role, mechanism, and significance are discussed below:

a) Role: Aids in the discovery of services within the Kubernetes cluster, making it easier for developers and systems to interact.

b) Mechanism: Uses CoreDNS or similar in-cluster DNS providers to map service names to their corresponding IP addresses.

c) Significance: Allows for more human-friendly communication, eliminating the need to remember or hard-code specific IP addresses.
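The naming scheme behind this can be sketched in a few lines. Services are conventionally reachable as `<service>.<namespace>.svc.<cluster-domain>`, with `cluster.local` as the usual default domain. The `resolve` helper and the record table below are hypothetical stand-ins for the in-cluster DNS provider, and the short-name expansion is a simplified imitation of a Pod's DNS search path.

```python
from typing import Optional


def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build the fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(name, records, client_namespace="default") -> Optional[str]:
    """Expand short names relative to the client's namespace, then look them up."""
    parts = name.split(".")
    if len(parts) == 1:                       # "orders"
        fqdn = service_fqdn(name, client_namespace)
    elif len(parts) == 2:                     # "orders.shop"
        fqdn = service_fqdn(parts[0], parts[1])
    else:                                     # already fully qualified
        fqdn = name
    return records.get(fqdn)


# Hypothetical DNS records: Service name -> cluster IP.
records = {"orders.shop.svc.cluster.local": "10.96.12.34"}
print(service_fqdn("orders", "shop"))
print(resolve("orders", records, client_namespace="shop"))
print(resolve("orders.shop", records))
```

A Pod in the same namespace can therefore use the bare Service name, while Pods elsewhere add the namespace.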

Strategies for Achieving High Availability

Ensuring constant access to critical applications and services is crucial for achieving high availability. Implementing strong strategies reduces time without operation and boosts system trustworthiness.

1) Multiple Master Nodes

Having several master nodes in a Kubernetes cluster increases availability because it removes the control plane as a single point of failure. In the event of a single master node failure, the remaining nodes can step in to assume control of the cluster, ensuring continuous operation and reducing downtime. This redundancy is essential for ensuring reliable cluster management.

2) Load Balancing

Efficiently distributing incoming traffic across multiple nodes or pods within a cluster through load balancing prevents overwhelming any single component. This guarantees efficient use of resources, improves performance, and enhances the overall stability and availability of applications.

3) Horizontal and Vertical Scalability

Increasing the capacity of Kubernetes horizontally means adding extra nodes or pods to manage larger workloads, whereas vertical scalability boosts the capabilities of current nodes or pods. Both methods guarantee that the cluster can effectively adjust to changing needs, preserving both performance and availability.

4) Auto-scaling Mechanisms

Auto-scaling for pods and nodes ensures that the cluster can adjust to changing workloads automatically. This is essential to uphold high availability, particularly when dealing with sudden increases in traffic. In Kubernetes, tools such as Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler are crucial for efficient auto-scaling.
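The core calculation of the Horizontal Pod Autoscaler can be written down directly. Its documented rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a small tolerance of 1. The function below is a minimal sketch of that rule; the parameter names and the replica bounds used in the example are assumptions, and the 0.1 tolerance mirrors the documented default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the HPA scaling rule and clamp the result to the allowed range."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas            # close enough to the target: do nothing
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Four replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
# The same four replicas averaging 30% CPU.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

The tolerance band prevents the replica count from flapping when the metric hovers around its target.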

5) Pod Scaling

Pod scaling automatically increases or decreases the number of running pods in response to the current workload, guaranteeing the application's ability to manage sudden increases in traffic while sustaining performance levels. The ability to scale dynamically is crucial for efficient resource management and ensuring high availability in Kubernetes setups.

6) Cluster Federation

Cluster federation connects multiple Kubernetes clusters, increasing redundancy and allowing for workload distribution in various regions to enhance availability and performance. Federated clusters enable global failover tactics, allowing a different cluster to assume control if one is unavailable.

7) Monitoring and Alerting

Consistent observation of cluster elements and workloads is crucial for maintaining high availability. Monitoring tools provide immediate information on the health and performance of clusters. When combined with a strong alerting system, monitoring enables quick reaction to possible problems before they escalate.

8) Disaster Recovery Planning

Having a disaster recovery plan is essential for ensuring high availability, which includes routinely backing up data and quickly recovering operations in the event of a significant failure. Frequent testing of disaster recovery protocols guarantees their efficiency and enables the team to perform well in stressful situations.

Security Features in Kubernetes

The Kubernetes Architecture includes several built-in security features to protect both the Control plane and the applications running in a cluster. This section will elaborate on these features to offer an understanding of how Kubernetes maintains a secure environment.

Role-based Access Control (RBAC): In Kubernetes, Role-based Access Control, commonly known as RBAC, is used to set permissions on who can access what within a cluster. It provides fine-grained control over the operations that can be performed, limiting the potential for unauthorised actions. The primary elements include Roles and RoleBindings, which work together to define and assign permissions to users or groups.
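How Roles and RoleBindings combine into an access decision can be sketched with a small lookup. This is a toy model, not the real authoriser: the role name `pod-reader`, the user `jane`, and the namespace `dev` are hypothetical, and only namespaced Roles are modelled.

```python
# A Role lists which verbs are allowed on which resources.
ROLES = {
    "pod-reader": [{"verbs": {"get", "list", "watch"}, "resources": {"pods"}}],
}

# A RoleBinding grants a Role to subjects within one namespace.
ROLE_BINDINGS = [
    {"namespace": "dev", "role": "pod-reader", "subjects": {"jane"}},
]


def is_allowed(user, verb, resource, namespace):
    """Return True if any bound Role permits the requested action."""
    for binding in ROLE_BINDINGS:
        if binding["namespace"] != namespace or user not in binding["subjects"]:
            continue
        for rule in ROLES[binding["role"]]:
            if verb in rule["verbs"] and resource in rule["resources"]:
                return True
    return False   # permissions are additive, so no match means deny


print(is_allowed("jane", "list", "pods", "dev"))
print(is_allowed("jane", "delete", "pods", "dev"))
```

Because there are no deny rules, tightening access always means removing a binding or narrowing a Role.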

Security Contexts: Security contexts are another essential feature for maintaining cluster security. They define privilege and access control settings for Pods and containers. This includes settings like running a container as a non-root user or limiting the system calls that the container can make. Security contexts can be defined at the Pod level or for individual containers, offering flexibility in how you apply security policies.
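A hardened Pod specification might look like the sketch below, written as a Python dictionary. The `securityContext` field names are real Kubernetes API fields; the Pod name, image, and user ID are placeholders, and the `hardening_gaps` helper is a hypothetical check added for illustration.

```python
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "hardened-app"},           # placeholder name
    "spec": {
        # Pod-level settings apply to every container unless overridden.
        "securityContext": {"runAsNonRoot": True, "runAsUser": 10001},
        "containers": [{
            "name": "app",
            "image": "example/app:1.0",             # placeholder image
            # Container-level settings refine the Pod-level defaults.
            "securityContext": {
                "allowPrivilegeEscalation": False,
                "readOnlyRootFilesystem": True,
                "capabilities": {"drop": ["ALL"]},
                "seccompProfile": {"type": "RuntimeDefault"},
            },
        }],
    },
}


def hardening_gaps(pod):
    """List containers that may run as root or have a writable root filesystem."""
    gaps = []
    pod_ctx = pod["spec"].get("securityContext", {})
    for container in pod["spec"]["containers"]:
        ctx = container.get("securityContext", {})
        # A container-level value takes precedence over the Pod-level one.
        non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False))
        if not non_root:
            gaps.append(f"{container['name']}: may run as root")
        if not ctx.get("readOnlyRootFilesystem", False):
            gaps.append(f"{container['name']}: writable root filesystem")
    return gaps


print(hardening_gaps(pod))
```

A check like this could run in a CI pipeline before manifests are applied, so that insecure defaults are caught early.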

Network Policies: We touched on network policies under networking, but they are worth mentioning again in the context of security. Network policies are essentially firewall rules for your cluster. They allow you to define which Pods can communicate among each other and with external systems, providing a crucial layer of security against unauthorised or malicious network access.

Secrets Management: Sensitive data like passwords, tokens, or keys should never be stored openly. Kubernetes offers a Secrets object to keep such information out of container images and application code. Secrets can be mounted as data volumes or exposed as environment variables to the containers, thus avoiding hardcoding of sensitive information. Note that Secret values are only base64-encoded by default, so enabling encryption at rest and restricting access with RBAC is recommended.
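The structure of a Secret can be sketched as follows. Values under the `data` field of a Secret manifest are base64-encoded, which is an encoding rather than encryption. The secret name `db-credentials` and the password value are placeholders, and the two helper functions are hypothetical.

```python
import base64


def build_secret(name, values):
    """Return an Opaque Secret manifest with base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name},
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def read_secret(secret, key):
    """Decode one value, as any reader of the Secret object could."""
    return base64.b64decode(secret["data"][key]).decode("utf-8")


# Placeholder name and value for illustration only.
secret = build_secret("db-credentials", {"password": "s3cr3t"})
print(secret["data"]["password"])
print(read_secret(secret, "password"))
```

Since decoding needs no key, anyone who can read the Secret object can recover the value, which is why access to Secrets should be tightly restricted.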

API Server Authentication and Authorisation: The API server acts as the front door to any Kubernetes cluster. Ensuring secure access to the API server is critical for the safety of the cluster. Kubernetes provides various ways to authenticate and authorise users, ranging from simple static token files to more complex systems like OpenID Connect.

What are Kubernetes Architecture Best Practices?

Best practices call for a platform strategy that covers security, governance, monitoring, storage, networking, container lifecycle management, and orchestration with tools like Kubernetes.

Here are some of the suggested best practices for architecting Kubernetes clusters:

a) Run an up-to-date, supported Kubernetes version

b) Invest in upfront training for developer and operations teams

c) Establish enterprise-wide governance

d) Ensure alignment and integration of tools and vendors with Kubernetes orchestration

e) Improve security by integrating image-scanning processes in CI/CD, scanning during build and run phases

f) Exercise caution with open-source code from GitHub repositories

g) Adopt RBAC across the entire cluster

h) Uphold the principles of least privilege and zero-trust models

i) Secure containers by allowing only non-root users and configuring the file system as read-only

j) Avoid default values to enhance clarity and reduce the risk of errors

k) Prioritise small container sizes for faster builds, reduced disk space usage, and quicker image pulls

l) When uncertain, allow a crash to occur

m) Automate the CI/CD pipeline to avoid manual deployments to Kubernetes

Conclusion

Kubernetes Architecture serves as the foundation for contemporary containerised applications, providing scalability, resilience, and effective management. By fully understanding its parts, you can enhance workloads, guarantee high availability, and streamline operations. Comprehending the architecture of Kubernetes enables the creation of resilient, adaptable, and future-proof systems. This ensures your success in today's changing tech environment.

Frequently Asked Questions

What is Kubernetes Storage Architecture?

Kubernetes Storage Architecture is responsible for overseeing the storage and access of data within a cluster. Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) are used to separate storage from Pods, enabling adaptable, expandable, and durable data storage that can outlive individual Pods.

What is Kubernetes primarily used for?

Kubernetes is primarily used to automate the deployment, scaling, and management of containerised applications. It coordinates containers on a group of machines, ensuring optimal use of resources, availability, and simplified management, allowing developers to easily handle intricate applications.
