We may not have the course you’re looking for. If you enquire or give us a call on +44 1344 203 999 and speak to our training experts, we may still be able to help with your training requirements.

Training Outcomes Within Your Budget!

We ensure quality, budget-alignment, and timely delivery by our expert instructors.

Imagine your computer as a bustling city with countless tasks vying for attention. Each process, like a busy citizen, wants its turn to run on the CPU. But how does the OS decide who gets the spotlight next? That’s where CPU Scheduling in OS steps in – the conductor of this digital orchestra!

This blog unveils how CPU Scheduling in OS occurs. Read on and learn how the various scheduling algorithms keep your system's productivity going!

Table of Content

1) What is CPU Scheduling?

2) CPU Scheduling Types

3) CPU Scheduling Key Factors

4) CPU Scheduling Algorithm Types

5) Various CPU Scheduling Algorithms Comparison

6) Conclusion

What is CPU Scheduling?

CPU Scheduling is a crucial process in Operating Systems that helps determine which process will use the CPU while another process is on hold. The main goal of CPU Scheduling is to ensure that the CPU is utilised efficiently, making the system faster and fairer.

CPU Scheduling Types

There are four conditions under which CPU Scheduling decisions are taken:

1) If a process is switching between the running state and the waiting state.

2) If a process is switching from the running state to the ready state (on the occurrence of an interrupt, for example)

3) If a process is switching between waiting and ready states (e.g. when its I/O request is completed)

4) If a process terminates upon completion of execution.

Now we explore the two categories of CPU Scheduling: Non-preemptive and Pre-emptive scheduling.

1) Non-Preemptive Scheduling

In Non-Preemptive Scheduling, new processes are executed only after the current process has been completed. The process holds the CPU's resources (CPU time) until its state is terminated or pushed to the process waiting state. If the CPU is currently executing a process, it is only interrupted once it is completed.

Once the process has completed its execution, the processer picks the following process from the ready queue (the queue in which all processes prepared for execution are stored).

Here’s an example:

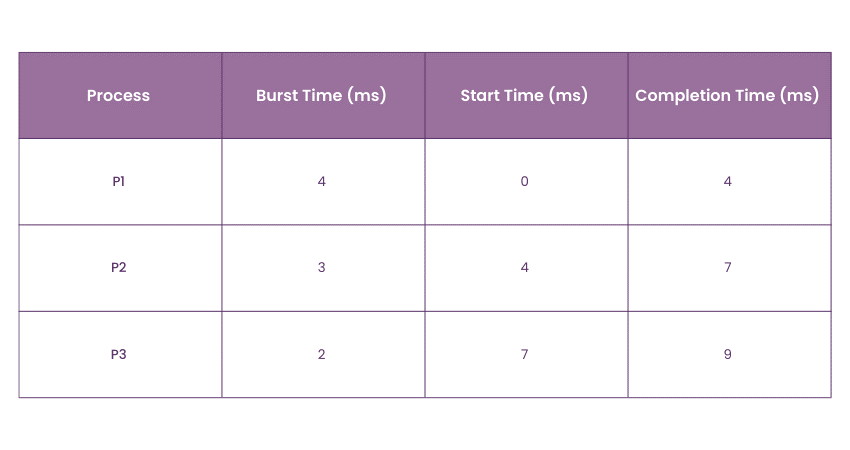

Consider three processes:

a) P1 with a burst time of 4 ms

b) P2 with a burst time of 3 ms

c) P3 with a burst time of 2 ms

Assume all processes arrive at the same time. Using the First-Come, First-Served (FCFS) Non-Preemptive Scheduling algorithm, the CPU executes them in the order they arrive:

a) P1 starts and runs for 4 ms.

b) P2 starts immediately after P1 and runs for 3 ms.

c) P3 starts after P2 and runs for 2 ms.

In this table:

a) Start Time is when the process begins executing.

b) Completion Time is when the process finishes execution.

Each process runs to completion before the next one starts.

2) Preemptive Scheduling

Preemptive Scheduling considers that some processes may have a higher priority and, hence, must be executed before the processes that have a lower priority. In this form of scheduling, CPU resource is allocated to a process for only a limited period. Then, those resources are taken back and assigned to another process (the next in execution).

If the process has yet to complete its execution, it is placed back in the ready state, where it will remain until it gets a chance to execute once again. When we examine the conditions that dictate CPU Scheduling decisions, we find predictability in process selection. For conditions 1 and 4, if a process is in the ready queue, it must be chosen for execution.

Let's explore this type of CPU Scheduling with an example:

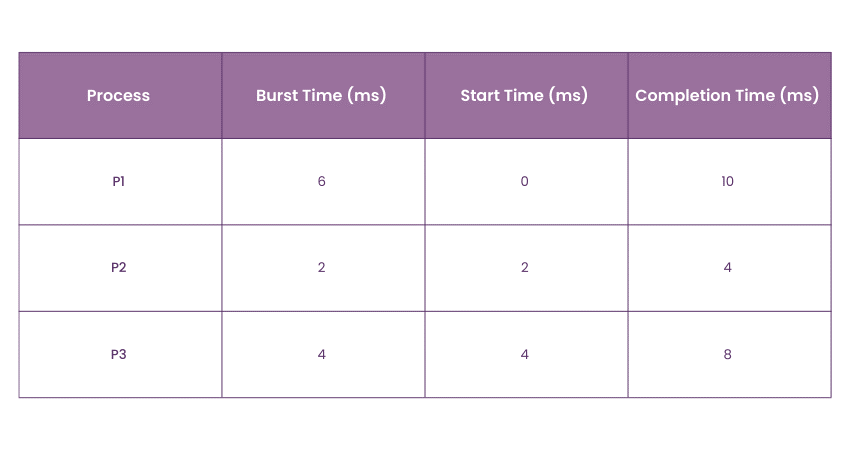

Consider three processes:

a) P1 with a burst time of 6 ms

b) P2 with a burst time of 2 ms

c) P3 with a burst time of 4 ms

Assume all processes arrive at the same time, and the system uses the Shortest Remaining Time First (SRTF) Preemptive Scheduling algorithm.

a) P1 begins execution but gets interrupted after 2 ms by P2 (which has a shorter burst time).

b) P2 runs to completion (2 ms).

c) P3 arrives and preempts P1 because P3 has a shorter remaining time (4 ms).

d) P3 runs to completion (4 ms).

e) P1 resumes and finishes its remaining 4 ms.

In this table, processes can be preempted and resumed based on the scheduling algorithm's decision.

Gain deeper insight into the installation of OS and service packs in our comprehensive Computer Hardware Troubleshooting Course - Sign up now!

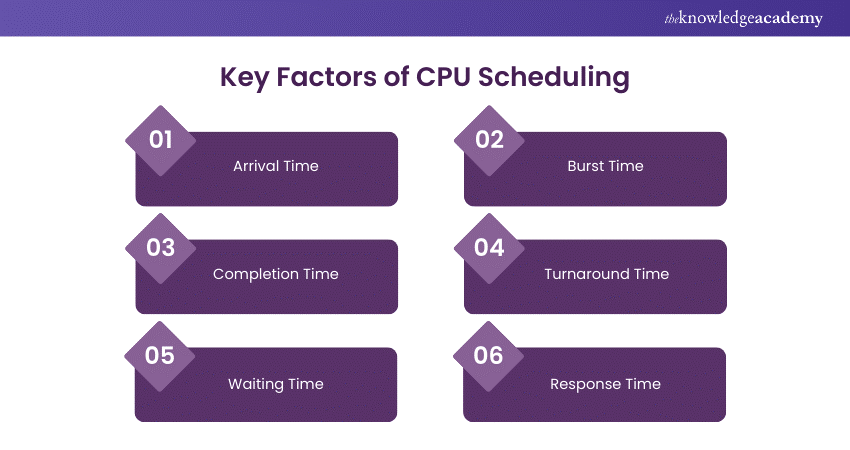

CPU Scheduling Key Factors

Now that we've explored how, as a crucial feature of OS, CPU Scheduling governs processor time among the numerous tasks running on a computer, let's dive into its key concepts

1) Arrival Time

In CPU Scheduling, the arrival time is that moment in time when a process enters the ready queue and awaits execution by the CPU. In other words, it's the point at which a process becomes eligible for scheduling. Many CPU Scheduling algorithms consider arrival time when selecting the following process for execution.

A scheduler, for example, may favour earlier arrival timings over those with later arrival times to reduce the waiting time for a process in the ready queue. Hence, it can assist in ensuring the efficient execution of processes.

2) Burst Time

Burst time, also known as 'execution time', is simply the amount of CPU time a process needs to complete its execution. It's the time a process spends on the CPU to perform a specific task or unit of a job. The complexity of the task, the efficiency of the code, and the system’s resources all play a role in determining the burst time of a process.

Burst time is also an essential factor in CPU Scheduling. A scheduler, for example, may favour processes with shorter burst durations over those with longer burst times. This will help reduce the time a process spends operating on the CPU and ensure that the system can make optimum use of the processor’s resources.

3) Completion Time

Completion time is when a process finishes execution and is no longer processed by the CPU. It's an essential metric in CPU Scheduling, as it can help determine the scheduling algorithm's efficiency and the process's waiting time. It's the summation of the arrival, waiting, and burst times. For example, a scheduling algorithm that consistently results in shorter process completion times is considered more efficient than one with longer completion times.

4) Turnaround Time

The time elapsed between a process's arrival and its completion is known as turnaround time. That is, the duration it takes for a process to complete its execution and leave the system.

Turnaround Time = Completion Time – Arrival Time

A scheduling algorithm that consistently produces shorter turnaround times for processes is considered more efficient than one with longer turnaround times.

5) Waiting Time

This is the duration of a process in the ready queue before it begins executing. It helps assess the scheduling algorithm's efficiency. For example, a scheduling method that consistently results in reduced wait times for processes is considered more efficient than one that regularly results in longer wait times.

Waiting Time = Turnaround Time – Burst Time

Additionally, waiting time plays a big role in measuring a scheduling algorithm's efficiency and determining a system’s perceived responsiveness to user queries. A long wait time can significantly contribute to a negative user experience, as users may perceive the system as slow to respond to their requests.

6) Response Time

Response time is the amount of time it takes for the CPU to respond to a process's request. It's the duration between a process's arrival and its first run and an essential parameter in CPU Scheduling since it may assist in determining a system’s perceived responsiveness to user requests.

Response time = Time it started executing – Arrival time

The number of processes waiting in the ready queue, their priority, and the features of the scheduling algorithm all impact response time. For example, a scheduling algorithm that favours processes with shorter burst times may result in quicker response times for those processes.

CPU Scheduling Algorithm Types

Now that you've explored the two main CPU Scheduling methods (Preemptive and Non-preemptive), let's look at the various types of CPU Scheduling Algorithms

1) First Come, First Serve

First Come, First Serve (FCFS) is the most straightforward Operating System scheduling algorithm. This algorithm states that the process that requests the CPU first is allocated the CPU first and is implemented using a FIFO queue.

Here are some characteristics of FCFS:

a) FCFS supports non-preemptive and preemptive CPU Scheduling.

b) Tasks are always executed using a first-come, first-serve concept.

c) FCFS is easy to implement and use.

d) This algorithm could perform more efficiently, and the wait time is relatively high.

2) Shortest Job First (SJF)

Shortest Job First (SJF) is a scheduling process that picks the waiting process with the shortest execution time to execute next. This scheduling method may or may not be preemptive and significantly reduces the average waiting time for other processes waiting to be executed.

Characteristics of SJF include:

a) Shortest Job First has the advantage of having a minimum average waiting time among all Operating System scheduling algorithms.

b) It is associated with each task as a unit of time to complete.

c) If shorter processes keep coming, it may cause starvation. The concept of ageing can solve this problem.

3) Longest Job First (LJF)

The Longest Job First (LJF) scheduling process is just the opposite of the Shortest Job First (SJF). As the name suggests, this algorithm is based on the fact that the process with the largest burst time is processed first and is non-preemptive in nature.

Characteristics of LJF include:

a) The CPU is always assigned to the process with the most considerable burst time among all the processes waiting in a waiting queue.

b) If two processes have the same burst time, the tie is broken using FCFS, i.e., the arrived process is processed first.

c) LJF CPU Scheduling can be of both preemptive and non-preemptive types.

Gain expertise on the latest industry-standard technologies and tools with our Engineering Skills Training - Register now!

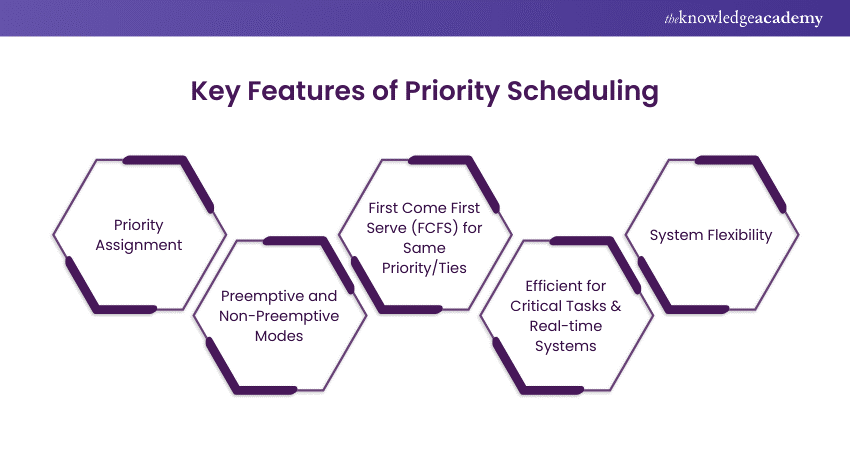

4) Priority Scheduling

A preemptive priority CPU Scheduling algorithm is a preemptive method of CPU Scheduling that works based on the priority of a process. In this case, the editor sets the functions as necessary, meaning the most essential process must be done first. In case of conflict, where there is more than one process with equal value, the most crucial CPU planning algorithm works based on the FCFS (First Come, First Serve) algorithm.

Key characteristics of priority scheduling include:

a) Schedules tasks based on priority.

b) When the higher-priority work arrives, and a task with less priority is executed, the higher-priority process will replace the less-priority process.

c) The later is suspended until the execution is complete.

d) The lower the number assigned, the higher the priority level of a process.

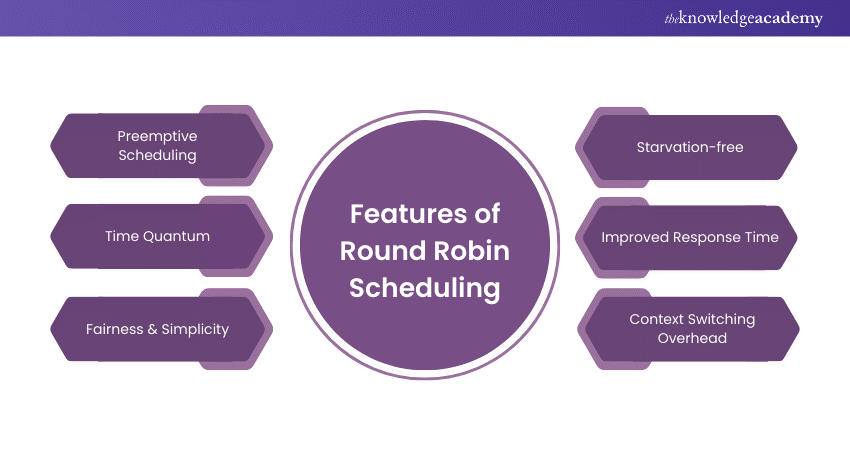

5) Round Robin

Round Robin is a scheduling algorithm in which each process is cyclically assigned a fixed time slot. It's the preemptive version of the FCFS CPU Scheduling algorithm and generally focuses on time sharing technique.

Characteristics of Round robin include:

a) It’s simple, easy to use, and starvation-free, as all processes get the balanced CPU allocation.

b) One of the most widely used methods in CPU Scheduling.

c) It’s considered preemptive as the processes are given to the CPU for a minimal time.

d) Round robin seems fair as every process gets an equal share of CPU.

e) The newly created process gets added to the end of the ready queue.

6) Shortest Remaining Time First(SRTF)

The Shortest Remaining Time First (SRTF) is the preemptive version of the Shortest Job First (SJF). This algorithm allocates the processor to the job closest to completion. So, in SRTF, the process with the shortest remaining time is selected to execute until completion.

Characteristics of SRTF include:

a) The SRTF algorithm processes jobs faster than the SJF algorithm.

b) By continually selecting the process closest to completion, SRTF ensures efficient use of CPU time.

c) It continuously re-evaluates the remaining burst time of each process.

7) Longest Remaining Time First(LRTF)

The Longest Remaining Time First (LRTF) is a preemptive version of the LJF scheduling algorithm. The Operating System uses this algorithm to program incoming processes for systematic use. It schedules those processes first, with the longest processing time remaining for completion.

Characteristics of LRTF include:

a) The CPU is always assigned to the process with the most considerable burst time among all the processes waiting in a waiting queue.

b) If two processes have the same burst time, the tie is broken using FCFS, i.e., the process that arrived first is processed.

c) LRTF CPU Scheduling can be both preemptive and non-preemptive.

d) This is the only process to execute once the longest task is completed.

e) All the jobs or processes finish at approximately the same time.

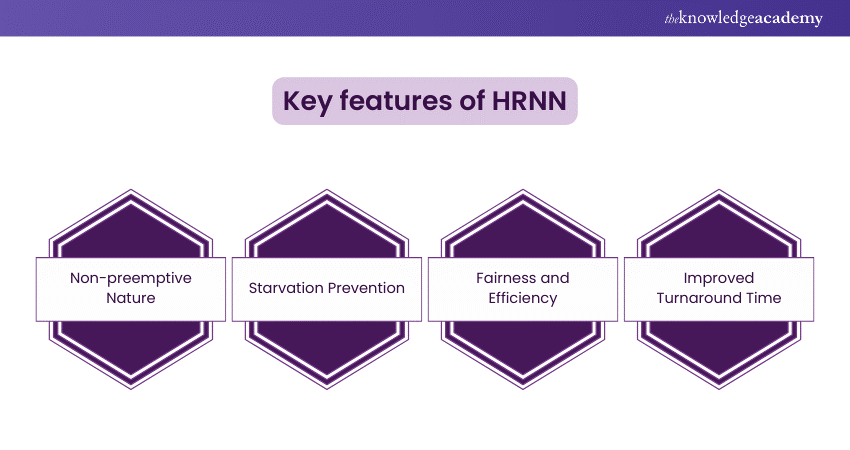

8) Highest Response Ratio Next (HRRN)

Highest Response Ratio Next (HRRN) is a non-preemptive CPU Scheduling algorithm recognised as one of the most optimal. It prioritises processes by calculating the response ratio for all available methods and selecting the highest ratio. Once chosen, the process runs to completion.

Characteristics of HRRN include:

a) The criteria for HRRN is Response Ratio, and the mode is non-preemptive.

b) HRRN is considered to be the modification of Shortest Job First to reduce the problem of starvation.

c) During the HRRN scheduling algorithm, the CPU is allotted to the next process with the highest response ratio and not to the process with the shortest burst time.

Gain insight into the importance of database systems in applications through our Introduction to Database Training – Sign up now!

9) Multiple Queue Scheduling

Multiple Queue Scheduling divides processes into different queues based on their characteristics or priority levels. Each queue can have its scheduling algorithm, and processes are managed within their respective queues. This approach allows for flexible and efficient handling of different types of processes.

Key features of Multiple Queue Scheduling include:

a) Processes are divided into multiple queues, each with a different priority level. For example, interactive processes might be placed in a high-priority queue, while batch processes might be placed in a lower-priority queue.

b) Once a process is assigned to a queue, it remains in that queue for its entire execution.

c) Each queue can have its own scheduling algorithm. For instance, a high-priority queue might use round-robin scheduling, while a lower-priority queue might use First Come, First Serve (FCFS) scheduling.

d) Higher-priority queues can preempt processes in lower-priority queues, ensuring that high-priority processes are executed promptly.

10) Multilevel Feedback Queue Scheduling (MLFQ)

Multilevel Feedback Queue Scheduling (MLFQ) CPU Scheduling is like Multilevel Queue Scheduling with the added benefit of moving between the queues in this process. Thus, it is much more efficient.

Key features of MLFQ include:

a) A process's priority can be adjusted dynamically. For example, if a process uses a lot of CPU time, its priority might be lowered, while processes that wait for a long time might increase their priority.

b) Each queue is assigned a time quantum, which determines how much CPU time a process in that queue can use before being preempted and possibly moved to a lower-priority queue.

c) MLFQ uses a feedback mechanism to adjust processes' priorities based on their behaviour over time. This helps balance the load and ensures that no process is starved of CPU time.

d) Higher-priority processes can preempt lower-priority ones, ensuring critical tasks promptly get the CPU time they need.

e) By moving processes that wait too long in lower-priority queues to higher-priority queues, MLFQ helps prevent starvation.

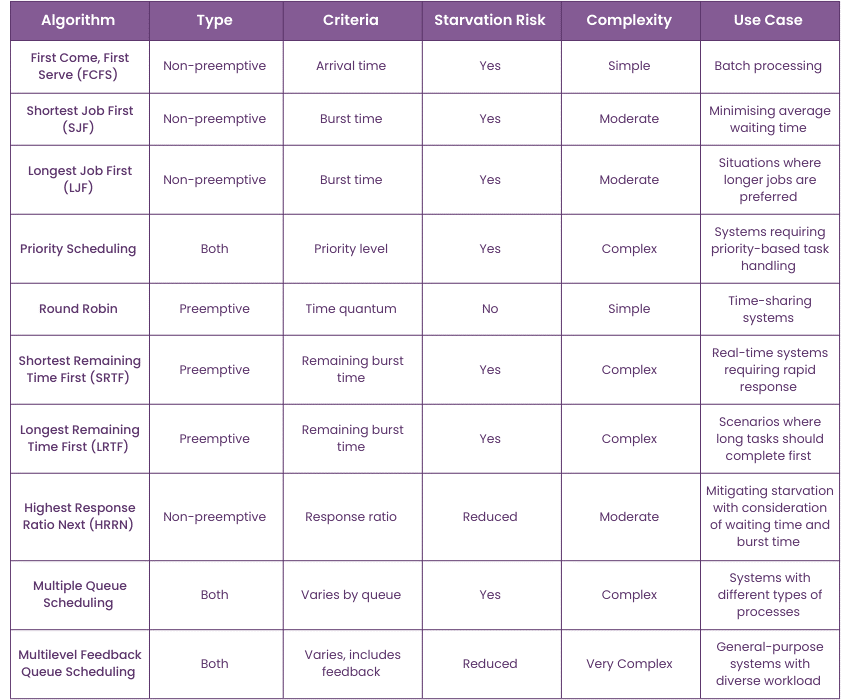

Various CPU Scheduling Algorithms Comparison

This table summarises the differences and similarities between the various CPU Scheduling algorithms discussed above:

Conclusion

In conclusion, CPU Scheduling is the backbone of efficient Operating Systems. It ensures optimal performance through various algorithms tailored to diverse needs. By understanding the mechanisms associated with CPU Scheduling in OS, you will gain insights into how multitasking, process prioritisation, and resource management work seamlessly behind the scenes.

Enhance your skills is developing user-centric interfaces with our comprehensive UX Design Course – Sign up now!

Frequently Asked Questions

CPU Scheduling is required in OS because it allows one process to use the CPU while another process is delayed due to unavailability of resources. This makes the system faster, more efficient, and fairer.

The four circumstances of CPU Scheduling are:

a) A process switches from the running to the waiting state.

b) A process switches from the running state to the ready state.

c) A process switches from the waiting state to the ready state.

d) A process concludes its execution and terminates.

The Knowledge Academy takes global learning to new heights, offering over 30,000 online courses across 490+ locations in 220 countries. This expansive reach ensures accessibility and convenience for learners worldwide.

Alongside our diverse Online Course Catalogue, encompassing 19 major categories, we go the extra mile by providing a plethora of free educational Online Resources like News updates, Blogs, videos, webinars, and interview questions. Tailoring learning experiences further, professionals can maximise value with customisable Course Bundles of TKA.

The Knowledge Academy’s Knowledge Pass, a prepaid voucher, adds another layer of flexibility, allowing course bookings over a 12-month period. Join us on a journey where education knows no bounds.

The Knowledge Academy offers various IT Support and Solution Training Courses, including the Computer Hardware Troubleshooting Course and the IT Fundamentals Course. These courses cater to different skill levels, providing comprehensive insights into What is Troubleshooting.

Our IT Infrastructure & Networking Blogs cover a range of topics related to Operating Systems, offering valuable resources, best practices, and industry insights. Whether you are a beginner or looking to advance your Operating System knowledge, The Knowledge Academy's diverse courses and informative blogs have got you covered.

Upcoming Programming & DevOps Resources Batches & Dates

Date

Computer Hardware Troubleshooting Course

Computer Hardware Troubleshooting Course

Fri 29th Nov 2024

Fri 27th Dec 2024

Fri 3rd Jan 2025

Fri 7th Mar 2025

Fri 2nd May 2025

Fri 4th Jul 2025

Fri 5th Sep 2025

Fri 7th Nov 2025

Halloween sale! Upto 40% off - 95 Vouchers Left

Halloween sale! Upto 40% off - 95 Vouchers Left

Top Rated Course

Top Rated Course

If you wish to make any changes to your course, please

If you wish to make any changes to your course, please