Big Data Processing is a way to deal with vast amounts of information that is too big and messy for regular computers to handle easily. It involves using special techniques and tools to clean up, organise, and make sense of all this data. Think of it as taking a big jumble of numbers, words, and other stuff and turning it into something useful.

With Big Data Processing, we can find important patterns, learn new things, and make better decisions based on this massive amount of information. In this blog, we explain what Big Data Processing is, why it matters, its stages, architectures, and tools, and its challenges and use cases. Keep reading to learn more!

Table of Contents

1) What is Big Data Processing?

2) Importance of Big Data Processing

3) Five Stages of Big Data Processing

4) Big Data Processing Architectures

5) Best Big Data Tools

6) Challenges in Big Data Processing

7) Use Cases of Big Data Processing

8) Conclusion

What is Big Data Processing?

Big Data Processing involves methods and frameworks for handling vast amounts of information and deriving valuable insights. It begins with data acquisition and cleaning to ensure quality. Once you have reliable data, you can use it for statistical analysis or develop Machine Learning models to make predictions.

Importance of Big Data Processing

Big Data is growing rapidly and shows no signs of slowing down. Organisations in many industries are discovering how valuable this data can be. To unlock that value, effective Big Data Processing is essential. In this section, we will look at why Big Data Processing is so important.

a) Informed Decision-Making: Big Data Processing empowers organisations to make data-driven decisions. By analysing vast amounts of data, businesses can gain insights into customer behaviour, market trends, and operational efficiency. This leads to informed decision-making and reduces the reliance on gut feeling and intuition.

b) Competitive Advantage: Organisations that effectively process Big Data gain a significant competitive advantage. They can respond quickly to market changes, identify new opportunities, and optimise their operations. This flexibility helps businesses stay ahead of the competition.

c) Personalisation: Big Data Processing allows companies to personalise their offerings. Whether it's tailoring product recommendations, creating personalised marketing campaigns, or providing customised services, Big Data enables businesses to meet the unique needs and preferences of their customers.

d) Improved Customer Experience: Understanding customer behaviour and preferences through Big Data Analytics helps improve the overall customer experience. Companies can address pain points, enhance user interfaces, and provide better support, leading to higher customer satisfaction and loyalty.

e) Cost Reduction: Big Data Processing can lead to cost savings. By analysing operational data, organisations can identify inefficiencies, reduce waste, and optimise resource allocation. This efficiency translates to lower operational costs.

f) Predictive Analytics: Organisations can use Big Data Processing for forecasting and risk assessment. For example, in finance, predictive models can help identify potential fraud. Meanwhile, in healthcare, they can predict disease outbreaks. Such insights are invaluable for risk mitigation and planning.

g) Innovation: Big Data Processing fosters innovation by uncovering new opportunities and trends. It enables organisations to explore uncharted territories, develop new products or services, and expand into new markets based on data-backed insights.

h) Scientific Discovery: Big Data Processing plays an important role in fields like healthcare and scientific research. Researchers can analyse massive datasets to make breakthroughs in genomics, drug discovery, climate modelling, and more.

i) Supply Chain Optimisation: Companies with complex supply chains can use Big Data Processing to optimise their logistics. Real-time data analysis helps track shipments, predict delays, and ensure goods are delivered efficiently and on time.

j) Security and Fraud Detection: Big Data Processing is essential for cybersecurity. It enables the identification of unusual patterns and potential security threats in real-time. By detecting anomalies, organisations can proactively mitigate risks and protect sensitive data.

Five Stages of Big Data Processing

Understanding the stages of Big Data Processing is crucial for effectively managing and analysing large datasets. Here are the five essential stages:

1) Data Extraction

In this initial step, data is collected from various sources such as enterprise applications, web pages, sensors, marketing tools, and transactional records. Professionals extract data from both unstructured and structured streams, merging and verifying information by removing inaccuracies. Accurate and labelled data sets a quantitative standard and goal for improvement, forming the foundation for future decisions.
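As a rough illustration of merging and verifying extracted data, the sketch below combines records from two sources and drops rows that fail a basic validity check. The source names, field names, and the validity rule are assumptions made for this example, not part of any particular tool.

```python
# Hypothetical records from two sources; field names are illustrative only.
crm_rows = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": ""},  # missing email: treated as inaccurate
]
web_rows = [
    {"customer_id": 1, "last_visit": "2024-05-01"},
    {"customer_id": 3, "last_visit": "2024-05-03"},
]


def is_valid(row):
    """Keep only rows that have an id and a plausible email address."""
    return row.get("customer_id") is not None and "@" in row.get("email", "")


def merge_sources(primary, secondary, key):
    """Merge secondary fields into valid primary rows that share the same key."""
    lookup = {row[key]: row for row in secondary}
    merged = []
    for row in primary:
        if not is_valid(row):
            continue  # drop inaccurate records during extraction
        merged.append({**row, **lookup.get(row[key], {})})
    return merged


clean_rows = merge_sources(crm_rows, web_rows, "customer_id")
print(clean_rows)
```

In a real pipeline the validity rules would be far richer, but the shape is the same: verify each record, then merge on a shared key.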

2) Data Transformation

Data transformation involves converting or modifying data into the formats required to build insights and visualisations. Techniques such as aggregation, normalisation, feature selection, binning, clustering, and concept hierarchy generation are used to transform unstructured data into structured data. These transformations make the data understandable to users. This stage enhances the efficiency of business and analytical operations, enabling firms to make better data-driven decisions.
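Three of the techniques named above (normalisation, binning, and aggregation) can be sketched in a few lines of plain Python. The order records, bin edges, and function names here are hypothetical and chosen only to keep the example small.

```python
from collections import defaultdict

# Hypothetical order records; the fields and values are illustrative only.
orders = [
    {"region": "north", "amount": 20.0},
    {"region": "north", "amount": 80.0},
    {"region": "south", "amount": 50.0},
]


def min_max_normalise(values):
    """Normalisation: rescale values to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def bin_amount(amount, edges=(25.0, 75.0)):
    """Binning: assign an amount to a coarse bucket."""
    if amount < edges[0]:
        return "low"
    if amount < edges[1]:
        return "medium"
    return "high"


def total_by_region(rows):
    """Aggregation: sum order amounts per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


amounts = [row["amount"] for row in orders]
normalised = min_max_normalise(amounts)
buckets = [bin_amount(a) for a in amounts]
totals = total_by_region(orders)
print(normalised, buckets, totals)
```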

3) Data Loading

In this stage, transformed data is loaded into a centralised database system. To streamline the process, the target database is indexed and constraints are removed (in practice, they are usually disabled temporarily and restored once the load completes). Big Data ETL (Extract, Transform, Load) tooling automates, standardises, and optimises the loading process, whether batch-driven or real-time.
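A minimal sketch of a batch load, using Python's built-in `sqlite3` module as a stand-in for a centralised database. The table name, column names, and rows are assumptions for illustration; a production warehouse would use its own bulk-loading mechanism.

```python
import sqlite3

# Hypothetical transformed rows: (region, total_amount).
rows = [("north", 100.0), ("south", 50.0), ("east", 75.5)]

# An in-memory database stands in for the central store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, total_amount REAL)")

# Batch load: all rows are inserted inside a single transaction.
with conn:
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)

# Index the table so later lookups by region are fast.
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")

loaded = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
print(loaded)
```

Creating the index after the bulk insert is a design choice of this sketch; whether indexes are built before or after loading depends on the database and the size of the load.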

4) Data Visualisation/BI Analytics

Data Analytics tools and methods enable firms to visualise large datasets and create dashboards, providing an overview of business operations. Business Intelligence (BI) Analytics answers key business growth and strategy questions through predictions and what-if analyses. These tools help stakeholders understand data patterns and correlations between attributes.

5) Machine Learning Application

This stage focuses on creating models that use learning algorithms to analyse large amounts of data quickly and that evolve as new input arrives. There are three main types of Machine Learning:

a) Supervised Learning: Uses labelled data for training models to predict outcomes, often used with historical data for future predictions.

b) Unsupervised Learning: Utilises unlabelled data and training algorithms to identify patterns without historical labels.

c) Reinforcement Learning: Algorithms learn to make decisions based on observations, guided by a reward function to encourage correct decisions.

Machine Learning in Big Data Processing automates pattern recognition and feature extraction in complex unstructured data without human intervention, making it a vital resource for Big Data research.
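To make the distinction between supervised and unsupervised learning concrete, here is a deliberately tiny sketch in plain Python. The first function learns from labelled pairs (a least-squares line fit); the second groups unlabelled values into two clusters (a one-dimensional k-means with k = 2). The data and function names are hypothetical, reinforcement learning is omitted for brevity, and real projects would normally use a library rather than hand-written code.

```python
from statistics import mean


def fit_line(xs, ys):
    """Supervised: least-squares slope and intercept from labelled (x, y) pairs."""
    x_bar, y_bar = mean(xs), mean(ys)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    slope = numerator / denominator
    return slope, y_bar - slope * x_bar


def two_means(values, iterations=10):
    """Unsupervised: split unlabelled 1-D values into two clusters (k-means, k=2)."""
    centres = [min(values), max(values)]
    for _ in range(iterations):
        groups = ([], [])
        for v in values:
            nearest = 0 if abs(v - centres[0]) <= abs(v - centres[1]) else 1
            groups[nearest].append(v)
        # Keep the old centre if a cluster ends up empty.
        centres = [mean(g) if g else c for g, c in zip(groups, centres)]
    return centres


slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
centres = two_means([1.0, 1.2, 0.8, 9.0, 9.5, 10.0])
print(slope, intercept, centres)
```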

Big Data Processing Architectures

The architecture of Big Data Processing is a structured framework designed to handle, process, and analyse large volumes of data efficiently. This architecture supports the five stages of Big Data Processing:

1) Data Extraction Layer

a) Sources: Data is collected from diverse sources such as enterprise applications, web pages, sensors, marketing tools, and transactional records.

b) Data Ingestion: Tools like Apache Flume, Kafka, and Sqoop are used to extract data from these sources. Data is ingested in real-time or in batches, ensuring a steady flow of information into the system.

2) Data Transformation Layer

a) Processing Engines: This layer involves transforming the extracted data into the required formats using processing engines like Apache Spark, Hadoop MapReduce, and Apache Beam.

b) Techniques: Transformation techniques such as aggregation, normalisation, feature selection, binning, clustering, and concept hierarchy generation are applied. These methods convert unstructured data into structured data, making it user-understandable and ready for analysis.

3) Data Storage Layer

a) Storage Solutions: Transformed data is stored in centralised databases or data warehouses. Popular storage solutions include Hadoop Distributed File System (HDFS), NoSQL databases like Cassandra and MongoDB, and cloud storage services like Amazon S3 and Google Cloud Storage.

b) Indexing and Optimisation: Before loading data, databases are indexed, and constraints are removed to improve efficiency. Relaxing constraints speeds up the load itself, while indexing ensures faster retrieval and query performance afterwards.

4) Data Visualisation and BI Analytics Layer

a) Visualisation Tools: Tools such as Tableau, Power BI, and QlikView are used to create dashboards and visualisations. These tools provide an overview of business operations, enabling stakeholders to understand data patterns and correlations.

b) BI Analytics: Business Intelligence (BI) tools perform what-if analyses and predictions, helping firms make informed decisions based on data insights.

5) Machine Learning and Advanced Analytics Layer

a) ML Models: This layer focuses on developing Machine Learning models using libraries and frameworks like TensorFlow, Scikit-learn, and PyTorch.

b) Types of Learning: Supervised learning, unsupervised learning, and reinforcement learning algorithms are employed to analyse data, recognise patterns, and make predictions. These models continuously evolve with new input, automating the analysis of large datasets without human intervention.

The Big Data Processing Architecture integrates various tools and techniques to manage, process, and analyse vast amounts of data. Each layer of the architecture plays a crucial role in ensuring that data is efficiently collected, transformed, stored, visualised, and analysed. This enables organisations to derive actionable insights and make data-driven decisions.
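The way the layers hand data to one another can be sketched as a chain of small functions. Everything here is a simplification: the sensor records are hard-coded, a Python list stands in for the storage layer, and the function names are hypothetical.

```python
def extract():
    """Extraction layer: return raw records (hard-coded here for illustration)."""
    return [
        {"sensor": "a", "reading": "21.5"},
        {"sensor": "b", "reading": "bad"},
        {"sensor": "a", "reading": "22.5"},
    ]


def transform(records):
    """Transformation layer: parse readings and drop those that fail to parse."""
    cleaned = []
    for record in records:
        try:
            value = float(record["reading"])
        except ValueError:
            continue
        cleaned.append({"sensor": record["sensor"], "reading": value})
    return cleaned


def store(records, storage):
    """Storage layer: append records to a list that stands in for a database."""
    storage.extend(records)
    return storage


def analyse(storage):
    """Analytics layer: compute the average reading per sensor."""
    sums, counts = {}, {}
    for record in storage:
        sums[record["sensor"]] = sums.get(record["sensor"], 0.0) + record["reading"]
        counts[record["sensor"]] = counts.get(record["sensor"], 0) + 1
    return {sensor: sums[sensor] / counts[sensor] for sensor in sums}


warehouse = []
report = analyse(store(transform(extract()), warehouse))
print(report)
```

Each function could be swapped for a real tool from the corresponding layer without changing the overall flow.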

Best Big Data Tools

In the world of Big Data, choosing the right tools is essential for efficient data processing, analysis, and visualisation. Here are six of the best tools that stand out for their capabilities and performance:

1) Apache Spark

Apache Spark is a high-speed Big Data Processing and Machine Learning Analytics Engine. It offers an easy-to-use API that handles large datasets efficiently, making it ideal for fast analytics queries. Spark also provides several libraries that support SQL queries, graph processing, and the development of Machine Learning models, enabling developers to create complex workflows more efficiently.

2) Hadoop

Apache Hadoop is a robust, fault-tolerant, open-source Big Data Processing platform developed by the Apache Software Foundation. Written in Java, Hadoop can manage any type of data, whether structured, semi-structured, or unstructured. It breaks tasks into small sub-tasks and distributes them across the data nodes in a Hadoop cluster, so each node processes a smaller portion of the data, which reduces network traffic.
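The idea of splitting a job into sub-tasks and combining their partial results can be illustrated with a word count in the map-and-reduce style. This sketch runs sequentially in a single Python process and does not use Hadoop's API; the text chunks and function names are hypothetical, and in a real cluster each chunk would be processed in parallel on the node that holds it.

```python
from collections import Counter
from functools import reduce

# Hypothetical input split into chunks, as if each lived on a different data node.
chunks = [
    "big data needs big tools",
    "data tools process data",
]


def map_chunk(text):
    """Map step: count words within one chunk only."""
    return Counter(text.split())


def merge_counts(left, right):
    """Reduce step: combine the partial counts from two chunks."""
    return left + right


partial_counts = [map_chunk(chunk) for chunk in chunks]
word_counts = reduce(merge_counts, partial_counts, Counter())
print(word_counts)
```

Only the small partial counts need to be combined at the end, which is why this style of processing keeps data movement low.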

3) ATLAS.ti

ATLAS.ti offers accessible research tools and top-tier technology for uncovering meaningful insights. It is widely used in academia, market research, and customer experience studies, supporting both qualitative and mixed-methods analysis.

4) HPCC

Developed by LexisNexis Risk Solutions, HPCC is a powerful Big Data Processing solution. It provides data processing services on a unified platform with a common structure and scripting languages, minimising the need for extensive programming. HPCC is known for its effectiveness in handling large-scale data processing tasks.

5) Apache Cassandra

Apache Cassandra is a highly scalable NoSQL database designed to manage large volumes of data efficiently. It is ideal for businesses that require high availability and cannot afford data loss during data centre outages. Cassandra scales horizontally by adding nodes to a cluster and replicating data across them. Its data model does not rely on joins, and its schema is more flexible than that of a traditional relational database.
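The general idea behind spreading data across nodes can be sketched by hashing a key to choose where a row lives. This is a simplified illustration only: it is not Cassandra's actual partitioner, and the node names, keys, and use of MD5 with a modulo are assumptions made for the example.

```python
import hashlib

nodes = ["node-a", "node-b", "node-c"]  # hypothetical cluster members


def node_for(partition_key, cluster):
    """Pick a node by hashing the key; the same key always maps to the same node."""
    digest = hashlib.md5(partition_key.encode("utf-8")).hexdigest()
    return cluster[int(digest, 16) % len(cluster)]


placement = {key: node_for(key, nodes) for key in ["user:1", "user:2", "user:3", "user:4"]}
print(placement)
```

Because placement depends only on the key, any node can work out where a row belongs without consulting a central coordinator. A plain modulo reshuffles most keys when the cluster size changes, which is one reason real systems use more elaborate schemes.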

6) Apache Storm

Apache Storm is a distributed computation system with a master-worker architecture (a Nimbus master node coordinating Supervisor worker nodes), well suited to real-time Data Analysis. It offers low latency, scalability, and ease of deployment, making it a popular tool for real-time intelligence. As an open-source platform, Storm is utilised by both small and large-scale businesses to process large volumes of data swiftly.
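Real-time processing means acting on each event as it arrives rather than waiting for a complete batch. The sketch below shows that pattern with a rolling average over a stream; it uses a plain Python generator, not Storm's API, and the event values and window size are hypothetical.

```python
from collections import deque


def rolling_average(stream, window_size):
    """Yield the average of the most recent `window_size` events as each arrives."""
    window = deque(maxlen=window_size)  # old events fall out automatically
    for value in stream:
        window.append(value)
        yield sum(window) / len(window)


events = iter([10, 20, 30, 40])  # stands in for an unbounded event stream
averages = list(rolling_average(events, window_size=2))
print(averages)
```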

Challenges in Big Data Processing

Big Data Processing comes with several challenges that can make it tricky to work with all that massive data. Let's break down these challenges in simple terms:

a) Volume: Big Data means lots and lots of data, way more than regular computers can handle easily. Managing such huge amounts of data is a big challenge.

b) Velocity: Data comes in fast, like a speedy train. Processing it quickly so you can use it is a challenge. Think of it like trying to catch a fast-flying ball.

c) Variety: Data comes in different forms - text, numbers, images, videos, and more. Making sense of all these different types is like solving a puzzle with pieces from different games.

d) Veracity: Data may not be very trustworthy at times, as it might have errors or be inconsistent. Dealing with unreliable data is a bit like trying to read a book with missing pages.

e) Complexity: Big Data often involves lots of data sources and systems. Keeping everything working smoothly together is like juggling many balls at once without dropping any.

f) Privacy and Security: Big Data often includes sensitive information. Protecting this data from hackers and making sure it's used responsibly is a big concern, like keeping your secrets safe from prying eyes.

g) Costs: Storing and processing Big Data can be expensive. Balancing the need for power with the budget can be like trying to keep your house warm without burning too much money on heating.

h) Scalability: As data grows, systems need to grow too. Ensuring that everything can grow smoothly without major disruptions is a bit like expanding a house while still living in it.

i) Skill Gap: Working with Big Data requires specialised skills. Finding people who understand Big Data can be tricky.

j) Legal and Ethical Concerns: There are rules about how data can be used, and they vary by place. Complying with these rules and making sure you're doing the right thing can be a bit like following the rules of a game.

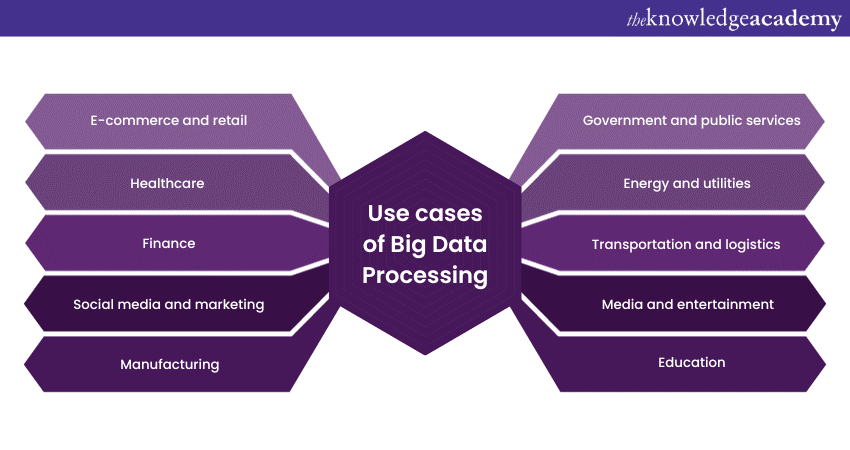

Use Cases of Big Data Processing

Big Data Processing involves the careful analysis of vast and complex datasets to extract valuable insights and drive informed decision-making. Here, we explore ten key use cases of Big Data Processing, showcasing how organisations use its power to revolutionise their operations and provide greater value to their stakeholders.

a) E-commerce and Retail: In the world of online shopping, Big Data helps companies like Amazon recommend products that you might like based on your browsing and purchase history. It also ensures that your favourite products are always in stock.

b) Healthcare: Big Data plays an important role in predicting disease outbreaks like the flu by analysing data from healthcare providers. It also helps pharmaceutical companies speed up the development of new drugs.

c) Finance: Banks use Big Data to protect your money by detecting fraudulent transactions as they happen. They also analyse vast amounts of data to make smart decisions about loans and investments.

d) Social Media and Marketing: Social media platforms utilise Big Data to understand how you feel about the content you see and to show you ads that are relevant to your interests.

e) Manufacturing: Manufacturers use Big Data to make sure their machines are always working efficiently. They can predict when a machine might break down, reducing costly downtime.

f) Government and Public Services: Governments analyse data to allocate resources effectively. For example, they can use Big Data to decide where to send emergency services during a natural disaster.

g) Energy and Utilities: Companies that provide electricity and water use Big Data to manage their resources better. They can also help consumers save energy by analysing their usage patterns.

h) Transportation and Logistics: Companies like FedEx use Big Data to plan the most efficient delivery routes. This not only saves time and money but also reduces emissions.

i) Media and Entertainment: Streaming services like Netflix use Big Data to recommend movies and TV shows you'll enjoy. Advertisers also use it to show you ads that are more likely to catch your attention.

j) Education: Big Data is used in education to identify how students are performing and where they might need help. This allows educators to provide personalised support and improve learning outcomes.
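As one small illustration of the finance use case, unusual transactions can be flagged by measuring how far each amount sits from the average. This z-score rule is only a sketch of the general idea, not how any particular bank detects fraud; the transaction amounts and the threshold of 2.0 are assumptions chosen for the example.

```python
from statistics import mean, stdev


def flag_outliers(amounts, threshold=2.0):
    """Return amounts whose z-score (distance from the mean in standard deviations) exceeds the threshold."""
    mu, sigma = mean(amounts), stdev(amounts)
    if sigma == 0:
        return []  # all amounts identical: nothing stands out
    return [a for a in amounts if abs(a - mu) / sigma > threshold]


transactions = [20, 22, 19, 21, 23, 20, 18, 22, 21, 500]
suspicious = flag_outliers(transactions)
print(suspicious)
```

Production systems typically combine many more signals, such as location, merchant, and timing, but the principle of comparing each event against an expected pattern is the same.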

Conclusion

Big Data Processing is the engine driving data-driven decision-making and innovation across industries. By cleaning, organising, and extracting valuable insights from massive and complex datasets, Big Data Processing empowers organisations to enhance customer experiences. It also improves operations and helps detect fraud, predict outcomes, and make informed choices.

Frequently Asked Questions

Why is processing Big Data essential?

Processing Big Data is essential to extract valuable insights from vast amounts of information. It enables businesses to make data-driven decisions, uncover patterns and trends, improve operational efficiency, and enhance customer experiences, ultimately driving innovation and competitive advantage.

What are the advantages of Big Data Processing?

Big Data Processing offers numerous advantages, including improved decision-making through data-driven insights, enhanced operational efficiency, the ability to predict trends and behaviours, personalised customer experiences, and competitive advantage. It also facilitates innovation and helps identify new business opportunities.
