
Hadoop, an open-source framework, has revolutionised the way we store and process Big Data. Hadoop Tools are the software applications and frameworks built around it to store, process, manage, and analyse vast volumes of data efficiently. In this blog, we will delve into the top 10 Hadoop Tools you should know about and the benefits they provide. Read more to find out!

Table of Contents

1) What is Hadoop?

a) What are Hadoop Tools?

2) Advantages of using Hadoop Tools

3) Top 10 Hadoop Tools

a) Hadoop Distributed File System (HDFS)

b) Data ingestion tools

c) Data processing tools

d) Data storage and management tools

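e) Workflow and job scheduling tools

f) Cluster management tools

g) Real-time data processing tools

h) Data analysis and visualisation tools

i) Security and access control tools

j) Data serialisation tools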

4) Conclusion

What is Hadoop?

Hadoop is an open-source Java-based framework designed for the storage and processing of large data sets. It leverages distributed storage and parallel processing to manage big data and analytics tasks by breaking them into smaller, concurrent workloads. The core Hadoop framework consists of four modules:

a) Hadoop Distributed File System (HDFS): A distributed file system that stores data on the cluster's nodes so that computation can run where the data lives (data locality), reducing network traffic and providing high-throughput data access without predefined schemas.

b) Yet Another Resource Negotiator (YARN): Manages cluster resources and schedules applications, handling resource allocation and job scheduling.

c) MapReduce: A programming model for large-scale data processing that divides tasks into subsets processed in parallel, then combines the results into a cohesive dataset.

d) Hadoop Common: Provides the libraries and utilities shared across Hadoop modules.

The Hadoop ecosystem includes additional tools like Apache Pig, Apache Hive, Apache HBase, Apache Spark, Presto, and Apache Zeppelin for comprehensive big data management and analysis.

What are Hadoop Tools?

Hadoop tools are various software components that complement the core Hadoop framework, enhancing its ability to handle big data tasks. These tools assist in data collection, storage, processing, analysis, and management. They are crucial because they extend Hadoop's functionality, allowing for more complex and efficient data workflows.

By enabling advanced analytics, streamlined data integration, and real-time processing, these tools help organisations harness the full potential of their big data. This comprehensive ecosystem supports diverse data needs, making Hadoop a versatile and powerful platform for big data solutions.

Advantages of using Hadoop Tools

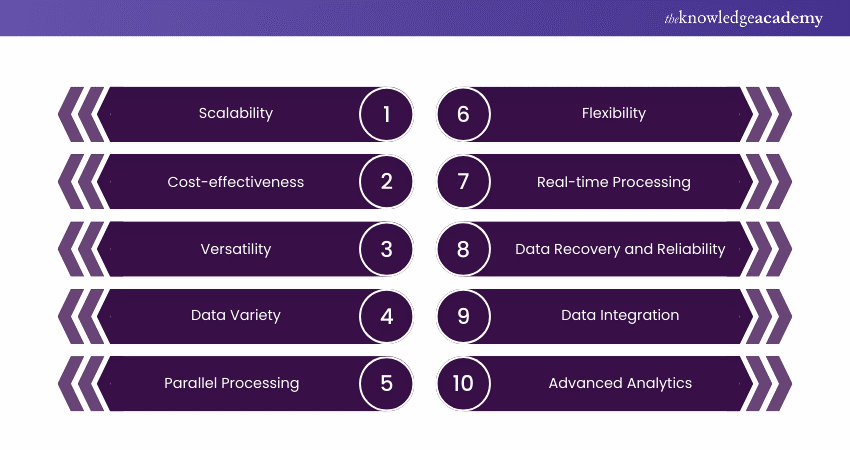

Using Hadoop Tools offers a multitude of benefits that significantly enhance the way organisations manage, process, and gain insights from Big Data. Here are some key advantages of incorporating these tools into your data management and analysis workflows:

a) Scalability: Hadoop tools are designed to tackle Big Data scalability challenges. By distributing data across a cluster of nodes, they let storage and processing capacity grow with data volumes, and adding more nodes to the cluster helps maintain performance and efficiency even with massive datasets.

b) Cost-effectiveness: Hadoop's open-source nature and compatibility with commodity hardware make it a cost-effective solution for Big Data management. Organisations can avoid the high costs of proprietary software and hardware, making it affordable to store and process large volumes of data. This affordability democratises access to powerful data management and analysis capabilities.

c) Versatility: The Hadoop ecosystem offers a wide range of tools covering various data processing and analysis aspects. From data ingestion using Apache Sqoop and Apache Flume to processing with Hadoop MapReduce and Apache Spark, and analytics with machine learning libraries, this versatility allows users to choose tools that meet their specific needs at different stages of the data pipeline.

d) Data Variety: In the era of Big Data, information comes in various forms: structured data from databases, semi-structured data such as JSON and XML, and unstructured data such as text and multimedia. Hadoop tools can efficiently handle all these data types, enabling organisations to derive insights from diverse sources and gain a comprehensive view of their data.

e) Parallel Processing: Hadoop tools leverage parallel processing, breaking down tasks into smaller sub-tasks that can be executed simultaneously across different nodes in the cluster. This significantly speeds up data processing, allowing for quick analysis of large datasets. Hadoop MapReduce and Apache Spark are prime examples of tools that utilise parallel processing.

f) Flexibility: Hadoop tools embrace the schema-on-read concept, meaning data doesn't need to be rigidly structured before ingestion. This flexibility allows data scientists and analysts to experiment with different analyses and queries without requiring data restructuring upfront, leading to faster iterations and insights.

g) Real-time Processing: Tools like Apache Kafka and Apache Storm address the need for real-time data processing. Apache Kafka efficiently manages and streams large volumes of data in real time, while Apache Storm enables parallel processing of these data streams across the cluster. This real-time capability is crucial for applications requiring instant insights and timely responses.

h) Data Recovery and Reliability: Hadoop's distributed architecture provides built-in fault tolerance. Data is replicated across multiple nodes, ensuring that if one node fails, data can still be retrieved from other nodes. This reliability is essential for maintaining uninterrupted operations despite hardware failures.

i) Data Integration: Hadoop tools facilitate data integration by enabling processing and analysis of data from various sources and formats in a unified environment. This integration capability allows organisations to gain a holistic view of their data, revealing insights that might not be apparent when examining individual data silos.

j) Advanced Analytics: Hadoop tools support advanced analytics, including machine learning (ML), data mining, and predictive modelling. Libraries such as Spark's MLlib and Apache Mahout enable data professionals to perform sophisticated analyses, uncover hidden patterns, and make data-driven predictions that drive business decisions.

Top 10 Hadoop Tools in 2024

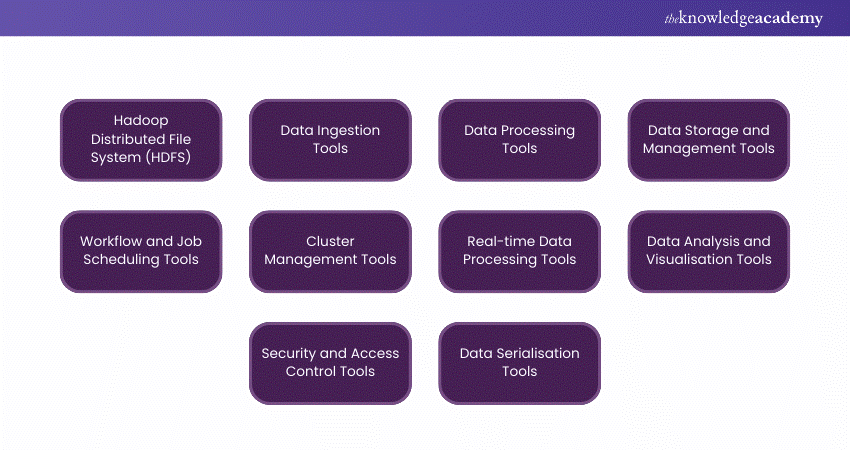

Here’s a detailed list of Hadoop Tools that you should know about:

1) Hadoop Distributed File System (HDFS)

The Hadoop Distributed File System (HDFS) is the cornerstone of the Hadoop ecosystem, offering a resilient and scalable platform for storing massive amounts of data. Built to tackle the challenges posed by Big Data, HDFS uses a distributed architecture to store data across a cluster of machines. It achieves fault tolerance by replicating each data block across multiple nodes (three copies by default), so data remains available even when hardware fails.

One of the distinctive features of HDFS is its ability to handle extremely large files by breaking them into fixed-size blocks, 128MB by default in Hadoop 2 and later and often configured to 256MB. These blocks are distributed across the cluster, enabling parallel processing and efficient data retrieval. HDFS also uses a master-slave architecture: a single active NameNode acts as the master, managing the file-system namespace and tracking which DataNodes hold each block, while multiple DataNodes act as slaves that store the blocks themselves.
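To make the block and replica arithmetic concrete, here is a minimal Python sketch. It assumes the 128MB default block size and a replication factor of 3, and it places replicas round-robin across a hypothetical list of DataNodes. Real HDFS uses a rack-aware placement policy, so treat `split_into_blocks` and `place_replicas` as illustrative helpers, not Hadoop APIs.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed: the 128MB HDFS default block size
REPLICATION = 3                 # assumed: the default replication factor


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size in bytes of each block a file of file_size bytes occupies."""
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block only holds the leftover bytes; it is not padded to full size
        sizes.append(remainder)
    return sizes


def place_replicas(num_blocks: int, datanodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct DataNodes, round-robin.

    Simplified on purpose: real HDFS uses a rack-aware placement policy.
    """
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the requested replication factor")
    placement = {}
    for block_id in range(num_blocks):
        start = block_id % len(datanodes)
        # Consecutive nodes starting at `start`, wrapping around, are always distinct
        placement[block_id] = [datanodes[(start + i) % len(datanodes)]
                               for i in range(replication)]
    return placement


sizes = split_into_blocks(300 * 1024 * 1024)
print([s // (1024 * 1024) for s in sizes])  # [128, 128, 44]
print(place_replicas(len(sizes), ["dn1", "dn2", "dn3", "dn4"]))
```

Here a 300MB file becomes two full 128MB blocks plus a final 44MB block, and with four DataNodes each block lands on three different nodes, so losing any single node still leaves two copies of every block.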

2) Data Ingestion Tools

Data ingestion tools are pivotal components within the Hadoop ecosystem that facilitate the seamless movement of data from external sources into the Hadoop platform. Apache Sqoop and Apache Flume are two essential tools in this category:

a) Apache Sqoop: Focuses on structured data, enabling efficient bulk transfer between relational databases and Hadoop's storage systems. Its command-line interface and connectors for various database systems streamline the import and export of data, allowing organisations to leverage existing data stores for Big Data processing. Note that Sqoop was retired to the Apache Attic in 2021, so it is no longer actively developed.

b) Apache Flume: Designed for ingesting large volumes of streaming data, such as logs, into Hadoop. Each Flume agent is built from sources, channels, and sinks that collect, buffer, and deliver data to destinations such as HDFS. This design keeps data flowing smoothly and reliably from diverse sources to clusters, supporting real-time analytics and processing.
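Sqoop parallelises an import by splitting the source table on a column (chosen with `--split-by`, usually the primary key) into value ranges, one per mapper (set with `--num-mappers`). The Python sketch below shows a simplified version of that range calculation for an integer column. The function names are hypothetical, and Sqoop's own splitters also handle other column types and edge cases.

```python
def integer_splits(min_val: int, max_val: int, num_mappers: int) -> list[tuple[int, int]]:
    """Divide [min_val, max_val] into at most num_mappers contiguous ranges.

    Each (lo, hi) range means lo <= col < hi, except the last, which also includes hi.
    """
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    span = max_val - min_val
    size = max(1, -(-span // num_mappers))  # ceiling division, at least 1
    ranges = []
    lo = min_val
    while lo < max_val:
        hi = min(lo + size, max_val)
        ranges.append((lo, hi))
        lo = hi
    if not ranges:
        ranges.append((min_val, max_val))  # min == max: one single-value range
    return ranges


def split_where_clauses(column: str, ranges: list[tuple[int, int]]) -> list[str]:
    """Turn each range into the WHERE condition one mapper would use."""
    clauses = []
    for i, (lo, hi) in enumerate(ranges):
        upper = "<=" if i == len(ranges) - 1 else "<"  # last range includes the max
        clauses.append(f"{column} >= {lo} AND {column} {upper} {hi}")
    return clauses


# Hypothetical table whose `id` column runs from 1 to 1000 (as a MIN/MAX query would report)
for clause in split_where_clauses("id", integer_splits(1, 1000, 4)):
    print(clause)
```

Each clause becomes the filter for one mapper's query, so four mappers can read ids 1 to 1000 concurrently without overlapping or missing rows.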

3) Data Processing Tools

Data processing tools are essential in the Hadoop ecosystem, enabling efficient manipulation, transformation, and analysis of large datasets. Hadoop MapReduce, a foundational tool, provides a programming model for distributed data processing. It decomposes complex tasks into smaller subtasks and distributes them across nodes, allowing for parallel computation and scalability.
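The classic illustration of this model is word count. The following Python sketch mimics the stages on a single machine: a thread pool stands in for map tasks running on separate nodes, a dictionary plays the role of the shuffle that groups values by key, and a reduce step sums each group. It is a teaching model under those assumptions, not Hadoop's Java `Mapper`/`Reducer` API.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split: str) -> list[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input split."""
    return [(word, 1) for word in split.lower().split()]


def shuffle(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    """Shuffle: group every emitted value by its key, across all map outputs."""
    grouped = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(splits: list[str]) -> dict[str, int]:
    # Threads stand in for map tasks that Hadoop would run on separate nodes
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_phase, splits))
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


print(word_count(["the cat sat", "the dog sat"]))
# {'cat': 1, 'dog': 1, 'sat': 2, 'the': 2}
```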

For those who prefer SQL, Apache Hive offers a SQL-like language, HiveQL, for querying and managing structured data; Hive compiles these queries into MapReduce, Tez, or Spark jobs, so users avoid writing MapReduce code directly. Apache Pig, by contrast, uses a high-level scripting language, Pig Latin, to streamline data transformations, making it well suited to data preparation.

These tools cater to various needs and skill levels, from developers creating custom processing logic in MapReduce to analysts utilising Hive's familiar querying language. By facilitating seamless data processing, these tools empower data professionals to derive insights, uncover patterns, and generate valuable reports from their stored data.

4) Data Storage and Management Tools

Data storage and management tools, such as Apache HBase and Apache Cassandra, are indispensable components within the ecosystem.

Apache HBase, a distributed NoSQL database, excels in providing real-time access to vast volumes of data. Built on Hadoop’s HDFS, it offers linear scalability and automatic sharding. These features make it ideal for scenarios where quick data retrieval is crucial. On the other hand, Apache Cassandra, another prominent NoSQL database, offers seamless scalability and fault tolerance.

Both Apache HBase and Apache Cassandra use a wide-column data model (HBase groups columns into column families), which allows efficient storage and retrieval and suits applications where fast, scalable data management is paramount. From real-time analytics and time-series data to applications demanding rapid access to vast datasets, these tools empower users to store, manage, and retrieve information effectively within the framework.
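HBase's data model can be pictured as a sorted map: row key, then column family, then column qualifier, then a set of timestamped versions. The toy Python class below, `WideColumnTable`, is a hypothetical in-memory illustration of that layout, including version trimming and row-key range scans. It is not the HBase client API and ignores persistence, regions, and distribution.

```python
import time
from collections import defaultdict


class WideColumnTable:
    """Toy HBase-style table: row key -> column family -> qualifier -> versions."""

    def __init__(self, column_families, max_versions=3):
        self.families = set(column_families)  # column families are declared up front
        self.max_versions = max_versions
        # row -> family -> qualifier -> list of (timestamp, value), newest first
        self.rows = defaultdict(lambda: defaultdict(dict))

    def put(self, row, family, qualifier, value, ts=None):
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        ts = time.time_ns() if ts is None else ts
        versions = self.rows[row][family].setdefault(qualifier, [])
        versions.append((ts, value))
        versions.sort(key=lambda tv: tv[0], reverse=True)  # newest version first
        del versions[self.max_versions:]  # drop versions beyond the retention limit

    def get(self, row, family, qualifier):
        """Return the newest value of a cell, or None if it does not exist."""
        versions = self.rows.get(row, {}).get(family, {}).get(qualifier)
        return versions[0][1] if versions else None

    def scan(self, start_row, stop_row):
        """Row keys in [start_row, stop_row), in sorted order as HBase keeps them."""
        return [r for r in sorted(self.rows) if start_row <= r < stop_row]


table = WideColumnTable(["info", "metrics"], max_versions=2)
for ts, name in enumerate(["Ada", "Ada L.", "Ada Lovelace"], start=1):
    table.put("user#001", "info", "name", name, ts=ts)
table.put("user#002", "metrics", "logins", 5, ts=1)
print(table.get("user#001", "info", "name"))  # Ada Lovelace
print(table.scan("user#000", "user#002"))     # ['user#001']
```

Because rows are kept sorted by key, choosing row keys carefully (such as the `user#` prefix here) determines which range scans are cheap.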

5) Workflow and Job Scheduling Tools

Workflow and job scheduling tools are pivotal components within the Hadoop ecosystem, streamlining the orchestration and execution of complex data processing workflows. Apache Oozie and Azkaban stand out as essential tools in this domain.

a) Apache Oozie: Empowers users to define, manage, and automate intricate workflows, expressed as directed acyclic graphs (DAGs) of actions, that involve multiple data processing tasks. It acts as a coordinator, integrating various ecosystem components and ensuring the orderly execution of jobs. With support for dependencies and triggers, Oozie simplifies the handling of complex data pipelines.

b) Azkaban: An open-source workflow scheduler originally created at LinkedIn, Azkaban offers a user-friendly web interface for designing, scheduling, and monitoring workflows. It facilitates the creation of data processing flows by enabling the arrangement of interdependent jobs. Azkaban's visual interface makes it simpler to manage and track the progress of jobs.
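Both schedulers boil down to running a directed acyclic graph of jobs so that each job starts only after the jobs it depends on have finished. A minimal Python sketch using the standard library's `graphlib.TopologicalSorter` follows. The job names and the `run_workflow` helper are hypothetical, and real Oozie or Azkaban workflows are defined in their own configuration formats rather than in Python.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each job maps to the set of jobs it depends on
WORKFLOW = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_orders_customers": {"clean_orders", "ingest_customers"},
    "daily_report": {"join_orders_customers"},
}


def run_workflow(dag, run_job):
    """Run jobs in dependency order and return the order they ran in."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the jobs form a cycle
    order = []
    while sorter.is_active():
        # Every job returned here has all its dependencies finished; a real
        # scheduler could launch this whole batch in parallel
        for job in sorted(sorter.get_ready()):
            run_job(job)
            order.append(job)
            sorter.done(job)
    return order


print(run_workflow(WORKFLOW, lambda job: print("running", job)))
```

Rejecting cycles up front mirrors why these tools require acyclic workflows: a job that transitively depends on itself could never start.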

6) Cluster Management Tools

Cluster management tools, such as Apache Ambari and Apache ZooKeeper, play a pivotal role in the efficient operation of Hadoop ecosystems. Here's a detailed explanation of these tools:

a) Apache Ambari: Offers a user-friendly web interface that simplifies cluster provisioning, configuration, and monitoring. It streamlines the installation of components, ensuring consistency across nodes and easing the management burden.

b) Apache ZooKeeper: Provides distributed coordination services critical for maintaining configuration information, synchronisation, and distributed naming within clusters.

Together, these tools enhance the reliability, scalability, and overall health of clusters. As a result, they allow administrators to focus on optimising performance and ensuring seamless data processing.
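One common ZooKeeper recipe is leader election: each candidate creates an ephemeral, sequential znode under a shared parent, the candidate holding the lowest sequence number becomes leader, and each of the others watches only the znode immediately before its own. The Python class below simulates that recipe in memory. `ElectionNode` and its methods are hypothetical names, not the ZooKeeper client API.

```python
import itertools


class ElectionNode:
    """In-memory stand-in for a ZooKeeper parent znode such as /election."""

    def __init__(self):
        self._counter = itertools.count()
        self.children = {}  # znode name -> owning client session

    def create_ephemeral_sequential(self, client: str) -> str:
        # ZooKeeper appends a zero-padded 10-digit counter to sequential znode names
        name = f"n_{next(self._counter):010d}"
        self.children[name] = client
        return name

    def session_expired(self, client: str) -> None:
        # Ephemeral znodes are deleted automatically when their session ends
        self.children = {n: c for n, c in self.children.items() if c != client}

    def leader(self):
        # Zero padding makes string order match numeric order
        return self.children[min(self.children)] if self.children else None

    def predecessor(self, name: str):
        # Each follower watches just the next-lower znode, so one failure wakes one client
        lower = [n for n in self.children if n < name]
        return max(lower) if lower else None


election = ElectionNode()
a = election.create_ephemeral_sequential("broker-a")
b = election.create_ephemeral_sequential("broker-b")
c = election.create_ephemeral_sequential("broker-c")
print(election.leader())  # broker-a
election.session_expired("broker-a")
print(election.leader())  # broker-b
```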

7) Real-time Data Processing Tools

Real-time data processing tools, such as Apache Storm and Apache Kafka, are crucial in the modern data landscape. Apache Storm enables rapid processing of streaming data with minimal latency, making it essential for time-sensitive applications like real-time analytics and event-driven processing.

Meanwhile, Apache Kafka functions as a high-throughput, fault-tolerant messaging system, efficiently managing the ingestion, storage, and real-time streaming of large data volumes. These tools empower businesses to extract immediate insights from continuously evolving data streams, enhancing decision-making and enabling timely responses to emerging trends and events.
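Kafka organises each topic as a set of partitions, each an append-only log in which records are identified by offsets; records with the same key go to the same partition, which preserves their order. The in-memory Python sketch below illustrates that idea. It is a toy built on stated assumptions, not the Kafka client API: `TopicSketch` is a hypothetical class, and it hashes keys with CRC32, whereas Kafka's default partitioner uses murmur2.

```python
import zlib


class TopicSketch:
    """Toy Kafka-like topic: a fixed number of append-only partition logs."""

    def __init__(self, num_partitions: int):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Assumption: CRC32 stands in for the murmur2 hash Kafka's default partitioner uses
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> tuple[int, int]:
        """Append a record and return its (partition, offset)."""
        partition = self.partition_for(key)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1

    def consume(self, partition: int, offset: int, max_records: int = 10):
        """Read from a given offset; return the records and the next offset to read."""
        records = self.partitions[partition][offset:offset + max_records]
        return records, offset + len(records)


topic = TopicSketch(num_partitions=3)
p1, off1 = topic.produce("user-42", "login")
p2, off2 = topic.produce("user-42", "click")  # same key, so same partition, next offset
records, next_offset = topic.consume(p1, 0)
print(records, next_offset)  # [('user-42', 'login'), ('user-42', 'click')] 2
```

Because consumers track their own offsets, a consumer can resume from `next_offset` after a restart, and several consumers can read the same log independently.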

8) Data Analysis and Visualisation Tools

Data analysis and visualisation tools, such as Apache Spark and Apache Zeppelin, are crucial for extracting insights from vast datasets within the Hadoop ecosystem. Apache Spark's high-speed in-memory processing supports advanced analytics, machine learning, and graph computations, and its streaming APIs extend this to near-real-time insights.

Apache Zeppelin, for its part, offers a web-based notebook for visually exploring and presenting data. Supporting multiple languages and integrating closely with Spark, Zeppelin allows analysts to create dynamic, collaborative data narratives that reveal complex patterns and trends. Together, these tools transform complex data into accessible, informative visuals that drive informed decision-making.
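Much of Spark's speed comes from two ideas: transformations such as `map` and `filter` are lazy and only build up a plan, and datasets that are reused can be cached in memory instead of being recomputed. The hypothetical `LazyDataset` class below is a small Python model of that behaviour, not the PySpark API; its `runs` counter shows how many times the pipeline actually executes.

```python
class LazyDataset:
    """Toy model of Spark-style lazy evaluation and in-memory caching."""

    def __init__(self, source, ops=()):
        self._source = source
        self._ops = tuple(ops)   # the recorded plan of transformations
        self._use_cache = False
        self._cached = None
        self.runs = 0            # how many times the plan has actually executed

    def map(self, fn):
        # Transformation: returns a new dataset with a longer plan; nothing runs yet
        return LazyDataset(self._source, self._ops + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._ops + (("filter", pred),))

    def cache(self):
        # As in Spark, caching is lazy: data is kept once the first action runs
        self._use_cache = True
        return self

    def _compute(self):
        if self._cached is not None:
            return self._cached
        self.runs += 1
        data = iter(self._source)
        for kind, fn in self._ops:
            data = map(fn, data) if kind == "map" else filter(fn, data)
        result = list(data)
        if self._use_cache:
            self._cached = result
        return result

    # Actions: these trigger execution of the plan
    def collect(self):
        return list(self._compute())

    def count(self):
        return len(self._compute())


squares = LazyDataset(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0)
print(squares.runs)                   # 0: nothing has executed yet
print(squares.collect())              # [0, 4, 16, 36, 64]
print(squares.count(), squares.runs)  # 5 2: each action recomputed the plan
```

Without `cache()`, every action re-runs the whole plan; with it, the first action keeps the result in memory and later actions reuse it, which is the pattern that makes Spark attractive for iterative workloads such as machine learning.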

9) Security and Access Control Tools

Security and access control tools are essential in the Hadoop ecosystem, ensuring the protection of sensitive data and system integrity. Apache Ranger serves as a central authority for managing security policies, fine-tuning access controls, and providing comprehensive auditing across various components. Its role-based authorisation system allows administrators to define and enforce data access privileges effectively.

Complementing Ranger, Apache Knox acts as a REST API gateway, offering secure access to cluster services through a single, unified entry point. Together, these tools strengthen the ecosystem against unauthorised access, data breaches, and compliance violations, enhancing trust and reliability in Hadoop-based data environments.
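Conceptually, a policy engine like Ranger's matches each request (user, groups, resource, access type) against policies and lets explicit deny conditions take precedence over allows. The Python sketch below models that evaluation with hypothetical `Policy` objects and glob-style path patterns. It assumes a default deny when no policy matches; real Ranger plugins can instead fall back to the underlying service's own permissions (for example, HDFS permissions), and Ranger's policy model has many more features than shown here.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch


@dataclass
class Policy:
    resource: str                               # glob pattern, e.g. "/data/finance/*"
    accesses: set = field(default_factory=set)  # e.g. {"read", "write"}
    users: set = field(default_factory=set)
    groups: set = field(default_factory=set)
    deny: bool = False


def is_allowed(policies, user, user_groups, path, access):
    def applies(p):
        return (fnmatch(path, p.resource)
                and access in p.accesses
                and (user in p.users or bool(p.groups & set(user_groups))))

    matching = [p for p in policies if applies(p)]
    if any(p.deny for p in matching):
        return False  # an explicit deny always wins over any allow
    # Assumption: no matching allow policy means the request is denied
    return any(not p.deny for p in matching)


policies = [
    Policy("/data/finance/*", accesses={"read"}, groups={"analysts"}),
    Policy("/data/finance/payroll/*", accesses={"read"}, users={"bob"}, deny=True),
]
print(is_allowed(policies, "alice", ["analysts"], "/data/finance/q1.csv", "read"))         # True
print(is_allowed(policies, "bob", ["analysts"], "/data/finance/payroll/jan.csv", "read"))  # False
```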

10) Data Serialisation Tools

Efficient data serialisation is crucial for storing and transmitting data across Hadoop clusters. Apache Avro simplifies this process, ensuring compatibility and schema evolution. Avro allows data structures to change over time without breaking compatibility, making it ideal for environments with frequently changing schemas.
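Avro's schema evolution works by resolving the writer's schema (used when the data was written) against the reader's schema (used when it is read): fields present in both are copied, fields only the reader knows about take the reader's default value, and fields only the writer had are ignored. The simplified Python sketch below applies those rules to records held as dictionaries. `resolve_record` is a hypothetical helper, and it skips the binary decoding, type promotion, and aliases that real Avro libraries handle.

```python
WRITER_V1 = {  # schema the data was originally written with
    "type": "record", "name": "User",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "email", "type": "string"},
        {"name": "legacy_flag", "type": "boolean"},
    ],
}
READER_V2 = {  # newer schema: drops legacy_flag, adds country with a default
    "type": "record", "name": "User",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "email", "type": "string"},
        {"name": "country", "type": "string", "default": "unknown"},
    ],
}


def resolve_record(record: dict, writer_schema: dict, reader_schema: dict) -> dict:
    """Apply Avro's record-resolution rules (simplified: no promotion, aliases or nesting)."""
    writer_fields = {f["name"] for f in writer_schema["fields"]}
    result = {}
    for fld in reader_schema["fields"]:
        name = fld["name"]
        if name in writer_fields:
            result[name] = record[name]      # known to both schemas: copy the value
        elif "default" in fld:
            result[name] = fld["default"]    # new in the reader: use its default
        else:
            raise ValueError(f"field {name!r} is missing and has no default")
    return result                            # writer-only fields are ignored


old_record = {"id": 1, "email": "ada@example.com", "legacy_flag": True}
print(resolve_record(old_record, WRITER_V1, READER_V2))
# {'id': 1, 'email': 'ada@example.com', 'country': 'unknown'}
```

Adding a field without a default is what breaks compatibility: old data would have no value for it, which is why the sketch raises an error in that case.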

With dynamic typing, compact binary data encoding, and schema evolution support, Avro streamlines data serialisation and deserialisation for storage, processing, and communication within the Hadoop ecosystem. Its compatibility with multiple programming languages and integration with Hadoop components make it a versatile choice for effective data serialisation management.
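Part of Avro's compactness comes from how it writes `int` and `long` values: zigzag encoding maps small negative and positive numbers to small unsigned numbers, which are then written as variable-length bytes carrying 7 bits each. The Python functions below are a sketch of that encoding for 64-bit longs, written for illustration rather than taken from an Avro library.

```python
def encode_long(n: int) -> bytes:
    """Zigzag + variable-length encoding of a signed 64-bit integer."""
    if not -(1 << 63) <= n < (1 << 63):
        raise OverflowError("value does not fit in a 64-bit long")
    z = (n << 1) ^ (n >> 63)  # zigzag: 0, -1, 1, -2, 2 ... become 0, 1, 2, 3, 4 ...
    out = bytearray()
    while True:
        byte = z & 0x7F
        z >>= 7
        if z:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)         # high bit clear: this is the last byte
            return bytes(out)


def decode_long(data: bytes) -> int:
    """Reverse encode_long: gather 7-bit groups, then undo the zigzag mapping."""
    z = shift = 0
    for byte in data:
        z |= (byte & 0x7F) << shift
        shift += 7
        if not byte & 0x80:
            break
    return (z >> 1) ^ -(z & 1)


for value in (0, -1, 1, -64, 64):
    print(value, encode_long(value).hex())
```

With this scheme, any value from -64 to 63 fits in a single byte, whereas a fixed-width long always takes eight.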

Conclusion

To sum it up, familiarity with these top 10 Hadoop Tools is paramount in the domain of Big Data. From storage and processing to security and analysis, mastering these tools empowers professionals to navigate the complexities of data management and unleash its true potential.

Frequently Asked Questions

The four modules of Hadoop are:

a) Hadoop Distributed File System (HDFS) for data storage

b) Yet Another Resource Negotiator (YARN) for resource management

c) MapReduce for data processing

d) Hadoop Common for essential libraries and utilities

Apache Spark is often considered the most useful Hadoop tool because of its high-speed in-memory processing. This supports near-real-time analytics, advanced machine learning, and efficient handling of large-scale data processing tasks.
